Welcome to the mesmerizing universe of artificial intelligence (AI). A cosmos where sci-fi meets reality, where dreams morph into tangible experiences, and, importantly, where data becomes art.

Standing at the forefront of this revolution are the ingenious, game-changing frameworks known as Generative Adversarial Networks (GANs).

These innovative AI models, since their introduction in 2014 by Ian Goodfellow and his team, have been causing quite a stir in both academia and industry.

They’re not just making waves; they’re essentially rewriting the rules of what’s possible in the field of data generation and analysis.

GANs are responsible for generating some astonishingly realistic images of human faces, creating mesmerizing pieces of art, transforming doodles into photorealistic images, and even synthesizing high-quality speech from text.

Their applications are sweeping across sectors, from healthcare to entertainment, to climate modeling, showing no signs of slowing down.

Ready to dive deep into this fascinating world? We promise it’s going to be an intriguing, insightful journey, as we demystify the world of Generative Adversarial Networks together.

Contents

- 1 What is a Generative Adversarial Network (GAN)?

- 2 Why Use Generative Adversarial Networks?

- 3 Advantages and Disadvantages of GANs

- 4 An Overview of the Generative Adversarial Network Architecture

- 5 Types of Generative Adversarial Networks

- 6 How to Train Generative Adversarial Networks

- 7 Generative Adversarial Networks (GANs) Loss Function

- 8 Exploring the Applications of Generative Adversarial Networks

- 9 Challenges Faced by Generative Adversarial Networks (GANs)

- 10 Future of Generative Adversarial Networks

- 11 Conclusion

What is a Generative Adversarial Network (GAN)?

Generative Adversarial Networks (GANs) present a unique take on machine learning frameworks. The name might sound daunting, but their core concept is elegantly simple.

Picture this: a battle of wits between a master forger and an astute detective. The forger’s task is to create impeccable counterfeit currency, while the detective’s mission is to identify these fakes.

As the forger’s techniques get more sophisticated, the detective hones their detection skills. This dynamic of continuous learning and adaptation is the crux of GANs.

In the world of artificial intelligence, this engaging duel takes place between two deep neural networks: the Generator, our aspiring counterfeiter, and the Discriminator, the unwavering detective. They are pitted against each other (hence “adversarial”) in a zero-sum game.

- The Generator: This network takes in random noise and outputs synthetic data. Its ultimate goal? To produce data that is so convincingly real that it can fool the discriminator into believing it’s the genuine article.

- The Discriminator: Its task is to identify whether the data it receives is real (from the actual dataset) or fake (created by the generator). It makes its judgment based on the differences it learns to spot between the real and generated data.

This competitive process leads to the generator improving its output continually, striving to make it indistinguishable from real data.

Simultaneously, the discriminator progressively enhances its ability to distinguish between real and fake. The result? A GAN that’s capable of generating incredibly realistic data.

The beauty of GANs lies in their ability to learn and replicate the hidden patterns in data, without any explicit instructions.

This feature opens up a world of possibilities across industries, from generating novel pharmaceutical molecules to creating realistic video game environments.

But to appreciate the full power and potential of GANs, we need to dive deeper into their architecture, and that’s exactly what we’re about to do.

Why Use Generative Adversarial Networks?

Generative Adversarial Networks, or GANs, have revolutionized deep learning techniques, particularly within the field of computer vision.

A key technique in deep learning is data augmentation, which expands a dataset with modified copies of existing examples to improve model performance and provide a regularization effect.

This, in turn, reduces generalization error. Data augmentation accomplishes this by creating new, plausible examples within the domain the model is trained on.

Image-based data augmentation methods are somewhat rudimentary, involving flips, crops, zooms, and other simple transformations of existing images within a training dataset.

Generative modeling, such as that offered by GANs, provides a more advanced and potentially domain-specific alternative to data augmentation. In fact, data augmentation can be seen as a simplified form of generative modeling.
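To make the "simple transformations" concrete, here is a minimal, library-free sketch of a flip and a crop. The nested-list image and the helper names `horizontal_flip` and `crop` are made up for illustration; a real pipeline would normally rely on an image library.

```python
# Toy image augmentation on a nested-list "image" (rows of pixel values).

def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def crop(image, top, left, height, width):
    """Cut out a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]

image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

flipped = horizontal_flip(image)   # [[3, 2, 1], [6, 5, 4], [9, 8, 7]]
patch = crop(image, 0, 1, 2, 2)    # [[2, 3], [5, 6]]

# The augmented dataset holds the original plus its transformed copies.
augmented_dataset = [image, flipped, patch]
```

Every new example here is a rearrangement of existing pixels, which is exactly the limitation a generative model tries to move past.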

“[…] enlarging the sample with latent (unobserved) data. This is called data augmentation. […] In other problems, the latent data are actual data that should have been observed but are missing,”

— Page 276, The Elements of Statistical Learning, 2016.

In complex domains or those with a limited amount of data, generative modeling offers an avenue towards enhanced model training.

GANs have achieved remarkable success in this area, particularly in fields such as deep reinforcement learning.

Research-wise, GANs are interesting, significant, and warrant further study for several reasons.

Ian Goodfellow’s 2016 conference tutorial and the associated technical report, titled “NIPS 2016 Tutorial: Generative Adversarial Networks,” outline several of these reasons.

He particularly highlights GANs’ ability to model high-dimensional data, handle missing data, and provide multi-modal outputs, that is, multiple plausible answers.

However, where GANs truly shine is in their application for tasks requiring the generation of new examples, specifically through conditional GANs.

Goodfellow highlights three main examples: Image Super-Resolution (generating high-resolution versions of input images), Creating Art (generating new and artistic images, sketches, paintings, and more), and Image-to-Image Translation (translating photographs across domains, such as day to night or summer to winter).

Perhaps the most persuasive reason for the wide study, development, and use of GANs is their remarkable success. GANs can generate images so realistic that even humans find it hard to tell they are of objects, scenes, and people that do not exist in real life. The term “astonishing” barely covers their capabilities and success.

Advantages and Disadvantages of GANs

Let’s talk about Generative Adversarial Networks (GANs) and what makes them so great, along with a few hiccups you might come across.

Advantages

So, what’s cool about GANs?

Creating New Stuff

Think of GANs as these cool artists who can create brand-new data that looks like the stuff we already know.

They’re great for creating more data, spotting weird patterns, or even for those times when you want to let your creativity fly.

Amazing Quality

Another awesome thing about GANs is that they’re like perfectionists – they create really high-quality, lifelike images, videos, and even music. And who doesn’t love top-notch stuff?

They Learn on Their Own

Now, this is where they get really cool. They can learn without needing any labels on the data. So, if you’ve got a ton of data but no labels, GANs are your go-to guys.

Jack of All Trades

Whether it’s creating images, translating images, spotting weird patterns, or simply creating more data, GANs can handle it all. Talk about versatility!

Disadvantages

But just like anything else, they aren’t perfect. So, let’s look at some of the issues you might face with GANs.

Training Blues

Sometimes, GANs can be a bit stubborn when it comes to training. They can become unstable, get stuck in a loop, or just refuse to learn.

Heavy-duty

They’re a bit like those resource-heavy video games. They need a lot of computational power and can be slow to train, especially if you’re dealing with high-res images or tons of data.

Too Much of a Good Thing

At times, GANs can get too caught up in the training data and end up creating new data that’s way too similar, not leaving much room for diversity.

They’re Not Always Fair

If the data you’re training them on has any biases, they’ll end up learning those too. So you might end up with data that’s not exactly fair or unbiased.

Mystery boxes

Lastly, GANs can be like a magic trick – amazing to witness, but hard to understand or explain. This makes it a bit difficult to ensure they’re being fair, transparent, or accountable in their applications.

So there you have it – the good, the bad, and the exciting about GANs!

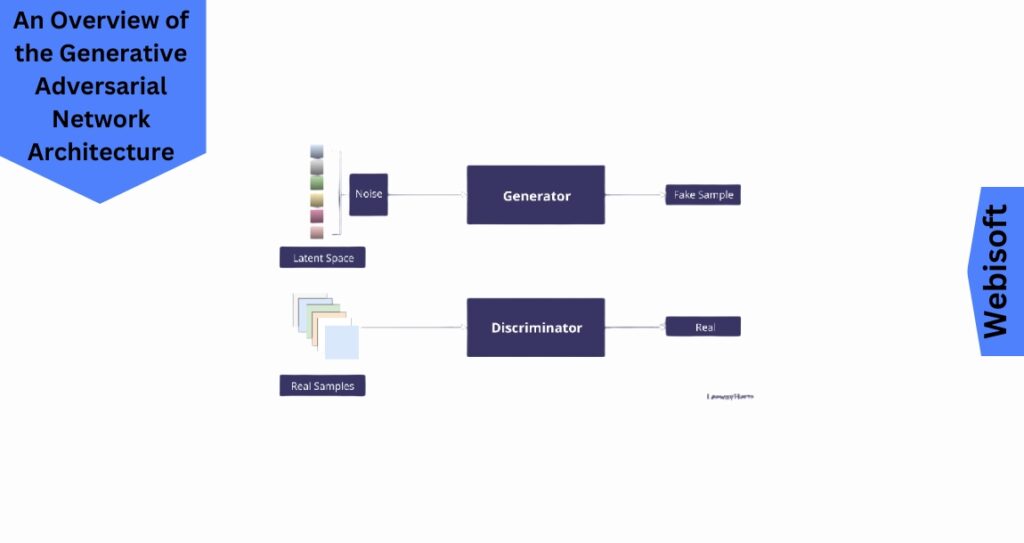

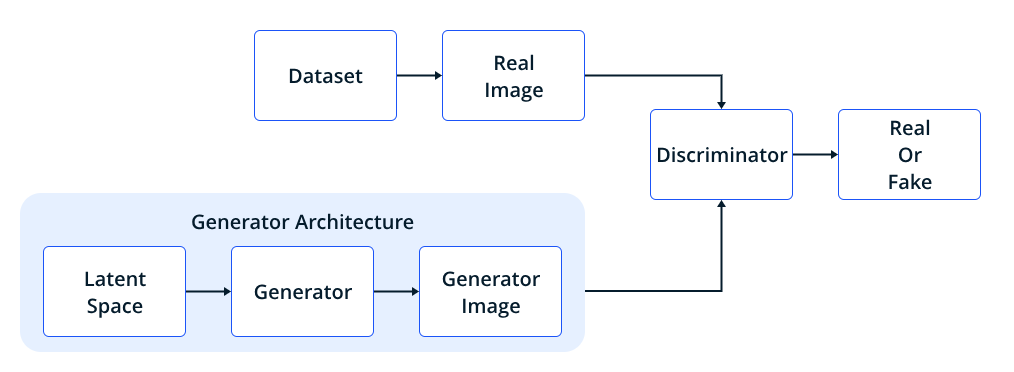

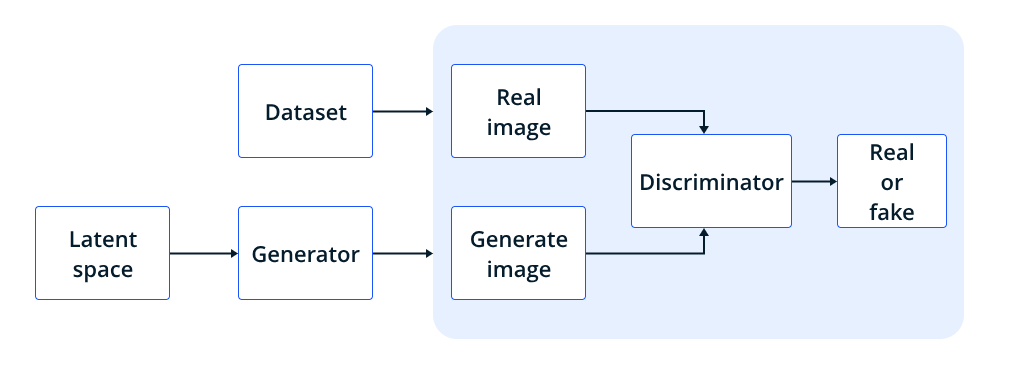

An Overview of the Generative Adversarial Network Architecture

To fully grasp the power and potential of GANs, we need to delve deeper into the nuts and bolts of their structure.

The architecture of GANs is a study in balance, a fascinating interplay between two deep neural networks – the Generator and the Discriminator.

Let’s explore the unique role of each component in this dynamic duo.

Generator Architecture

Meet the Generator, the master forger of our GAN setup. The Generator works tirelessly, creating new data instances from a place of randomness.

The more it works, the better it becomes at crafting data that is practically indistinguishable from the real deal.

It all starts with a noise vector – a fancy term for a bunch of random numbers. Think of this as a blob of clay ready to be molded into a sophisticated structure. This is the input to the Generator.

This noise vector then journeys through a series of layers, each made up of artificial neurons. Picture these layers as different stations in a factory assembly line. At each station, the noise vector undergoes a transformation, slowly taking the shape of a real data instance.

The transformation at each layer is controlled by a set of parameters, or weights. These weights are initially set to random values but are adjusted through the learning process to generate data that closely resembles the real thing.

The final station, or output layer, presents us with a new data instance crafted by the Generator from random noise.

It might not be a perfect replica of the real data at first. But with time, as the Generator learns and adjusts its weights, it gets better at its job, producing increasingly realistic data.
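The assembly line described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the layer sizes are invented, the weights stay at their random initial values (no learning happens here), and the tanh output layer is a common choice rather than something this article prescribes.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Random initial weights and zero biases for one fully connected layer."""
    weights = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense(inputs, layer, activation):
    """Apply one layer: weighted sum plus bias, then the activation."""
    weights, biases = layer
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(v):
    return max(0.0, v)

def generator(noise, layers):
    """Pass the noise vector through hidden ReLU layers and a tanh output layer."""
    hidden = noise
    for layer in layers[:-1]:
        hidden = dense(hidden, layer, relu)
    return dense(hidden, layers[-1], math.tanh)

rng = random.Random(0)
LATENT_DIM, HIDDEN_DIM, DATA_DIM = 4, 8, 6   # hypothetical sizes
gen_layers = [make_layer(LATENT_DIM, HIDDEN_DIM, rng),
              make_layer(HIDDEN_DIM, DATA_DIM, rng)]

noise = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
sample = generator(noise, gen_layers)   # DATA_DIM values, each within [-1, 1]
```

With untrained weights the output is meaningless noise; training is what gradually turns it into something that resembles real data.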

Discriminator Architecture

On the flip side of our GAN setup, we have the Discriminator – the keen-eyed detective on the lookout for forgeries.

The Discriminator’s mission is to differentiate between real and fake data. The more it trains, the better it becomes at identifying the nuances that separate the Generator’s creations from the real data.

The Discriminator takes in a data instance as input. This could be a real data instance or a fake one produced by the Generator.

This data instance is then passed through a series of layers, similar to the Generator. But instead of creating data, these layers dissect the input, studying it for signs of authenticity or forgery.

Each layer in the Discriminator extracts features from the input data, gradually breaking it down into more abstract representations. These representations help the Discriminator decide whether the data is real or fake.

The final layer of the Discriminator outputs a single number – a probability that tells us how confident the Discriminator is that the input data is real. A high value close to 1 signals a verdict of ‘real’, while a low value close to 0 suggests ‘fake’.
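Here is a toy version of that pipeline: one feature-extracting layer followed by a sigmoid that squashes the result into a probability. The weights are hand-picked, hypothetical numbers (a trained Discriminator would learn them), and bias terms are left out to keep the sketch short.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def relu(v):
    return max(0.0, v)

def discriminator(sample, hidden_weights, output_weights):
    """Extract hidden features, then turn a weighted sum of them into a probability."""
    features = [relu(sum(w * x for w, x in zip(row, sample)))
                for row in hidden_weights]
    logit = sum(w * f for w, f in zip(output_weights, features))
    return sigmoid(logit)

# Hypothetical weights for a 3-value input and 2 hidden features.
hidden_weights = [[0.5, -0.2, 0.1],
                  [-0.3, 0.8, 0.4]]
output_weights = [1.0, -1.0]

p_real = discriminator([0.9, 0.1, 0.3], hidden_weights, output_weights)
verdict = "real" if p_real >= 0.5 else "fake"
```

The 0.5 threshold is just a convenient reading of the output; during training it is the raw probability, not the verdict, that feeds the loss.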

It’s a cat-and-mouse game between the Generator and the Discriminator. While the Generator constantly improves its forgery skills, the Discriminator simultaneously hones its detective abilities.

This dueling dynamic is the driving force behind the learning process in GANs, enabling them to generate impressively realistic synthetic data.

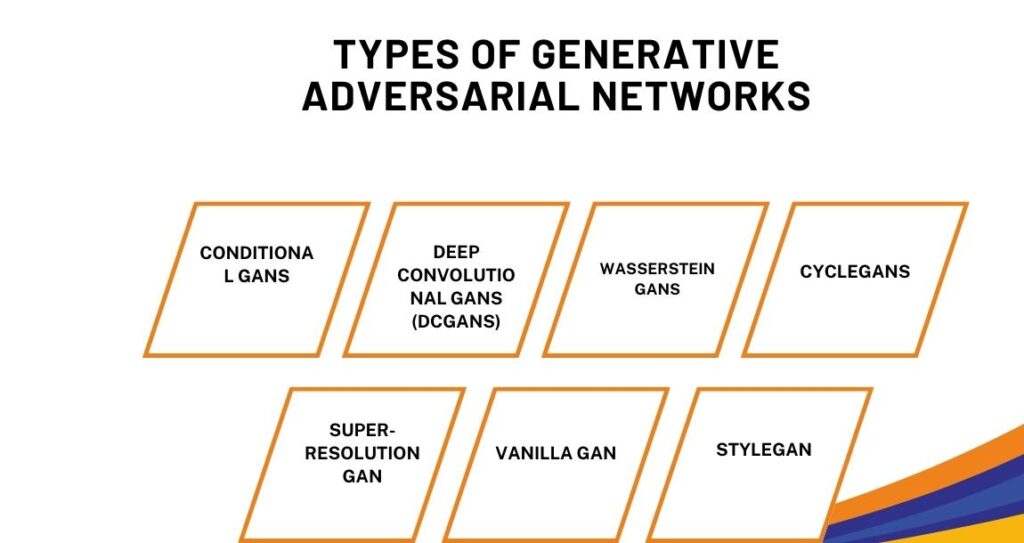

Types of Generative Adversarial Networks

Like a tree branching out, GANs have diversified into several types, each addressing different use cases and offering distinct features. Let’s unpack some of the most prominent ones.

1. Conditional GANs

Conditional GANs, or cGANs, introduced the concept of conditional generation in the realm of GANs.

The idea is to condition the generation process on some additional information, such as a label or another data instance.

This extra piece of information serves as a guiding light for the Generator, helping it produce data of a specific type.

For example, in a standard GAN setup, the Generator might produce images of random objects. But in a cGAN, if you provide a ‘cat’ label as a condition, the Generator will generate images of cats.

Key Features:

- Controlled Data Generation: With the conditioning, cGANs provide control over the type of data that the Generator creates.

- Useful for Label-Specific Tasks: cGANs are beneficial for tasks like multi-modal image generation where we want to generate multiple plausible images corresponding to a given label.
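One common way to feed the condition to the Generator is to append a one-hot label to the noise vector. The sketch below assumes that approach; the label set and the helper names `one_hot` and `conditioned_input` are invented for illustration.

```python
import random

CLASSES = ["cat", "dog", "horse"]   # hypothetical label set

def one_hot(label, classes):
    """Encode a label as a vector with a single 1 at the label's index."""
    return [1.0 if name == label else 0.0 for name in classes]

def conditioned_input(noise, label, classes=CLASSES):
    """Concatenate the noise vector with the one-hot label."""
    return noise + one_hot(label, classes)

rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]
generator_input = conditioned_input(noise, "cat")
# generator_input holds 4 noise values followed by [1.0, 0.0, 0.0]
```

The Discriminator usually receives the same label alongside the sample, so it can judge not only "is this real?" but also "is this a real cat?".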

2. Deep Convolutional GANs (DCGANs)

DCGANs are a leap forward in GAN architecture: they use convolutional layers in both the Generator and the Discriminator. This change allows DCGANs to perform exceptionally well when dealing with images.

In a DCGAN, the Generator uses convolutional transpose layers to upscale a random noise vector into an image. The Discriminator uses convolutional layers to take this generated image and downscale it to a single output – its decision.

Key Features:

- Convolutional Structure: The convolutional layers allow DCGANs to capture the spatial structure in images efficiently.

- Stable Training Dynamics: DCGANs introduced several architectural changes, leading to more stable training dynamics.
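The upscaling and downscaling can be followed with simple size arithmetic. The kernel size of 4, stride of 2, and padding of 1 used below are a typical configuration assumed for this example, not values taken from this article.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output length of a transposed convolution (no dilation or output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

def conv_out(size, kernel, stride, padding):
    """Output length of an ordinary strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1

# Generator: upscale a 4x4 feature map with kernel 4, stride 2, padding 1.
gen_sizes = [4]
for _ in range(4):
    gen_sizes.append(conv_transpose_out(gen_sizes[-1], kernel=4, stride=2, padding=1))

# Discriminator: mirror the path back down with ordinary convolutions.
disc_sizes = [gen_sizes[-1]]
for _ in range(4):
    disc_sizes.append(conv_out(disc_sizes[-1], kernel=4, stride=2, padding=1))

# gen_sizes  -> [4, 8, 16, 32, 64]
# disc_sizes -> [64, 32, 16, 8, 4]
```

Each transposed convolution doubles the spatial size and each ordinary convolution halves it, which is why the two networks often look like mirror images of each other.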

3. Wasserstein GANs

The Wasserstein GANs, or WGANs, are a groundbreaking variation of GANs that significantly improve the stability of the model’s training.

They introduce a new form of loss function, known as the Wasserstein loss, which changes how the Discriminator evaluates the Generator’s output.

Instead of the traditional binary decision (real vs. fake), the Discriminator in a WGAN, usually called the critic, outputs an unbounded score representing the ‘realness’ or ‘fakeness’ of an image. This change provides a more nuanced view of the generated data and contributes to a smoother training process.

Key Features:

- Improved Training Stability: The main advantage of WGANs is their improved training stability, which can lead to better results.

- Meaningful Loss Metric: The Wasserstein loss provides a useful metric for tracking the Generator’s performance during training.
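In code, the Wasserstein loss is little more than a difference of averages. The sketch below uses invented scores; in a WGAN the scoring network is usually called the critic. The weight clipping shown at the end is the constraint used in the original WGAN paper, and later variants replace it with other techniques.

```python
def mean(values):
    return sum(values) / len(values)

def critic_loss(real_scores, fake_scores):
    """The critic wants real scores high and fake scores low."""
    return mean(fake_scores) - mean(real_scores)

def generator_loss(fake_scores):
    """The generator wants the critic to score its samples highly."""
    return -mean(fake_scores)

def clip_weights(weights, limit=0.01):
    """Keep every critic weight inside [-limit, limit] after an update."""
    return [max(-limit, min(limit, w)) for w in weights]

real_scores = [1.2, 0.8, 1.0]     # hypothetical critic outputs on real data
fake_scores = [-0.5, 0.1, -0.2]   # hypothetical critic outputs on generated data

c_loss = critic_loss(real_scores, fake_scores)   # about -1.2
g_loss = generator_loss(fake_scores)             # about 0.2
clipped = clip_weights([0.5, -0.003, -0.2])      # [0.01, -0.003, -0.01]
```

Because the scores are not squeezed into the 0 to 1 range, the gap between the two averages keeps carrying information even when the critic is far ahead of the generator.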

4. CycleGANs

CycleGANs are a unique type of GAN designed for image-to-image translation tasks without paired examples.

This capability makes CycleGANs perfect for tasks like changing the style of an image or converting an image from one domain to another (for example, converting a horse’s image into a zebra’s image).

Key Features:

- Unpaired Image-to-Image Translation: CycleGANs can learn to translate between two image domains without paired examples, which is a significant advantage in many tasks.

- Cycle Consistency Loss: This unique loss function ensures that an original image can be reconstructed from its translated version, enforcing the right structure in the translated images.
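The cycle consistency idea fits in a short sketch: translate, translate back, and measure how far you ended up from where you started. The two "generators" below are deliberately trivial stand-in functions invented for this example; real ones are neural networks.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_consistency_loss(x, y, g_xy, g_yx):
    """Translate each sample to the other domain and back, then compare with the original."""
    forward_cycle = l1_distance(x, g_yx(g_xy(x)))    # X -> Y -> X
    backward_cycle = l1_distance(y, g_xy(g_yx(y)))   # Y -> X -> Y
    return forward_cycle + backward_cycle

# Hypothetical stand-in generators operating on tiny "images".
def horse_to_zebra(pixels):
    return [p + 0.5 for p in pixels]

def zebra_to_horse(pixels):
    return [p - 0.4 for p in pixels]   # imperfect inverse, off by 0.1

horse = [0.2, 0.4, 0.6]
zebra = [0.7, 0.9, 1.1]
loss = cycle_consistency_loss(horse, zebra, horse_to_zebra, zebra_to_horse)
# Each round trip lands 0.1 away from its start, so the loss is about 0.2.
```

If the second generator were a perfect inverse of the first, the loss would drop to zero, which is the behaviour training pushes toward.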

5. Super-Resolution GAN (SRGAN)

Super-Resolution GAN, or SRGAN, is a specialized type of GAN designed to enhance the resolution of images. It’s a solution to a common problem in the field of computer vision known as Super-Resolution, which involves increasing the size of an image while keeping the high-frequency details intact.

In simpler terms, imagine you have a low-resolution image, and you want to make it larger, or ‘zoom in.’ But when you do that, the image becomes blurry.

SRGAN is designed to solve this problem by generating high-resolution images that retain the detailed textures of the original image.

Key Features:

- High-Resolution Image Generation: SRGANs are used to increase the resolution of images while preserving intricate details, improving the visual quality significantly.

- Perceptual Loss Function: SRGAN uses a perceptual loss function that encourages high-frequency details in the generated image. This loss function is based on high-level features from a pre-trained network, making it possible for SRGAN to generate realistic textures rather than merely interpolating pixels.

- Application in Image and Video Enhancement: SRGANs are particularly useful in applications involving image and video enhancement where high-resolution visuals are critical for a better user experience.

6. Vanilla GAN

The term “Vanilla GAN” is often used to refer to the original GAN model proposed by Ian Goodfellow and his colleagues in 2014. The Vanilla GAN is the cornerstone upon which all other GAN models have been built.

In a Vanilla GAN, the Generator and Discriminator are typically simple multilayer perceptrons. The Generator takes a noise vector as input and outputs an image, while the Discriminator takes in either a real or generated image and outputs a probability of the image being real.

Key Features:

- The Foundation of GANs: The Vanilla GAN is the original GAN model, serving as the foundation for the many GAN variants we see today.

- Simplicity: The Vanilla GAN’s simplicity is one of its key features. It has a straightforward structure with easy-to-understand training dynamics.

- Versatility: Despite its simplicity, Vanilla GANs can be used to generate a variety of data types, from images to music.

Whether you’re enhancing the resolution of images with SRGAN or working with the original architecture in a Vanilla GAN, these models continue to push the boundaries of what’s possible in data generation.

7. StyleGAN

StyleGAN, developed by researchers at NVIDIA, stands out for its innovative layer-wise style control. This control means you can alter the style of an image at different scales, independently.

For example, you can change aspects like the hairstyle or glasses in an image without affecting other details like facial features.

Key Features:

- Style Control: StyleGAN allows you to control the style of the generated image at each layer.

- High-Resolution Images: StyleGAN is capable of generating high-resolution and highly-detailed images. This makes it especially useful for tasks where detail is important, such as generating realistic human faces.

- Adaptive Instance Normalization (AdaIN): StyleGAN utilizes AdaIN, a feature normalization technique that allows the network to inject different styles at different layers of the generator. This is what enables the model to manipulate different aspects of the image style at different scales.

- Mapping Network: StyleGAN introduces a mapping network, which takes a latent code and maps it to an intermediate latent space. This adds more disentanglement in the representations, enabling more control over the generated images.

- Noise Inputs: StyleGAN also introduces noise inputs at each layer of the generator which add fine details to the generated image, such as skin pores or hair.

- Stochastic Variation: A major contribution of StyleGAN is the addition of stochastic variation which helps in generating more realistic images by adding randomness at both global and local scales.

- Progressive Growing: StyleGAN employs a training method called progressive growing, which starts the training process with low-resolution images, and gradually adds layers to increase the resolution. This results in improved stability and image quality.
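Of the features listed above, AdaIN is the easiest to show in code. This minimal sketch works on a single flattened channel; the scale and bias would normally be produced from the style code by a learned layer, so the values here are made up.

```python
import math

def adain(feature_map, style_scale, style_bias, eps=1e-8):
    """Normalize one channel to zero mean and unit variance, then apply the style's scale and bias."""
    n = len(feature_map)
    mean = sum(feature_map) / n
    variance = sum((v - mean) ** 2 for v in feature_map) / n
    std = math.sqrt(variance + eps)
    return [style_scale * (v - mean) / std + style_bias for v in feature_map]

channel = [1.0, 2.0, 3.0, 4.0]   # hypothetical flattened feature-map channel
styled = adain(channel, style_scale=2.0, style_bias=10.0)
# The styled channel now has mean 10 and standard deviation close to 2.
```

The normalization wipes out the channel's own statistics, and the style then writes in new ones, which is how a different style can be injected at each layer.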

Each of these GAN variants has a unique twist, making them suitable for different tasks and applications. Whether you want to generate images, perform style transfer, or create data under specific conditions, there’s likely a GAN for that!

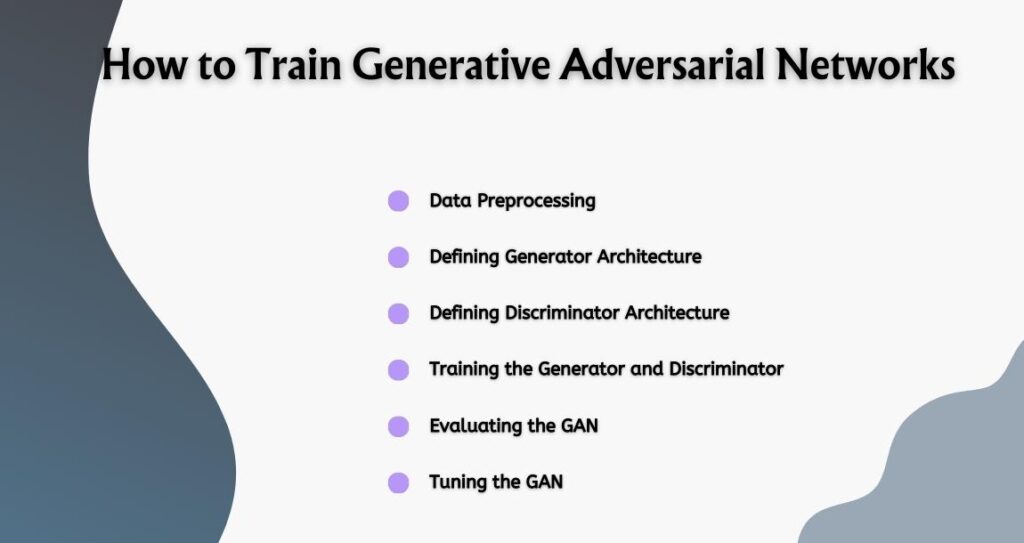

How to Train Generative Adversarial Networks

Ready to train your GAN? Great! Here’s a step-by-step guide to get you started.

Data Preprocessing

The first thing to do is to whip your data into shape. This step will differ depending on your dataset but generally involves:

- Cleaning the data: Make sure there are no erroneous or irrelevant parts of the data that could negatively impact the model’s performance.

- Normalization: Scaling your data to a certain range, often between -1 and 1 or 0 and 1. This helps the model to learn more effectively.

- Data Formatting: Transform your data into a format that the GAN can handle. For instance, you might need to reshape image data or convert categorical data into one-hot vectors.
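The three preprocessing steps above can be sketched as follows. The 8-bit pixel range (0 to 255), the tiny 3-pixel "images", and the helper names are assumptions made for the example.

```python
def drop_missing(samples):
    """Cleaning: discard samples that are missing (None)."""
    return [s for s in samples if s is not None]

def scale_to_signed_unit(pixels, max_value=255.0):
    """Normalization: map raw values in [0, max_value] to [-1, 1]."""
    return [2.0 * p / max_value - 1.0 for p in pixels]

def one_hot(index, num_classes):
    """Formatting: encode a category index as a one-hot vector."""
    return [1.0 if i == index else 0.0 for i in range(num_classes)]

raw_images = [[0, 51, 255], None, [255, 0, 102]]   # hypothetical 3-pixel "images"
images = [scale_to_signed_unit(img) for img in drop_missing(raw_images)]
label = one_hot(2, num_classes=4)                  # [0.0, 0.0, 1.0, 0.0]
```

Scaling to the range -1 to 1 pairs naturally with a Generator whose output layer uses tanh, since real and generated data then live in the same range.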

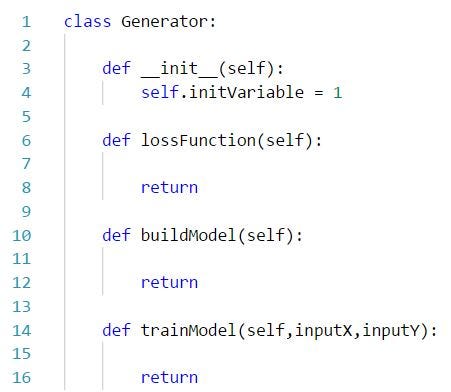

Defining Generator Architecture

Now let’s move on to designing your Generator. Remember, this is the part of the GAN that creates the fake data, so it’s an essential component. Here’s what you need to focus on:

- Input Size: The generator’s input is a random noise vector. The size of this vector, known as the latent space’s dimensionality, is a key parameter to decide.

- Layers and Neurons: Decide on the number of layers and the number of neurons per layer. More layers can allow the Generator to learn more complex representations, but can also make training more difficult.

- Activation Functions: The choice of activation functions can significantly impact the learning dynamics. Often, a ReLU (Rectified Linear Unit) activation function is used in the Generator.

Defining Discriminator Architecture

The Discriminator is the GAN’s data critic, and its architecture is equally important. Here’s what you need to decide:

- Input Size: This should match the size of your real data instances, as the Discriminator needs to evaluate both real and fake data.

- Layers and Neurons: Again, choose the number of layers and neurons per layer. For the Discriminator, it’s often advisable not to make the architecture too complex, as it could overpower the Generator.

- Activation Functions: In the Discriminator, the last layer often uses a sigmoid activation function to output a probability.

Training the Generator and Discriminator

It’s time for the main event: training the GAN. This involves:

- Adversarial Training: The Generator and Discriminator are trained in tandem. While the Generator is trying to fool the Discriminator, the Discriminator is learning not to be fooled.

- Loss Function: The choice of loss function is crucial. It measures how well the Generator is doing at fooling the Discriminator and how well the Discriminator is doing at not being fooled.

- Balancing Act: Training GANs is often described as a balancing act. If the Generator or Discriminator becomes too powerful, it can destabilize the training process.
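To see the alternating updates in action, here is a deliberately tiny GAN on one-dimensional data, with gradients written out by hand. Everything in it is an assumption for illustration: the generator is the line G(z) = a*z + b, the discriminator is D(x) = sigmoid(w*x + c), the real data is drawn from a Gaussian centred at 4, and the generator uses the non-saturating loss -log D(G(z)).

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def discriminator_step(w, c, real_batch, fake_batch, lr):
    """One gradient step on -[log D(x) + log(1 - D(G(z)))]."""
    grad_w = grad_c = 0.0
    for x in real_batch:
        error = sigmoid(w * x + c) - 1.0      # pushes D(x) toward 1
        grad_w += error * x / len(real_batch)
        grad_c += error / len(real_batch)
    for g in fake_batch:
        error = sigmoid(w * g + c)            # pushes D(G(z)) toward 0
        grad_w += error * g / len(fake_batch)
        grad_c += error / len(fake_batch)
    return w - lr * grad_w, c - lr * grad_c

def generator_step(a, b, w, c, noise_batch, lr):
    """One gradient step on -log D(G(z)) with the discriminator held fixed."""
    grad_a = grad_b = 0.0
    for z in noise_batch:
        error = sigmoid(w * (a * z + b) + c) - 1.0   # pushes D(G(z)) toward 1
        grad_a += error * w * z / len(noise_batch)
        grad_b += error * w / len(noise_batch)
    return a - lr * grad_a, b - lr * grad_b

rng = random.Random(0)
a, b = 1.0, 0.0   # generator parameters
w, c = 0.0, 0.0   # discriminator parameters

for step in range(200):
    real = [rng.gauss(4.0, 1.0) for _ in range(16)]
    noise = [rng.gauss(0.0, 1.0) for _ in range(16)]
    fake = [a * z + b for z in noise]
    w, c = discriminator_step(w, c, real, fake, lr=0.05)   # detective learns first
    a, b = generator_step(a, b, w, c, noise, lr=0.05)      # then the forger adapts
```

A model this small will not settle neatly, and the parameters tend to drift and oscillate. That is the balancing act in miniature: each update changes the problem the other network is trying to solve.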

Evaluating the GAN

Now that you’ve trained your GAN, it’s time to see how well it’s doing. Evaluating GANs is tricky, but here are some common approaches:

- Visual Inspection: One of the simplest ways to evaluate the GAN is to inspect the generated data visually. Does it look similar to the real data?

- Inception Score (IS): This measure uses a pretrained model (like Inception) to evaluate the quality and diversity of the generated images.

- Fréchet Inception Distance (FID): This is another metric that compares the generated data to the real data in terms of their Inception feature distributions. Lower FID scores indicate better generated data.
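The distance behind FID is easiest to grasp in one dimension. The real metric compares high-dimensional Inception features using mean vectors and covariance matrices; the sketch below is a simplified one-dimensional version with made-up feature values, where the formula reduces to means and variances.

```python
import math
from statistics import mean, pvariance

def frechet_distance_1d(real_features, generated_features):
    """Fréchet distance between two 1D Gaussians fitted to the samples."""
    mu_r, mu_g = mean(real_features), mean(generated_features)
    var_r, var_g = pvariance(real_features), pvariance(generated_features)
    return (mu_r - mu_g) ** 2 + var_r + var_g - 2.0 * math.sqrt(var_r * var_g)

real_features = [0.0, 2.0]        # hypothetical feature values from real images
generated_features = [1.0, 3.0]   # hypothetical feature values from generated images

score = frechet_distance_1d(real_features, generated_features)   # 1.0
same = frechet_distance_1d(real_features, real_features)         # 0.0
```

The score grows when the two sets differ in their average or in their spread, so it penalizes both unrealistic samples and a lack of diversity.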

Tuning the GAN

Finally, you may need to tweak your GAN to improve its performance. Tuning a GAN can involve:

- Hyperparameter tuning: Adjust the learning rate, batch size, number of layers, etc. to improve performance.

- Architecture tweaks: Depending on your results, you may need to adjust the architecture of your Generator or Discriminator.

- Data adjustments: Sometimes, changes in the way you preprocess your data can lead to better results.

And voila! You’ve trained and tuned your Generative Adversarial Network! Remember, training GANs can be a bit of an art form.

So don’t be disheartened if you don’t get it right the first time. Keep experimenting and learning, and you’ll get there. Next, let’s look at the loss function that drives this training.

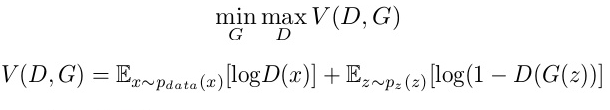

Generative Adversarial Networks (GANs) Loss Function

Let’s dive into the nitty-gritty details of how Generative Adversarial Networks (GANs) work, focusing on their loss function, which is a little like the rules of a game they’re playing. And like any good game, there are challenges!

Think about GANs as if they’re in a contest. The generator is trying to make counterfeit money that looks so real that the discriminator, playing the part of a detective, can’t tell it’s fake.

The generator is doing its best to minimize its chances of getting caught, while the discriminator is maximizing its efforts to catch the fakes. Sounds a bit like a “minimax” game, right?

Now, let’s get to the math behind this game.

D(x): This represents the discriminator’s judgment on the real data instance ‘x’. Essentially, it’s the discriminator’s guess on the probability that ‘x’ is real, not counterfeit.

Ex: It’s all about expectations! Ex is the expected value, or the average if you like, across all real data instances.

G(z): Think of this as the generator’s art project. It’s the output the generator creates when it’s given a random noise ‘z’.

D(G(z)): This is the discriminator’s take on the generator’s output. It’s essentially the discriminator’s guess on how likely the generator’s output (the counterfeit) is real.

Ez: Again, we’re dealing with expectations. Ez represents the expected value over all random inputs to the generator. In other words, it’s the average over all instances the generator churns out.

The loss function used in GANs is derived from cross-entropy, which measures how well a model’s predictions match the actual targets. Here the predictions are the discriminator’s probabilities, and the targets are the true labels: real for samples from the dataset and fake for the generator’s output.
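Putting the terms defined above together gives the minimax objective from the original 2014 GAN paper, written here in LaTeX with E_x and E_z standing for the expectations Ex and Ez:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x}\big[\log D(x)\big]
  + \mathbb{E}_{z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make both terms large by pushing D(x) toward 1 and D(G(z)) toward 0. The generator only influences the second term, and it tries to make that term small by pushing D(G(z)) toward 1.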

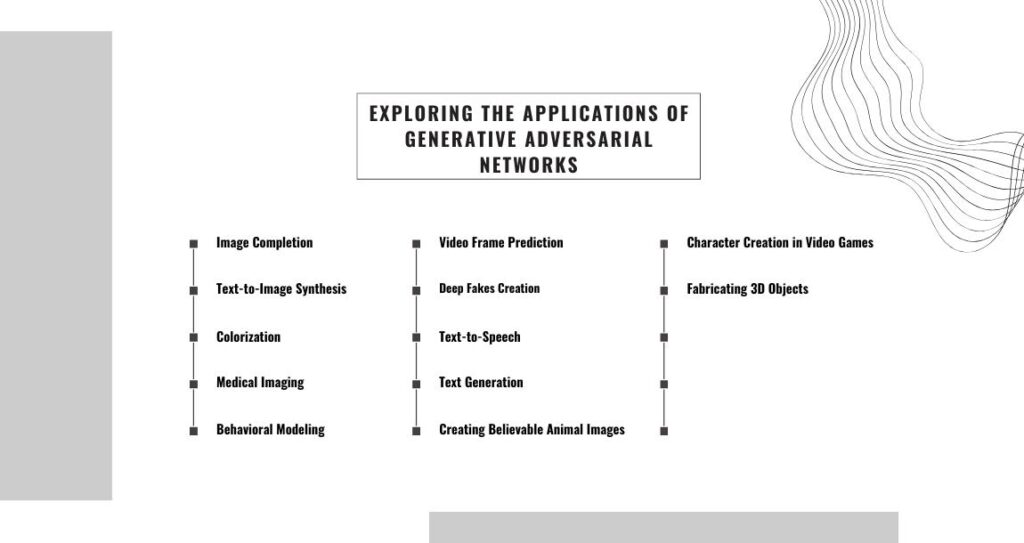

Exploring the Applications of Generative Adversarial Networks

Generative Adversarial Networks (GANs) are becoming increasingly popular in various domains, particularly online retail sales, thanks to their capability to comprehend and recreate visual content with remarkable precision.

Here are some common use cases of GANs, each showcasing their unique abilities in a variety of tasks.

Image Completion

GANs can fill in missing or corrupted parts of an image by inferring from the context within the image. Imagine having an old, damaged photo with missing parts.

Using GANs, you can restore this photo to its original state, filling in the gaps with realistic data inferred from the surrounding information.

Text-to-Image Synthesis

GANs have been used to generate realistic images from textual descriptions. This ability is especially beneficial in situations where visual representation of text is required.

For instance, an online retailer could use a textual description of a product to generate a realistic image of the product prototype, thereby simplifying the process of product design and visualization.

Colorization

GANs can convert black and white images into color by learning the mapping between grayscale and color images.

This is highly useful in the restoration of old black and white photos or films, where GANs can accurately predict the color scheme, bringing vintage photos or films to life.

Medical Imaging

GANs can translate medical scans into more understandable images, facilitating diagnoses.

For example, a GAN might convert a medical scan into a more visually interpretable format, providing a tool for doctors to understand the underlying medical condition better.

In the realm of video production, GANs provide the following benefits:

Behavioral Modeling

GANs can learn and reproduce patterns of human behavior and movement within a frame, contributing to more realistic animations or simulations.

Video Frame Prediction

GANs can predict subsequent video frames based on the current and past frames, creating smooth and visually coherent video sequences. This is particularly useful in video editing and post-production work.

Deep Fakes Creation

GANs are behind the creation of deep fakes, which are synthetic media where a person’s likeness is replaced with another’s.

While this technology has ethical implications, it also showcases the power of GANs in creating highly realistic images and videos.

Aside from visual tasks, GANs also find use in the auditory and textual domains:

Text-to-Speech

GANs can generate realistic speech sounds from text, contributing to more natural-sounding text-to-speech systems. This technology is especially useful in creating virtual assistants or in accessibility applications.

Text Generation

GAN-based models can generate coherent and contextually appropriate text for blogs, articles, product descriptions, and more.

Such AI-generated texts find application in advertising, social media content generation, research, and various forms of communication.

Creating Believable Animal Images

Beyond human faces, GANs are equally adept at producing realistic imagery of animals. BigGAN, a research model from DeepMind (part of Google), is renowned for generating high-quality, lifelike images of various animals, ranging from birds to dogs.

Character Creation in Video Games

The gaming industry also benefits from GANs’ generative capabilities.

An illustrative case is NVIDIA’s use of GANs for character creation in the renowned video game, Final Fantasy XV. This enabled the creation of unique, detailed characters, enriching the gaming experience.

Fabricating 3D Objects

GANs are not limited to 2D images; they can create three-dimensional objects as well.

Researchers at the Massachusetts Institute of Technology leveraged GANs to generate 3D models of furniture items, such as chairs, with a degree of realism that they could be mistaken for human-made designs.

These models have potential applications in architectural visualization and enhancing the realism of video game environments.

Each of these applications illustrates the adaptability and potency of GANs, transforming industries with their ability to generate realistic data.

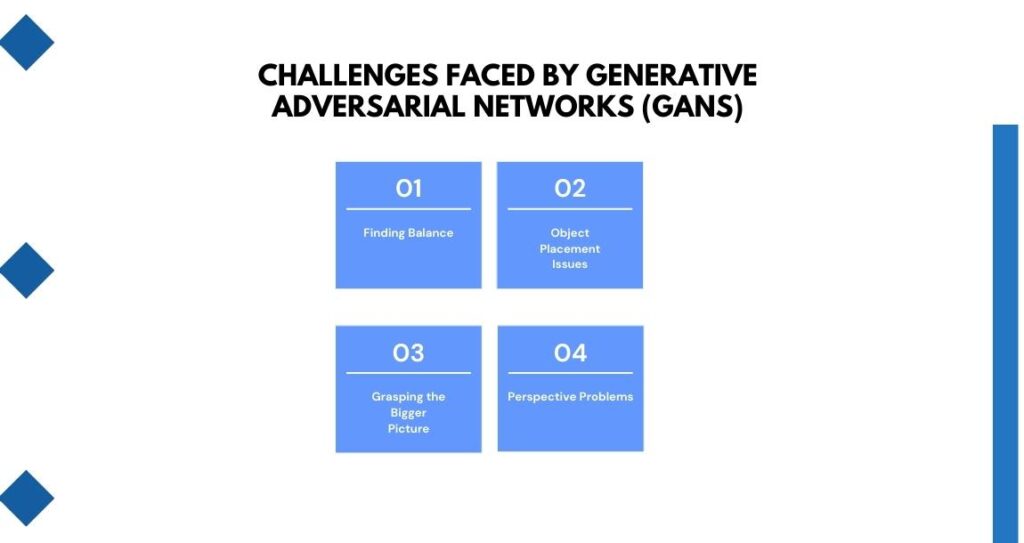

Challenges Faced by Generative Adversarial Networks (GANs)

Of course, it’s not all fun and games. There are a few hurdles along the way. Let’s take a look at some challenges GANs face:

Finding Balance

We don’t want our discriminator to become a tough nut to crack. We want it to be a fair player. If it becomes too strict, our generator might lose hope!

Object Placement Issues

Sometimes, the generator can get a bit confused about where objects should be. Imagine a picture with three horses, and our generator creates six eyes for just one horse. Awkward, right?

Grasping the Bigger Picture

GANs can struggle to understand the overall structure of images. Like an artist focusing too much on the details, they sometimes miss out on the broader view, creating images that don’t quite look realistic.

Perspective Problems

3D images are a tough one for GANs. They’re more comfortable working with 2D images. If we try to train them on 3D images, they might not be able to recreate them accurately.

So, while GANs are super cool, they do have their own set of challenges to overcome. But hey, who doesn’t love a good challenge, right?

Future of Generative Adversarial Networks

GANs have already made a considerable impact in the world of artificial intelligence. But what’s next? The potential applications of GANs are expanding at an exciting pace. Here’s a glimpse into the future.

Creating High-Quality Data

One of the greatest challenges in machine learning is obtaining high-quality data. GANs, with their ability to generate artificial data that’s nearly identical to real data, can solve this problem.

We can anticipate the creation of sophisticated datasets for training purposes, leading to more accurate and robust models.

Media and Entertainment

In the media and entertainment industry, the possibilities are endless. From generating realistic characters for video games or movies to creating AI-generated artwork, music, and even stories, GANs could reshape creativity in the digital age.

Healthcare

In healthcare, GANs could be used to generate synthetic medical data, such as MRI scans or X-rays, to augment scarce training data for diagnostic tools without requiring additional scans that, in the case of X-rays, expose patients to ionizing radiation.

Furthermore, they could be utilized in drug discovery, predicting molecular interactions and effectiveness.

Privacy and Security

While GANs offer great potential, they also pose new challenges in terms of privacy and security. Deepfakes, created using GANs, have already shown how convincing AI-generated media can be, leading to concerns about misinformation and identity theft. Addressing these issues will be a significant focus in the future.

Advances in AI Research

As GANs continue to evolve and improve, they’re likely to play a key role in pushing the boundaries of AI research.

They’ve already shown their ability to learn incredibly complex patterns and distributions. Who knows what they’ll be capable of in the future?

GANs are a thrilling frontier in artificial intelligence, promising to reshape how we generate and analyze data.

While there are challenges ahead, the potential benefits are significant. Who knows what the future holds as we continue to explore and innovate?

Conclusion

There’s no doubt about it: Generative Adversarial Networks (GANs) are one of the most exciting developments in artificial intelligence.

From understanding the basic dynamics between the Generator and the Discriminator to exploring the various types of GANs, it’s been quite a journey!

Through this exploration, we’ve discovered the powerful capabilities of GANs. We’ve learned how they can generate new, realistic data from random noise, and the myriad of applications this has, from creating synthetic media in entertainment, to aiding medical diagnoses, to even helping address data privacy issues.

But our journey doesn’t end here. As GANs continue to evolve, they’re creating new frontiers for innovation.

Whether it’s the development of new types of GANs, refining the training processes, or exploring the ethical considerations, there’s a lot more to anticipate in the world of GANs.

So, as we step into the future of artificial intelligence, let’s continue to explore, innovate, and harness the power of Generative Adversarial Networks with Webisoft. The possibilities are truly endless!