You know those moments when your smartphone seems to read your mind, or when an app makes a suggestion that’s just perfect for you? It’s not magic. But it’s close.

It’s the power of machine learning. This fascinating realm within artificial intelligence is the hero behind many of the conveniences we enjoy today. And at the heart of this intricate world lies a gem called “embeddings”.

So, grab a virtual seat, and let’s get into the captivating world of machine learning and the pivotal role embeddings play in it.

Contents

- 1 Definition of Embeddings

- 2 Why Are Embeddings Handy?

- 3 Significance of Embeddings

- 4 Why Should You Use Embeddings?

- 5 Different Embedding Types

- 6 Popular Embedding Models

- 7 Different Applications of Embeddings

- 8 Understanding Embeddings in ChatGPT

- 9 Storing and Accessing Embeddings in ChatGPT

- 10 Pioneering Advanced Computer Vision with Webisoft

- 11 Wrapping Up!

- 12 FAQs

Definition of Embeddings

Imagine you have a big, detailed painting. Now, imagine if you could capture the essence of that painting in a small sketch. That’s what embeddings do, but for data.

They take big, complex info and turn it into a set of numbers. The cool part is these numbers still hold the core meaning of the original data.

Why Are Embeddings Handy?

Here’s the thing: once you’ve turned your data into these number sets (or embeddings), you can use them in many ways.

Let’s say you have a question, “Why should we recycle?” This question can be turned into a number set. Now, if you have another question or statement, you can quickly see how close its meaning is to your original question just by looking at the numbers.

And it’s not just about words. Pictures can be turned into number sets too. So, you can check if a line of text matches a picture, just by comparing their number sets.
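To make “comparing number sets” concrete, here is a minimal sketch using cosine similarity, one common way to measure how close two embeddings are. The three vectors are made up for illustration; a real embedding model would produce them, usually with hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional embeddings, invented for this example.
recycle_question = [0.9, 0.1, 0.3]   # "Why should we recycle?"
reuse_statement = [0.8, 0.2, 0.4]    # "Reusing materials cuts waste."
pizza_question = [0.1, 0.9, 0.2]     # "What toppings go on pizza?"

close = cosine_similarity(recycle_question, reuse_statement)
far = cosine_similarity(recycle_question, pizza_question)
print(close > far)  # True
```

A score near 1 means the two vectors point in almost the same direction, which we read as “similar meaning”. A score near 0 means they have little in common.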

Let’s see how you can use embeddings:

Storing and Accessing Embeddings

Do you know how you have a big closet with clothes neatly organized in sections? It helps you find exactly what you’re looking for, right?

In the tech scene, there’s a similar concept called a vector store. Think of it as a dedicated closet for embeddings.

By keeping these embeddings well-arranged, it becomes a breeze to do tasks like determining the similarity between two words.

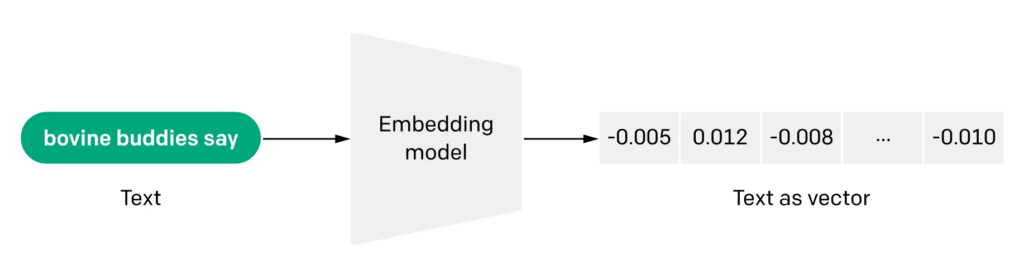

From Text to Embeddings

Before we get into the details, let’s see how to get these embeddings.

First, we take a piece of text and break it down into smaller parts. Think of it like breaking a chocolate bar into pieces. These pieces can be as big as paragraphs or as small as individual words.
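As a rough sketch of that “chocolate bar” step, the helper below splits text into paragraphs, sentences, or words. The function name `chunk_text` and the simple regular expressions are assumptions for illustration; production pipelines usually rely on a proper tokenizer.

```python
import re

def chunk_text(text, level="sentence"):
    """Split text into paragraphs, sentences, or words."""
    if level == "paragraph":
        parts = re.split(r"\n\s*\n", text)          # blank line = new paragraph
    elif level == "sentence":
        parts = re.split(r"(?<=[.!?])\s+", text)    # split after ., ! or ?
    elif level == "word":
        parts = re.findall(r"[A-Za-z0-9']+", text)  # runs of letters/digits
    else:
        raise ValueError(f"unknown level: {level}")
    return [p.strip() for p in parts if p.strip()]

sample = "Cats nap a lot. Kittens play all day!\n\nApples grow on trees."

print(len(chunk_text(sample, "paragraph")))  # 2
print(len(chunk_text(sample, "sentence")))   # 3
print(len(chunk_text(sample, "word")))       # 12
```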

Now, with the help of some smart models (like Word2Vec or FastText), we turn these text pieces into embeddings. Words that have similar meanings get similar number sets.

So, “cat” and “kitten” might have number sets that are close, while “cat” and “apple” would be quite different.

Chunks Matter

Breaking text into chunks isn’t just for fun. It helps in tasks where we want to understand or classify parts of the text.

For instance, if we’re trying to figure out if a sentence is happy or sad, breaking it down can give us more detailed insights.

Retrieving Embeddings from the Store

Once we have our embeddings, we keep them in the vector store. Think of it as a library. When we need to use an embedding, we just look it up. It’s like searching for a book by its title and getting it off the shelf.

The best part about this “library” or vector store? It’s super fast. So, if we want to see how close “apple” is to “fruit” or “computer”, it’s a breeze.
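Here is a toy version of such a “library”, kept entirely in memory. The class name `TinyVectorStore` and the example vectors are hypothetical. Real vector stores add persistence and much faster search, but the idea of “store by key, look up by closeness” is the same.

```python
import math

class TinyVectorStore:
    """A minimal in-memory vector store: save vectors, find the closest ones."""

    def __init__(self):
        self._items = {}

    def add(self, key, vector):
        self._items[key] = list(vector)

    def get(self, key):
        return self._items[key]

    def nearest(self, query, k=1):
        # Score every stored vector against the query, best first.
        scored = [(self._cosine(query, v), key) for key, v in self._items.items()]
        scored.sort(reverse=True)
        return [key for _, key in scored[:k]]

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm

store = TinyVectorStore()
store.add("fruit", [0.9, 0.1, 0.0])      # made-up embeddings
store.add("computer", [0.2, 0.9, 0.1])
store.add("river", [0.0, 0.1, 0.9])

apple = [0.8, 0.5, 0.0]                  # hypothetical embedding for "apple"
print(store.nearest(apple, k=2))         # ['fruit', 'computer']
```

This sketch compares the query against every stored vector, which is fine for a handful of items. Large stores use approximate search so they do not have to scan everything.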

Significance of Embeddings

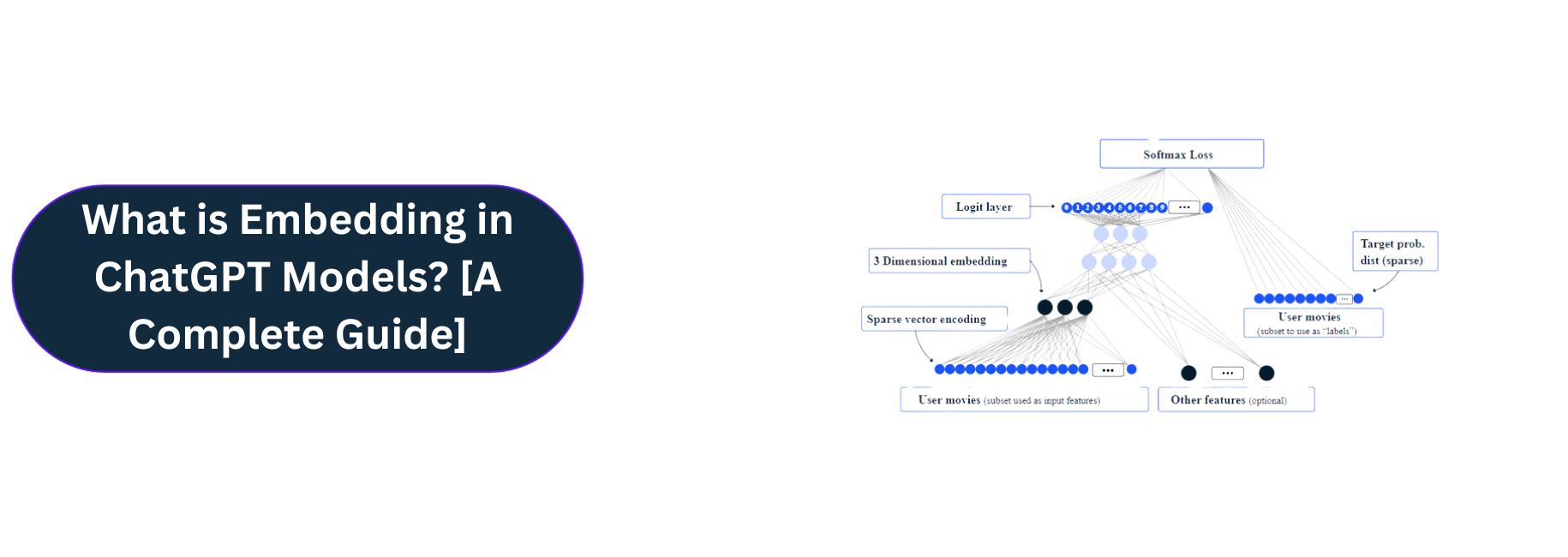

If you’ve ever wondered what makes platforms like ChatGPT tick, you’re in for a treat. One of the key players behind the scenes is something called embeddings. But why are they such a big deal?

Well, transformers are like the engine under the hood for platforms like ChatGPT. Rather than treating a word the same way every time, a transformer weighs each word against the words around it, so it can adapt to the context it is given.

And here’s where embeddings come in. They turn big, complex data such as human language into numbers, making it easier for transformers to work with.

Why Should You Use Embeddings?

There are actually many reasons to try embeddings. They include:

Understanding Meaning

Embeddings have a unique capability to capture the underlying semantics of data. Instead of just processing raw data, they delve into the deeper layers of meaning, allowing for a richer understanding.

This depth is invaluable, especially in tasks like sentiment analysis or topic modeling where discerning the mood, tone, or theme of a text is paramount.

Simplicity in Complexity

The world of big data can be overwhelmingly complex. Embeddings come to the rescue by converting vast and intricate data into a more digestible and manageable format.

Hence, by representing data in a condensed form, embeddings streamline the process of handling and analyzing it, making the task less daunting.

One Size Fits Many

The versatility of embeddings is truly commendable. Once an embedding is created, it’s not restricted to a single application. Instead, it can be repurposed and utilized across various domains and tasks.

Think of embeddings as a multi-tool for data, adaptable and ready to be deployed wherever needed.

Sturdy and Reliable

The robustness of embeddings stems from the extensive amount of data they are trained on. By processing and learning from vast datasets, embeddings develop a comprehensive understanding of patterns, nuances, and relationships.

This extensive training makes them akin to expert detectives, adept at spotting intricate patterns and drawing connections. It also ensures their reliability across a multitude of tasks.

A Catalyst for Smarter Technology

At the heart of many advanced technological solutions lies the power of embeddings. They bridge the gap between machines and human language.

Plus, they enable machines to interpret and process data in a way that’s closer to human understanding.

As a result, they play a pivotal role in enhancing the intelligence and efficiency of our technological systems, leading to more accurate and impressive outcomes.

Different Embedding Types

Now, there are various ways to create embeddings. So, the method you pick really depends on what you’re aiming to achieve and the kind of data you’re working with. Let’s break it down.

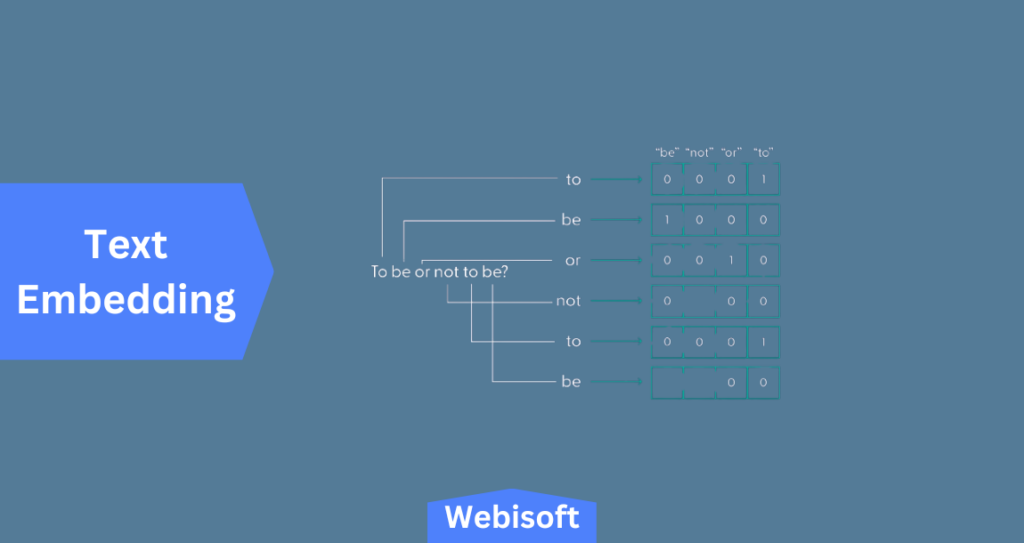

Text Embedding

Think of text embedding as giving numbers to words or sentences. It’s like turning a story into a barcode.

This helps machines understand and work with text. Before we get to this step, there’s something called text encoding, which is like breaking a story into individual words or phrases.

It’s essential to ensure that the method used to turn text into numbers matches the way the story was initially broken down. There are several tools out there, like NNLM and Word2Vec, that help in this process.

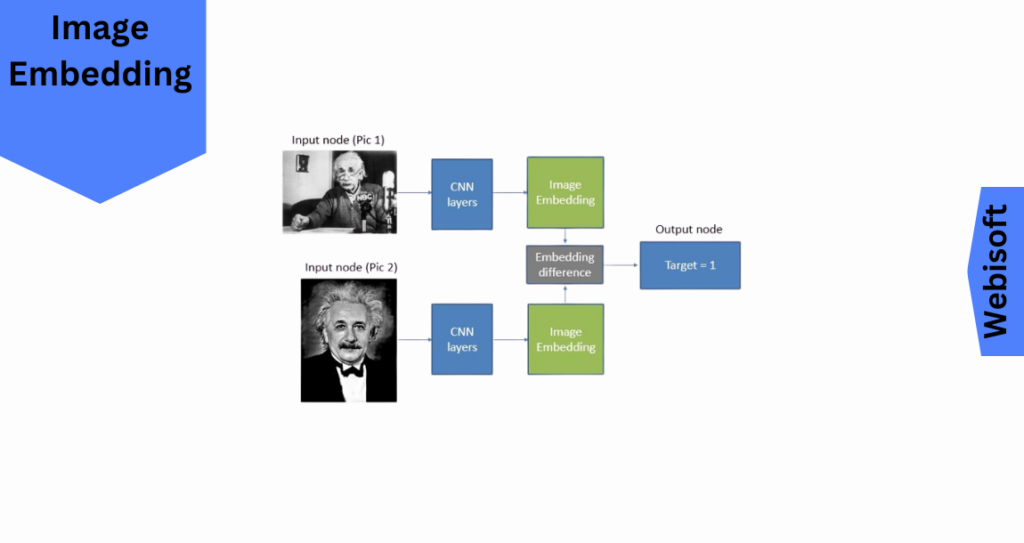

Image Embedding

Now, let’s talk about pictures. Image embedding is about understanding pictures and turning them into number sets.

It’s like describing a painting using only numbers. Deep learning tools help in this, picking out the details of an image and turning them into these number sets.

These number sets can then help in tasks like finding similar images or recognizing objects in a picture.

There are different tools and methods for this though. Some need you to send the image to a server, while others can do the job right on your computer.

For instance, there’s something called the SqueezeNet embedder that’s great for quickly understanding images without needing an internet connection.

Popular Embedding Models

Here are some popular embedding models that help machines understand data better.

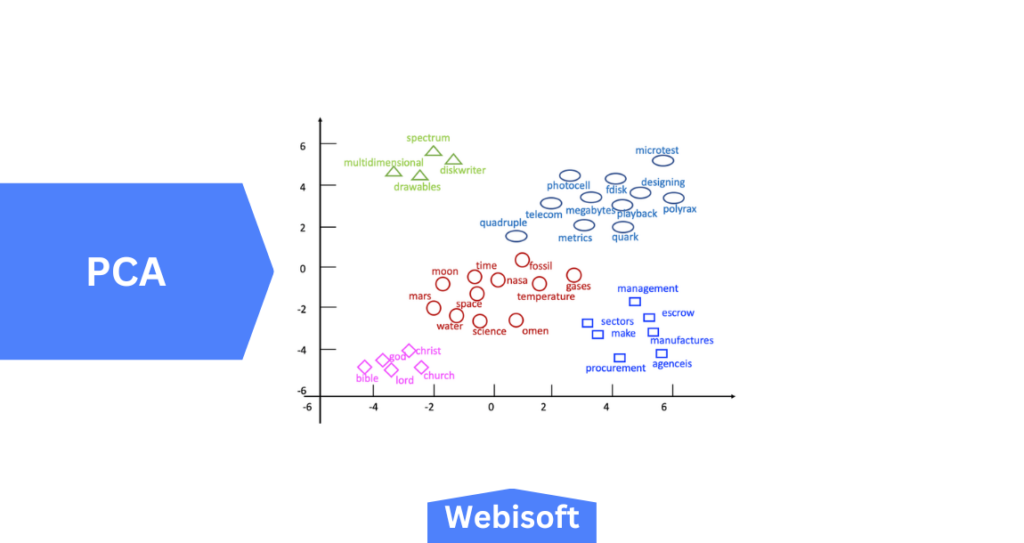

PCA

Ever felt overwhelmed by too much information? PCA, or Principal Component Analysis, is like a superhero that simplifies complex data. It finds the most important parts of the data and represents them in a simpler way.

Think of it as turning a detailed map into a basic sketch. This makes it easier to see patterns and use the data in machine-learning tasks. It’s a favorite tool for tasks like understanding images or texts.
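A minimal sketch of PCA, assuming NumPy is available. The `pca` helper and the sample points are invented for illustration. The points lie almost on a straight line in 3D, so a single component should capture nearly all of the variation.

```python
import numpy as np

def pca(data, n_components):
    """Project data onto its top principal components."""
    centered = data - data.mean(axis=0)
    # Rows of vt are the principal directions, strongest first.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    # Share of the total variance carried by each direction.
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return centered @ components.T, explained[:n_components]

points = np.array([
    [1.0, 2.0, 0.1],
    [2.0, 4.1, 0.0],
    [3.0, 6.0, 0.2],
    [4.0, 8.1, 0.1],
    [5.0, 9.9, 0.0],
])

reduced, explained = pca(points, n_components=1)
print(reduced.shape)        # (5, 1): three numbers per point became one
print(explained[0] > 0.99)  # True: one direction holds almost all the variance
```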

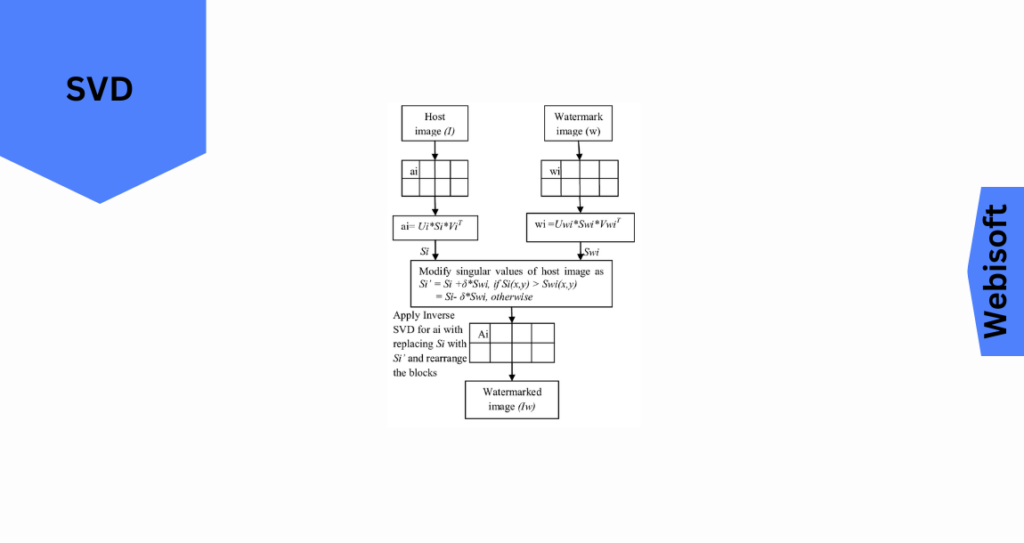

SVD

Singular Value Decomposition (SVD) is all about understanding relationships in data.

Imagine you have a big table of movie ratings from different people. SVD breaks this table down into smaller tables: one for people, one for movies, and a short list of weights that says how much each hidden pattern matters.

By looking at these tables, we can guess how a person might rate a movie they haven’t seen yet.
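The movie-ratings idea can be sketched with NumPy’s `np.linalg.svd`. The ratings table below is made up, and the variable names are assumptions. Folding the weights into the people table leaves one table for people and one for movies.

```python
import numpy as np

# Rows are people, columns are movies (invented ratings from 1 to 5).
ratings = np.array([
    [5.0, 4.0, 1.0, 1.0],
    [4.0, 5.0, 1.0, 2.0],
    [1.0, 1.0, 5.0, 4.0],
    [2.0, 1.0, 4.0, 5.0],
])

u, s, vt = np.linalg.svd(ratings, full_matrices=False)

k = 2                                # keep only the two strongest patterns
people_factors = u[:, :k] * s[:k]    # one row of k numbers per person
movie_factors = vt[:k, :]            # one column of k numbers per movie

# Multiplying the two small tables rebuilds an approximation of the big one.
approx = people_factors @ movie_factors
print(np.round(approx, 1))
```

This toy table has no missing ratings. Real recommender systems have to cope with ratings that do not exist yet, so they typically use factorization methods designed for incomplete data rather than a plain SVD.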

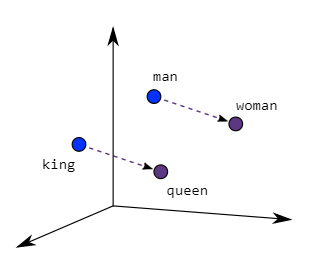

Word2Vec

Word2Vec is like a detective for words. It looks at how words hang out together in sentences and then gives each word a special code. Words that tend to appear in similar company get similar codes.

So, words like “dog” and “puppy” might get codes that are close, while “dog” and “apple” would be quite different. These codes are the word embeddings, and they can even be used to guess a missing word from its neighbors.
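To show what “hanging out together” means in practice, the sketch below builds the (center word, neighbor) pairs that the skip-gram flavor of Word2Vec learns from. It covers only the data-preparation step, not the training itself, and the function name `skipgram_pairs` is an assumption.

```python
def skipgram_pairs(tokens, window=2):
    """Pair each word with the words at most `window` positions away."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

sentence = "the dog chased the ball".split()
pairs = skipgram_pairs(sentence, window=1)

print(len(pairs))  # 8
print(pairs[:3])   # [('the', 'dog'), ('dog', 'the'), ('dog', 'chased')]
```

A model trained to predict the neighbor from the center word ends up giving similar codes to words that share neighbors.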

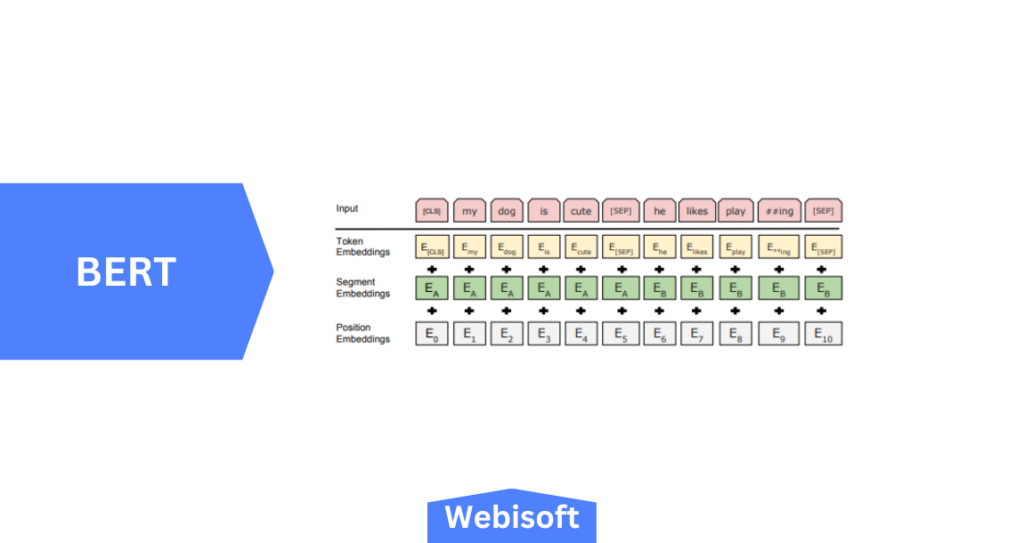

BERT

Meet BERT, a genius when it comes to understanding language. What sets BERT apart is its two-step learning process.

- First, it reads a ton of text (imagine reading all of Wikipedia!) to understand word relationships.

- Then, it gets specialized training, like studying medical texts.

The magic of BERT is its ability to understand words based on their surroundings. For instance, it knows that “bank” in “I went to the bank” is different from “bank” in “I sat on the river bank.”

It’s this knack for context that makes BERT a star at understanding text.

Different Applications of Embeddings

From helping voice assistants understand us better to making our online searches more relevant, embeddings do a lot of heavy lifting in the tech world. Here are some of the main applications:

Making Choices Easier

Ever wondered how platforms like Netflix seem to know just what movie you’re in the mood for? That’s the essence of recommender systems. They predict what you might like based on your past choices.

Now, there are different ways to do this, but one popular method involves using embeddings. By understanding the relationship between users and products, these systems can suggest items that are just right for you.

Think of it as a friend who knows your taste really well and always has the best recommendations.
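A minimal sketch of an embedding-based recommender: users and movies live in the same space, and a dot product scores how well they match. The movie titles, the three “taste” dimensions, and the numbers are all invented for illustration.

```python
def score(user_vec, item_vec):
    """Dot product: higher means a better match between user and item."""
    return sum(u * i for u, i in zip(user_vec, item_vec))

def recommend(user_vec, items, k=2):
    ranked = sorted(items, key=lambda name: score(user_vec, items[name]), reverse=True)
    return ranked[:k]

# Hypothetical dimensions: [action, romance, documentary]
movies = {
    "Space Chase": [0.9, 0.1, 0.0],
    "Love in Paris": [0.1, 0.9, 0.0],
    "Ocean Life": [0.0, 0.1, 0.9],
    "Robot Heist": [0.8, 0.2, 0.1],
}
action_fan = [0.9, 0.2, 0.1]

print(recommend(action_fan, movies))  # ['Space Chase', 'Robot Heist']
```

In a real system, these vectors are learned from viewing history rather than written by hand.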

Semantic Search

We’ve all been there, typing something into a search engine and getting results that are way off.

Enter semantic search. With the help of embeddings, search engines can now grasp the deeper meaning behind your query.

So, if you’re searching for “How to bake a cake,” it knows you’re after a recipe, not just random cake facts. It’s like having a search engine that truly gets you.

Computer Vision

Now, let’s talk about how machines see the world. Embeddings play a key role in tasks such as guiding self-driving cars and turning text into art.

They help translate images into a language machines understand. For instance, companies like Tesla are using this approach to train their cars on game-generated images instead of real-world photos.

And there’s even tech that can turn your words into pictures, bridging the gap between what we say and what we see.

Understanding Embeddings in ChatGPT

Now let’s see how ChatGPT, a popular language model, uses embeddings to understand and generate text.

Breaking Down Text

When you ask ChatGPT something like “Write me a poem about cats,” it starts by splitting that sentence into smaller parts, like “Write,” “me,” “a,” “poem,” “about,” and “cats.”

But here’s the thing: computers don’t really “get” words. So, these parts need to be turned into something a computer can understand.

Turning Words into Numbers

This is where embeddings come in. They transform these text parts into lists of numbers. The amazing part is that words with similar meanings end up with similar number lists.

This is done by training a system to recognize which words often hang out together in sentences.
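The path from text parts to number lists can be sketched as a simple lookup table. This is a simplification under stated assumptions: splitting on spaces stands in for the subword tokenizers real models use, and the table is filled with random numbers here, whereas a trained model learns those values.

```python
import random

prompt = "Write me a poem about cats"
tokens = prompt.split()  # stand-in for a real tokenizer

# Give every distinct token an integer id.
vocab = {word: idx for idx, word in enumerate(sorted(set(tokens)))}

# One row of numbers per id. Random here; learned during training in practice.
rng = random.Random(0)
dim = 4
embedding_table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in vocab]

token_ids = [vocab[t] for t in tokens]
vectors = [embedding_table[i] for i in token_ids]

print(token_ids)     # [0, 4, 1, 5, 2, 3]
print(len(vectors))  # 6 vectors, one per token
```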

Predicting the Next Word

ChatGPT has a special way of predicting the next word in a sentence. It looks at all the words before it and uses something called a transformer.

Without getting too technical, transformers are really good at figuring out which words in a sentence are most important. They’ve been trained on a ton of text to make these decisions.
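For the curious, “figuring out which words are most important” is usually done with a calculation called attention. Below is a minimal sketch of scaled dot-product attention for a single query, using tiny made-up vectors. A real transformer runs many of these in parallel with learned weights.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Weigh each value by how well its key matches the query."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

# Hypothetical 2-dimensional vectors for three words in a sentence.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights[0] > weights[1])  # True: the first word matters more than the second
```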

Generating a Response

Once ChatGPT has all these number lists (embeddings), it processes them to come up with a response. It does this step by step, word by word.

After deciding on a word, it adds it to the list and then thinks about the next word. It keeps going until it has given a complete answer.
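The word-by-word loop can be sketched with a toy “model” that is just a table of next-word probabilities. Everything in the table is invented. Unlike this sketch, a real model looks at all the previous words rather than only the last one, and it often samples among likely words instead of always taking the top choice.

```python
# Hypothetical next-word probabilities, keyed by the previous word.
NEXT_WORD = {
    "<start>": {"cats": 0.7, "dogs": 0.3},
    "cats": {"nap": 0.6, "purr": 0.4},
    "nap": {"softly": 0.8, "<end>": 0.2},
    "purr": {"<end>": 1.0},
    "softly": {"<end>": 1.0},
    "dogs": {"<end>": 1.0},
}

def generate(max_words=10):
    """Pick the most likely next word until the end marker shows up."""
    words = ["<start>"]
    for _ in range(max_words):
        options = NEXT_WORD[words[-1]]
        best = max(options, key=options.get)
        if best == "<end>":
            break
        words.append(best)
    return words[1:]  # drop the start marker

print(generate())  # ['cats', 'nap', 'softly']
```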

The Role of OpenAI Embeddings

OpenAI’s approach to embeddings is what makes ChatGPT so impressive. When you type something into ChatGPT, it uses these embeddings to represent your words as numbers.

Then, it processes these numbers through layers that decide which words are key to crafting a good response. The end result? A coherent and relevant answer to your question.

Storing and Accessing Embeddings in ChatGPT

Now we’ll see how we can efficiently store and pull up embeddings, especially when we’re dealing with a ton of data, like in ChatGPT.

Meet the Llama Index

Think of the Llama index as a super-smart organizer for embeddings. It’s built to handle these high-dimensional vectors, making it quick and easy to find similar ones based on how close they are in meaning.

Imagine you have a massive room filled with boxes, and you need to find one specific item. Instead of searching box by box, wouldn’t it be easier if the boxes were grouped by category?

That’s kind of how the Llama index operates. It breaks down the vast space of embeddings into smaller sections. Each section holds a group of vectors that are somewhat similar.
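The “boxes grouped by category” idea can be sketched as a partitioned index: each vector is filed under its nearest center point, and a search only opens the one matching section. This is a generic illustration with made-up names and data, not the API or internals of any particular library.

```python
import math

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class PartitionedIndex:
    """File vectors under their nearest centroid; search only that cell."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.cells = {i: [] for i in range(len(centroids))}

    def _nearest_cell(self, vector):
        return min(range(len(self.centroids)),
                   key=lambda i: distance(vector, self.centroids[i]))

    def add(self, key, vector):
        self.cells[self._nearest_cell(vector)].append((key, vector))

    def search(self, query):
        cell = self.cells[self._nearest_cell(query)]
        if not cell:
            return None
        return min(cell, key=lambda item: distance(query, item[1]))[0]

# Hypothetical 2-dimensional embeddings and hand-picked section centers.
index = PartitionedIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])
index.add("cat", [1.0, 1.0])
index.add("kitten", [1.0, 2.0])
index.add("car", [9.0, 9.0])
index.add("truck", [10.0, 9.0])

print(index.search([1.2, 1.8]))  # kitten
print(index.search([9.1, 9.0]))  # car
```

The trade-off is speed for a little accuracy: a query near the border of two sections might miss a close neighbor filed in the other one.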

Llama Index in ChatGPT

When an application built around ChatGPT is trying to come up with a response, it needs to find context vectors that match the input text. The Llama index speeds up this process. It helps ChatGPT give answers that make sense and feel relevant.

Now the question is: can it handle big data?

Absolutely! The Llama index is like a storage champ. It can manage datasets with millions, even billions, of vectors. So, for tasks that need to sift through heaps of text data, this index is a lifesaver.

Another cool thing is that the Llama index doesn’t hog memory. It smartly keeps only some vectors in immediate memory and fetches others as needed.

This means applications that use embeddings alongside ChatGPT can run without gobbling up all your computer’s memory.

Pioneering Advanced Computer Vision with Webisoft

Webisoft demonstrates a profound expertise in the domain of computer vision, particularly with the Vision Transformer (ViT) model.

Their comprehensive article delves not only into the technical intricacies of the model but also into its practical applications, advanced techniques, and future potential.

Their holistic understanding suggests a capability to provide well-rounded solutions tailored to diverse needs.

Furthermore, Webisoft’s forward-looking perspective on the evolution of Vision Transformers and their invitation to businesses to harness this technology indicates their commitment to innovation and offering bespoke solutions.

Thus, for businesses aiming to leverage advanced computer vision techniques, Webisoft emerges as a knowledgeable and reliable choice.

Wrapping Up!

That wraps up our discussion of what embeddings are. In the dynamic world of machine learning, embeddings shine brightly.

They act as numerical codes that summarize the essence of words. These codes enable models, especially powerhouses like ChatGPT, to understand and generate text.

OpenAI’s unique approach to embeddings ensures that ChatGPT doesn’t just view words in isolation but grasps the broader narrative. It’s evident that embeddings, with their ability to capture the richness of language, play a pivotal role in smarter technology.

Discover the power of embeddings in ChatGPT with Webisoft!

FAQs

Why are embeddings important for ChatGPT?

Embeddings allow ChatGPT to understand the relationships between different words and their contexts, enabling it to generate coherent and contextually relevant responses.

How are embeddings created for ChatGPT?

Embeddings are created by training neural networks on vast amounts of text data. The network learns to represent words or phrases as high-dimensional vectors based on their context.

Do embeddings make ChatGPT’s responses more accurate?

Yes, embeddings help the model capture the nuances and complexities of language, leading to more accurate and contextually appropriate responses.

Are embeddings unique to ChatGPT?

No, embeddings are used in various machine learning and natural language processing models. However, the way ChatGPT utilizes embeddings is tailored to its architecture and objectives.