The Vision Transformer (ViT) is a deep learning model that applies the transformer architecture, originally designed for natural language processing, to computer vision tasks.

Unlike traditional convolutional neural networks (CNNs) that have been widely used in computer vision, ViT replaces convolutions with self-attention mechanisms to capture global relationships between image patches. This allows ViT to handle both local and global visual information effectively.

Contents

- 1 Advantages of using transformers in computer vision tasks

- 2 The Architecture of Vision Transformer

- 3 Building and Implementing a Vision Transformer

- 4 Advanced Techniques and Enhancements of Vision Transformer

- 4.1 A. Pretraining with Large-scale Datasets (e.g., ImageNet)

- 4.2 B. Data Augmentation Strategies for Better Generalization

- 4.3 C. Regularization Techniques (e.g., dropout, weight decay)

- 4.4 D. Attention Mechanisms and Their Variants (e.g., sparse attention)

- 4.5 E. Architectural Modifications and Model Variants (e.g., DeiT, TNT)

- 5 Case Studies and Applications of Vision Transformer

- 6 Limitations and Future Directions

- 7 Final Thoughts

Advantages of using transformers in computer vision tasks

Capture global context

Transformers excel at capturing long-range dependencies in data, making them well-suited for tasks that require understanding global contexts, such as image classification, object detection, and semantic segmentation.

Scalability

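Transformers scale well: in the original ViT experiments, accuracy kept improving as model size and pretraining data grew. Because self-attention operates on a sequence of tokens, the encoder can also accept a different number of patches, although ViT’s learned position embeddings must be interpolated when the input resolution changes, and CNNs that end in global pooling are not restricted to fixed-size inputs either.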

Interpretability

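Self-attention weights show which regions of the input the model attends to when making a prediction, so attention maps can be visualized to gain some insight into its behavior. They are a useful diagnostic rather than a complete explanation of the model’s decisions.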

Transfer learning

Transformers pretrained on large-scale datasets can be fine-tuned on specific tasks, transferring what they have learned and improving performance on smaller datasets.

Generalization

Transformers have shown impressive generalization capabilities across various computer vision tasks, even without task-specific architectural modifications.

When pretrained on sufficiently large datasets, Vision Transformers have achieved state-of-the-art performance on several image classification benchmarks and have proven effective in a range of other computer vision applications.

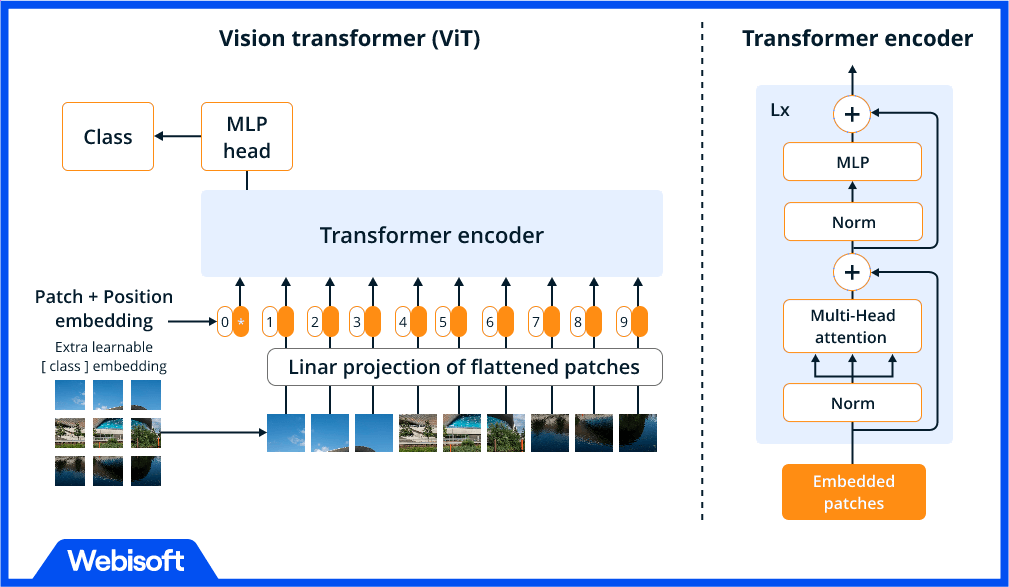

The Architecture of Vision Transformer

A. Input Embeddings:

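- Patching the image: The input image is divided into non-overlapping patches of equal size (for example, 16 × 16 pixels). Each patch is flattened into a vector and mapped by a learned linear projection to a fixed-size embedding, so it can be treated as a token, much like a word in natural language processing tasks. The local information within each patch is thus summarized in a single token.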

- Positional encodings: Because self-attention by itself ignores the order of its inputs, positional encodings are added to the patch embeddings. They tell the model where each patch came from (the original ViT uses learned 1D position embeddings, one per patch position), providing information about the spatial arrangement of the image.
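The patching and position-embedding steps can be sketched in plain Python. This is a minimal illustration under simplifying assumptions, not the original ViT code: the image is a nested list indexed as `image[row][col][channel]`, the projection matrix and position embeddings are random stand-ins for learned parameters, the helper names `patchify` and `embed_patches` are hypothetical, and the [CLS] token is omitted.

```python
import random

def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches.

    Patches come out in raster order (left to right, top to bottom), and each one is
    a flat list of patch_size * patch_size * C values.
    """
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0, "image must tile evenly"
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    flat.extend(image[row][col])  # all channels of this pixel
            patches.append(flat)
    return patches

def embed_patches(patches, projection, position_embeddings):
    """Project each flattened patch to embed_dim and add its position embedding."""
    tokens = []
    for index, patch in enumerate(patches):
        # projection is (patch_dim x embed_dim), so token = patch @ projection
        token = [sum(p * w for p, w in zip(patch, column)) for column in zip(*projection)]
        tokens.append([t + pos for t, pos in zip(token, position_embeddings[index])])
    return tokens

# Toy example: a 4 x 4 RGB image with 2 x 2 patches -> 4 patches of 2 * 2 * 3 = 12 values.
rng = random.Random(0)
image = [[[rng.random() for _ in range(3)] for _ in range(4)] for _ in range(4)]
patch_size, embed_dim = 2, 8
patches = patchify(image, patch_size)
patch_dim = len(patches[0])
# Random stand-ins for parameters that a real model would learn.
projection = [[rng.gauss(0.0, 0.02) for _ in range(embed_dim)] for _ in range(patch_dim)]
position_embeddings = [[rng.gauss(0.0, 0.02) for _ in range(embed_dim)] for _ in patches]
tokens = embed_patches(patches, projection, position_embeddings)
print(len(tokens), len(tokens[0]))  # 4 8
```

With a 224 × 224 RGB image and 16 × 16 patches, the same procedure yields 14 × 14 = 196 patches of 16 · 16 · 3 = 768 values each. Real implementations usually perform the split and projection in one step with a convolution whose kernel size and stride equal the patch size, which is mathematically equivalent.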

B. Transformer Encoder:

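- Self-Attention mechanism: The core component of the Transformer Encoder is the self-attention mechanism, which lets every patch attend to every patch in the image (including itself) and thereby capture global relationships. Each patch embedding is projected into a query, a key, and a value vector; the dot products between one patch’s query and all keys, scaled and passed through a softmax, give the attention weights that determine how much information that patch gathers from each of the others. ViT runs several such attention heads in parallel (multi-head self-attention) and combines their outputs.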

- Multi-layer perceptron (MLP) blocks: After self-attention, each patch embedding passes through an MLP block consisting of two fully connected layers with a GELU non-linearity in between. The MLP is applied to every patch independently; interactions between patches happen only in the attention layers. Both sub-layers are preceded by layer normalization and wrapped in residual connections, and the encoder stacks several such blocks (12 in ViT-Base).
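A single encoder block can be sketched as follows. It is a simplified illustration under stated assumptions rather than a faithful ViT layer: it uses one attention head instead of several, omits the attention output projection, biases, dropout, and the learned layer-norm parameters, and uses small random matrices in place of trained weights. The function names are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two matrices, used for the residual connections."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax(values):
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def layer_norm(x, eps=1e-6):
    """Normalize each token to zero mean and unit variance (learned scale and shift omitted)."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(q[0]))
    scores = [[sum(a * b for a, b in zip(q_row, k_row)) / scale for k_row in k] for q_row in q]
    weights = [softmax(row) for row in scores]  # row i: how much token i draws from each token
    return matmul(weights, v), weights

def gelu(v):
    """Exact GELU activation."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))

def mlp(x, w1, w2):
    """Two fully connected layers with GELU in between, applied to each token independently."""
    hidden = [[gelu(h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def encoder_block(x, p):
    """Pre-norm encoder block: x + Attention(LN(x)), then x + MLP(LN(x))."""
    attn_out, weights = self_attention(layer_norm(x), p["w_q"], p["w_k"], p["w_v"])
    x = add(x, attn_out)
    x = add(x, mlp(layer_norm(x), p["w1"], p["w2"]))
    return x, weights

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
num_tokens, dim, hidden_dim = 4, 8, 32  # toy sizes; ViT-Base uses 768 and 3072
x = random_matrix(num_tokens, dim, rng)
params = {name: random_matrix(dim, dim, rng) for name in ("w_q", "w_k", "w_v")}
params["w1"] = random_matrix(dim, hidden_dim, rng)
params["w2"] = random_matrix(hidden_dim, dim, rng)
out, weights = encoder_block(x, params)
print(len(out), len(out[0]))  # 4 8
```

Row `i` of `weights` shows how strongly token `i` attends to every token, which is what attention-map visualizations display. In a full model, several such blocks are stacked, and each head works on a slice of the embedding dimension.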

C. Classification Head:

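- Global Average Pooling: Once the patch embeddings have been processed through the Transformer Encoder, a global average pooling operation can be applied to aggregate the information across patches into a fixed-length vector that summarizes the global context of the image. The original ViT instead prepends a learnable [CLS] token to the patch sequence and uses its final embedding as the image representation; both approaches are used in practice.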

- Fully connected layers: The pooled representation is fed into the classification head, which maps it to one score (logit) per class. In the original ViT, this head is an MLP with one hidden layer during pretraining and a single linear layer during fine-tuning.

- Softmax activation: The final fully connected layer is followed by a softmax activation function, which produces the probabilities for different classes in the classification task. The class with the highest probability is predicted as the output.
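The classification head can be sketched as a pooling step, one linear layer, and a softmax. The function below is a hypothetical illustration that supports both pooling choices, the mean over patch tokens and the [CLS] token used by the original ViT; the weights are hand-picked toy values rather than trained parameters.

```python
import math

def softmax(values):
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def classify(tokens, weights, bias, use_cls_token=False):
    """Pool encoder outputs, apply one linear layer, and return (probabilities, argmax).

    tokens: list of token vectors from the encoder. If use_cls_token is True, the first
    token is treated as the [CLS] token; otherwise all tokens are mean-pooled.
    weights: (embed_dim x num_classes) nested list; bias: one value per class.
    """
    if use_cls_token:
        pooled = tokens[0]
    else:
        pooled = [sum(column) / len(tokens) for column in zip(*tokens)]
    logits = [sum(p * w for p, w in zip(pooled, column)) + b
              for column, b in zip(zip(*weights), bias)]
    probs = softmax(logits)
    prediction = max(range(len(probs)), key=probs.__getitem__)
    return probs, prediction

# Two tokens of dimension 2 and three classes.
tokens = [[1.0, 0.0], [3.0, 2.0]]  # mean-pooled -> [2.0, 1.0]
weights = [[1.0, 0.0, -1.0],
           [0.0, 1.0, 0.0]]
bias = [0.0, 0.0, 0.0]
probs, prediction = classify(tokens, weights, bias)
print(prediction)  # 0
```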

The architecture of the Vision Transformer is highly modular, with the Transformer Encoder being the key component responsible for capturing global relationships and extracting meaningful representations from the input image. The combination of self-attention, MLP blocks, and a pooled classification head allows the model to effectively process and classify images using transformers.

Building and Implementing a Vision Transformer

A. Data Preprocessing:

Image resizing and normalization: Before being fed into the Vision Transformer, images are typically resized to a fixed input size (for example, 224 × 224 pixels) to ensure consistency. Each color channel is then normalized by subtracting its mean and dividing by its standard deviation.

Splitting the dataset into training and validation sets: The dataset containing labeled images is divided into training and validation sets. The training set is used to train the Vision Transformer model, while the validation set is used to monitor the model’s performance during training and fine-tuning.
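The normalization and splitting steps can be sketched in plain Python. The per-channel mean and standard deviation below are the values commonly used for ImageNet-trained models and are an assumption here: the statistics should match those used to train any checkpoint you fine-tune. Resizing is left to an image library, and the `(path, label)` samples are hypothetical.

```python
import random

# Assumed per-channel statistics (common ImageNet values); match your checkpoint's.
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)

def normalize(image):
    """Scale 0-255 RGB pixels to [0, 1], then standardize each channel."""
    return [[[(value / 255.0 - MEAN[c]) / STD[c] for c, value in enumerate(pixel)]
             for pixel in row] for row in image]

def train_val_split(samples, val_fraction=0.2, seed=42):
    """Shuffle a copy of the labeled samples and split off a validation set."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    num_val = int(len(shuffled) * val_fraction)
    return shuffled[num_val:], shuffled[:num_val]

samples = [(f"img_{i}.jpg", i % 3) for i in range(10)]  # hypothetical (path, label) pairs
train, val = train_val_split(samples)
print(len(train), len(val))  # 8 2
```

A fixed seed keeps the split reproducible across runs, so validation scores from different experiments remain comparable.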

B. Model Configuration:

Defining the input size and number of classes: The input image size, together with the patch size, determines how many patches (tokens) the encoder processes; for example, a 224 × 224 image split into 16 × 16 patches yields 14 × 14 = 196 patches. The number of classes sets the output dimension of the final layer that feeds the softmax.

Choosing hyperparameters: Hyperparameters such as learning rate, batch size, patch size, number of transformer layers, number of attention heads, hidden dimension, and dropout rates need to be determined. These choices can significantly impact the model’s performance and training dynamics.
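A configuration object makes these choices explicit and lets derived quantities be computed rather than hand-coded. The defaults below follow the ViT-Base/16 architecture (12 layers, hidden size 768, 12 heads, MLP size 3072); the 1,000 classes assume ImageNet-1k, the dropout value is only an example, and the class name `ViTConfig` is hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ViTConfig:
    image_size: int = 224
    patch_size: int = 16
    num_channels: int = 3
    num_classes: int = 1000   # assumes ImageNet-1k
    hidden_dim: int = 768     # embedding dimension of every token
    num_layers: int = 12      # stacked encoder blocks
    num_heads: int = 12
    mlp_dim: int = 3072
    dropout: float = 0.1      # example value, not a recommendation

    @property
    def num_patches(self) -> int:
        assert self.image_size % self.patch_size == 0, "image must tile evenly into patches"
        return (self.image_size // self.patch_size) ** 2

    @property
    def patch_dim(self) -> int:
        """Length of one flattened patch before the linear projection."""
        return self.patch_size * self.patch_size * self.num_channels

    @property
    def head_dim(self) -> int:
        assert self.hidden_dim % self.num_heads == 0, "heads must split the hidden size evenly"
        return self.hidden_dim // self.num_heads

config = ViTConfig()
print(config.num_patches, config.patch_dim, config.head_dim)  # 196 768 64
print(ViTConfig(image_size=384).num_patches)  # 576
```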

C. Model Initialization:

Initializing the Vision Transformer model architecture: The Vision Transformer model, including the patch embedding, the Transformer Encoder, the classification head, and the connections between them, is constructed according to the chosen configuration.

Initializing the weights and biases: The weights and biases of the model are either initialized randomly or loaded from a pretrained checkpoint. Pretraining means the model starts from weights learned on a large-scale dataset, such as ImageNet, so that it can benefit from transfer learning; when the number of classes differs, the classification head is typically replaced with a newly initialized layer.
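Random initialization and loading pretrained weights can be combined in one step: start from random values, then overwrite every parameter whose name and shape match the checkpoint. This is a plain-Python sketch; the parameter names, toy shapes, and the normal initializer with standard deviation 0.02 are assumptions, and the helper functions are hypothetical.

```python
import random

def init_params(shapes, seed=0, std=0.02):
    """Randomly initialize each named (rows x cols) matrix from N(0, std^2)."""
    rng = random.Random(seed)
    return {name: [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]
            for name, (rows, cols) in shapes.items()}

def shape_of(matrix):
    return (len(matrix), len(matrix[0]))

def load_pretrained(params, pretrained):
    """Copy every pretrained matrix whose name and shape match; keep the rest random.

    A classification head trained for a different number of classes has a different
    shape, so it is skipped and stays randomly initialized for fine-tuning.
    """
    loaded, skipped = [], []
    for name, matrix in pretrained.items():
        if name in params and shape_of(params[name]) == shape_of(matrix):
            params[name] = [row[:] for row in matrix]
            loaded.append(name)
        else:
            skipped.append(name)
    return loaded, skipped

# Hypothetical parameter names and toy shapes: 1,000 pretrained classes, 5 target classes.
pretrained = init_params({"patch_proj": (12, 8), "head": (8, 1000)}, seed=1)
params = init_params({"patch_proj": (12, 8), "head": (8, 5)})
loaded, skipped = load_pretrained(params, pretrained)
print(loaded, skipped)  # ['patch_proj'] ['head']
```

Because the head’s shape depends on the number of classes, the 5-class head stays randomly initialized, which is exactly the part fine-tuning must learn from scratch.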

D. Training the Model:

Forward and backward propagation: During training, images are passed through the Vision Transformer in batches. Forward propagation computes the predicted probabilities for each image, and the loss function (e.g., cross-entropy) quantifies the discrepancy between the predicted and true labels. Backward propagation calculates the gradients of the loss with respect to the model’s parameters.

Gradient descent and optimization: The gradients are used to update the model’s parameters using optimization algorithms like stochastic gradient descent (SGD), Adam, or RMSprop. These algorithms adjust the weights and biases in the direction that minimizes the loss function.

Monitoring training progress with validation set: The model’s performance is periodically evaluated on the validation set to monitor its accuracy and identify potential overfitting. Early stopping techniques can be employed based on the validation performance to prevent the model from overfitting the training data.
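Two pieces of this loop are easy to show in isolation: the cross-entropy loss and an early-stopping rule. The sketch below is hypothetical; the patience value and the validation losses are made-up illustrative numbers, and a real loop would also run the forward pass, backpropagation, and an optimizer step each epoch.

```python
import math

def cross_entropy(logits, label):
    """Negative log-probability of the true class under softmax(logits)."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return log_sum_exp - logits[label]

class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` evaluations."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = math.inf
        self.bad_evals = 0

    def step(self, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss  # improvement: a real loop would save a checkpoint here
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience

# Simulated validation losses, one per epoch (illustrative numbers, not real results).
stopper = EarlyStopping(patience=2)
for epoch, val_loss in enumerate([0.90, 0.75, 0.70, 0.72, 0.71, 0.69]):
    if stopper.step(val_loss):
        print(f"stopping after epoch {epoch}")  # stopping after epoch 4
        break
```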

E. Evaluation and Fine-tuning:

Testing the model on a separate test set: Once training is complete, the model’s performance is evaluated on a separate test set that was not used during training or validation. This provides an unbiased assessment of the model’s generalization ability.

Analyzing model performance and adjusting hyperparameters: Performance metrics such as accuracy, precision, recall, or F1 score are analyzed to understand the model’s strengths and weaknesses. Hyperparameter adjustments should be driven by validation results; reusing the test set for tuning would make its final assessment optimistic rather than unbiased.
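The metrics named above can be computed directly from predicted and true labels. This sketch computes per-class precision, recall, and F1 plus their macro average (the unweighted mean over classes); the labels are a made-up toy example, and the function names are hypothetical.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class treated as the positive label."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    return sum(precision_recall_f1(y_true, y_pred, c)[2] for c in classes) / len(classes)

y_true = ["cat", "cat", "dog", "dog", "bird"]
y_pred = ["cat", "dog", "dog", "dog", "cat"]
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy)  # 0.6
print(precision_recall_f1(y_true, y_pred, "dog"))
print(macro_f1(y_true, y_pred))
```

Macro averaging weights every class equally, so a class the model never predicts (here, "bird") pulls the score down even though it is rare.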

F. Inference and Deployment:

Loading the trained model: The trained Vision Transformer model is loaded into memory for making predictions on new, unseen images.

Preprocessing new input images: Similar to the preprocessing done during training, new input images need to be resized, normalized, and prepared as input to the Vision Transformer model.

Making predictions with the Vision Transformer model: The preprocessed images are passed through the Vision Transformer, and the model generates predictions by assigning class probabilities to the input images.
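At inference time, the model’s logits are turned into class probabilities and the most likely classes are reported. The sketch below assumes the logits have already been produced by a model for one preprocessed image; the class names, logit values, and the `top_k` helper are hypothetical.

```python
import math

def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(logits, class_names, k=3):
    """Return the k most probable (class_name, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Hypothetical logits standing in for the model's output on one image.
class_names = ["cat", "dog", "bird", "fish"]
logits = [2.0, 3.5, 0.1, -1.0]
for name, prob in top_k(logits, class_names, k=2):
    print(f"{name}: {prob:.3f}")
```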

Building and implementing a Vision Transformer involves data preprocessing, model configuration, initialization, training, evaluation, and inference steps. Iterative experimentation and fine-tuning are often performed to optimize the model’s performance on the specific computer vision task at hand.

Advanced Techniques and Enhancements of Vision Transformer

A. Pretraining with Large-scale Datasets (e.g., ImageNet):

Pretraining process: Vision Transformers benefit from pretraining on large-scale datasets, where the model learns general visual representations; the original ViT was pretrained on ImageNet-21k and the much larger JFT-300M dataset, and performed less well when trained on ImageNet-1k alone. The pretrained model can then be fine-tuned on specific downstream tasks, reducing the need for extensive training on task-specific datasets.

Transfer learning benefits: Pretraining enables the model to capture rich visual features from diverse images, leading to improved generalization and performance on downstream tasks with limited labeled data.

B. Data augmentation Strategies for Better Generalization:

Augmentation techniques: Data augmentation helps increase the diversity of the training data and reduces overfitting. Common techniques include random cropping, horizontal flipping, rotation, scaling, and color jittering. These augmentations are applied to the input images before feeding them into the Vision Transformer model during training.

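CutMix and MixUp: These advanced augmentation techniques build new training examples from pairs of samples. MixUp blends two images pixel by pixel with a weight λ and mixes their labels with the same weight; CutMix pastes a rectangular region of one image onto another and mixes the labels in proportion to the pasted area. Both methods encourage the model to learn robust representations and improve generalization.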
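Horizontal flipping, MixUp, and CutMix can be sketched on toy grayscale images stored as nested lists. The mixing weight λ is drawn from a Beta(α, α) distribution with α = 1 assumed here; `random_box` is a simplified sampler that keeps the box fully inside the image, whereas the CutMix paper samples the box center and clips the box at the borders. All function names are hypothetical.

```python
import random

def horizontal_flip(image):
    """Mirror a (grayscale) H x W image left to right."""
    return [row[::-1] for row in image]

def mix_labels(label_a, label_b, lam):
    """Weighted blend of two one-hot label vectors."""
    return [lam * a + (1 - lam) * b for a, b in zip(label_a, label_b)]

def mixup(image_a, label_a, image_b, label_b, lam):
    """MixUp: blend the two images pixel by pixel and their labels with the same weight."""
    mixed = [[lam * a + (1 - lam) * b for a, b in zip(ra, rb)]
             for ra, rb in zip(image_a, image_b)]
    return mixed, mix_labels(label_a, label_b, lam)

def cutmix(image_a, label_a, image_b, label_b, box):
    """CutMix: paste box = (top, left, height, width) from image_b into a copy of image_a.

    The label weight of image_a is the fraction of pixels that still come from it.
    """
    top, left, h, w = box
    mixed = [row[:] for row in image_a]
    for r in range(top, top + h):
        for c in range(left, left + w):
            mixed[r][c] = image_b[r][c]
    lam = 1 - (h * w) / (len(image_a) * len(image_a[0]))
    return mixed, mix_labels(label_a, label_b, lam)

def random_box(height, width, lam, rng):
    """Simplified sampler: a box covering about (1 - lam) of the image, fully inside it."""
    cut_h = int(height * (1 - lam) ** 0.5)
    cut_w = int(width * (1 - lam) ** 0.5)
    return rng.randint(0, height - cut_h), rng.randint(0, width - cut_w), cut_h, cut_w

rng = random.Random(0)
image_a = [[1.0] * 4 for _ in range(4)]    # toy 4 x 4 grayscale images
image_b = [[0.0] * 4 for _ in range(4)]
label_a, label_b = [1.0, 0.0], [0.0, 1.0]  # one-hot labels for two classes
lam = rng.betavariate(1.0, 1.0)            # lambda ~ Beta(alpha, alpha), alpha = 1 assumed
mixed, mixed_label = mixup(image_a, label_a, image_b, label_b, lam)
cut, cut_label = cutmix(image_a, label_a, image_b, label_b, random_box(4, 4, lam, rng))
```

In both methods the label is mixed with the same weight as the pixels, so the training target reflects how much of each source image the model actually sees.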

C. Regularization Techniques (e.g., dropout, weight decay):

Dropout: Dropout is a regularization technique that randomly sets a fraction of the activations to zero during training, preventing over-reliance on specific features and promoting generalization.

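Weight decay: Weight decay shrinks the model’s weights toward zero at every update, which discourages overly large weights and reduces overfitting. With plain SGD it is equivalent to adding an L2 penalty to the loss function; with adaptive optimizers such as Adam the two differ, which is why the decoupled form (AdamW) is commonly used.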
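Both regularizers fit in a few lines. The dropout function below uses the common "inverted" formulation, which rescales the surviving activations during training so that nothing needs to change at inference, and the update rule shows weight decay as an L2 penalty under plain SGD. The function names and values are illustrative.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate` and scale the
    survivors by 1 / (1 - rate) so the expected activation is unchanged.
    At inference (training=False) the input passes through untouched."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def sgd_step_with_weight_decay(weights, grads, lr, weight_decay):
    """One SGD step on loss + (weight_decay / 2) * ||w||^2.

    The penalty contributes weight_decay * w to each gradient, so every weight
    is also pulled toward zero in proportion to its size.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

rng = random.Random(0)
print(dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng))  # every entry is 0.0 or 2.0
# With a zero loss gradient, weight decay alone shrinks each weight by lr * weight_decay = 5%.
print(sgd_step_with_weight_decay([1.0, -2.0], grads=[0.0, 0.0], lr=0.1, weight_decay=0.5))
```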

D. Attention Mechanisms and Their Variants (e.g., sparse attention):

Sparse attention: Vision Transformers can be computationally expensive because the cost of self-attention grows quadratically with the number of patches. To address this, sparse attention mechanisms have been proposed. These methods restrict each patch to attending to a subset of other patches (for example, those in a local window), reducing computational requirements while trying to preserve the most relevant relationships.

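Axial attention: Axial attention factorizes 2D self-attention into two cheaper passes, one along the height axis (each patch attends to the patches in its column) and one along the width axis (each patch attends to the patches in its row). Combining the two passes still lets information flow across the whole image, while for an h × w grid of N patches the number of query-key pairs drops from N × N to N × (h + w).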
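The savings of these attention patterns can be made concrete by counting how many query-key pairs each one scores on an h × w grid of patches. The sketch below compares full attention, axial attention, and non-overlapping local windows (one simple sparse pattern, with a 7 × 7 window assumed); it counts pairs only and ignores heads, embedding size, and constant factors. The function names are hypothetical.

```python
def attention_pairs(grid_h, grid_w):
    """Query-key pairs scored by full and axial attention on a grid_h x grid_w patch grid."""
    n = grid_h * grid_w
    full = n * n                   # every patch attends to every patch
    axial = n * (grid_h + grid_w)  # one pass along columns plus one along rows
    return full, axial

def local_window_pairs(grid_h, grid_w, window):
    """Pairs for non-overlapping window x window blocks, attending only within a block."""
    assert grid_h % window == 0 and grid_w % window == 0, "grid must tile into windows"
    num_windows = (grid_h // window) * (grid_w // window)
    return num_windows * (window * window) ** 2

for side in (14, 28, 56):
    full, axial = attention_pairs(side, side)
    print(side, full, axial, local_window_pairs(side, side, 7))
```

For the 14 × 14 grid produced by a 224 × 224 image with 16 × 16 patches, full attention scores 38,416 pairs, axial attention 5,488, and 7 × 7 windows 9,604. Doubling the grid side multiplies the full-attention count by 16 but the window count only by 4.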

E. Architectural Modifications and Model Variants (e.g., DeiT, TNT):

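DeiT (Data-efficient Image Transformers): DeiT trains competitive Vision Transformers on ImageNet alone, without large-scale external pretraining data, by combining strong data augmentation and regularization with a distillation-based teacher-student approach. A dedicated distillation token learns to reproduce the predictions of a teacher network (a convolutional network in its best-performing setup), which reduces the data and compute needed to reach competitive accuracy.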

TNT (Transformer in Transformer): TNT refines ViT’s patch-level view by splitting each patch further into smaller sub-patches. An inner transformer models the relationships among the sub-patches within each patch, and an outer transformer models the relationships among the patches, so the model captures both fine local detail and global structure. TNT reported higher ImageNet accuracy than DeiT models of similar computational cost.
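In DeiT, the student has an extra distillation token alongside the class token, each with its own output head. The hard-label distillation objective can then be sketched as the average of two cross-entropy terms: the class-token head learns the true label, and the distillation-token head learns the teacher’s predicted class. The function below is an illustrative sketch of that loss for a single example; the logits are toy values and the function names are hypothetical.

```python
import math

def cross_entropy(logits, label):
    """-log softmax(logits)[label], computed stably via log-sum-exp."""
    peak = max(logits)
    return peak + math.log(sum(math.exp(v - peak) for v in logits)) - logits[label]

def deit_hard_distillation_loss(cls_logits, dist_logits, teacher_logits, label):
    """Average of two cross-entropies: the class-token head learns the true label,
    while the distillation-token head learns the teacher's hard prediction (argmax)."""
    teacher_label = max(range(len(teacher_logits)), key=teacher_logits.__getitem__)
    return 0.5 * cross_entropy(cls_logits, label) + 0.5 * cross_entropy(dist_logits, teacher_label)

# The teacher disagrees with the ground truth here, so the two heads get different targets.
loss = deit_hard_distillation_loss(cls_logits=[2.0, 0.0], dist_logits=[2.0, 0.0],
                                   teacher_logits=[0.0, 1.0], label=0)
```

Because the two heads receive separate targets, the student can learn from the teacher without overwriting the supervision that comes from the true labels.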

These advanced techniques and enhancements in Vision Transformers aim to improve performance, reduce computational complexity, enhance generalization, and enable efficient training on limited labeled data. Researchers are continuously exploring new methods and variations to push the boundaries of Vision Transformer models in computer vision tasks.

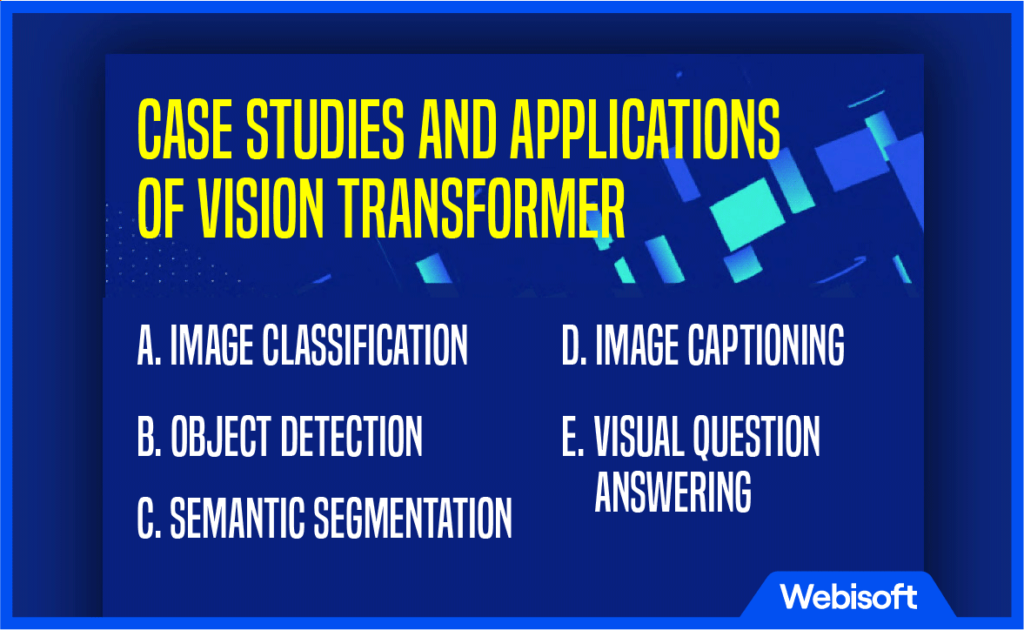

Case Studies and Applications of Vision Transformer

A. Image Classification:

- ImageNet classification: When pretrained on large datasets, Vision Transformers achieve ImageNet accuracy comparable to or better than that of strong convolutional neural networks. They excel in recognizing and classifying objects within images.

- Fine-grained classification: Vision Transformers have been used for fine-grained classification tasks where subtle differences between similar classes need to be distinguished, such as differentiating between species of birds or dog breeds.

B. Object Detection:

- Single-stage object detection: Vision Transformers have been applied to single-stage object detection tasks, where the goal is to detect and localize objects in an image in a single pass. They have shown promising results, with accuracy competitive with established detection architectures such as Faster R-CNN or YOLO.

- Transformer-based detectors: Recent research has explored dedicated transformer-based architectures for object detection, such as DETR (DEtection TRansformer), which combines transformers with bipartite matching and set prediction to achieve end-to-end object detection without the need for anchor boxes.
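The bipartite matching step in DETR pairs each ground-truth object with exactly one prediction so that the total matching cost is minimal. The sketch below is simplified: it uses only an L1 distance between boxes in (cx, cy, w, h) form, whereas DETR’s matching cost also includes class probabilities and a generalized IoU term, and it searches permutations by brute force instead of using the Hungarian algorithm. The function names and box values are hypothetical.

```python
from itertools import permutations

def l1_box_cost(pred, target):
    """L1 distance between two boxes given as (cx, cy, w, h)."""
    return sum(abs(p - t) for p, t in zip(pred, target))

def bipartite_match(predictions, targets):
    """Return (assignment, cost), where assignment[t] is the prediction matched to target t.

    Brute force over permutations, so only suitable for tiny sets. Predictions left
    unmatched would be trained to predict "no object".
    """
    best_cost, best_assignment = float("inf"), None
    for chosen in permutations(range(len(predictions)), len(targets)):
        cost = sum(l1_box_cost(predictions[p], targets[t]) for t, p in enumerate(chosen))
        if cost < best_cost:
            best_cost, best_assignment = cost, chosen
    return best_assignment, best_cost

predictions = [(0.2, 0.2, 0.1, 0.1), (0.7, 0.7, 0.3, 0.3), (0.5, 0.1, 0.2, 0.2)]
targets = [(0.72, 0.68, 0.3, 0.3), (0.21, 0.2, 0.1, 0.1)]
assignment, cost = bipartite_match(predictions, targets)
print(assignment)  # (1, 0): target 0 -> prediction 1, target 1 -> prediction 0
```

Brute force is only practical for a handful of boxes; for realistic sizes, a Hungarian-algorithm solver such as SciPy’s `scipy.optimize.linear_sum_assignment` finds the same optimal assignment in polynomial time.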

C. Semantic Segmentation:

- Transformer-based segmentation encoders: Vision Transformers have been adapted for semantic segmentation by replacing the CNN encoder of a segmentation network with a Vision Transformer. The output patch embeddings are reshaped back into a 2D grid and upsampled by a decoder to produce a prediction for every pixel, so the model can use both local and global context for accurate pixel-wise segmentation.

- Hybrid architectures: Hybrid architectures that combine convolutional neural networks with Vision Transformers have also been proposed for semantic segmentation. This allows the model to leverage the spatial hierarchy learned by CNNs while utilizing the self-attention capabilities of Transformers for capturing long-range dependencies.
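The step that turns a ViT’s token sequence back into a segmentation map can be sketched as a reshape followed by upsampling. This toy version assumes per-patch class scores are already available, takes the argmax per patch, and copies that label to every pixel of the patch (nearest-neighbour upsampling); practical decoders use learned layers and smoother upsampling. The function names are hypothetical.

```python
def tokens_to_grid(token_logits, grid_h, grid_w):
    """Reshape a raster-ordered sequence of per-patch class scores into a grid."""
    assert len(token_logits) == grid_h * grid_w
    return [token_logits[r * grid_w:(r + 1) * grid_w] for r in range(grid_h)]

def upsample_nearest(grid, patch_size):
    """Repeat each grid cell patch_size times along both axes."""
    out = []
    for row in grid:
        expanded = [cell for cell in row for _ in range(patch_size)]
        out.extend([list(expanded) for _ in range(patch_size)])
    return out

def segment(token_logits, grid_h, grid_w, patch_size):
    """Per-pixel label map: the argmax class of the patch each pixel falls in."""
    grid = tokens_to_grid(token_logits, grid_h, grid_w)
    labels = [[max(range(len(c)), key=c.__getitem__) for c in row] for row in grid]
    return upsample_nearest(labels, patch_size)

# 2 x 2 patch grid, 3 classes, 2-pixel patches -> 4 x 4 label map.
token_logits = [[0.1, 2.0, 0.0], [1.5, 0.2, 0.1], [0.0, 0.0, 3.0], [0.3, 0.9, 0.2]]
for row in segment(token_logits, 2, 2, patch_size=2):
    print(row)  # prints [1, 1, 0, 0] twice, then [2, 2, 1, 1] twice
```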

D. Image Captioning:

Vision Transformers have been explored for image captioning tasks, where the goal is to generate natural language descriptions for images. By encoding the image using a Vision Transformer and then using a language generation model like a recurrent neural network or a transformer-based decoder, accurate and contextually relevant captions can be generated.

E. Visual Question Answering:

Vision Transformers have been applied to visual question-answering (VQA) tasks, where the model needs to understand an image and answer questions based on its content. By encoding the image with a Vision Transformer and combining it with a language processing module, the model can effectively reason about the visual information and generate appropriate answers.

The versatility of Vision Transformers allows them to be applied to various computer vision tasks, including image classification, object detection, semantic segmentation, image captioning, and visual question answering.

Their ability to capture global context, together with the insight offered by their attention maps, makes them a promising choice for understanding and analyzing visual data. Ongoing research continues to explore their performance and adaptability in different applications within the field of computer vision.

Limitations and Future Directions

A. Computational Complexity:

- Memory requirements: Vision Transformers often require more memory than traditional convolutional neural networks because the cost of self-attention grows quadratically with the number of patches, which limits their scalability to high-resolution images.

- Computational resources: Training Vision Transformers can be computationally intensive, especially when dealing with large-scale datasets or complex architectures. Efficient implementation and optimization techniques are needed to address these challenges.
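A quick calculation shows why resolution is the main driver of attention memory. The sketch below counts only one layer’s attention weights for a single image, assuming ViT-Base-style settings (16 × 16 patches, 12 heads, one [CLS] token) and 4-byte float32 values; activations, parameters, and optimizer state come on top. The function name is hypothetical.

```python
def attention_matrix_bytes(image_size, patch_size=16, num_heads=12, bytes_per_value=4):
    """Bytes needed to store one layer's attention weights (all heads) for one image.

    N = number of patches + 1 [CLS] token, and each head holds an N x N matrix.
    Activations, parameters, and optimizer state are not counted.
    """
    n = (image_size // patch_size) ** 2 + 1
    return num_heads * n * n * bytes_per_value

for size in (224, 384, 768):
    mib = attention_matrix_bytes(size) / 2**20
    print(f"{size} x {size}: {mib:.1f} MiB per layer")
```

Because the number of tokens grows with the square of the image side and the attention matrix with the square of the number of tokens, the figure rises from about 1.8 MiB at 224 pixels to about 243 MiB at 768 pixels, per layer and per image.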

B. Limited Spatial Invariance:

- CNN strengths: Vision Transformers lack some of the built-in inductive biases of convolutional neural networks. Convolutions process local neighborhoods with weights shared across positions, which gives CNNs translation equivariance and helps them extract local patterns from less data; Vision Transformers must learn such spatial regularities from the training data.

- Hybrid approaches: Combining Vision Transformers with convolutional layers or incorporating spatial information explicitly through architectural modifications can help address this limitation and improve performance.

C. Data Efficiency:

- Training with limited labeled data: Vision Transformers may require large amounts of labeled data to achieve state-of-the-art performance. Training them with limited labeled data remains a challenge, although techniques like pretraining and transfer learning can partially alleviate this issue.

- Semi-supervised and unsupervised learning: Exploring semi-supervised or unsupervised learning methods that leverage self-supervision or weak supervision can enhance the data efficiency of Vision Transformers, allowing them to learn from unlabeled or partially labeled datasets.

D. Interpretability and Explainability:

- Attention visualization: Although the self-attention mechanism offers some interpretability, understanding how the model attends to different image regions, and making its decisions transparent to users, remains an active area of research.

- Explainable predictions: Developing methods to provide explanations or justifications for the model’s predictions can enhance trust and reliability, especially in critical applications where interpretability is crucial.
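One widely used way to turn raw attention into a single relevance map is attention rollout, sketched below in plain Python. It assumes the attention weights of each layer have already been averaged over heads and treats token 0 as the [CLS] token; the toy attention values and function names are made up for illustration. The [CLS] row of the result indicates how much each patch contributes to the final representation, which is what heatmap overlays typically display. Such maps are a heuristic rather than a guaranteed explanation.

```python
def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def normalize_rows(matrix):
    """Rescale each row so it sums to 1."""
    out = []
    for row in matrix:
        total = sum(row)
        out.append([v / total for v in row])
    return out

def attention_rollout(attention_per_layer):
    """Attention rollout over layers, each an N x N head-averaged attention matrix.

    Each layer's attention is mixed 50/50 with the identity to account for the residual
    connection, rows are renormalized, and layers are multiplied in order (first layer first).
    """
    n = len(attention_per_layer[0])
    rollout = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for attn in attention_per_layer:
        mixed = [[0.5 * attn[i][j] + (0.5 if i == j else 0.0) for j in range(n)]
                 for i in range(n)]
        rollout = matmul(normalize_rows(mixed), rollout)
    return rollout

# Token 0 plays the role of [CLS]; tokens 1-3 are patches (toy attention values).
layer1 = [[0.1, 0.6, 0.2, 0.1], [0.25, 0.25, 0.25, 0.25], [0.1, 0.1, 0.7, 0.1], [0.2, 0.2, 0.2, 0.4]]
layer2 = [[0.2, 0.1, 0.6, 0.1], [0.5, 0.5, 0.0, 0.0], [0.3, 0.3, 0.3, 0.1], [0.0, 0.0, 0.0, 1.0]]
rollout = attention_rollout([layer1, layer2])
patch_relevance = rollout[0][1:]  # [CLS] row: contribution of each patch
```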

E. Task-specific Architectural Modifications:

- Customized architectures: Different computer vision tasks may require task-specific architectural modifications to optimize performance. Developing specialized architectures that integrate domain knowledge and leverage the strengths of Vision Transformers can further advance their application in specific tasks.

- Model distillation: Techniques like knowledge distillation can help transfer knowledge from large Vision Transformer models to smaller and more efficient variants, making them more accessible for deployment on resource-constrained devices.
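A common form of knowledge distillation trains the smaller student on a blend of the usual cross-entropy loss and a term that matches the teacher’s temperature-softened output distribution. The function below sketches that classic formulation; the temperature of 4.0 and the blend weight `alpha` of 0.5 are assumed example values, and the function names are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [v / temperature for v in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Blend of hard-label cross-entropy and KL(teacher || student) on softened outputs.

    The KL term is multiplied by temperature**2 so its gradients keep a comparable
    scale as the temperature changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[label])
    return alpha * hard + (1 - alpha) * temperature ** 2 * kl

loss = distillation_loss(student_logits=[1.0, 2.0], teacher_logits=[3.0, 0.0], label=1)
```

A higher temperature flattens the teacher’s distribution, exposing how it ranks the wrong classes; that extra signal is what the student gains over training on hard labels alone.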

F. Multimodal Learning and Integration:

Vision Transformers have primarily been applied to visual tasks, but integrating them with other modalities such as text or audio can enable multimodal learning and reasoning.

Exploring the fusion of Vision Transformers with other models like language models or audio transformers can lead to advancements in areas like image-text understanding or audio-visual tasks.

As the field of Vision Transformers continues to evolve, addressing these limitations and exploring future directions will be crucial. Overcoming computational challenges, improving spatial invariance, enhancing data efficiency, ensuring interpretability, and integrating multimodal learning will pave the way for the broader adoption of Vision Transformers in diverse computer vision applications.

Final Thoughts

In summary, Vision Transformers have ushered in a new era in computer vision, offering fresh insights and pushing the limits of image understanding. Their strong results in tasks like image classification, object detection, semantic segmentation, image captioning, and visual question answering rest on their ability to model global context, while their attention maps offer a degree of interpretability.

We stand on the cusp of a visual revolution, and the trajectory of Vision Transformers is full of promise. With advances in reducing computational complexity, improving spatial invariance, and enhancing data efficiency, these models are likely to keep shaping the future of computer vision.

Ready to leverage the power of Vision Transformers for your business? At Webisoft, we’re primed to help. Our team of experts can deploy these transformative models to provide unparalleled image understanding for your projects. Reach out to us today to learn more.