Have you ever been curious about how computers can understand text, images, sounds, and videos all at once? Well, that’s where multimodal models come into play.

Multimodal models are advanced AI systems that process various types of data, such as text, images, audio, and video, simultaneously. They are used in natural language processing, image analysis, virtual assistants, and other fields where understanding complex multimodal information matters.

In this blog, we’ll explore how multimodal models are used in different fields, how they make your interactions with technology smoother, and why they’re such an exciting part of the future of AI. Let’s start!

Contents

- 1 What is Multimodal Model AI?

- 2 Examples of Multimodal AI Models

- 3 Key Components of Multimodal AI Models

- 4 Benefits of a Multimodal Model

- 5 How Do Multimodal Models Work?

- 6 How to Create a Multimodal Model

- 7 Multimodal AI Applications

- 8 Challenges in Multimodal Learning

- 9 Use Cases of Multimodal Model

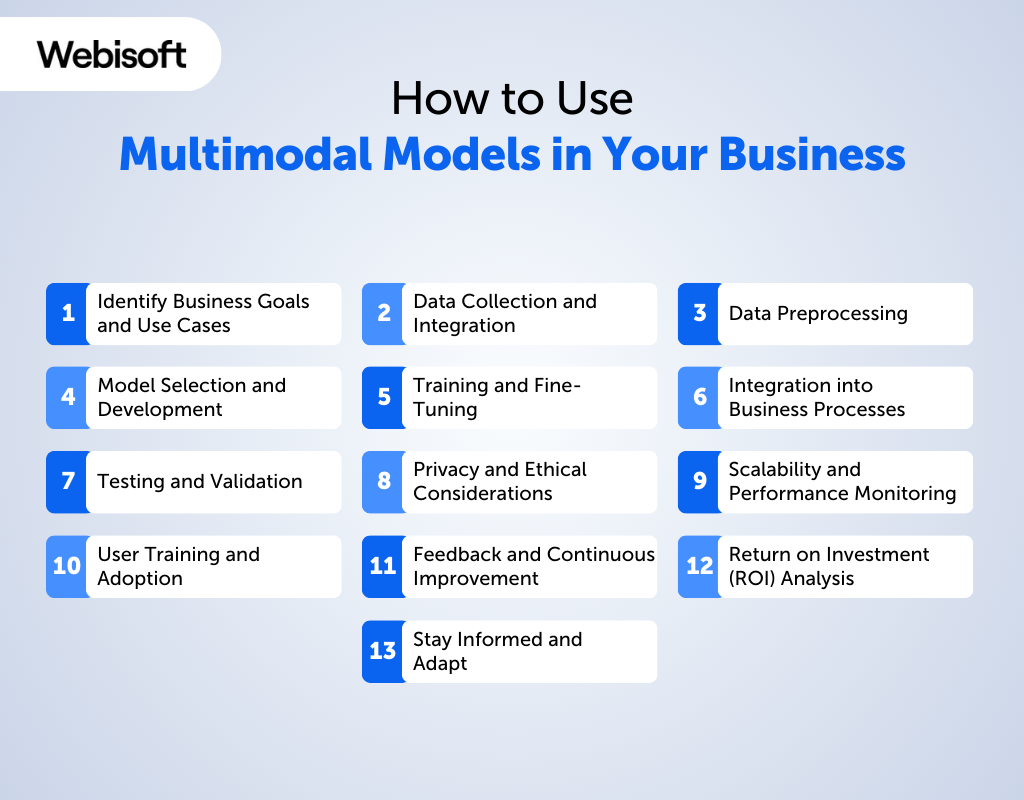

- 10 How to Use Multimodal Models in Your Business

- 10.1 Identify Business Goals and Use Cases

- 10.2 Data Collection and Integration

- 10.3 Data Preprocessing

- 10.4 Model Selection and Development

- 10.5 Training and Fine-Tuning

- 10.6 Integration into Business Processes

- 10.7 Testing and Validation

- 10.8 Privacy and Ethical Considerations

- 10.9 Scalability and Performance Monitoring

- 10.10 User Training and Adoption

- 10.11 Feedback and Continuous Improvement

- 10.12 Return on Investment (ROI) Analysis

- 10.13 Stay Informed and Adapt

- 11 Multimodal vs Unimodal AI Models

- 12 Why is Webisoft the Best for Getting Multimodal AI Services?

- 13 Conclusion

- 14 FAQs

What is Multimodal Model AI?

A Multimodal Model AI is an advanced artificial intelligence system. It is designed to process multiple types of sensory data simultaneously, including text, images, audio, and video.

Unlike traditional unimodal AI systems that focus on one type of data, Multimodal AI models can analyze and interpret various input sources concurrently, which provides a more comprehensive understanding of the data and its context.

These models use deep learning techniques and neural networks to transform and integrate sensory information. This enables them to generate more accurate predictions and insights across different modes of data.

Examples of Multimodal AI Models

Let’s explore some Multimodal AI examples and their applications in various fields:

Transfer Learning Across Data Sources

Deep learning models excel in transferring knowledge across different data sources. For example, a model trained on one organization’s data can be adapted to another’s via transfer learning, reducing development costs and benefiting multiple departments or teams within a company.

Visual Content Analysis

Multimodal deep learning is commonly used in tasks like image analysis, visual question answering, and caption generation. These AI systems are trained on datasets containing labeled images, allowing them to analyze and label unseen images more accurately as they receive better training.

AI Writing Tools

Another example of multimodal models includes AI writing tools that generate content based on specific prompts. Traditionally, these models were trained within specific domains, but recent developments have led to versatile models applicable across various fields.

Key Components of Multimodal AI Models

Here are the key components of Multimodal AI models:

Fusion Mechanisms

Fusion mechanisms are fundamental to multimodal models. They integrate information from different sources using techniques such as concatenation, element-wise addition, or more advanced methods such as attention, to create a coherent representation of diverse data.
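To make the two simplest fusion techniques concrete, here is a minimal, framework-free sketch. The function names and the feature vectors are hypothetical and only for illustration; in a real model the vectors would come from trained encoders.

```python
def fuse_concat(text_features, image_features):
    """Concatenation: keep every feature from both modalities side by side."""
    return list(text_features) + list(image_features)


def fuse_add(text_features, image_features):
    """Element-wise addition: both vectors must have the same length."""
    if len(text_features) != len(image_features):
        raise ValueError("addition needs equal-length feature vectors")
    return [t + i for t, i in zip(text_features, image_features)]


# Hypothetical feature vectors from a text encoder and an image encoder
text_vec = [1.0, 2.0, 3.0]
image_vec = [0.5, 0.5, 0.5]

print(fuse_concat(text_vec, image_vec))  # [1.0, 2.0, 3.0, 0.5, 0.5, 0.5]
print(fuse_add(text_vec, image_vec))     # [1.5, 2.5, 3.5]
```

Concatenation preserves all features but grows the vector, while addition keeps the size fixed and requires both encoders to produce vectors of the same length.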

Computer Vision

Multimodal models tap into advanced computer vision techniques to interpret visual content effectively. Think of Convolutional Neural Networks (CNNs) as the engines that help these models recognize patterns and identify objects in images and videos.

Natural Language Processing (NLP)

With Natural Language Processing (NLP) capabilities, multimodal models become adept at understanding and generating human-like text. Technologies like Recurrent Neural Networks (RNNs) and Transformer architectures, such as BERT, empower these models to excel in comprehending and creating textual content.

Benefits of a Multimodal Model

Multimodal models are a significant AI advancement. They combine different types of data to analyze things more comprehensively, bringing us closer to AI that understands and interacts with the world like humans.

Let’s explore the benefits that multimodal models present:

Contextual Comprehension

Multimodal models have a unique ability to understand context, which is vital for tasks like natural language processing and generating appropriate responses. They achieve this by analyzing both visual and textual data together.

This contextual understanding also benefits conversation-based systems. Multimodal models can use both visual and text cues to generate responses that feel more human-like.

Natural Interaction

Multimodal models improve how we interact with computers. Traditional AI systems struggle to understand us naturally because they rely on just one type of input, like text or voice.

However, multimodal models can combine different types of input, like speech, text, and visual cues. This means they can better understand what we want.

Multimodal models also make it easier to have conversations with computers. A chatbot, for instance, can use these models to understand our messages more naturally.

It can even take into account visual cues like emojis or images to better understand our tone and emotions. This makes the chatbot’s responses more accurate and closer to how a real conversation would go.

Accuracy Enhancement

Multimodal models can improve accuracy by combining different types of data, such as text, voice, images, and video. Drawing on several signals helps them interpret information better, which makes their predictions more precise across a range of tasks.

Additionally, these models are handy when dealing with incomplete or noisy data. They can fill in missing information or correct errors by using insights from different data types.

For instance, in noisy places, they can improve speech recognition by looking at lip movements, resulting in more reliable results.
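One simple way to picture this is "late fusion", where each modality makes its own prediction and the results are blended. The sketch below is only an illustration: the probability tables, the weight, and the function name are made up, and real audio-visual speech recognition systems are considerably more involved.

```python
def late_fusion(audio_probs, visual_probs, audio_weight):
    """Blend two per-word probability tables; audio_weight is in [0, 1]."""
    words = set(audio_probs) | set(visual_probs)
    blended = {
        w: audio_weight * audio_probs.get(w, 0.0)
        + (1.0 - audio_weight) * visual_probs.get(w, 0.0)
        for w in words
    }
    total = sum(blended.values())
    # Renormalize so the blended scores sum to 1
    return {w: p / total for w, p in blended.items()}


# Hypothetical model outputs for one spoken word in a noisy room
audio_probs = {"bat": 0.40, "pat": 0.45, "mat": 0.15}   # audio alone is ambiguous
visual_probs = {"bat": 0.70, "pat": 0.20, "mat": 0.10}  # lip-reading model

# Trust the audio less because the environment is noisy
fused = late_fusion(audio_probs, visual_probs, audio_weight=0.3)
best = max(fused, key=fused.get)
print(best)  # bat
```

On its own the audio model would pick "pat", but once the lip-reading scores are taken into account the fused prediction is "bat".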

Capability Enhancement

Multimodal models make AI systems much more capable. They do this by using data from different sources like text, images, audio, and video to understand the world and its context better. This broader understanding empowers AI systems to perform a wider range of tasks with greater accuracy.

Also, when these models consider contextual data, like the environment or behavior, they gain a more comprehensive understanding of the situation. This results in AI systems making smarter decisions based on a better understanding of what’s happening.

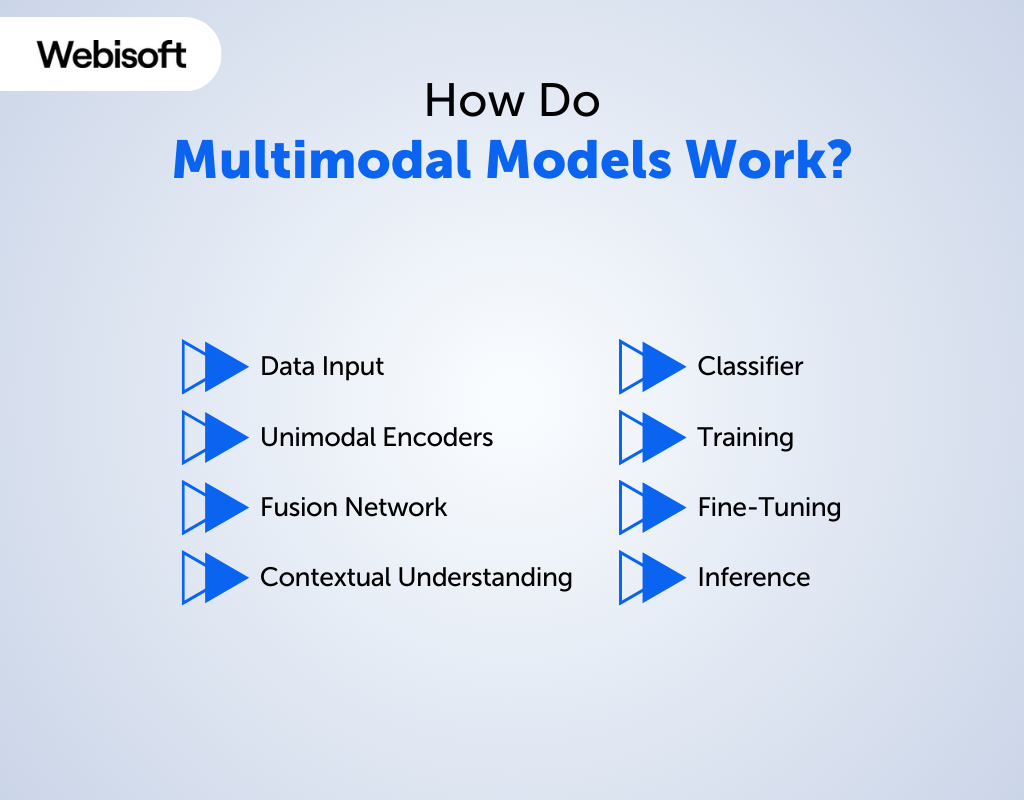

How Do Multimodal Models Work?

Now here’s a closer look at how Multimodal Models work:

Data Input

These models start by taking in data from various sources. This data could be in the form of text, images, audio clips, or video segments, each offering a unique perspective.

Unimodal Encoders

Next, the data goes through what we call “unimodal encoders.” These are like specialized processors.

For example, a text encoder handles text data, while an image encoder deals with images. These encoders pick out important features specific to each data type.

Fusion Network

After being processed by unimodal encoders, data from different sources comes together in what we call a “fusion network.”

This part plays a crucial role by merging the features extracted from each source into a single, unified representation. It uses various techniques, like paying attention to important parts, combining features, and making connections between different types of data.
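As a rough illustration of the "paying attention" idea, the sketch below scores each modality's feature vector against a query vector, turns the scores into weights with a softmax, and returns the weighted sum. The query and feature values are hypothetical; in a trained model they would be learned rather than written by hand.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features_by_modality, query):
    """Weight each modality by its dot product with the query, then sum."""
    names = list(features_by_modality)
    scores = [
        sum(q * f for q, f in zip(query, features_by_modality[name]))
        for name in names
    ]
    weights = softmax(scores)
    fused = [
        sum(w * features_by_modality[name][d] for w, name in zip(weights, names))
        for d in range(len(query))
    ]
    return fused, dict(zip(names, weights))


# Hypothetical encoder outputs and query vector
features = {"text": [1.0, 0.0], "image": [0.0, 1.0]}
fused, weights = attention_fuse(features, query=[2.0, 0.0])
print(weights)  # the text modality receives the larger weight
```

Because the query lines up with the text features, the text modality dominates the fused representation for this input.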

Contextual Understanding

The fusion network helps the model understand the context better by blending insights from various sources. This is super important for tasks that need a complete picture of the data, like describing images, figuring out emotions in text, or understanding natural language.

Classifier

The final piece of the puzzle is the “classifier.” It’s like the decision-maker. It takes the unified representation from the fusion network and makes predictions or classifications.

Depending on the task, it might create descriptions for images, analyze feelings in text, spot objects in images, or do other specific jobs.
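For a classification task, the decision step can be as small as one linear layer followed by picking the highest score. The weights, biases, and labels below are invented for illustration; a real classifier learns them during training.

```python
def classify(fused, weights, biases, labels):
    """Score each class with a linear layer, then return the best label."""
    scores = [
        sum(w * x for w, x in zip(class_weights, fused)) + b
        for class_weights, b in zip(weights, biases)
    ]
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return labels[best_index], scores


# Hypothetical fused representation and classifier parameters
fused = [0.9, 0.1, 0.4]
weights = [[1.0, -1.0, 0.0], [-1.0, 1.0, 0.5]]  # one row per class
biases = [0.0, 0.1]
labels = ["positive", "negative"]

label, scores = classify(fused, weights, biases, labels)
print(label)  # positive
```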

Training

These multimodal models learn by looking at huge sets of data that have many different types of information. During this learning process, the model figures out how to connect and understand data from different sources. This helps it give accurate and context-aware answers when used in real-world situations.

Fine-Tuning

Following the initial training, multimodal models sometimes go through a fine-tuning process. Fine-tuning optimizes the models for particular tasks or situations.

Inference

Once they’re trained and fine-tuned, multimodal models are ready to work with new, unseen data. They can provide insights, create descriptions, answer questions, or do various jobs that need a deep understanding of different types of data.

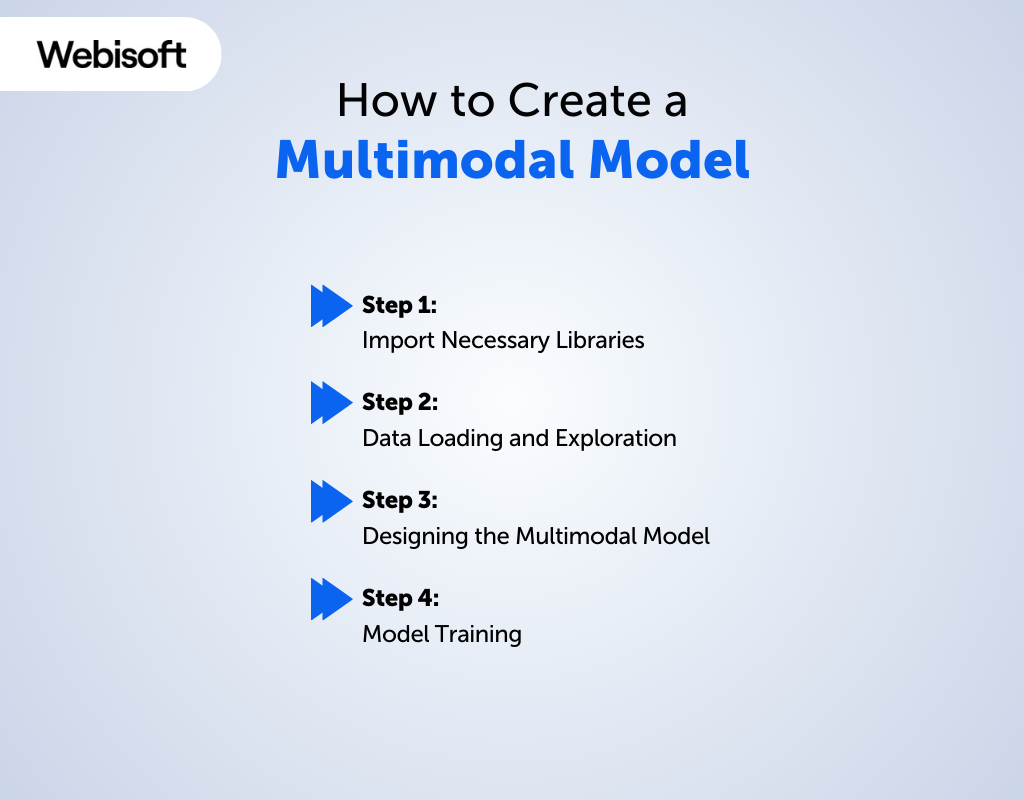

How to Create a Multimodal Model

Creating a multimodal model involves combining various types of data, such as text, images, audio, and video, to build a versatile AI system. Here’s a step-by-step guide on how to create a multimodal model:

Step 1: Import Necessary Libraries

Begin by importing essential libraries, including tools for data manipulation and deep learning. You’ll need libraries like PyTorch, Torchvision (for image-related tasks), and potentially fasttext (for text processing).

Code:

    # Import required libraries
    import torch
    import torchvision
    from torch.utils.data import DataLoader
    import torch.optim as optim
    import torch.nn as nn
    from torchvision import transforms

Step 2: Data Loading and Exploration

Load and preprocess your data, particularly image data. Utilize the torchvision.transforms module to apply transformations like resizing and converting images to tensors. This step prepares your data for training and visualization.

Code:

    # Define image transformations: resize to 224x224 and convert to tensors
    image_transform = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor()
    ])

    # Load and preprocess image data (one sub-folder per class)
    train_dataset = torchvision.datasets.ImageFolder(root='train_data', transform=image_transform)
    val_dataset = torchvision.datasets.ImageFolder(root='val_data', transform=image_transform)

    # Create data loaders
    train_loader = DataLoader(train_dataset, batch_size=32, shuffle=True)
    val_loader = DataLoader(val_dataset, batch_size=32, shuffle=False)

    # Visualize sample images (optional)
    import matplotlib.pyplot as plt

    data_iter = iter(train_loader)
    images, labels = next(data_iter)
    plt.figure(figsize=(20, 5))
    plt.axis('off')
    # make_grid returns a (C, H, W) tensor; imshow expects (H, W, C)
    plt.imshow(torchvision.utils.make_grid(images).permute(1, 2, 0).numpy())
    plt.show()

Step 3: Designing the Multimodal Model

In this crucial step, design the multimodal model architecture. Pay attention to three key aspects:

- Dataset Management: Organize your data using PyTorch’s Dataset class.

- Model Structure: Create the neural network architecture. In this example, we’ll refer to a model named LanguageAndVisionConcat.

- Training Logic: Define how your model will be trained, including loss functions and optimization methods.

Code:

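    # Define the multimodal model: a minimal concatenation-fusion sketch.
    # Assumption: `text` is a batch of pre-computed text vectors of size text_dim; layer sizes are illustrative.
    class LanguageAndVisionConcat(nn.Module):
        def __init__(self, num_classes, text_dim=300, hidden_dim=128):
            super().__init__()
            self.vision = nn.Sequential(  # small CNN image encoder
                nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1), nn.ReLU(),
                nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(16, hidden_dim))
            self.language = nn.Linear(text_dim, hidden_dim)  # text encoder
            self.classifier = nn.Linear(2 * hidden_dim, num_classes)

        def forward(self, image, text):
            # Concatenate the two feature vectors, then classify
            fused = torch.cat([self.vision(image), torch.relu(self.language(text))], dim=1)
            return self.classifier(fused)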
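The Dataset Management point in Step 3 can be sketched without PyTorch. A map-style dataset only needs `__len__` and `__getitem__`, which are the same two methods PyTorch's `Dataset` class expects. The class name, file names, and captions below are hypothetical, and a real dataset would load the image and vectorize the text instead of returning raw values.

```python
class PairedSamples:
    """Map-style dataset: supports len() and integer indexing."""

    def __init__(self, records):
        # Each record is an (image_path, text, label) tuple
        self.records = list(records)

    def __len__(self):
        return len(self.records)

    def __getitem__(self, index):
        image_path, text, label = self.records[index]
        # A real dataset would open and transform the image here,
        # and convert the text into a tensor.
        return image_path, text, label


# Hypothetical samples pairing each image with its text and label
dataset = PairedSamples([
    ("img_001.png", "a cat on a sofa", 0),
    ("img_002.png", "a dog on grass", 1),
])

print(len(dataset))  # 2
print(dataset[1])    # ('img_002.png', 'a dog on grass', 1)
```

Returning an (image, text, label) triple per sample is what lets a training loop unpack each batch into images, texts, and labels.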

Step 4: Model Training

Train your model over a set number of epochs (iterations over the dataset). During each epoch, the model processes data in batches, calculates loss, and updates its parameters.

Additionally, assess your model’s performance using a validation set after each epoch. Here’s a simplified example of this process:

- Define a loss function (e.g., CrossEntropyLoss) and instantiate the model (e.g., LanguageAndVisionConcat).

- Choose an optimizer (e.g., Adam) to update the model’s parameters.

- Create data loaders for both the training and validation datasets.

- Iterate through epochs and batches, calculating loss and updating the model.

- Periodically print training loss for monitoring.

- After each epoch, evaluate the model’s accuracy on the validation set to assess performance.

Code:

    # Define loss function, model, and optimizer
    criterion = nn.CrossEntropyLoss()
    # ImageFolder exposes its class names; set this by hand for a custom dataset
    number_of_classes = len(train_dataset.classes)
    model = LanguageAndVisionConcat(num_classes=number_of_classes)
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    # Train the model
    # Note: this loop expects each batch to be (images, texts, labels), for example
    # from a custom Dataset that pairs every image with its text. The ImageFolder
    # loaders from Step 2 yield only (images, labels).
    num_epochs = 10
    for epoch in range(num_epochs):
        model.train()
        for i, batch in enumerate(train_loader):
            images, texts, labels = batch
            optimizer.zero_grad()
            output = model(images, texts)
            loss = criterion(output, labels)
            loss.backward()
            optimizer.step()
            if i % 100 == 0:
                print(f"Epoch {epoch + 1}, batch {i + 1}: loss {loss.item():.4f}")

        # Evaluate the model on the validation set after each epoch
        model.eval()
        total_correct = 0
        total_samples = 0
        with torch.no_grad():
            for batch in val_loader:
                images, texts, labels = batch
                output = model(images, texts)
                predictions = torch.argmax(output, dim=1)
                total_correct += (predictions == labels).sum().item()
                total_samples += len(labels)
        accuracy = total_correct / total_samples
        print(f"Epoch {epoch + 1} validation accuracy: {accuracy:.4f}")

Following these steps, you can successfully create a multimodal model capable of handling both image and text data, making it a powerful tool for various AI tasks.
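If the validation step feels opaque, it boils down to two operations: pick the highest-scoring class for each sample, then count how often that matches the label. Here is the same logic in plain Python, with made-up scores and labels.

```python
def accuracy(logits_batch, labels):
    """Fraction of samples whose highest-scoring class matches the label."""
    predictions = [
        max(range(len(row)), key=row.__getitem__) for row in logits_batch
    ]
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical model outputs (one row of class scores per sample)
logits = [[2.0, 0.1], [0.3, 1.2], [0.9, 0.8], [0.2, 0.4]]
labels = [0, 1, 1, 1]

print(accuracy(logits, labels))  # 0.75
```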

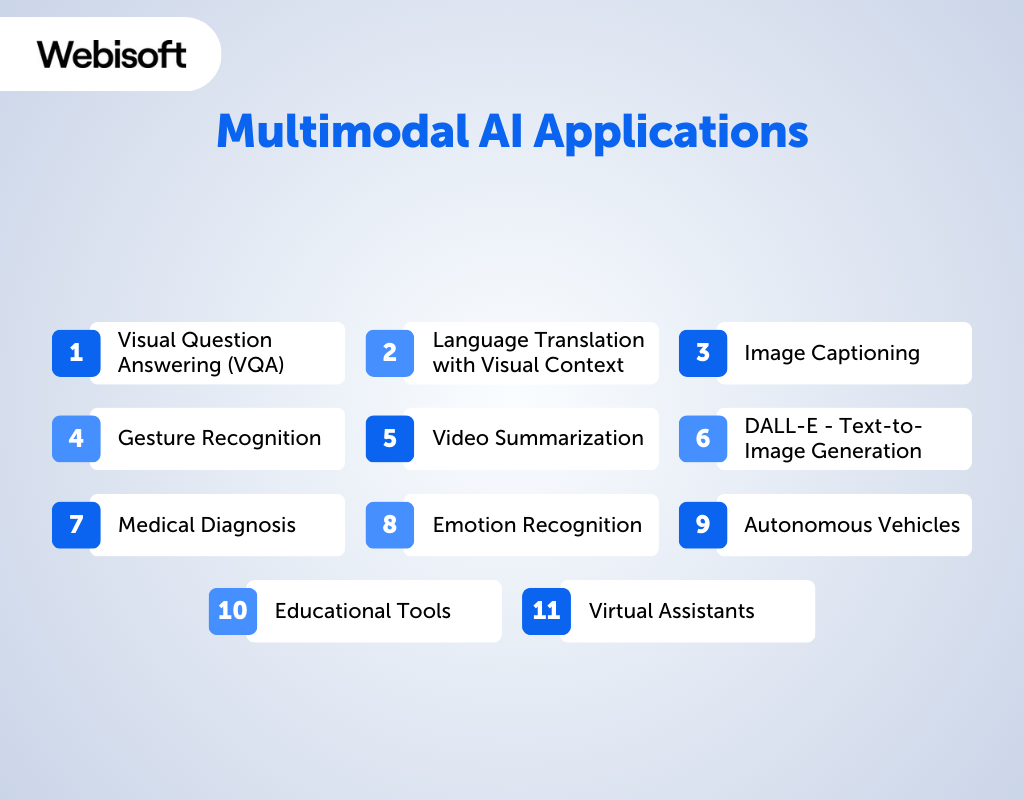

Multimodal AI Applications

Multimodal models are widely applied across various domains. Let’s explore some of their applications:

Visual Question Answering (VQA)

Multimodal models proficiently answer questions related to images by merging visual understanding with natural language processing. This capability is invaluable in interactive systems, educational platforms, and more.

Language Translation with Visual Context

Integrating visual information into language translation models improves the contextual accuracy of translations. Multimodal models consider both textual and visual cues, resulting in more context-aware and relevant translations, particularly beneficial in domains where visual context is crucial.

Image Captioning

Multimodal models excel at generating descriptive captions for images, showcasing a deep understanding of both visual and textual information. They are indispensable in automated image tagging, content recommendation, and enhancing accessibility for visually impaired individuals.

Gesture Recognition

These models interpret and recognize human gestures, playing a pivotal role in sign language translation. Multimodal models bridge the communication gap by processing gestures and converting them into text or speech, fostering inclusive communication.

Video Summarization

Multimodal models possess the ability to summarize lengthy videos by extracting key visual and audio elements. Video summarization streamlines content consumption, facilitates efficient content browsing, and enhances video content management platforms.

DALL-E – Text-to-Image Generation

DALL-E, a remarkable variant of multimodal AI, can generate images from textual descriptions. This groundbreaking technology expands creative possibilities in content creation and visual storytelling, finding applications in art, design, advertising, and more.

Medical Diagnosis

In healthcare, multimodal models assist in medical image analysis by combining data from various sources such as medical scans, patient records, and textual reports. They aid healthcare professionals in making accurate diagnoses and formulating effective treatment plans, ultimately improving patient care.

Emotion Recognition

Multimodal models can detect and comprehend human emotions from diverse sources, including facial expressions, voice tone, and text sentiment. They find applications in sentiment analysis on social media platforms and in mental health support systems that gauge and respond to users’ emotional states.

Autonomous Vehicles

Multimodal models play a pivotal role in the development of autonomous vehicles. They process data from cameras, LiDAR, radar, GPS, and other sensors to navigate, detect obstacles, and make real-time driving decisions. This technology is essential for achieving safe and reliable self-driving cars.

Educational Tools

Multimodal models enhance learning experiences by providing interactive educational content that responds to both visual and verbal cues from students. They are integral to adaptive learning platforms, which dynamically adjust content and difficulty based on students’ performance and feedback.

Virtual Assistants

Powering virtual assistants, multimodal models understand and respond to voice commands while processing visual data for a comprehensive user interaction. They are instrumental in applications like smart home automation, voice-controlled devices, and digital personal assistants.

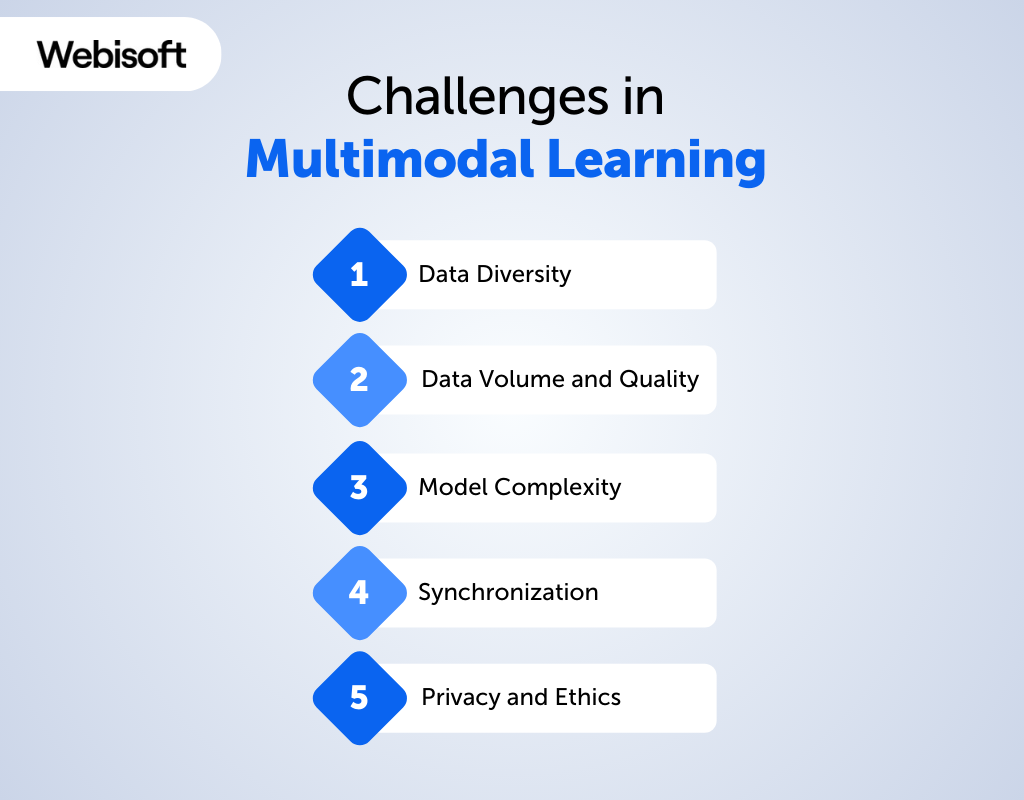

Challenges in Multimodal Learning

Multimodal learning, which involves working with different types of data like text, images, audio, and video all at once, comes with some challenges:

Data Diversity

Different types of data, like text, images, audio, and video, come in different shapes and sizes. Combining all these diverse data sources needs careful preparation and matching.

Data Volume and Quality

Multimodal models often require large and high-quality datasets for effective training. Collecting, verifying, and organizing such extensive datasets can be a time-consuming and resource-intensive task.

Model Complexity

Creating models that can understand and work with diverse data types is like designing a versatile and complex machine – it demands advanced techniques and expertise.

Synchronization

Aligning data from different modalities, such as audio and video, can be challenging. Ensuring that data samples are synchronized accurately is essential for meaningful analysis.
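A common starting point is to pair samples by timestamp. The sketch below matches each video frame to the nearest audio chunk and drops frames with no close match. The timestamps, the tolerance, and the function name are hypothetical, and the audio timestamps are assumed to be sorted.

```python
from bisect import bisect_left


def align_nearest(video_times, audio_times, tolerance):
    """Pair each video frame time with the nearest audio chunk time (seconds)."""
    pairs = []
    for v in video_times:
        i = bisect_left(audio_times, v)
        # Only the neighbors on either side of the insertion point can be nearest
        candidates = audio_times[max(i - 1, 0): i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda a: abs(a - v))
        if abs(nearest - v) <= tolerance:
            pairs.append((v, nearest))
    return pairs


# Hypothetical timestamps in seconds
video_times = [0.00, 0.04, 0.08, 0.50]
audio_times = [0.00, 0.05, 0.10]  # must be sorted

print(align_nearest(video_times, audio_times, tolerance=0.03))
# [(0.0, 0.0), (0.04, 0.05), (0.08, 0.1)]
```

The frame at 0.50 seconds is dropped because no audio chunk lies within the tolerance.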

Privacy and Ethics

Multimodal models often deal with sensitive information, raising ethical concerns and privacy considerations. Safeguarding user data and adhering to ethical guidelines are crucial.

Use Cases of Multimodal Model

Multimodal models are used in different areas, and they significantly improve our capabilities and interactions in multiple ways. Here are some use cases of multimodal models:

Weather Forecasting

Weather experts use many different sources of information, like pictures from space, radar for rain, sensors on the ground, and past weather records, to predict what the weather will be like. This helps us plan for things like outdoor activities or when to carry an umbrella.

Human-Computer Interaction

This is how we interact with computers and devices like smartphones. When we can touch the screen, talk to it, or have it recognize our faces, it makes using technology easier and more fun.

Multimedia Content Creation

People use special computer programs to make videos, presentations, and artwork. These programs let them put together different things like videos, music, text, and pictures to make cool stuff.

Medical Diagnosis

Doctors use many kinds of information to figure out what’s wrong with a patient. This includes things like past health records, physical exams, lab tests, and pictures of the inside of the body. Putting all this together helps doctors make the right diagnosis and treatment plan.

Sensory Integration Devices

Some devices are designed for people who can’t communicate like others. These devices use different ways like pictures, touch, and even talking to help them express themselves and interact with others.

Language Translation

Sometimes we need to understand and talk to people who speak a different language. Translation tools help with this by changing written words into spoken words and vice versa, making it easier for people from different languages to understand each other.

How to Use Multimodal Models in Your Business

Using multimodal models in your business can offer various advantages, but it requires careful planning and execution. Here’s a step-by-step guide on how to effectively use multimodal models for your business:

Identify Business Goals and Use Cases

Begin by defining your specific business objectives and the areas where multimodal models can create value. Identify use cases where combining data from different sources can lead to improved decision-making, customer experiences, or operational efficiency.

Data Collection and Integration

Gather data from various sources and modalities that are relevant to your identified use cases. Ensure the collected data is of high quality, well-annotated, and structured for training your models.

Data Preprocessing

Prepare your data for multimodal model training by performing tasks like data cleaning, alignment, and normalization. Consider employing data augmentation techniques to increase the diversity of your training dataset.
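As a small illustration of cleaning and normalization, the sketch below drops samples that are missing a modality and rescales each modality to the 0 to 1 range, so that no single modality dominates just because of its units. The field names and values are hypothetical.

```python
def drop_incomplete(samples, required_keys):
    """Keep only samples that have a value for every required modality."""
    return [
        s for s in samples
        if all(s.get(key) is not None for key in required_keys)
    ]


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw samples with two modalities on very different scales
samples = [
    {"brightness": 0, "loudness_db": -60.0},
    {"brightness": 128, "loudness_db": None},   # missing audio, dropped
    {"brightness": 255, "loudness_db": 0.0},
    {"brightness": 64, "loudness_db": -30.0},
]

clean = drop_incomplete(samples, ["brightness", "loudness_db"])
brightness = min_max_scale([s["brightness"] for s in clean])
loudness = min_max_scale([s["loudness_db"] for s in clean])

print(len(clean))  # 3
print(loudness)    # [0.0, 1.0, 0.5]
```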

Model Selection and Development

Choose or develop multimodal models that suit your specific use cases and business needs. You can opt for pre-trained models, customize existing ones, or build models from the ground up. Ensure that your chosen models are capable of effectively handling data integration from different sources.

Training and Fine-Tuning

Train your multimodal models using the prepared dataset. Fine-tune the models to optimize their performance for your particular tasks. This may involve iterative experimentation.

Integration into Business Processes

Integrate the trained multimodal models into your existing business processes, applications, and workflows. Collaborate with your IT and development teams to ensure smooth integration with your software systems.

Testing and Validation

Conduct continuous testing and validation to verify that the models perform as expected in real-world scenarios. Evaluate their accuracy, robustness, and reliability and make necessary adjustments based on feedback and results.

Privacy and Ethical Considerations

Address privacy and ethical concerns associated with multimodal data processing. Implement measures to safeguard user data and ensure compliance with data protection regulations.

Scalability and Performance Monitoring

Plan for scalability to accommodate business growth. Continuously monitor the performance of your multimodal models and be prepared to retrain or upgrade them to maintain accuracy and relevance.
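Monitoring can start very simply, for example by tracking accuracy over the most recent predictions and flagging when it drops. The class name, window size, and threshold below are hypothetical choices for illustration, not recommended values.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over a sliding window of recent predictions."""

    def __init__(self, window_size, threshold):
        self.outcomes = deque(maxlen=window_size)
        self.threshold = threshold

    def record(self, was_correct):
        self.outcomes.append(bool(was_correct))

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only raise the flag once the window is full
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.rolling_accuracy() < self.threshold


monitor = AccuracyMonitor(window_size=4, threshold=0.75)
for outcome in [True, True, False, False]:
    monitor.record(outcome)

print(monitor.rolling_accuracy())  # 0.5
print(monitor.needs_retraining())  # True
```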

User Training and Adoption

If your multimodal models are used by employees or customers, provide training and support to ensure users can effectively interact with the technology. User adoption is crucial for realizing the benefits of multimodal models.

Feedback and Continuous Improvement

Establish mechanisms for collecting feedback from users and stakeholders. Use this feedback to drive ongoing improvement and innovation in your multimodal applications.

Return on Investment (ROI) Analysis

Evaluate the ROI of implementing multimodal models by measuring their impact on key business outcomes. Assess whether they have contributed to revenue growth, cost savings, improved customer satisfaction, or other relevant metrics.
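The basic ROI calculation is net benefit divided by cost. The figures below are placeholders, not real project numbers.

```python
def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical figures: 150,000 in measured benefit for 100,000 spent
print(f"{roi(150_000, 100_000):.0%}")  # 50%
```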

Stay Informed and Adapt

Keep yourself updated on the latest developments, research, and best practices in the field of multimodal AI. Be prepared to adapt and refine your multimodal strategies in response to evolving technology and business needs.

Multimodal vs Unimodal AI Models

Unimodal and multimodal models are two distinct methods for creating Artificial Intelligence (AI) systems. Here’s a straightforward comparison:

| Aspect | Multimodal AI | Unimodal AI |
| --- | --- | --- |
| Applications | Suitable for tasks needing multiple types of input, like image and video captioning | Ideal for tasks involving a single type of input, like sentiment analysis |
| Resources | Need more computational resources due to complexity | Require fewer resources due to simpler processing |
| Data Input | Utilize multiple types of data input (e.g. text, images, audio) | Rely on a single type of data input (e.g. text or images only) |
| AI Models | Examples include CLIP, DALL-E, METER, ALIGN, SwinBERT | Examples include BERT, ResNet, GPT-3, YOLO |
| Information Processing | Handle multiple modalities simultaneously for a richer understanding | Process a single modality, limiting the depth of understanding |
| Interaction | Support natural interaction through multiple communication modes | Limit interaction to a single mode such as text input |

Why is Webisoft the Best for Getting Multimodal AI Services?

Webisoft stands out as the best choice for getting Multimodal AI services for several compelling reasons. Some of them are:

Experienced Team

Webisoft has a team of skilled professionals with extensive experience in Multimodal AI. Our expertise enables us to tackle complex challenges and provide effective solutions for your business.

Cutting-Edge Technology

Webisoft stays up-to-date with the latest advancements in AI technology. We invest in research and development to offer solutions that align with current industry trends, ensuring you receive the most innovative AI services.

Customized Solutions

At Webisoft we understand that every business is unique. We work closely with you to understand your specific needs, challenges, and industry context. This personalized approach ensures that our AI solutions are a perfect fit for your requirements.

Proven Track Record

Webisoft has a history of successfully delivering Multimodal AI projects across various sectors. Our track record demonstrates our ability to make a meaningful impact on organizations through AI solutions.

Ethical Practices

Webisoft places a strong emphasis on data privacy and ethical AI practices. We maintain strict data security standards, ensuring that your data is managed with integrity and responsibility. By choosing Webisoft, you can trust that your information is in safe hands.

Conclusion

To sum it up, in this article, we’ve explored multimodal models. We’ve seen how they’re used across different industries and how they improve how we interact with technology. These models can understand various types of data like text, images, sounds, and videos all at once, which is incredibly useful in today’s data-driven world.

Whether it’s in healthcare, self-driving cars, creative content generation, or making user experiences better, multimodal models are changing the game.

If you’re thinking about using these models in your business, our team at Webisoft is here to help. Get in touch, and let’s unlock the full potential of multimodal AI models for your company.

FAQs

Is ChatGPT a multimodal model?

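It depends on the version. The original ChatGPT was a text-only language model that processes and generates text. Later versions, built on models such as GPT-4 with vision and GPT-4o, also accept image and audio input, which makes them multimodal.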

Do Multimodal Models have potential applications in the field of art and creativity?

Absolutely, Multimodal Models can aid in creative content generation, helping artists, designers, and content creators explore new realms of expression.

Can Multimodal Models in AI be used for creative content generation?

Yes, Multimodal Models like DALL-E can generate creative content by transforming textual descriptions into images, expanding possibilities in art and design.