Generative AI Stack: Architecture, Layers, and How It Works

- January 19, 2026

Generative AI is no longer just about powerful models. What actually determines success is the generative AI stack behind those models, including how data, infrastructure, and systems work together in production. Many teams discover this the hard way.

A model demo may look impressive, but without the right stack, performance, reliability, and costs quickly spiral out of control. This article breaks down the generative AI stack clearly. You will learn its architecture, key layers, and how it works end to end, without buzzwords or hand-waving.

Contents

- 1 What is a Generative AI stack?

- 2 Core Purpose of a Generative AI Stack

- 2.0.1 Establishes a complete execution path for generative workloads

- 2.0.2 Separates responsibilities across technical layers

- 2.0.3 Enables controlled use of large foundation models

- 2.0.4 Supports scalability without degrading system behavior

- 2.0.5 Creates operational visibility and long-term maintainability

- 3 Build generative AI with clear enterprise boundaries.

- 4 Generative AI Stack Architecture: Key Layers

- 5 Application Frameworks in a Generative AI Stack

- 6 How a Generative AI Stack Works End to End

- 7 How to Choose the Right Generative AI Stack

- 7.1 Align stack choices with your use case requirements

- 7.2 Assess data sensitivity and privacy requirements

- 7.3 Evaluate model selection and customization needs

- 7.4 Prioritize infrastructure scalability and cost efficiency

- 7.5 Balance managed services with custom engineering

- 7.6 Ensure operational visibility and monitoring support

- 7.7 Evaluate ecosystem support and integration capabilities

- 7.8 Plan for security, compliance, and governance

- 7.9 Align stack complexity with team capabilities

- 8 How Webisoft Builds Production-Ready Generative AI Stacks

- 8.1 Discovery and Strategic Alignment

- 8.2 AI Stack Architecture and Blueprinting

- 8.3 Data Preparation and Quality Engineering

- 8.4 Custom Model Development and Fine-Tuning

- 8.5 Integration with Existing Systems

- 8.6 Production Deployment and Scaling

- 8.7 Monitoring, Retraining, and Optimization

- 8.8 Security, governance, and compliance built in

- 9 Build generative AI with clear enterprise boundaries.

- 10 Conclusion

- 11 Frequently Asked Questions

What is a Generative AI stack?

A generative AI stack is the complete set of technologies, tools, and components used to build, deploy, and operate AI systems that create new content, such as text, images, audio, or code, rather than only analyzing or classifying existing data.

It is not just about the AI model itself. It includes the layers that allow a generative AI solution to function in real-world scenarios, from infrastructure and data processing to application frameworks and deployment. At its core, the stack follows a layered architecture.

Each layer performs a distinct role, including compute for model processing, data preparation, model hosting or tuning, workflow orchestration, and delivery of AI outputs through applications. All components must work together to ensure the system generates content reliably, efficiently, and at scale.

In essence, a generative AI stack is what supports language models, image generators, and multimodal systems in real-world use. It allows organizations to move from experimental prototypes to production-ready generative AI solutions.

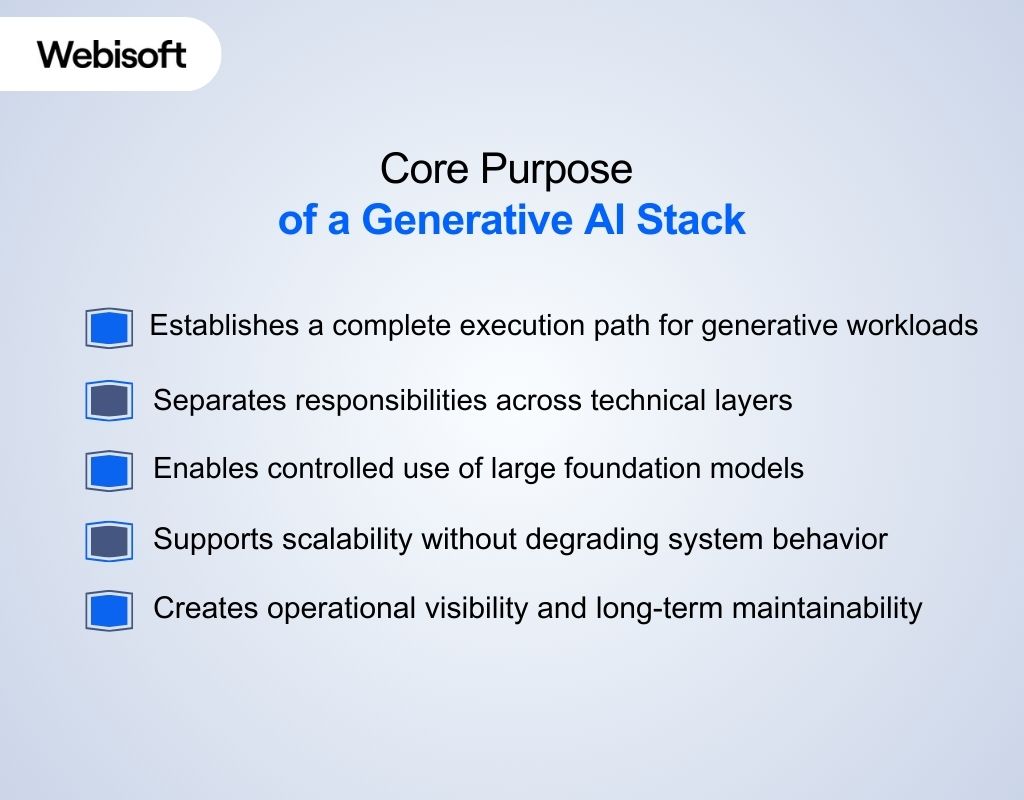

Core Purpose of a Generative AI Stack

The core purpose of a generative AI stack is to turn models into reliable production systems. It defines how compute, data, models, application logic, and operations interact under real usage constraints. Its key purposes are:

Establishes a complete execution path for generative workloads

The stack defines how inputs move through data retrieval, model inference, orchestration logic, and output delivery. This prevents fragmented systems where models operate without context, control, or predictable behavior.

Separates responsibilities across technical layers

A generative AI stack assigns clear responsibilities to infrastructure, data handling, models, and application control layers. This separation allows teams to modify one layer without destabilizing the entire system.

Enables controlled use of large foundation models

The stack governs how models access data, execute prompts, and return outputs within defined boundaries. This control is important when models operate with enterprise data or user-facing applications.

Supports scalability without degrading system behavior

The stack defines how systems scale inference, manage latency, and handle concurrent requests. Without this structure, performance degrades as usage increases.

Creates operational visibility and long-term maintainability

A well-defined stack makes system behavior observable across inference, cost, output quality, and failures. This visibility supports debugging, iteration, and long-term system ownership.

Build generative AI with clear enterprise boundaries.

Design, deploy, and scale secure generative AI systems customized to your business.

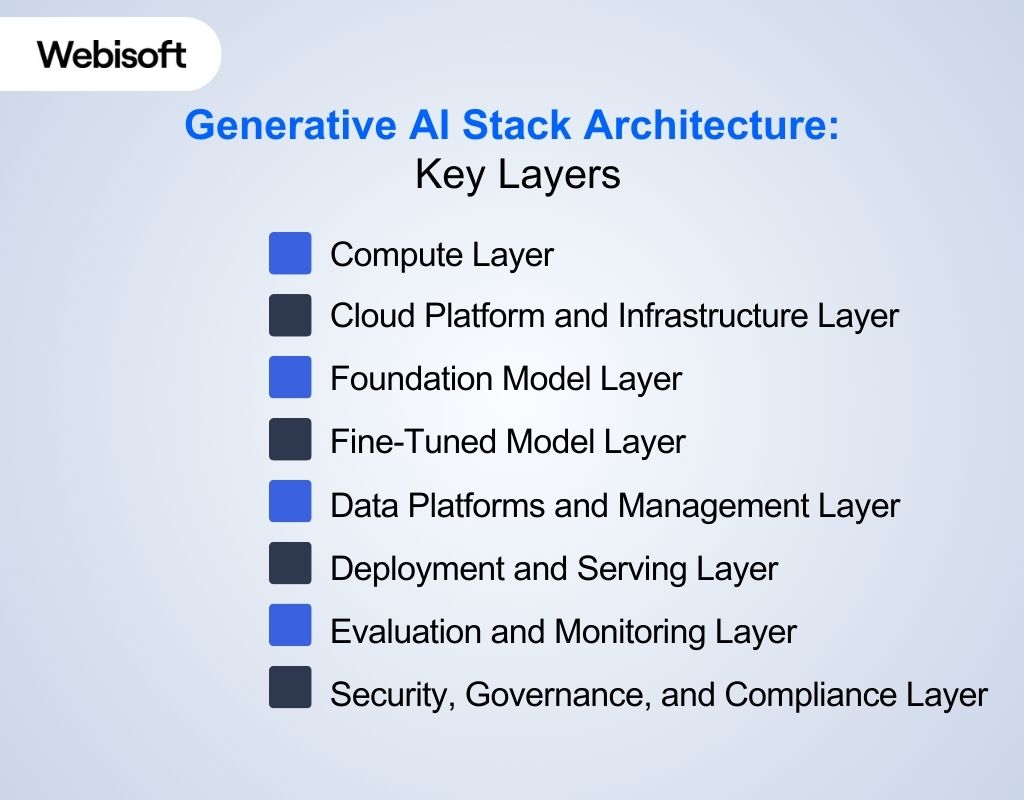

Generative AI Stack Architecture: Key Layers

Generative AI stack architecture defines how production systems organize components for generative workloads. Here are the key generative AI stack layers that structure compute, data, models, applications, deployment, and operations.

Compute Layer

The compute layer forms the physical and virtual foundation of the generative AI stack. It provides the processing capacity required for model training, fine-tuning, and inference workloads. Generative models, especially large language models, rely on high-performance resources such as GPUs, TPUs, or specialized accelerators to handle parallel computation and memory-intensive operations.

This layer directly impacts inference speed, throughput, and concurrency handling. Compute limitations affect how many requests a system can process, how large a context window it supports, and how predictable response times remain under load. Decisions at this layer also influence batching strategies, memory allocation, and cost efficiency in production environments.
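As a rough illustration of how compute limits bound context length and concurrency, the sketch below estimates key-value cache memory for a transformer decoder. It uses the common back-of-envelope formula (keys and values stored per layer, per KV head, per token). The model dimensions and the free-memory figure are hypothetical and are not taken from any specific model or GPU.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_value: int = 2) -> int:
    """Estimate KV-cache size: keys and values (x2) per layer, KV head, and token."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_value


def max_concurrent_sequences(free_bytes: int, per_sequence_bytes: int) -> int:
    """How many sequences of that length fit in the memory left after weights."""
    return free_bytes // per_sequence_bytes


GIB = 1024 ** 3

# Hypothetical model: 32 layers, 8 KV heads of dimension 128, fp16 values.
per_sequence = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=8192)
print(per_sequence / GIB)                                   # 1.0 (GiB per 8K-token sequence)
print(max_concurrent_sequences(20 * GIB, per_sequence))     # 20, assuming 20 GiB remain free
```

Doubling the context length doubles the per-sequence figure, which is why context window size and concurrency trade off against each other on fixed hardware.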

Cloud Platform and Infrastructure Layer

The cloud platform layer sits above raw compute and provides scalable infrastructure services that support storage, networking, security, and resource orchestration. Cloud providers offer elastic provisioning, allowing systems to scale resources dynamically based on demand rather than fixed capacity planning.

Hyperscalers such as AWS, Azure, and Google Cloud offer managed compute, security layers, and networking that help systems scale efficiently. This layer reduces operational complexity by abstracting infrastructure management tasks such as provisioning, networking configuration, and access control.

It also provides integration with monitoring, logging, and identity systems that support production-grade deployments. Reliable interaction between this layer and compute resources is critical for system stability and performance.

Foundation Model Layer

The foundation model layer contains the pretrained generative models that power content generation. These models are trained on large datasets and serve as the base capability for text generation, image synthesis, code generation, or multimodal outputs.

Organizations typically choose between externally hosted proprietary models and self-hosted open-source alternatives. This decision affects data privacy, operational control, cost structure, and compliance posture. Model characteristics such as latency, supported context length, output quality, and language coverage play a major role in architectural decisions at higher layers.

Fine-Tuned Model Layer

Foundation models often require adaptation to meet specific business or domain requirements. The fine-tuned model layer focuses on customizing base models using domain-specific datasets, task-specific objectives, or supervised training signals. Fine-tuning introduces additional architectural considerations, including training pipelines, dataset versioning, model lifecycle management, and validation processes.

This layer improves relevance and consistency for targeted use cases while increasing system complexity. Proper isolation and version control at this layer are essential to avoid regressions in production behavior.

Data Platforms and Management Layer

The data layer manages how information enters, moves through, and is accessed by the generative AI system. It handles ingestion, cleaning, transformation, storage, and retrieval of both structured and unstructured data. This layer is especially important for runtime context delivery in generative systems.

Key components include data pipelines, vectorization processes, vector databases, retrieval systems, and context management mechanisms. The quality and structure of data at this layer directly influence output accuracy, relevance, and consistency. Weak data foundations often lead to hallucinations, outdated responses, or inconsistent system behavior.
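To make the retrieval components concrete, here is a minimal in-memory sketch of what a vector database does at query time: store embeddings next to text and return the entries most similar to a query vector. The class name, the three-dimensional vectors, and the sample texts are all made up for illustration; a real system would use an embedding model and a dedicated vector store.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """Hypothetical stand-in for a vector database (brute-force search)."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float], str]] = []

    def add(self, doc_id: str, vector: List[float], text: str) -> None:
        self._items.append((doc_id, vector, text))

    def search(self, query: List[float], k: int = 2) -> List[Tuple[float, str, str]]:
        scored = [(cosine(query, vec), doc_id, text) for doc_id, vec, text in self._items]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


store = TinyVectorStore()
store.add("refunds", [0.9, 0.1, 0.0], "Toy passage about refunds.")
store.add("shipping", [0.1, 0.9, 0.0], "Toy passage about shipping.")
store.add("privacy", [0.0, 0.1, 0.9], "Toy passage about privacy.")

top = store.search([0.8, 0.2, 0.0], k=1)
print(top[0][1])  # refunds
```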

Deployment and Serving Layer

The deployment layer governs how models and supporting services are exposed to users and applications. It includes model servers, API endpoints, traffic routing, load balancing, and container orchestration systems.

This layer ensures that generative AI systems remain available under varying workloads while meeting latency and reliability requirements. It also supports controlled rollout strategies, version upgrades, and rollback mechanisms. Deployment decisions affect system resilience, response times, and the ability to handle real-world usage spikes.
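One way to picture a controlled rollout is percentage-based canary routing. The sketch below hashes a user ID into one of 100 buckets so the same user always lands on the same version, and raising the percentage only ever adds users to the candidate. The function names and the "stable"/"candidate" labels are assumptions for illustration, not a specific serving platform's API.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def choose_version(user_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent of users to the candidate model version."""
    return "candidate" if bucket(user_id) < canary_percent else "stable"


# Rollback is just setting the percentage back to 0.
print(choose_version("user-42", canary_percent=0))    # stable
print(choose_version("user-42", canary_percent=100))  # candidate
```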

Evaluation and Monitoring Layer

Generative AI systems require continuous evaluation due to the variability of their outputs. This layer monitors performance, output quality, usage patterns, cost metrics, and failure conditions over time.

Evaluation mechanisms include automated metrics, human review processes, and feedback loops that track drift or degradation. Monitoring data such as latency trends, token consumption, and anomaly alerts helps teams maintain system reliability and align outputs with business expectations as usage evolves.

Security, Governance, and Compliance Layer

Security and governance span the entire stack and enforce controls across all architectural layers. This layer manages data access, encryption, identity controls, audit trails, and regulatory compliance requirements. Governance policies define acceptable system behavior, user permissions, and data handling rules.

This layer also supports risk mitigation through logging, access monitoring, and compliance validation. Strong governance ensures generative AI systems can operate safely in regulated or enterprise environments.

Application Frameworks in a Generative AI Stack

Application frameworks act as the execution backbone of a generative AI stack. They connect models to applications so that generative AI functions as a real, usable system. Without this layer, generative AI remains a set of isolated model calls rather than a functional application.

Orchestration of Workflows and Pipelines

Generative AI workflows often involve multiple stages such as input processing, context retrieval, inference, post-processing, and output validation. Application frameworks define and manage these execution pipelines, enabling:

- Sequential and conditional workflow steps

- Interaction with databases or knowledge stores

- Chaining of model calls with enrichment steps

- Integration with caching or performance optimization modules

Without framework orchestration, developers would resort to custom scripting that is harder to test and maintain as systems scale.
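The idea of sequential and conditional steps can be sketched in a few lines. In this hypothetical pipeline each step receives and returns a state dictionary, and a step can set `stop` to short-circuit the rest (here, on a cache hit). The step names, the cache contents, and the stubbed model call are assumptions for illustration and do not reflect any particular framework.

```python
from typing import Callable, Dict, List

Step = Callable[[Dict], Dict]


def run_pipeline(request: Dict, steps: List[Step]) -> Dict:
    """Run steps in order; a step may set state['stop'] to skip the rest."""
    state = dict(request)
    for step in steps:
        state = step(state)
        if state.get("stop"):
            break
    return state


def normalize(state: Dict) -> Dict:
    return {**state, "query": state["query"].strip().lower()}


def check_cache(state: Dict) -> Dict:
    cache = {"what is rag?": "Retrieval-augmented generation."}  # toy cache
    hit = cache.get(state["query"])
    return {**state, "answer": hit, "stop": True} if hit else state


def call_model(state: Dict) -> Dict:
    # Stub: a real step would call a model here.
    return {**state, "answer": f"[model answer to: {state['query']}]"}


steps = [normalize, check_cache, call_model]
print(run_pipeline({"query": "  What is RAG? "}, steps)["answer"])
print(run_pipeline({"query": "Define embeddings"}, steps)["answer"])
```

Because each step is a plain function, it can be unit-tested in isolation, which is the maintainability benefit the paragraph above describes.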

Model Abstractions and Standardized Interfaces

Frameworks abstract away the differences between multiple model backends. They allow applications to:

- Support proprietary APIs and self-hosted models interchangeably

- Swap or upgrade models with minimal code changes

- Centralize prompt templates and response formats

- Maintain consistent handling of tokens, contexts, and error states

This abstraction is crucial because foundation models vary widely in APIs, response formats, context window behavior, and operational constraints.
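A minimal sketch of such an abstraction, using Python's `typing.Protocol`: application code depends only on a `complete` method, so a hosted API client and a self-hosted model can be swapped without touching the caller. Both backends here are stubs with hypothetical names; no real provider SDK is used or implied.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Completion:
    text: str
    model: str


class TextModel(Protocol):
    def complete(self, prompt: str) -> Completion: ...


class HostedApiModel:
    """Stand-in for a proprietary API client; makes no network call."""

    def complete(self, prompt: str) -> Completion:
        return Completion(text=f"hosted:{prompt}", model="hosted-stub")


class SelfHostedModel:
    """Stand-in for a locally served open-source model."""

    def complete(self, prompt: str) -> Completion:
        return Completion(text=f"local:{prompt}", model="local-stub")


def answer(model: TextModel, question: str) -> str:
    """Application code: one prompt format, any backend."""
    return model.complete(f"Answer briefly: {question}").text


print(answer(HostedApiModel(), "What is a vector database?"))
print(answer(SelfHostedModel(), "What is a vector database?"))
```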

Tool and Service Integration

In real systems, generative AI applications rarely operate in isolation. Frameworks enable integration with:

- External APIs such as search engines, CRMs, and knowledge graphs

- Database systems for structured and unstructured data

- Authentication and access control services

- Logging, monitoring, and telemetry infrastructure

These connectors are not simple adapters. They enforce contracts about how data flows, how retries are handled, and how policies are applied before and after model calls.
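As one example of a retry contract, the sketch below wraps any connector call with bounded retries and exponential backoff. `TransientError`, `call_with_retries`, and the flaky test function are hypothetical names; the `sleep` parameter is injectable so the policy can be tested without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Raised by a connector for failures that are worth retrying."""


def call_with_retries(fn: Callable[[], T], attempts: int = 3,
                      base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on TransientError with delays of base_delay * 2**n."""
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


def make_flaky(failures: int) -> Callable[[], str]:
    """Build a function that fails `failures` times, then succeeds."""
    remaining = {"count": failures}

    def fn() -> str:
        if remaining["count"] > 0:
            remaining["count"] -= 1
            raise TransientError("temporary failure")
        return "ok"

    return fn


delays = []
print(call_with_retries(make_flaky(2), sleep=delays.append))  # ok
print(delays)                                                 # [0.5, 1.0]
```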

Error Handling, Guardrails, and Safety Controls

Generative AI systems must remain safe and compliant during production use. Frameworks embed execution guardrails that:

- Check for inappropriate or unsafe outputs

- Monitor latency and handle fallback strategies

- Validate responses before they reach users or downstream systems

They also centralize rule sets that enforce enterprise policies, minimizing the risk of misbehavior when models generate unpredictable content.
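A guardrail of this kind can be sketched as a wrapper that validates a model response and substitutes a fallback when generation fails or the output breaks a rule. The single blocked pattern (strings shaped like a US Social Security number), the length limit, and the fallback message are illustrative assumptions, not a complete safety policy.

```python
import re
from typing import Callable

# Illustrative rule set: block anything shaped like 123-45-6789.
BLOCKED_PATTERNS = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]
FALLBACK = "Sorry, I can't help with that request."


def validate_output(text: str, max_chars: int = 500) -> bool:
    """Reject empty, oversized, or policy-violating responses."""
    if not text.strip() or len(text) > max_chars:
        return False
    return not any(pattern.search(text) for pattern in BLOCKED_PATTERNS)


def guarded(generate: Callable[[str], str], prompt: str) -> str:
    """Return the model output only if it passes validation."""
    try:
        text = generate(prompt)
    except Exception:
        return FALLBACK  # model or network failure: degrade gracefully
    return text if validate_output(text) else FALLBACK


print(guarded(lambda p: "Your order has shipped.", "status?"))
print(guarded(lambda p: "The number is 123-45-6789.", "status?"))
```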

Testing, Versioning, and Deployment Support

Effective frameworks support systematic testing and version control of:

- Workflow definitions

- Prompt templates

- Model configurations

- Integration connectors

This helps teams manage changes over time, roll out updates with confidence, and maintain reproducibility across environments.
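For prompt templates specifically, one lightweight approach is to derive a version identifier from the template text itself, so any edit produces a new version that can be pinned, tested, and rolled back. The `PromptRegistry` class below is a hypothetical sketch built on the standard library's `string.Template` and `hashlib`; it is not a feature of any particular framework.

```python
import hashlib
from string import Template
from typing import Dict, Tuple


class PromptRegistry:
    """Store prompt templates under (name, content-hash version) keys."""

    def __init__(self) -> None:
        self._templates: Dict[Tuple[str, str], Template] = {}

    def register(self, name: str, text: str) -> str:
        """Register a template and return its version (first 8 hex chars of SHA-256)."""
        version = hashlib.sha256(text.encode("utf-8")).hexdigest()[:8]
        self._templates[(name, version)] = Template(text)
        return version

    def render(self, name: str, version: str, **values: str) -> str:
        """Render a pinned version; raises KeyError for unknown versions or fields."""
        return self._templates[(name, version)].substitute(**values)


registry = PromptRegistry()
v1 = registry.register("summarize", "Summarize: $text")
v2 = registry.register("summarize", "Summarize in one sentence: $text")

# Both versions stay available, so a rollout can be reverted by pinning v1 again.
print(registry.render("summarize", v1, text="quarterly report"))
print(registry.render("summarize", v2, text="quarterly report"))
```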

How a Generative AI Stack Works End to End

A generative AI stack works as a coordinated system in which the architectural layers hand off to one another during execution. The steps below trace a request through those layers as it would flow in production.

1. Input and Data Preparation

The process begins with input intake and data preparation, where the system collects raw user requests or data signals. Inputs may originate from application interfaces, web forms, IoT streams, or enterprise systems. At this stage, the system:

- Validates inputs for correctness and formatting

- Sanitizes and normalizes raw data

- Identifies relevant context based on business rules

Data platforms then structure this information, pulling from transactional data, document stores, knowledge bases, or vector databases. Modern generative use cases often depend on high-quality embeddings or indexed context at runtime to ground model outputs in relevant facts. This stage is crucial because poor data preparation leads to irrelevant or unsafe model responses.
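The validation and normalization steps listed above might look like the following for plain-text input. The length limit and the specific cleaning rules are assumptions chosen for illustration; real systems would add checks specific to their inputs.

```python
import unicodedata

MAX_INPUT_CHARS = 2000  # hypothetical limit


def prepare_input(raw: str) -> str:
    """Validate and normalize a raw user request before retrieval or inference."""
    if not isinstance(raw, str):
        raise TypeError("input must be a string")
    text = unicodedata.normalize("NFKC", raw)
    # Drop control characters but keep whitespace so words stay separated.
    text = "".join(ch for ch in text if ch.isprintable() or ch.isspace())
    text = " ".join(text.split())  # collapse runs of whitespace
    if not text:
        raise ValueError("input is empty after cleaning")
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError("input exceeds the maximum length")
    return text


print(prepare_input("  Hello\t\tworld\x00 "))  # Hello world
```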

2. Contextual Retrieval and Enrichment

Once data is prepared, the stack executes context retrieval. For tasks requiring domain knowledge or long histories, models alone are insufficient without proper context. Retrieval may include:

- Vector search over embeddings

- Lookups in structured databases

- Document or passage selection from large corpora

This enriched context is packaged with the original input to form an enhanced request. Without this enrichment, generative systems often hallucinate or produce inconsistent results. The enriched context becomes the foundation for inference.
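Packaging retrieved context with the original input usually means fitting the best passages into a fixed budget. The sketch below does this greedily by relevance score. It uses a character budget as a simple stand-in for a token budget, and the prompt layout is an assumption rather than a required format.

```python
from typing import List, Tuple


def build_request(question: str, passages: List[Tuple[float, str]],
                  max_context_chars: int = 200) -> str:
    """Pack the highest-scoring passages that fit the budget into one prompt."""
    chosen: List[str] = []
    used = 0
    for _score, text in sorted(passages, key=lambda p: p[0], reverse=True):
        if used + len(text) > max_context_chars:
            continue  # skip passages that would overflow the budget
        chosen.append(text)
        used += len(text)
    context = "\n".join(f"- {text}" for text in chosen)
    return f"Context:\n{context}\n\nQuestion: {question}"


passages = [
    (0.5, "Orders ship within two days."),
    (0.9, "Refunds are issued to the original payment method."),
    (0.1, "Our office is closed on public holidays."),
]
print(build_request("How are refunds paid?", passages, max_context_chars=80))
```

With an 80-character budget, the two highest-scoring toy passages fit (51 and 28 characters) and the third is dropped.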

3. Model Invocation and Inference

At the heart of the workflow, the generative model layer receives structured prompts enhanced with context. The stack handles:

- Model selection based on task requirements

- Prompt construction and templating

- Passing enriched inputs to the chosen model

Depending on performance, privacy, and cost, systems may use proprietary APIs or self-hosted models. Some scenarios execute multiple models sequentially or in combination, such as one model for summarization and another for classification. This phase is where the generative core produces outputs. Proper API handling, error checking, and retry logic are essential to maintain reliability.
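The summarization-then-classification example can be sketched as a small task registry plus a chain. Both "models" below are deterministic stubs so the flow is runnable. The task names and the keyword rule are illustrative assumptions; a real system would register model clients instead.

```python
from typing import Callable, Dict


def summarize_stub(text: str) -> str:
    """Stand-in for a summarization model: keep the first sentence."""
    return text.split(".")[0].strip() + "."


def classify_stub(text: str) -> str:
    """Stand-in for a classification model: a single keyword rule."""
    return "billing" if "invoice" in text.lower() else "general"


MODELS: Dict[str, Callable[[str], str]] = {
    "summarize": summarize_stub,
    "classify": classify_stub,
}


def invoke(task: str, text: str) -> str:
    """Select the model registered for a task and run it."""
    if task not in MODELS:
        raise ValueError(f"no model registered for task {task!r}")
    return MODELS[task](text)


def summarize_then_classify(ticket: str) -> Dict[str, str]:
    """Chain two model calls: the summary becomes the classifier's input."""
    summary = invoke("summarize", ticket)
    return {"summary": summary, "label": invoke("classify", summary)}


print(summarize_then_classify("My invoice is wrong. Please fix it soon."))
```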

4. Post-Processing and Output Structuring

Raw model outputs rarely match application requirements directly. The stack applies post-processing to:

- Normalize or filter responses

- Apply business rules and format results

- Enforce safety policies (e.g., content screening)

This stage ensures generated outputs adhere to enterprise constraints, such as legal requirements, tone standards, or user experience guidelines. It is especially important for customer-facing systems where unfiltered output can have reputational or compliance risks.
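As a sketch of output structuring, the function below extracts a JSON object from a raw model response (models often surround JSON with extra prose), normalizes the fields, and redacts email addresses as an example business rule. The field names `answer` and `confidence` and the redaction rule are assumptions for illustration.

```python
import json
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def postprocess(raw: str) -> dict:
    """Extract, normalize, and filter a structured answer from raw model text."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])
    answer = str(data.get("answer", "")).strip()
    answer = EMAIL.sub("[redacted email]", answer)  # example policy: hide emails
    return {"answer": answer, "confidence": float(data.get("confidence", 0.0))}


raw_output = 'Sure! {"answer": " Contact bob@example.com ", "confidence": "0.8"} Hope that helps.'
print(postprocess(raw_output))
```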

5. Delivery and Application Integration

After processing, the result is delivered to the requesting application or service. This may involve:

- REST/GraphQL APIs

- Event streams to downstream systems

- UI components in web or mobile platforms

The integration layer ensures that applications receive responses in the expected format and that errors or fallbacks are handled gracefully. This phase also captures metrics related to latency, usage, and failures.

6. Monitoring, Logging, and Feedback Loops

Production systems must be observable. Effective stacks record:

- Model performance metrics

- Token usage and cost data

- Latency and throughput statistics

- Output quality signals

Logs and telemetry feed into dashboards or alerting systems to detect anomalies. Feedback loops allow teams to identify drift, regressions, or unsafe outputs and adapt workflows accordingly. This ongoing monitoring supports continuous improvement and model governance.
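A small aggregation over per-call records shows how the metrics above could be derived. The record fields, the nearest-rank p95 calculation, and the per-1,000-token prices are assumptions for illustration; the prices are made up and do not reflect any provider.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CallRecord:
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int
    ok: bool


def summarize_calls(records: List[CallRecord],
                    price_in_per_1k: float, price_out_per_1k: float) -> dict:
    """Compute p95 latency, error rate, and token cost for a batch of calls."""
    if not records:
        raise ValueError("no records to summarize")
    latencies = sorted(r.latency_ms for r in records)
    rank = (95 * len(latencies) + 99) // 100  # nearest-rank p95, 1-based
    tokens_in = sum(r.prompt_tokens for r in records)
    tokens_out = sum(r.completion_tokens for r in records)
    return {
        "p95_latency_ms": latencies[rank - 1],
        "error_rate": sum(not r.ok for r in records) / len(records),
        "cost": tokens_in / 1000 * price_in_per_1k + tokens_out / 1000 * price_out_per_1k,
    }


# Toy data: 20 calls with latencies 100..2000 ms, one of which failed.
records = [CallRecord(100.0 * i, 1000, 500, ok=(i != 7)) for i in range(1, 21)]
report = summarize_calls(records, price_in_per_1k=0.5, price_out_per_1k=1.5)
print(report)
```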

7. Governance and Safety Controls

Throughout the end-to-end flow, governance policies are enforced to:

- Control access to sensitive data

- Apply usage limits based on roles

- Enforce compliance with industry standards
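Role-based usage limits, for instance, can be reduced to a counter checked before each model call. The roles, limits, and class name below are hypothetical, and the sketch omits time windows and persistence that a production limiter would need.

```python
from typing import Dict

ROLE_LIMITS = {"viewer": 2, "analyst": 5}  # hypothetical requests per window


class UsageLimiter:
    """Allow each user a number of requests determined by their role."""

    def __init__(self, limits: Dict[str, int]) -> None:
        self._limits = limits
        self._used: Dict[str, int] = {}

    def allow(self, user: str, role: str) -> bool:
        limit = self._limits.get(role, 0)  # unknown roles get no access
        used = self._used.get(user, 0)
        if used >= limit:
            return False
        self._used[user] = used + 1
        return True


limiter = UsageLimiter(ROLE_LIMITS)
print([limiter.allow("dana", "viewer") for _ in range(3)])  # [True, True, False]
print(limiter.allow("eve", "guest"))                        # False
```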

These controls operate at multiple stages, from data ingestion to output delivery, ensuring that the entire stack adheres to security and compliance requirements.

Understanding how a generative AI stack works is only useful when it can be applied correctly in real environments. Webisoft’s Generative AI development services help organizations design and implement production-ready generative AI stacks that translate architecture into reliable, scalable systems.

How to Choose the Right Generative AI Stack

Choosing the right generative AI stack requires balancing technical feasibility, business goals, costs, and long-term operations. It involves selecting the right mix of infrastructure, data strategy, models, operational tools, and governance based on real constraints. This section breaks down key factors to consider in making that choice.

Align stack choices with your use case requirements

Start with the problem you are trying to solve. Every generative AI system has different needs. Some require fast responses, others need higher accuracy, larger context windows, or multimodal outputs. When these needs are clear early, the stack stays focused and avoids unnecessary complexity.

Assess data sensitivity and privacy requirements

Data sensitivity shapes many stack decisions. If your application handles confidential or regulated data, self-hosted models or isolated environments may be required. Where data lives, who can access it, and how it is protected directly affect whether cloud, hybrid, or on-prem components make sense.

Evaluate model selection and customization needs

Model choice is not just about capability. General-purpose foundation models may work for broad tasks, while domain-specific use cases often need fine-tuning. Proprietary APIs are easier to start with but limit control and cost predictability. Open-source models offer flexibility but require more maintenance. Model size, latency, and context limits should guide decisions.

Prioritize infrastructure scalability and cost efficiency

Generative AI usage can grow quickly and unevenly. The stack should scale without causing cost surprises. This includes planning for GPU availability, accelerator options, and hybrid deployments. Costs should be estimated across inference usage, storage, networking, and data transfer, not compute alone.
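A simple planning model makes the "not compute alone" point concrete by summing inference, storage, and data transfer. Every figure below (request volume, token counts, and all prices) is a made-up placeholder to be replaced with your own provider's rates.

```python
def monthly_cost(requests_per_day: int, tokens_in: int, tokens_out: int,
                 price_in_per_million: float, price_out_per_million: float,
                 storage_gb: float, storage_price_per_gb: float,
                 egress_gb: float, egress_price_per_gb: float,
                 days: int = 30) -> dict:
    """Estimate monthly spend across inference, storage, and data transfer."""
    requests = requests_per_day * days
    inference = (requests * tokens_in / 1_000_000 * price_in_per_million
                 + requests * tokens_out / 1_000_000 * price_out_per_million)
    storage = storage_gb * storage_price_per_gb
    transfer = egress_gb * egress_price_per_gb
    return {
        "inference": inference,
        "storage": storage,
        "data_transfer": transfer,
        "total": inference + storage + transfer,
    }


estimate = monthly_cost(
    requests_per_day=10_000, tokens_in=800, tokens_out=200,
    price_in_per_million=1.0, price_out_per_million=4.0,   # placeholder prices
    storage_gb=500, storage_price_per_gb=0.25,
    egress_gb=200, egress_price_per_gb=0.5,
)
print(estimate)  # total is 705.0 with these placeholder inputs
```

In this toy scenario, output tokens cost as much as input tokens despite being a quarter of the volume, and storage plus transfer account for roughly a third of the total.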

Balance managed services with custom engineering

Managed services can speed up development and reduce operational effort, especially for hosting, monitoring, and vector databases. Custom solutions provide more control but demand more engineering work. The right balance depends on timelines, team experience, and how much operational complexity you can manage.

Ensure operational visibility and monitoring support

Once deployed, generative AI systems must be observable. Logging and monitoring help track latency, costs, and output behavior. Without visibility, it becomes difficult to identify failures, detect model drift, or maintain consistent system performance over time.

Evaluate ecosystem support and integration capabilities

A generative AI stack must work with existing systems. This includes databases, CRMs, identity systems, and internal tools. Strong APIs and modular integrations make future expansion easier. Vendor stability, ecosystem maturity, and community support also matter for long-term reliability.

Plan for security, compliance, and governance

Security and compliance should be considered from the beginning. Access controls, encryption, audit logs, and policy enforcement protect data and users. Regulatory requirements influence how data is processed and stored, making governance a core part of stack design.

Align stack complexity with team capabilities

Finally, consider who will build and maintain the stack. Highly customized systems require experienced engineers and ongoing effort. Managed solutions reduce technical barriers but may limit flexibility. Training, documentation, and long-term ownership should be planned alongside adoption.

How Webisoft Builds Production-Ready Generative AI Stacks

Choosing the right generative AI stack is only valuable when it is implemented correctly in real environments. At Webisoft, we turn those architectural decisions into production-ready systems by combining AI engineering, data expertise, and long-term operational planning.

Discovery and Strategic Alignment

At Webisoft, we begin with in-depth discovery to understand your business objectives, existing systems, and data maturity. This ensures the AI stack design aligns with real use cases and measurable outcomes rather than abstract concepts.

AI Stack Architecture and Blueprinting

Our architects design the full stack blueprint, defining how compute, data pipelines, models, and operational components integrate. This plan covers performance, scalability, and compliance needs before any coding begins.

Data Preparation and Quality Engineering

Data readiness is an important focus, with Webisoft refining, cleaning, validating, and structuring your data to ensure high-quality model inputs. This minimizes downstream errors and improves contextual accuracy.

Custom Model Development and Fine-Tuning

Webisoft selects or develops models based on your domain, using fine-tuning to ensure outputs match business language, tone, and expectations. This includes integrating large language models (LLMs) suited to your use cases.

Integration with Existing Systems

Models and components are not standalone; Webisoft integrates them with your ERP, CRM, or core platforms so they enhance workflows without disruption. This supports unified data flow and practical system adoption.

Production Deployment and Scaling

We deploy generative AI solutions using scalable infrastructure strategies that support cloud, hybrid, or on-prem setups. Deployment includes containerization, CI/CD pipelines, and automated scaling to handle real usage patterns.

Monitoring, Retraining, and Optimization

Post-launch, we track performance metrics like latency, accuracy, and cost, with retraining or fine-tuning as needed. This ensures the stack remains reliable and adapts to evolving data patterns.

Security, governance, and compliance built in

We embed access controls, encryption, audit logging, and governance policies across the stack. This ensures your generative AI systems remain secure, compliant, and auditable in production.

Discovery only works when assumptions are validated against real systems and data. Connect with Webisoft to assess your architecture, data readiness, and use cases, and confirm whether a generative AI stack is viable before design and implementation begin.

Conclusion

A strong AI initiative does not succeed because of a single model or tool. It succeeds when the underlying systems are designed to handle scale, change, and real-world constraints. Clarity around architecture, data flow, and operational discipline is what separates lasting systems from short-lived experiments.

For teams that want to take the generative AI stack beyond theory, Webisoft provides hands-on expertise to design and implement production-ready solutions. We help organizations move from understanding to execution, building systems that perform reliably as complexity and demand grow.

Frequently Asked Questions

What is full-stack generative AI?

Full-stack generative AI refers to the complete system that combines data, models, infrastructure, orchestration, deployment, and monitoring. It enables teams to build, run, and maintain generative AI applications reliably in production environments.

How is a generative AI stack different from a traditional AI stack?

A generative AI stack is built for content generation and reasoning, not prediction or classification. It requires orchestration, context handling, and output control that traditional AI stacks do not prioritize.

Can a generative AI stack work without fine-tuning models?

Yes. Many systems rely on retrieval-based context and prompt control instead of fine-tuning. Fine-tuning becomes necessary when domain specificity or strict output behavior is required.