It’s hard to deny the buzz around generative AI nowadays. It’s a tech marvel that’s proven its worth by creating high-quality content autonomously, be it text, visuals, or sound. Industries across the spectrum – think marketing, healthcare, software, or finance – are exploring how to leverage it for their benefit. In a business setting, generative AI models are changing the game by automating monotonous tasks while showcasing smart decision-making abilities. Consider chatbots or predictive analytics tools; they’re making business operations smarter and smoother.

Still, developing models that can consistently deliver coherent, context-appropriate output remains a challenge in the field of generative AI. This is where pre-trained models come into the picture. They’re powerful tools, trained using enormous data sets, and capable of generating text that resembles human language. However, these models might not always be perfect fits for all applications or sectors. In such cases, fine-tuning of these pre-trained models becomes essential.

Fine-tuning means continuing to train a pre-trained model on fresh, specialized data so that it becomes better suited to a specific task or sector. With the growing interest in generative AI applications, fine-tuning has emerged as a preferred method to boost the performance of pre-trained models.

Why Do We Fine-Tune Pre-Trained Models?

But what exactly are pre-trained models, and why should we bother fine-tuning them? Imagine a pre-trained model as a knowledgeable and talented artist who has already learned a great deal about a specific task. These models have been trained on vast amounts of data, spending hours upon hours learning patterns, nuances, and intricacies. They have become experts in their domain, whether it’s generating text, synthesizing images, or even composing music.

However, like any artist, a pre-trained model has its own style and tendencies. Its outputs may be impressive in general yet not match precisely what you need. This is where fine-tuning comes into play: it lets you mold and shape a pre-trained model, turning a generalist into a specialist tailored to your needs.

By fine-tuning a pre-trained model, you can imbue it with your own creative touch, guiding it to generate content that aligns with your vision. Whether you want to create personalized storytelling, design custom images, or even generate entire virtual worlds, fine-tuning enables you to harness these pre-trained models’ immense potential and make them your own.

In this blog, we’ll delve into the intricacies of fine-tuning pre-trained models for generative AI applications. We’ll explore the benefits of this process, examine the techniques involved, and provide you with valuable insights and best practices to help you navigate the challenges along the way.

Join us as we dive into the fascinating realm of fine-tuning for generative AI applications. Get ready to reshape the boundaries of what’s possible and unleash your imagination like never before!

Understanding Pre-Trained Models

Explanation of Pre-Trained Models and Their Benefits

Pre-trained models are like the superheroes of the AI world. They have undergone extensive training on vast amounts of data, learning to understand and mimic patterns, styles, and structures. These models have already mastered a particular task, be it generating text, creating images, or even playing games.

The beauty of pre-trained models lies in their ability to generalize knowledge and capture the essence of the data they were trained on.

They possess an innate understanding of what makes a piece of writing coherent, a painting visually appealing, or a melody harmonious. This knowledge is distilled into their complex neural networks, which can then be used as powerful tools by developers, researchers, and artists alike.

By leveraging pre-trained models, developers can save valuable time and computational resources. Instead of starting from scratch and training a model from the ground up, they can build upon the foundations laid by pre-existing models. This jumpstart significantly accelerates the development process, allowing for quicker iterations and experimentation.

Commonly Used Pre-Trained Models in Generative AI Applications

In the realm of generative AI applications, there are several popular pre-trained models that have garnered widespread recognition and adoption. Let’s explore a few of them:

- GPT (Generative Pre-trained Transformer): GPT models, such as GPT-2 and GPT-3, have revolutionized natural language processing tasks. These models can generate coherent and contextually relevant text, making them ideal for tasks like text completion, conversation generation, and language translation.

- StyleGAN (a style-based generator architecture for generative adversarial networks): StyleGAN is a remarkable pre-trained model used for image synthesis. It allows users to control various aspects of generated images, such as their style, color palette, and even specific features. StyleGAN has found applications in creating realistic portraits, designing virtual environments, and generating unique artwork.

- VQ-VAE (Vector Quantized Variational Autoencoder): VQ-VAE is a model used for image and audio generation. It encodes data into a discrete set of learned codes and decodes those codes back into high-quality reconstructions. VQ-VAE has been explored in applications such as image compression, music generation, and even deepfake detection.

These examples merely scratch the surface of the myriad pre-trained models available across different domains. Each model possesses its unique characteristics and capabilities, offering a rich tapestry of options for developers and artists seeking to unlock their creative potential.

Examples of Successful Applications Using Pre-Trained Models

The impact of pre-trained models can be witnessed in a wide range of successful applications. Here are a few notable examples:

- Chatbots and Virtual Assistants: Companies have used pre-trained models like GPT to create intelligent chatbots and virtual assistants that can engage in human-like conversations, providing personalized recommendations, customer support, and even acting as creative writing partners.

- Art and Design: Artists have harnessed the power of pre-trained models like StyleGAN to create stunning visuals, generate unique designs, and explore new artistic frontiers. These models have helped artists push boundaries, blend styles, and generate novel combinations that challenge traditional notions of art.

- Content Generation: From generating news articles and blog posts to composing poetry and music, pre-trained models have proven instrumental in automating content creation. They can generate text that mimics specific writing styles or genres, empowering content creators to produce vast amounts of high-quality material efficiently.

The use cases for pre-trained models in generative AI applications are vast and continue to expand as researchers and developers explore new possibilities. These models serve as powerful catalysts for creativity, providing a solid foundation for ground-breaking innovations.

In the next section, we’ll dive into fine-tuning pre-trained models, exploring how we can mold them to suit our specific needs and make them our own.

Fine-Tuning Pre-Trained Models

Definition and Purpose of Fine-Tuning

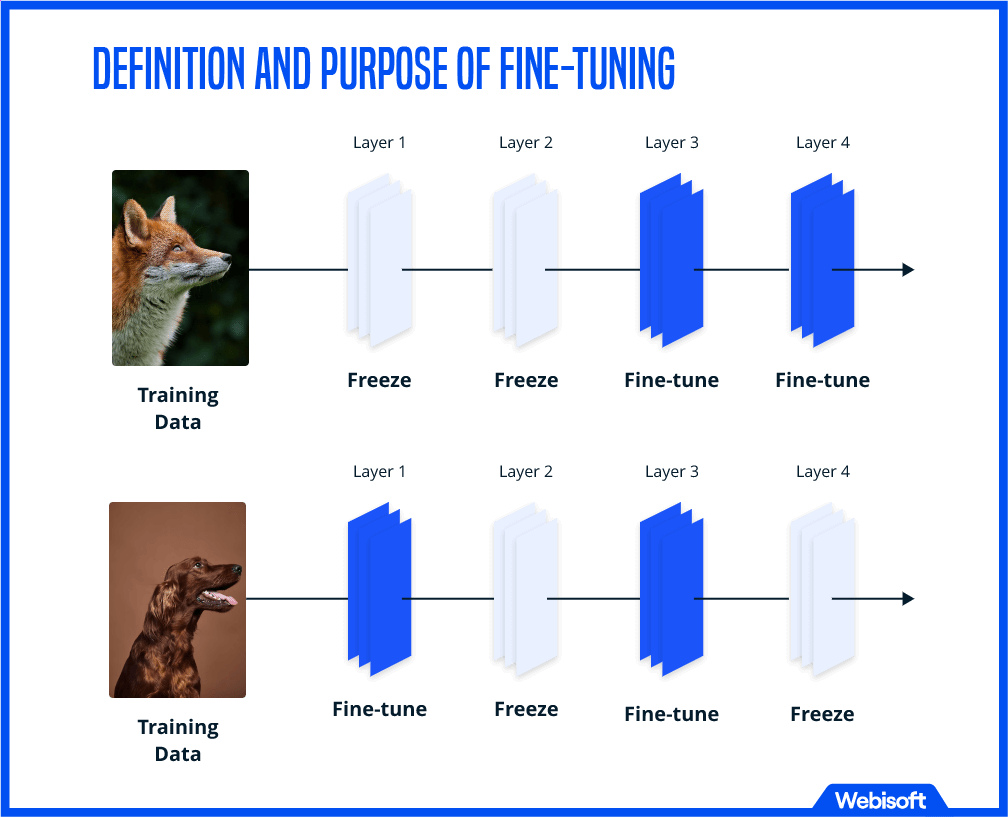

Fine-tuning is the process of taking a pre-trained model and further training it on a specific task or dataset. While pre-trained models are already highly capable, fine-tuning allows us to tailor them to our specific requirements and enhance their performance on targeted tasks.

The purpose of fine-tuning is twofold: adaptation and specialization. By fine-tuning a pre-trained model, we adapt its knowledge to the specific nuances and patterns of our dataset. This process enables the model to grasp the intricacies of our data domain, leading to more accurate and contextually relevant outputs.

Furthermore, fine-tuning allows us to specialize the pre-trained model for a particular task. It helps the model to focus its knowledge and adapt its behavior to generate outputs that align with our desired outcomes.

Benefits of Fine-Tuning Pre-Trained Models

- Reduced Training Time and Resource Requirements: Fine-tuning leverages the knowledge already present in a pre-trained model, saving significant time and computational resources compared to training from scratch. It allows us to build upon the existing foundations, accelerating the development process and enabling quicker iterations.

- Improved Performance on Specific Tasks: Fine-tuning allows us to enhance the performance of pre-trained models on targeted tasks by adapting them to our specific data distribution. The model’s ability to learn from our dataset and specialize in the desired task leads to more accurate and tailored outputs.

- Transfer of Knowledge and Generalization: Pre-trained models have already learned a great deal from a vast amount of data, capturing the essence of the domain they were trained on. Fine-tuning enables us to transfer this knowledge to our specific task, benefiting from the model’s generalization abilities while still customizing it for our needs.

- Customization and Control: Fine-tuning empowers us to customize the behavior of pre-trained models according to our preferences. We can shape the outputs, control various aspects such as style, tone, or content, and ensure that the generated content aligns with our specific requirements.

Factors to Consider Before Fine-Tuning

- Dataset Selection and Preparation: The choice of dataset is vital for successful fine-tuning. It should represent the target task, be adequately diverse, and be large enough to capture the required patterns. Proper data preprocessing and cleaning are also essential to ensure the model adapts effectively to the data.

- Architecture Selection: Depending on the task at hand, it’s important to choose the appropriate architecture that complements the pre-trained model. Different architectures excel in different domains, and selecting the right one can significantly impact the fine-tuning process and overall performance.

- Hyperparameter Tuning: Fine-tuning often requires adjusting various hyperparameters such as learning rate, batch size, and regularization techniques. Careful experimentation and tuning of these hyperparameters are necessary to achieve optimal performance and prevent issues like overfitting or underfitting.

- Computational Resources: Fine-tuning can be computationally intensive, requiring substantial resources in terms of processing power, memory, and storage. Before initiating the process, ensuring access to sufficient computational resources is essential to carry out the training effectively.

By considering these factors and making informed decisions, we can set the stage for a successful fine-tuning process that maximizes the potential of our pre-trained models.
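To make the dataset preparation factor above more concrete, here is a minimal sketch of cleaning a list of text examples and holding out a validation split. The function name `prepare_dataset`, the 10% validation fraction, and the fixed seed are illustrative assumptions, not a prescribed pipeline; real projects usually need task-specific cleaning on top of this.

```python
import random
from typing import List, Tuple


def prepare_dataset(
    texts: List[str], val_fraction: float = 0.1, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Clean raw text examples and split them into (train, validation).

    Hypothetical helper for illustration: collapses whitespace, drops
    empty strings and exact duplicates, then shuffles reproducibly.
    """
    seen = set()
    cleaned = []
    for text in texts:
        normalized = " ".join(text.split())  # collapse runs of whitespace
        if normalized and normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(cleaned)

    # Hold out at least one example whenever there is more than one.
    n_val = max(1, int(len(cleaned) * val_fraction)) if len(cleaned) > 1 else 0
    return cleaned[n_val:], cleaned[:n_val]
```

Keeping the validation examples out of training from the very start is what later makes the evaluation numbers trustworthy.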

Techniques for Fine-Tuning Pre-Trained Models

A. Transfer Learning and Its Role in Fine-Tuning

Transfer learning plays a crucial role in fine-tuning pre-trained models. It involves leveraging the knowledge acquired by a pre-trained model on a source task and applying it to a target task. This process allows us to benefit from the generalization abilities of the pre-trained model while adapting it to our specific task.

In practice, transfer learning involves two stages: pre-training and fine-tuning. During pre-training, a model is trained on a large-scale dataset to learn general patterns and features, which establishes a solid foundation of knowledge. In the fine-tuning stage, the pre-trained model is further trained on a smaller, task-specific dataset to specialize its capabilities and adapt it to the target task.
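The two stages can be illustrated with a deliberately tiny toy, sketched below under heavy assumptions: instead of a generative model, we fit a one-variable linear model, "pre-train" it on a large source dataset, and then fine-tune it for a few steps on three target examples. The datasets, learning rate, and step counts are invented for illustration. The point is only that starting from pre-trained weights reaches a lower error than starting from scratch under the same small training budget.

```python
def train(w, b, data, lr, steps):
    """Full-batch gradient descent on mean squared error for y = w*x + b."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(w, b, data):
    """Mean squared error of the linear model on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Stage 1: "pre-training" on a large source dataset (here y = 2x + 1).
source = [(k / 10, 2 * (k / 10) + 1) for k in range(-50, 51)]
w_pre, b_pre = train(0.0, 0.0, source, lr=0.05, steps=500)

# Stage 2: fine-tuning on a small, related target dataset (y = 2x + 1.5),
# using only a handful of update steps.
target = [(-1.0, -0.5), (0.0, 1.5), (1.0, 3.5)]
w_ft, b_ft = train(w_pre, b_pre, target, lr=0.05, steps=20)

# Baseline: the same small budget, but starting from scratch.
w_scratch, b_scratch = train(0.0, 0.0, target, lr=0.05, steps=20)

print("fine-tuned MSE:  ", mse(w_ft, b_ft, target))
print("from-scratch MSE:", mse(w_scratch, b_scratch, target))
```

With a real generative model, the same pattern applies at a much larger scale: the pre-trained weights are loaded from a checkpoint rather than computed locally, and only the second stage is run on our own data.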

B. Frozen Layers and Unfreezing Strategies

When fine-tuning a pre-trained model, one common practice is to freeze some initial layers while updating the later ones. Freezing early layers helps to preserve the general knowledge and patterns learned during pre-training while allowing the later layers to be fine-tuned to adapt to the target task.

Unfreezing layers gradually is often employed during the fine-tuning process. Initially, only the topmost layers are unfrozen, enabling the model to learn task-specific features while keeping the lower layers intact. As training progresses, additional layers can be unfrozen. This allows the model to refine its understanding of the task at different levels of abstraction.
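One way to picture gradual unfreezing is as a schedule that decides, for each epoch, which layers are allowed to update. The sketch below is framework-agnostic and uses a hypothetical helper, `trainable_layers`; the choice to unfreeze one extra layer per stage is an assumption for illustration. In a framework such as PyTorch, the returned flags would typically be applied by setting `requires_grad` on each layer’s parameters.

```python
from typing import List


def trainable_layers(
    num_layers: int,
    epoch: int,
    epochs_per_stage: int = 1,
    initially_unfrozen: int = 1,
) -> List[bool]:
    """Return one flag per layer (index 0 = earliest layer).

    True means the layer is trainable at this epoch. Training starts with
    only the top `initially_unfrozen` layers trainable and unfreezes one
    more layer, from the top down, every `epochs_per_stage` epochs.
    """
    if num_layers <= 0 or epochs_per_stage <= 0:
        raise ValueError("num_layers and epochs_per_stage must be positive")
    unfrozen = min(num_layers, initially_unfrozen + epoch // epochs_per_stage)
    first_trainable = num_layers - unfrozen
    return [index >= first_trainable for index in range(num_layers)]


# Print the schedule for a 4-layer model over the first 4 epochs.
for epoch in range(4):
    print(epoch, trainable_layers(num_layers=4, epoch=epoch))
```

Slower schedules (a larger `epochs_per_stage`) keep more of the pre-trained knowledge intact for longer, at the cost of slower adaptation in the early layers.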

C. Regularization Techniques for Fine-Tuning

Regularization techniques play a vital role in preventing overfitting and improving the generalization abilities of fine-tuned models. Regularization methods, such as dropout, L1 or L2 regularization, and batch normalization, can be applied to mitigate overfitting during the fine-tuning process.

These techniques help the model to generalize well to unseen data and produce more reliable and robust outputs.
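To show what two of these techniques do mechanically, here is a small sketch in plain Python. It implements L2 regularization as a weight decay term inside a single SGD update, and inverted dropout on a list of activations. The function names and default values are illustrative assumptions; deep learning frameworks ship their own tested versions of both.

```python
import random
from typing import List, Optional


def sgd_step_with_weight_decay(
    weights: List[float],
    grads: List[float],
    lr: float = 0.01,
    weight_decay: float = 0.01,
) -> List[float]:
    """One SGD update where L2 regularization adds weight_decay * w to each
    gradient, pulling every weight slightly toward zero."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


def dropout(
    activations: List[float],
    p: float = 0.1,
    training: bool = True,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Inverted dropout: during training, zero each activation with
    probability p and scale the survivors by 1 / (1 - p) so the expected
    value is unchanged. At evaluation time, values pass through as-is."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Note that dropout is only active while training; forgetting to switch it off for evaluation is a common source of noisy validation metrics.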

D. Optimizers and Learning Rate Schedules

The choice of optimizer and learning rate schedule significantly impacts the fine-tuning process. Optimizers, such as Adam, SGD (Stochastic Gradient Descent), or RMSprop, control how the model’s weights are updated during training.

The learning rate, which determines the step size in weight updates, can be adjusted dynamically using learning rate schedules to optimize the training process and prevent convergence issues.
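As an example of a learning rate schedule, the sketch below computes a linear warmup followed by cosine decay, a combination commonly used when fine-tuning. The function name `lr_at_step` and the default values (a base rate of 2e-5 and 100 warmup steps) are assumptions chosen for illustration, not recommendations for any particular model.

```python
import math


def lr_at_step(
    step: int,
    total_steps: int,
    base_lr: float = 2e-5,
    warmup_steps: int = 100,
    min_lr: float = 0.0,
) -> float:
    """Learning rate for a given 0-based step: linear warmup up to base_lr,
    then cosine decay from base_lr down to min_lr at total_steps."""
    if step < warmup_steps:
        # Ramp up linearly; the last warmup step reaches base_lr.
        return base_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (base_lr - min_lr) * cosine


# Sample the schedule at a few points of a 1,000-step run.
for step in (0, 50, 99, 100, 550, 1000):
    print(step, lr_at_step(step, total_steps=1000))
```

The warmup avoids large, destabilizing updates to pre-trained weights at the start, and the decay lets the model settle as training ends.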

E. Monitoring and Evaluating Fine-Tuning Progress

During fine-tuning, it’s crucial to monitor the progress and performance of the model. Monitoring techniques include tracking metrics like loss, accuracy, or perplexity and visualizing training curves to assess convergence and identify potential issues.

Regular evaluation on validation or test sets helps gauge the model’s performance and make informed decisions regarding hyperparameter tuning or training adjustments.
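Two of the monitoring ideas above are easy to sketch. Perplexity is the exponential of the average per-token cross-entropy loss (assuming the loss uses the natural logarithm), and a simple early-stopping rule halts training once the validation loss stops improving. The helper names and the patience value are illustrative assumptions.

```python
import math
from typing import List, Optional


def perplexity(token_losses: List[float]) -> float:
    """Perplexity = exp(mean per-token cross-entropy, natural log)."""
    if not token_losses:
        raise ValueError("token_losses must not be empty")
    return math.exp(sum(token_losses) / len(token_losses))


def early_stop_epoch(
    val_losses: List[float], patience: int = 2, min_delta: float = 0.0
) -> Optional[int]:
    """Return the epoch index at which training would stop, or None.

    Training stops once the validation loss has failed to improve on the
    best value seen so far (by more than min_delta) for `patience`
    consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None
```

A validation loss that rises while the training loss keeps falling is the classic sign of overfitting, and it is exactly the pattern this rule reacts to.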

By leveraging transfer learning, carefully selecting frozen and unfrozen layers, applying regularization techniques, optimizing with suitable optimizers and learning rate schedules, and monitoring the fine-tuning process, we can shape pre-trained models to excel at the target task and generate high-quality outputs.

Challenges and Best Practices for Fine-Tuning

A. Common Challenges in Fine-Tuning Pre-Trained Models

While fine-tuning pre-trained models offers great potential, it also comes with certain challenges. Understanding and addressing these challenges can lead to more successful outcomes. Some common challenges include:

- Overfitting: Fine-tuning on a specific task can sometimes lead to overfitting, where the model becomes too specialized to the training data and fails to generalize well to new examples. Regularization techniques, such as dropout and weight decay, can help mitigate overfitting by introducing constraints on the model’s parameters.

- Catastrophic Forgetting: When fine-tuning a model, there is a risk of catastrophic forgetting, where the model loses its ability to perform well on previously learned tasks. To address this, it’s important to strike a balance between updating the model’s parameters for the target task while preserving the knowledge gained during pre-training.

- Limited Dataset Size: Fine-tuning requires a task-specific dataset, which is usually much smaller than the original pre-training dataset. Limited data can result in challenges such as poor generalization or increased sensitivity to noise. Data augmentation techniques, such as rotation, scaling, or adding noise, can be employed to artificially increase the effective dataset size and improve generalization.

- Bias and Fairness: Pre-trained models may inherit biases present in the training data, which can perpetuate unfair or discriminatory behavior. It’s crucial to carefully analyze and address biases during fine-tuning to ensure ethical and unbiased outputs.
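As a small illustration of the noise-based augmentation mentioned under limited dataset size, the sketch below adds Gaussian noise to numeric feature vectors to create extra training samples. The function name, the number of copies, and the noise level are assumptions for illustration; suitable augmentations depend heavily on the data type and task.

```python
import random
from typing import List


def augment_with_noise(
    samples: List[List[float]],
    copies: int = 2,
    noise_std: float = 0.01,
    seed: int = 0,
) -> List[List[float]]:
    """Return the original samples followed by `copies` noisy variants of
    each one, created by adding zero-mean Gaussian noise to every value."""
    rng = random.Random(seed)  # fixed seed keeps the augmentation reproducible
    augmented = [list(sample) for sample in samples]
    for sample in samples:
        for _ in range(copies):
            augmented.append([v + rng.gauss(0.0, noise_std) for v in sample])
    return augmented
```

Augmented copies belong in the training split only; the validation data should stay untouched so that it still reflects the real target distribution.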

B. Overcoming Challenges: Best Practices for Fine-Tuning

To overcome the challenges associated with fine-tuning pre-trained models, it’s important to follow these best practices:

- Regular Model Evaluation: Continuously monitor and evaluate the performance of the fine-tuned model on validation or test sets. This helps identify potential issues and guides the fine-tuning process.

- Iterative Refinement: Fine-tuning is an iterative process. Start with a small number of training iterations and gradually increase them while observing the model’s performance. This allows for better control over the fine-tuning process and helps in avoiding overfitting or catastrophic forgetting.

- Robust Dataset Preparation: Selecting a representative and diverse dataset for fine-tuning is crucial. Ensure that the dataset captures the desired patterns and covers a wide range of scenarios to improve the model’s generalization.

- Responsible Use of Data Augmentation: Data augmentation techniques can help overcome limited dataset sizes. However, ensure that the augmentation techniques are applied judiciously and aligned with the specific task to avoid introducing artificial biases or distorting the target distribution.

- Addressing Bias and Fairness: Perform bias analysis on the pre-trained and fine-tuned models to identify potential biases. Mitigate biases through techniques such as debiasing algorithms, dataset balancing, or inclusive data collection strategies.

By following these best practices, fine-tuning becomes a more effective and reliable process, resulting in fine-tuned models that perform well on specific tasks while maintaining ethical and unbiased behavior.

Case Studies: Fine-Tuning Pre-Trained Models in Generative AI Applications

To illustrate the real-world impact and effectiveness of fine-tuning pre-trained models in generative AI applications, let’s delve into a few compelling case studies:

A. Text Generation for Creative Writing

In the field of creative writing, fine-tuning pre-trained models like GPT has yielded remarkable results. By fine-tuning on specific genres or styles, writers have been able to generate coherent and engaging text that mimics the desired literary characteristics.

For example, a writer can fine-tune a pre-trained model with a dataset of Shakespearean sonnets to generate new sonnets that capture the essence and style of Shakespeare’s works. This approach opens up possibilities for automated storytelling, poetry generation, and even interactive fiction.

B. Image Style Transfer and Editing

Fine-tuning pre-trained models such as StyleGAN has revolutionized image synthesis and editing. By fine-tuning on specific datasets of images, artists and designers can control various aspects of image generation, including style, color palette, and object composition.

For instance, a fine-tuned StyleGAN model can generate realistic images in the style of famous painters like Van Gogh or Picasso. This technique has been used to create virtual worlds, design unique artwork, and explore novel visual aesthetics.

C. Music Composition and Remixing

In the realm of music, fine-tuning pre-trained models has shown great promise. By training on diverse music datasets, musicians and composers can generate original compositions, explore new genres, or even remix existing tracks.

For example, a musician can fine-tune a pre-trained model on a collection of jazz songs and use it to generate new jazz melodies or improvisations. This approach expands creative possibilities, aids in music production, and provides inspiration for artists.

D. Multimodal Applications

Fine-tuning pre-trained models also enables the development of multimodal generative AI applications that combine text, image, and audio generation. For instance, a fine-tuned model can generate descriptive captions for images, convert text descriptions into visually coherent images, or even create soundtracks based on textual prompts.

This multimodal approach opens up avenues for immersive storytelling, interactive media experiences, and creative content generation across multiple modalities.

These case studies highlight the versatility and potential of fine-tuning pre-trained models in generative AI applications. By tailoring these models to specific tasks and domains, developers, artists, and researchers can unlock new levels of creativity and productivity.

Conclusion

Fine-tuning offers an effective way to take advantage of cutting-edge pre-trained models for generative AI while tailoring them to specific requirements. By mastering fine-tuning, overcoming its hurdles, and adopting best practices, both individuals and organizations can innovate with pre-trained models in novel and influential ways.

From generating text to creating images, composing music, or building multimodal applications, fine-tuning pre-trained models broadens the opportunities for creative expression, streamlined operations, and bespoke content.

As the landscape of generative AI keeps changing, fine-tuning will remain an indispensable tool, enabling a wide range of users to push the limits of what is feasible.

Embark on the journey to discover the intriguing domain of fine-tuning pre-trained models with Webisoft. Ignite your creative spark, customize AI to align with your vision, and carve your niche in the world of generative AI applications. The potential is infinite and the future is waiting for you to shape it.

Join Webisoft now and let us guide you through the thrilling world of fine-tuning pre-trained models, enabling you to turn AI dreams into reality.