Have you ever wondered how computers can transform random noise into amazing pictures, text, or even music? Well, that’s the magic of diffusion models.

But what is the diffusion model?

A diffusion model is a type of generative machine-learning model that transforms random noise into realistic data by iteratively refining it. Here examples include PixelCNN++ for image generation, GPT-3.5 for text generation, and RealNVP for density estimation.

So, what can you expect from this article? We’re going to break down the complex diffusion models in simple terms. We’ll show you how they’re used, why they matter, and how they’re shaping the future. Let’s explore this together!

Contents

- 1 What are Diffusion Models?

- 2 Examples of Diffusion Models

- 3 Diffusion Model in Machine Learning

- 4 Exploring the Trio: Three Types of Diffusion Models

- 5 How Does the Diffusion Model Function?

- 6 Benefits of a Diffusion Models

- 7 Applications of Diffusion Models

- 8 Top Diffusion Models

- 9 Industries using diffusion models

- 10 How to Interpret Diffusion Models in AI?

- 11 How to Train Diffusion Model

- 11.1 Step 1: Preparing the Data

- 11.2 Step 2: Selecting Your Model

- 11.3 Step 3: Training Your Model

- 11.4 Step 4: Evaluating Your Model

- 11.5 Step 5: Deploying Your Model

- 12 Diffusion Model Prompt Engineering

- 13 What are Some Challenges of Diffusion Models

- 14 Future of Training Diffusion Models in Machine Learning

- 15 Why Choose Webisoft to Implement Diffusion to Your Business

- 16 Final Verdict

- 17 FAQs

What are Diffusion Models?

Diffusion models are a class of generative machine learning models. They are designed to model and generate high-dimensional data, such as images, text, or audio. They do this by progressively improving a random noise signal until it closely matches the desired data distribution.

These models have gained significant attention in recent years due to their ability to generate high-quality and coherent samples.

The core idea behind diffusion models is based on the concept of denoising. Instead of directly modeling the data distribution, they model the conditional distribution of data given an initial random noise signal. The key innovation is in how this noise signal is transformed to generate more realistic data samples.

Examples of Diffusion Models

Diffusion models are a unique approach in generative machine learning, transforming random noise into realistic data samples through carefully designed transformations.

Let’s look at some of the examples of diffusion models:

PixelCNN++

PixelCNN and its improved version PixelCNN++ are autoregressive diffusion models used primarily for image generation. They model the conditional distribution of pixel values given previously generated pixels. By repeatedly sampling from these conditional distributions, they generate high-quality images.

Gated PixelCNN

Gated PixelCNN is an extension of PixelCNN that incorporates gated activations, allowing it to model complex dependencies in image data more effectively. It improves the quality of generated images and speeds up training.

RealNVP (Real Non-Volume Preserving)

RealNVP is a flow-based diffusion model used for generative tasks. It employs invertible transformations to learn the data distribution. RealNVP has been used for image generation and density estimation.

BigGAN

BigGAN is a powerful generative model that utilizes diffusion-based training techniques to generate high-resolution images. It scales up the architecture of the original GAN (Generative Adversarial Network) and incorporates diffusion steps for training stability and improved sample quality.

Text-Diffusion Models

While diffusion models were initially more associated with image generation, they have also been adapted for text generation tasks. Models like GPT-3.5 and its successors have incorporated elements of diffusion training, among other techniques, to enhance their text generation capabilities.

Audio Diffusion Models

Diffusion models have also been explored for audio synthesis and generation. These models learn to generate realistic audio waveforms by iteratively refining a noisy audio signal, often conditioned on some context or initial audio snippet.

Diffusion Model in Machine Learning

A diffusion model in machine learning is a type of generative model that aims to generate high-quality data samples by transforming a random noise signal into realistic data through a series of steps or transformations.

These models are used for various generative tasks, such as image generation, text generation, and audio synthesis.

The key idea behind diffusion models is to represent the conditional distribution of data based on an initial noisy signal. By systematically refining this signal through well-planned transformations and iterative processes, diffusion models generate samples that closely match the target data distribution.

The term “diffusion” in diffusion models refers to the gradual spreading or diffusion of randomness in the noise signal as it is transformed into data. This process helps the model learn the complex dependencies and patterns present in the target data distribution.

Exploring the Trio: Three Types of Diffusion Models

There are three main types of diffusion models. Let’s go through them one by one:

1. Denoising Diffusion Probabilistic Models (DDPMs)

DDPMs are a type of diffusion model that specializes in generating probabilistic data. They simulate a process to transform noisy data into clean samples. During training, they learn the parameters of this transformation process.

When generating data, they use their learned knowledge to produce denoised and highly realistic samples. DDPMs are especially effective in tasks like image denoising, inpainting, and super-resolution.

2. Score-Based Generative Models (SGMs)

SGMs belong to the category of diffusion models that focus on generating new data based on patterns learned from existing data.

They estimate data likelihood using a score function and can create entirely new samples following the same patterns as the original data. SGMs find applications in tasks such as deepfakes and generating additional data in scenarios where data is scarce.

3. Stochastic Differential Equations (Score SDEs)

Score SDEs are mathematical equations employed in generative modeling to parameterize score-based models. They describe how systems change over time when subjected to deterministic and random forces.

Essentially, Score SDEs use stochastic processes to model unpredictable situations, proving valuable in addressing randomness in fields like physics and financial markets.

How Does the Diffusion Model Function?

To understand how a diffusion model functions, let’s break it down into several key steps:

Initialization

The diffusion process starts with an initial input known as “noise.” This noise represents a random data point, often conforming to a simple distribution like Gaussian.

Diffusion Steps

The key idea is to iteratively refine this initial noisy signal through a series of transformation steps. Each step involves applying an invertible operation to the signal, gradually reducing its randomness and making it more structured.

Conditional Generation

Diffusion models typically work conditionally. This means they don’t generate data in isolation but rather generate data based on some starting input or condition. This condition could be a text description, an image, or other forms of data.

Gradual Transition

As the signal undergoes these transformations, it undergoes a step-by-step transition. It evolves from its initial noisy state towards closely resembling the desired data distribution, with each operation contributing to this refinement.

Noise Control

An important feature is the ability to control the level of randomness or noise in the generated samples. Diffusion models provide a way to adjust noise levels during the generation process, which is valuable for tasks involving uncertain data.

Inverse Process

To generate data, diffusion models apply these transformations in reverse. They start with a well-structured signal, often considered a “clean” or “real” data point, and apply the inverse of the transformations to generate data samples.

Quality Assessment

The generated data is then assessed for quality. Various evaluation metrics are used to measure how closely it resembles the target data distribution. Diffusion models aim to produce high-quality samples.

Applications

Finally, the generated data finds applications in various use cases, depending on specific requirements. These applications can range from tasks like image denoising and text generation to more complex challenges, such as creating realistic video sequences or audio samples.

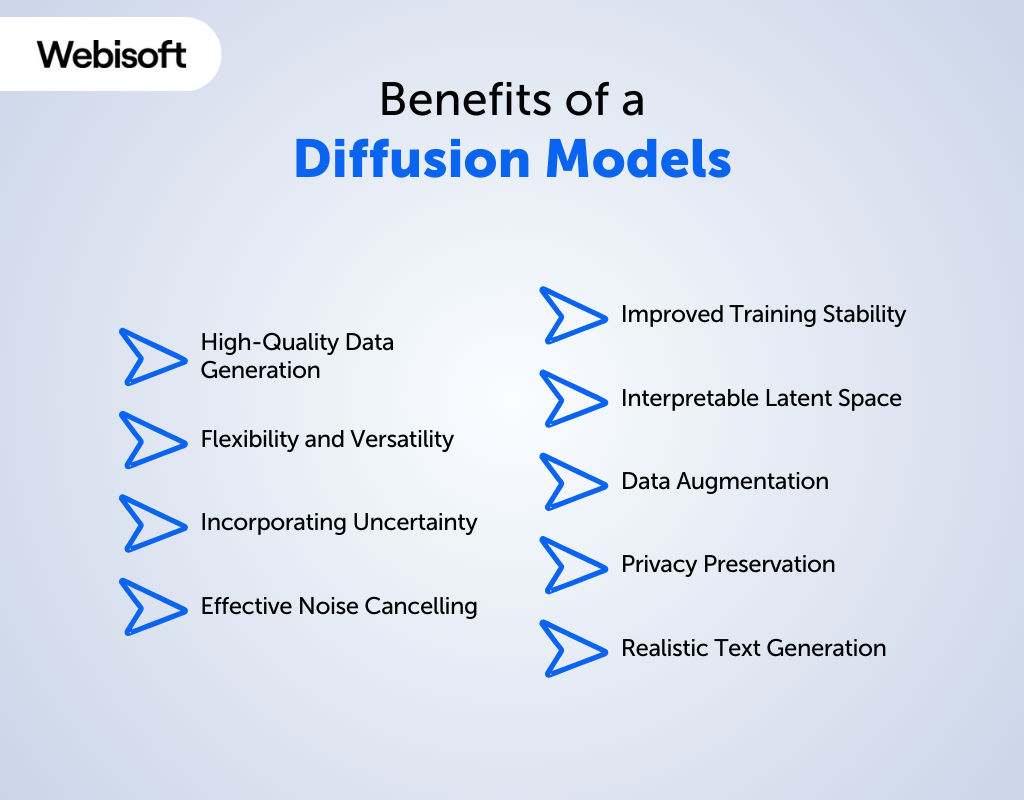

Benefits of a Diffusion Models

Diffusion models have several benefits in the field of machine learning and generative modeling. Here are some of them:

High-Quality Data Generation

Diffusion models excel at generating exceptionally high-quality data, be it lifelike images, coherent text, or realistic audio. Their ability to closely resemble original training data is invaluable for applications like art generation and content creation.

Flexibility and Versatility

Diffusion models stand out for their adaptability across a wide range of data types. Whether it’s image generation, text creation, or audio synthesis, these models exhibit proficiency in handling diverse data types effectively.

Incorporating Uncertainty

Diffusion models naturally handle uncertainty by offering a probabilistic framework. This adaptability proves useful in scenarios where data inherently contains uncertainty, such as financial predictions or medical diagnoses.

Effective Noise Canceling

Diffusion models excel at addressing noisy data, enhancing its quality for various applications like image processing and speech recognition.

Improved Training Stability

Unlike traditional generative models that often face training challenges, diffusion models use diffusion-based training techniques that enhance stability, resulting in more reliable model training.

Interpretable Latent Space

Some diffusion models offer interpretable latent spaces, providing valuable insights into data structure and contributing factors.

Data Augmentation

Beyond generation, diffusion models serve as excellent data augmenters, creating variations of existing samples. This aids in enhancing the robustness and generalization of machine learning models, particularly in data-scarce scenarios.

Privacy Preservation

Diffusion models play a crucial role in privacy-sensitive applications, generating synthetic data that retains statistical properties while safeguarding sensitive information, essential in sectors like healthcare and finance.

Realistic Text Generation

In natural language generation, diffusion models produce coherent and contextually relevant text, benefiting applications such as chatbots, content generation, and language translation.

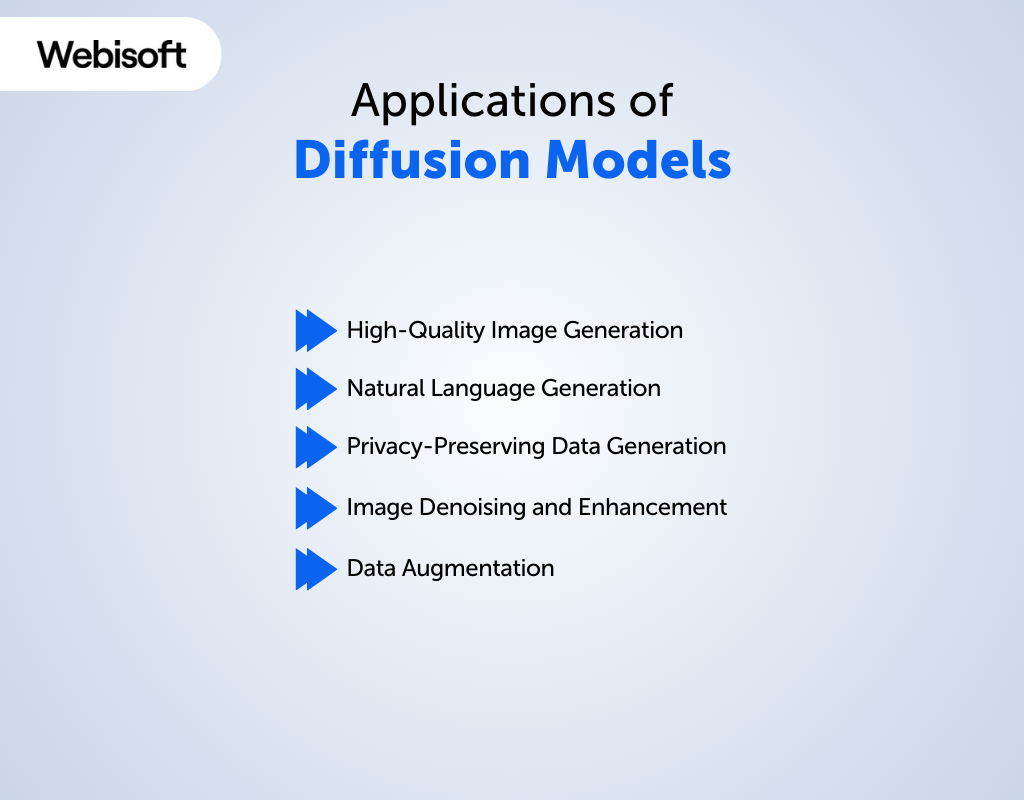

Applications of Diffusion Models

Here are some specific applications of diffusion models:

High-Quality Image Generation

Diffusion models are widely employed in the generation of high-quality images. They excel in creating visually appealing and realistic images that closely resemble real-world photographs.

This application is valuable in various domains, including art generation, graphic design, and content creation for media.

Natural Language Generation

In natural language processing, diffusion models have proven their capability to generate coherent and contextually relevant text. This is especially beneficial in applications such as chatbots, automated content generation, and language translation, where producing human-like text is essential.

Privacy-Preserving Data Generation

Diffusion models offer a unique advantage in privacy-sensitive applications. They can generate synthetic data that retains the statistical properties of the original dataset while safeguarding sensitive information.

This is crucial in fields like healthcare and finance, where privacy and data security are paramount.

Image Denoising and Enhancement

Diffusion models are proficient at denoising noisy images and enhancing their quality. They can effectively remove unwanted noise and artifacts from corrupted images, resulting in cleaner and more visually appealing versions.

This application is vital in image processing, medical imaging, and forensic analysis.

Data Augmentation

Beyond their role as generators, diffusion models serve as excellent data augmenters. They can create additional training data by generating variations of existing samples. This is particularly useful in machine learning scenarios with limited data availability, improving model robustness and generalization.

Top Diffusion Models

Here we have the list of the top 10 diffusion models. Let’s explore them:

1. CyberRealistic

CyberRealistic is a diffusion model designed for generating highly realistic data across various domains. It excels in creating images, text, and audio that closely mimic the characteristics of real-world data. Its applications span from immersive simulations to content generation.

2. Realistic Vision

Realistic Vision is a diffusion model known for its ability to generate lifelike and high-quality visual content. It’s widely used in tasks such as image synthesis, style transfer, and enhancing the realism of computer-generated graphics.

3. epiCRealism

epiCRealism is a diffusion model that specializes in producing epic and highly detailed content. Whether it’s generating epic scenes in video games or epic cinematic experiences, this model thrives in creating immersive visual and auditory content.

4. ReV Animated

ReV Animated is a diffusion model dedicated to the creation of animated content. It is capable of generating dynamic and visually appealing animations, making it a valuable tool for animators and content creators.

5. DreamShaper

DreamShaper is a diffusion model that excels in shaping creative and imaginative content. It’s often used for artistic endeavors, enabling artists and designers to bring their dreams and visions to life through various media.

6. ChilloutMix

ChilloutMix is a diffusion model recognized for its ability to generate soothing and relaxing content. Whether it’s calming images, ambient sounds, or serene text, this model contributes to creating tranquil and stress-relieving experiences.

7. SDXL

SDXL is a diffusion model that stands out for its extra-large capabilities. It can handle vast datasets and generate high-resolution content, making it ideal for large-scale image generation, data augmentation, and high-fidelity simulations.

8. Anything V5

Anything V5 is a versatile diffusion model that lives up to its name. It can generate diverse types of content, from images to text and audio. Its flexibility and adaptability make it a go-to choice for a wide range of generative tasks.

9. AbsoluteReality

AbsoluteReality is a diffusion model renowned for its ability to produce content that blurs the line between reality and simulation. Whether it’s for virtual reality experiences or creating hyper-realistic data, this model is at the forefront of achieving absolute realism.

10. DreamShaper XL

DreamShaper XL is an extended version of the DreamShaper diffusion model. It offers enhanced capabilities for shaping creative content on a larger scale, making it an invaluable tool for ambitious artistic projects and immersive storytelling.

Industries using diffusion models

Diffusion models find applications across various industries due to their ability to model complex data distributions and generate high-quality samples. Here are some industries where diffusion models are making an impact:

Film and Animation

In movies and animation, diffusion models play a vital role. They enhance the quality of visual effects, making them more lifelike and impressive. Animators rely on diffusion models to create characters that look and move realistically, improving the overall cinematic experience.

Graphic Design

Graphic designers use diffusion models to create intricate and stunning visual designs. These models help generate detailed patterns, textures, and graphics, making designs more captivating for purposes like advertising, branding, and digital art.

Music and Sound Design

In music composition and sound design, diffusion models are valuable. They assist in generating realistic and high-quality audio, making it sound like real instruments or environments. Musicians and sound designers use these models to create immersive audio experiences in music production, gaming, and virtual reality.

Media and Gaming Industry

Diffusion models have a significant impact on media and gaming. In media, they improve video quality, clean up audio, and aid in post-production work.

For gaming, diffusion models help create visually stunning game worlds and characters. They contribute to cinematic storytelling and engaging gameplay experiences, setting new standards for entertainment.

Finance and Banking

The finance and banking sector relies on diffusion models for risk assessment and fraud detection. These models predict market trends, simulate financial instrument behavior, and detect fraudulent transactions.

They are crucial tools for making informed investment decisions, managing risks, and maintaining financial security.

Healthcare and Medicine

Diffusion models are essential in healthcare. They enhance medical image processing by reducing noise and improving diagnostic accuracy in X-rays and MRIs. Additionally, they accelerate drug discovery by simulating molecular interactions and predicting potential new medicines, ultimately improving patient care.

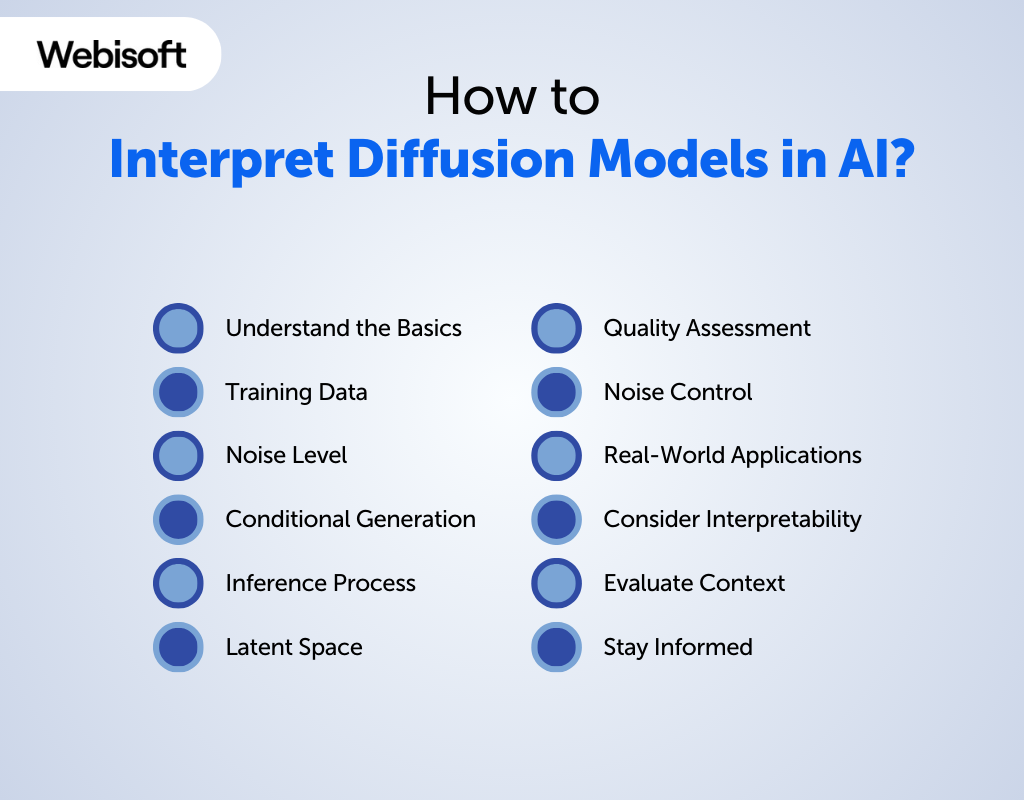

How to Interpret Diffusion Models in AI?

Interpreting diffusion models in AI involves understanding how these models generate data and making sense of the results they produce. Here’s a step-by-step guide on how to interpret diffusion models:

Understand the Basics

Begin by familiarizing yourself with the fundamental concept of diffusion models. Know that these models transform simple noise into complex data through a series of carefully designed steps.

Training Data

Know the training data used to train the diffusion model. Understand the type of data, its source, and any preprocessing steps involved.

Noise Level

Recognize the concept of noise levels. In diffusion models, noise refers to randomness in the data. Different noise levels represent varying degrees of randomness in the generated samples.

Conditional Generation

Realize that diffusion models often operate in a conditional generation setting. This means they generate data based on some initial input or condition. Understand the role of this condition in influencing the generated samples.

Inference Process

Comprehend the inference process. During inference, diffusion models start with a noisy or incomplete input and iteratively refine it to generate realistic data samples. This process may involve multiple diffusion steps.

Latent Space

Explore the latent space. Some diffusion models have interpretable latent spaces that allow you to gain insights into the underlying data distribution and the factors that contribute to the generation process.

Quality Assessment

Assess the quality of generated samples. Use appropriate evaluation metrics to measure how closely the generated data resembles the target distribution. Understand that diffusion models strive to produce high-quality samples.

Noise Control

Grasp the importance of noise control. Know that diffusion models allow you to control the level of randomness in generated samples, making them useful for tasks involving uncertainty.

Real-World Applications

Explore real-world applications. Understand how diffusion models are used in various domains, such as image denoising, text generation, and audio synthesis. Recognize the practical value they offer in these applications.

Consider Interpretability

If applicable, consider the interpretability of the diffusion model. Some models offer insights into the generation process, which can be valuable for understanding the relationships within the data.

Evaluate Context

Evaluate the context in which the diffusion model is used. Understand the specific problem it aims to solve and how it fits into the broader AI or machine learning pipeline.

Stay Informed

Keep up with the latest developments in diffusion models and their interpretability. AI research is continually evolving, and new techniques may emerge to enhance understanding and interpretation.

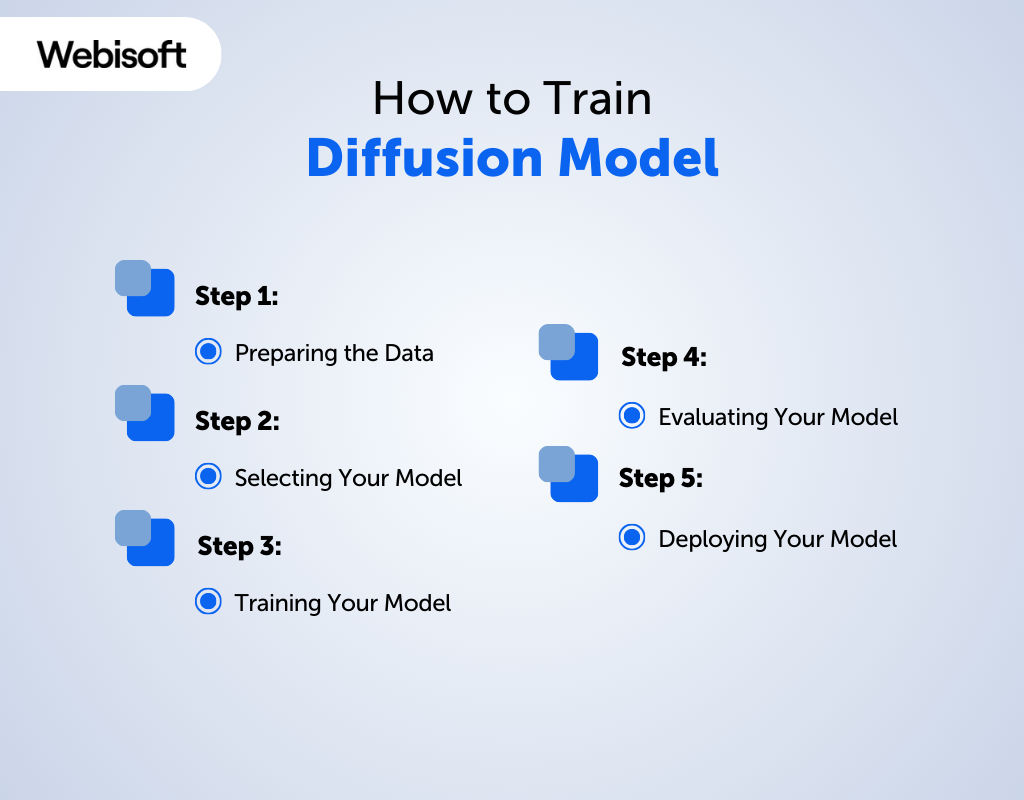

How to Train Diffusion Model

Let’s take a stroll through the process of training a diffusion model. We’ll walk you through every step, from data preparation to model deployment:

Step 1: Preparing the Data

Before you can train a diffusion model, you need to get your data ready. This involves a few important steps:

Collecting the Right Information

Start by gathering data that accurately represents the connections between individuals in your network. This data can include things like people’s demographics, their preferences, or any other relevant details that describe how they’re connected.

Cleaning Your Data

Once you have your data, it’s crucial to clean it up. This means getting rid of any missing or duplicate information, dealing with any unusual or extreme data points, and making sure your data is in a format that your model can work with.

Formatting Your Data

The last step in data preparation is getting your data into the right shape. Depending on your model and your data, this might involve turning your data into a graph-like structure or making sure that all your variables are on a similar scale. The specific steps you take will depend on your model and the characteristics of your data.

Step 2: Selecting Your Model

After preparing your data, the next critical step is choosing the right diffusion model for your project. Here’s how to go about it:

Weighing Your Options

You’ll have several diffusion model options to consider, such as threshold models, susceptible-infected (SI) models, and independent cascade models. The choice you make should align with the specific needs and goals of your project.

Making the Right Choice

When selecting a diffusion model, prioritize factors like accuracy, computational efficiency, interpretability, and the model’s capability to handle missing data. Assess how well the model can integrate into your existing system and consider the availability of the required data.

Tuning it Right

Once you’ve chosen a diffusion model, the next step is to set the model’s hyperparameters. This involves fine-tuning settings based on the unique characteristics of your application and data. Careful adjustment of these parameters is essential to ensure your model performs optimally.

Step 3: Training Your Model

Once you’ve selected your diffusion model, it’s time to train it effectively. Here’s how to proceed:

Dividing Your Data

Start by splitting your data into two sets – the training set and the test set.

The training set is used to teach your model, while the test set is reserved for evaluating its performance. Ensure that these sets accurately represent your data and avoid any biases towards specific individuals or units.

Setting Up for Success

Configure the hyperparameters and other model-specific parameters required for your chosen diffusion model. This step is crucial to enable your model to understand the underlying data structure without overfitting, where it learns noise instead of meaningful patterns.

Time to Train

With your data split and parameters in place, you can commence the training process. This typically involves multiple passes through the training set, fine-tuning model parameters based on performance feedback, and allowing your model to learn and adapt to the data.

class UNet(nn.Module):

def __init__(self, c_in=3, c_out=3, time_dim=256):

super().__init__()

self.time_dim = time_dim

self.inc = DoubleConv(c_in, 64)

self.down1 = Down(64, 128)

self.sa1 = SelfAttention(128)

self.down2 = Down(128, 256)

self.sa2 = SelfAttention(256)

self.down3 = Down(256, 256)

self.sa3 = SelfAttention(256)

self.bot1 = DoubleConv(256, 256)

self.bot2 = DoubleConv(256, 256)

self.up1 = Up(512, 128)

self.sa4 = SelfAttention(128)

self.up2 = Up(256, 64)

self.sa5 = SelfAttention(64)

self.up3 = Up(128, 64)

self.sa6 = SelfAttention(64)

self.outc = nn.Conv2d(64, c_out, kernel_size=1)

def unet_forwad(self, x, t):

“Classic UNet structure with down and up branches, self attention in between convs”

x1 = self.inc(x)

x2 = self.down1(x1, t)

x2 = self.sa1(x2)

x3 = self.down2(x2, t)

x3 = self.sa2(x3)

x4 = self.down3(x3, t)

x4 = self.sa3(x4)

x4 = self.bot1(x4)

x4 = self.bot2(x4)

x = self.up1(x4, x3, t)

x = self.sa4(x)

x = self.up2(x, x2, t)

x = self.sa5(x)

x = self.up3(x, x1, t)

x = self.sa6(x)

output = self.outc(x)

return output

def forward(self, x, t):

“Positional encoding of the timestep before the blocks”

t = t.unsqueeze(-1)

t = self.pos_encoding(t, self.time_dim)

return self.unet_forwad(x, t)

Also, if you’re working with an enhanced conditional model, remember to include class labels at each timestep. This is done using an Embedding layer to encode the labels, resulting in a more sophisticated model design.

class UNet_conditional(UNet):

def __init__(self, c_in=3, c_out=3, c_condition=3, time_dim=256):

super().__init__(c_in=c_in + c_condition, c_out=c_out, time_dim=time_dim)

self.c_condition = c_condition

def forward(self, x, t, y=None):

“If a condition is available it is concatenated to the input”

if y is not None:

y = F.interpolate(y, size=x.shape[-2:], mode=”bilinear”)

x = torch.cat((x, y), dim=1)

return self.unet_forwad(x, t)

Exponential Moving Average (EMA) Code

Remember the Exponential Moving Average (EMA) technique? It can greatly enhance the stability and performance of your model during training. So, don’t forget to include it! Let’s take a closer look at the code implementation for EMA:

class EMA:

def __init__(self, beta):

super().__init__()

self.beta = beta

self.step = 0

def update_model_average(self, ma_model, current_model):

for current_params, ma_params in zip(current_model.parameters(), ma_model.parameters()):

old_weight, up_weight = ma_params.data, current_params.data

ma_params.data = self.update_average(old_weight, up_weight)

def update_average(self, old, new):

if old is None:

return new

return old * self.beta + (1 – self.beta) * new

def step_ema(self, ema_model, model, step_start_ema=2000):

if self.step < step_start_ema:

self.reset_parameters(ema_model, model)

self.step += 1

return

self.update_model_average(ema_model, model)

self.step += 1

def reset_parameters(self, ema_model, model):

ema_model.load_state_dict(model.state_dict())

The code defines an ‘EMA’ class that facilitates exponential moving average calculations. The key methods within this class include:

Initialization

‘__init__(self, beta)’: Initializes the EMA object with a specified beta value, which controls the weightage of the previous model parameters.

Model Weight Update

‘update_model_average(self, ma_model, current_model)’: Updates the moving average model ‘(ma_model)’ by calculating the weighted average of its parameters with the current model’s parameters (current_model).

Average Update

‘update_average(self, old, new)’: Computes the updated average by combining the old average (‘old’) with the new value (‘new’) using the specified beta value.

EMA Step

‘step_ema(self, ema_model, model, step_start_ema=2000)’: Performs the EMA step, updating the EMA model parameters (‘ema_model’) based on the current model parameters (‘model’). The ‘step_start_ema’ parameter determines the step at which EMA begins.

Model Parameter Reset

‘reset_parameters(self, ema_model, model)’: Resets the parameters of the EMA model (‘ema_model’) to match the current model (‘model’).

Training

The training process incorporates the EMA calculations within the ‘train_step’ and one_epoch functions:

def train_step(self):

self.optimizer.zero_grad()

self.scaler.scale(loss).backward()

self.scaler.step(self.optimizer)

self.scaler.update()

self.ema.step_ema(self.ema_model, self.model)

self.scheduler.step()

def one_epoch(self, train=True, use_wandb=False):

avg_loss = 0.

if train:

self.model.train()

else:

self.model.eval()

pbar = progress_bar(self.train_dataloader, leave=False)

for i, (images, labels) in enumerate(pbar):

with torch.autocast(“cuda”) and (torch.inference_mode() if not train else torch.enable_grad()):

images = images.to(self.device)

labels = labels.to(self.device)

t = self.sample_timesteps(images.shape[0]).to(self.device)

x_t, noise = self.noise_images(images, t)

if np.random.random() < 0.1:

labels = None

predicted_noise = self.model(x_t, t, labels)

loss = self.mse(noise, predicted_noise)

avg_loss += loss

if train:

self.train_step()

if use_wandb:

wandb.log({“train_mse”: loss.item(), “learning_rate”: self.scheduler.get_last_lr()[0]})

pbar.comment = f”MSE={loss.item():2.3f}”

return avg_loss.mean().item()

These functions are responsible for training the model and performing a single epoch of training. Key points to note include:

Training Step

‘train_step(self)’: Executes a single training step, which involves gradient computation, backward propagation, optimization step, EMA update, and scheduler step.

One Epoch

‘one_epoch(self, train=True, use_wandb=False)’: Runs one epoch of training or evaluation. It iterates through the data and performs forward pass, loss calculation, and other necessary steps. It also logs the training MSE and learning rate if use_wandb is set to True.

Logging and Model Saving

The code includes functions for logging images and saving model checkpoints:

@torch.inference_mode()

def log_images(self):

“Log images to wandb and save them to disk”

labels = torch.arange(self.num_classes).long().to(self.device)

sampled_images = self.sample(use_ema=False, n=len(labels), labels=labels)

ema_sampled_images = self.sample(use_ema=True, n=len(labels), labels=labels)

plot_images(sampled_images)

wandb.log({“sampled_images”: [wandb.Image(img.permute(1,2,0).squeeze().cpu().numpy()) for img in sampled_images]})

wandb.log({“ema_sampled_images”: [wandb.Image(img.permute(1,2,0).squeeze().cpu().numpy()) for img in ema_sampled_images]})

def save_model(self, run_name, epoch=-1):

“Save model locally and to wandb”

torch.save(self.model.state_dict(), os.path.join(“models”, run_name, f”ckpt.pt”))

torch.save(self.ema_model.state_dict(), os.path.join(“models”, run_name, f”ema_ckpt.pt”))

torch.save(self.optimizer.state_dict(), os.path.join(“models”, run_name, f”optim.pt”))

at = wandb.Artifact(“model”, type=”model”, description=”Model weights for DDPM conditional”, metadata={“epoch”: epoch})

at.add_dir(os.path.join(“models”, run_name))

wandb.log_artifact(at)

Logging Images

‘log_images(self)’: Generates and logs images to Weights & Biases (wandb) for visualization. It generates both sampled images and EMA sampled images, converting them into wandb Image format.

Saving Models

‘save_model(self, run_name, epoch=-1)’: Saves the model locally and logs the model artifacts to wandb. It saves the model’s state dictionary, EMA model’s state dictionary, optimizer state dictionary, and creates a wandb artifact to store the model weights.

Model Preparation and Training Execution

The prepare and fit functions orchestrate the model setup and execution:

def prepare(self, args):

“Prepare the model for training”

setup_logging(args.run_name)

device = args.device

self.train_dataloader, self.val_dataloader = get_data(args)

self.optimizer = optim.AdamW(self.model.parameters(), lr=args.lr, weight_decay=0.001)

self.scheduler = optim.lr_scheduler.OneCycleLR(self.optimizer, max_lr=args.lr,

steps_per_epoch=len(self.train_dataloader), epochs=args.epochs)

self.mse = nn.MSELoss()

self.ema = EMA(0.995)

self.scaler = torch.cuda.amp.GradScaler()

def fit(self, args):

self.prepare(args)

for epoch in range(args.epochs):

logging.info(f”Starting epoch {epoch}:”)

self.one_epoch(train=True)

if args.do_validation:

self.one_epoch(train=False)

if epoch % args.log_every_epoch == 0:

self.log_images(use_wandb=args.use_wandb)

self.save_model(run_name=args.run_name, use_wandb=args.use_wandb, epoch=epoch)

Model Preparation

‘prepare(self, args)’: Sets up the model for training by initializing logging, defining the device, obtaining the data loaders, setting the optimizer and scheduler, and initializing loss functions, EMA, and gradient scalar.

Model Training

‘fit(self, args)’: Executes the model training process. It iterates over the specified number of epochs, performs one epoch of training, and optionally performs validation. It also logs images at specified intervals and saves the model after training completes.

Step 4: Evaluating Your Model

Once your diffusion model is trained, it’s crucial to assess its performance accurately. Here’s how to go about it:

Assessing Performance

Compare the model’s predictions with the actual outcomes from your test dataset. Employ key performance metrics like accuracy, precision, recall, and the F1 score to measure how well your model performs.

Understanding the Results

Take time to interpret the results generated by your model. This step involves identifying significant influencers within your population and gaining insights into why they exert such an impact.

Fine-Tuning Your Model

If your model’s performance falls short of expectations, don’t be discouraged. There are ways to improve it. Consider fine-tuning the model by adjusting its parameters, collecting additional data, or even exploring different types of diffusion models to achieve better results.

Step 5: Deploying Your Model

Last but not least, you’ll need to implement your trained model. This could mean integrating the model into a production environment, a cloud platform, or a web service.

Also, think about how your model can work with other systems, like a database, an API, or a user interface. This can help your model become part of a more comprehensive solution.

There you have it! Your step-by-step guide to training a diffusion model. Now, you’re all set to turn that noise into some impressive samples!

Diffusion Model Prompt Engineering

Think of prompts as your steering wheel, guiding the results from your Diffusion models. Now, these models are rather talkative, converting a duo of primary inputs into a fixed point in their own latent space.

A seed integer, mostly created on its own, and a text prompt, handed by the user, are the key inputs. To nail down the best outcomes, it’s all about ongoing experimentation with Prompt Engineering.

We’ve dabbled with Dall-E 2 and Stable Diffusion and compiled some cool insights to help you make the most of your prompts. We’ll chat about prompt length, artistic style, and crucial terms that can help shape the images you’re itching to create.

How to Use the Prompt in Different Cases

A prompt generally boils down to three main elements: a Frame, a Subject, a Style, and sometimes a Seed.

1. Frame

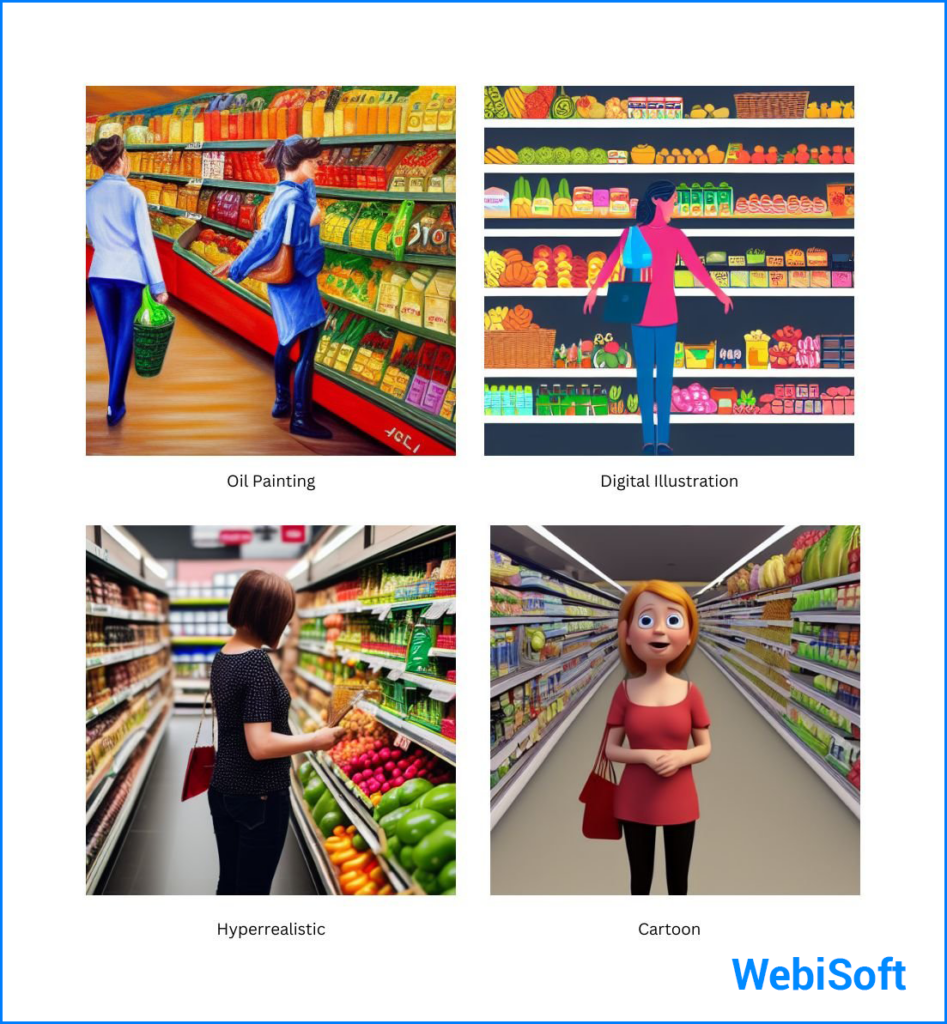

Picture the frame as the genre of your to-be-generated image. This pairs up with the Style later on, to give your image its distinctive vibe. There are a bunch of frames you can play with, such as photographs, digital illustrations, oil paintings, pencil drawings, one-line drawing, or matte paintings.

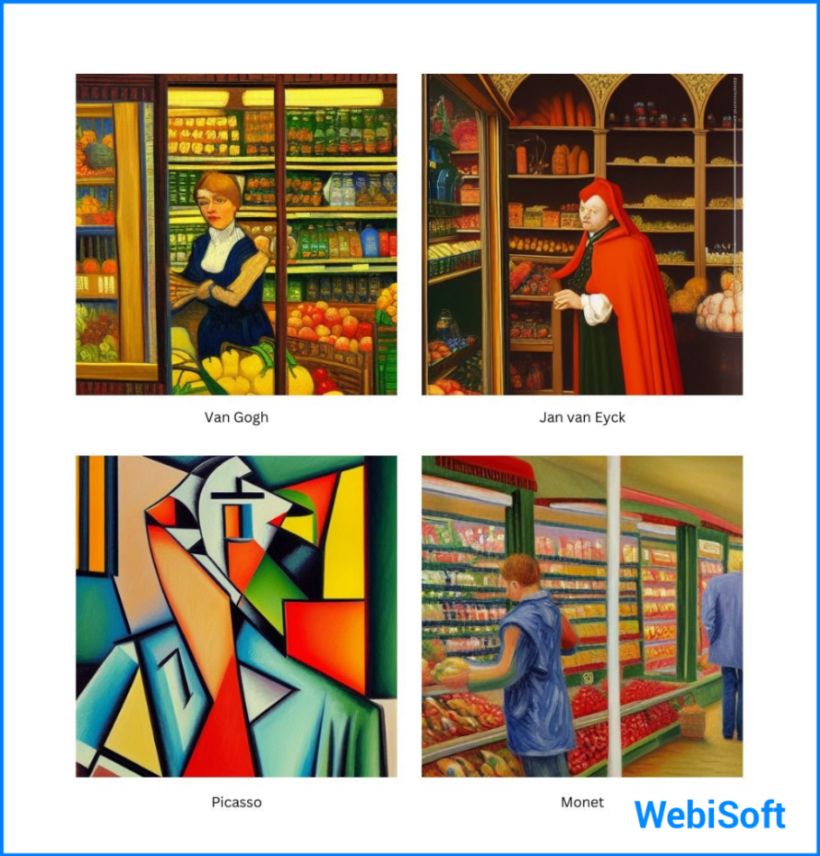

Imagine we’re playing with the base prompt “Painting of a person in a Grocery Store,” morphed for an oil painting, a digital illustration, a realistic photo, and a 3D cartoon.

If you don’t specify the frame, Diffusion models tend to lean towards a “picture” frame, but this really depends on what the subject is. By calling out a specific frame, you’re directly manipulating the final outcome.

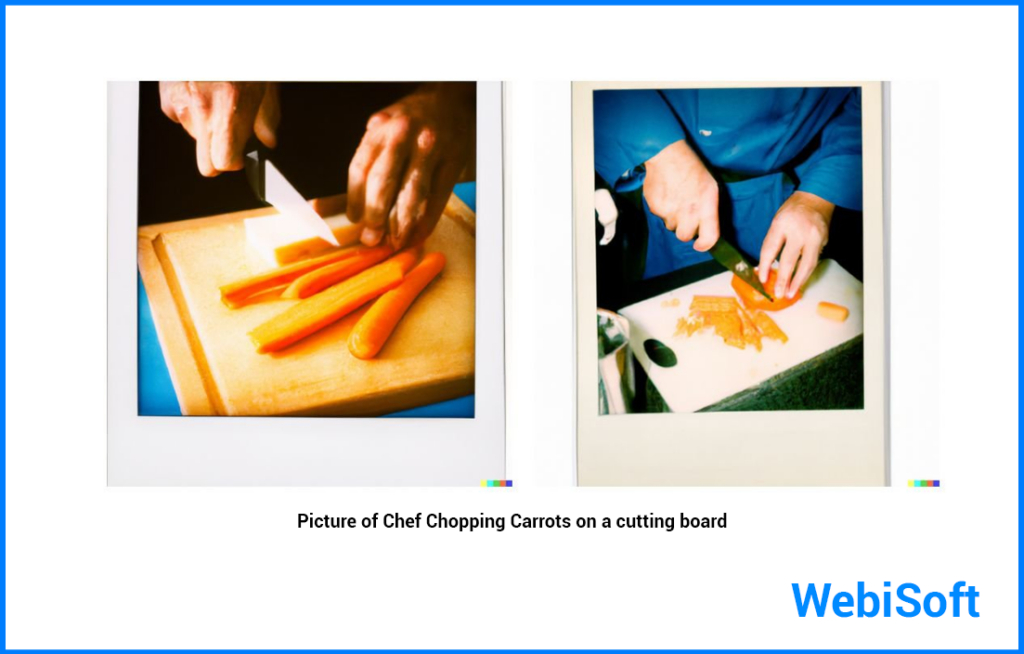

Turn the frame into a “Polaroid” and you get something reminiscent of a Polaroid snapshot, thick white borders and all.

You can also give pencil drawings a whirl.

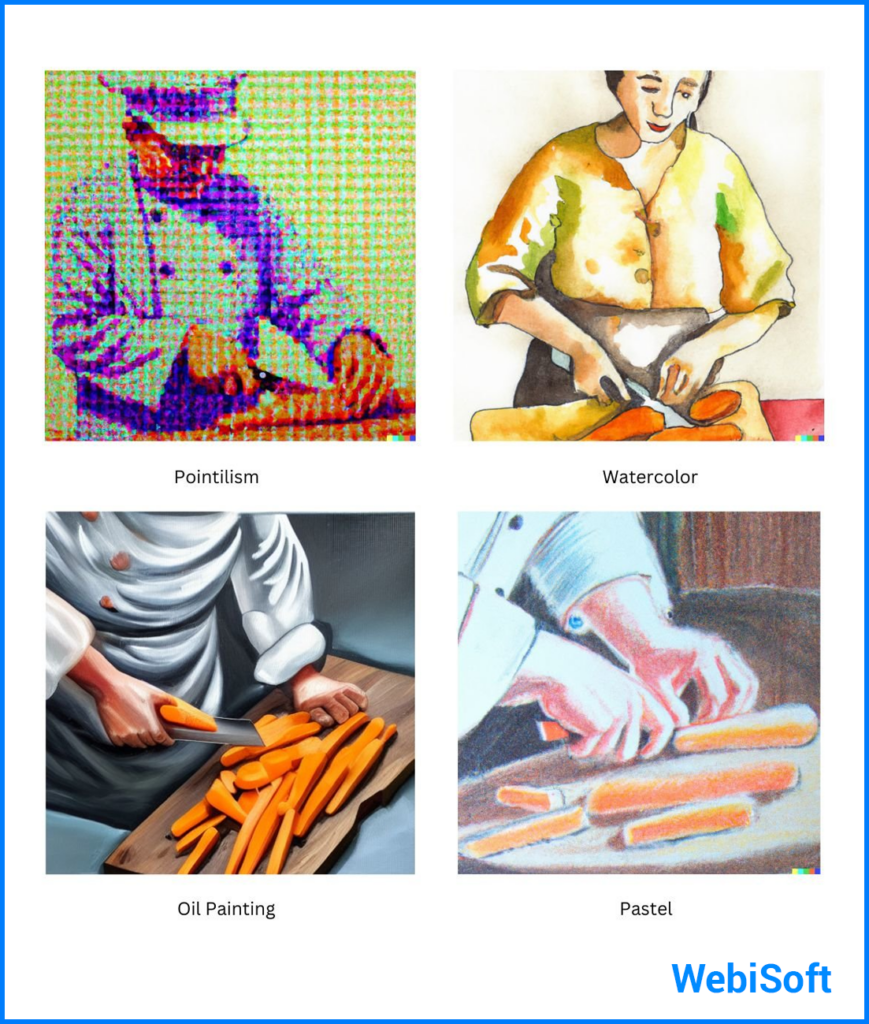

And of course, you can experiment with different painting methods.

The frame is like a basic roadmap for the output your diffusion model should produce. But for truly stunning images, a well-chosen subject and a honed style should be part of your prompts. Subjects are up next, followed by how to fuse frames, subjects, and styles for some stellar image fine-tuning.

2. Subject

The star of your generated image could be anything your imagination serves up.

The core of Diffusion models stems from a wealth of internet data that’s out there in the public domain, which makes it a pro at crafting impressively precise images of real-world objects.

That said, Diffusion models can sometimes trip over their own feet when it comes to compositionality. So, the golden rule?

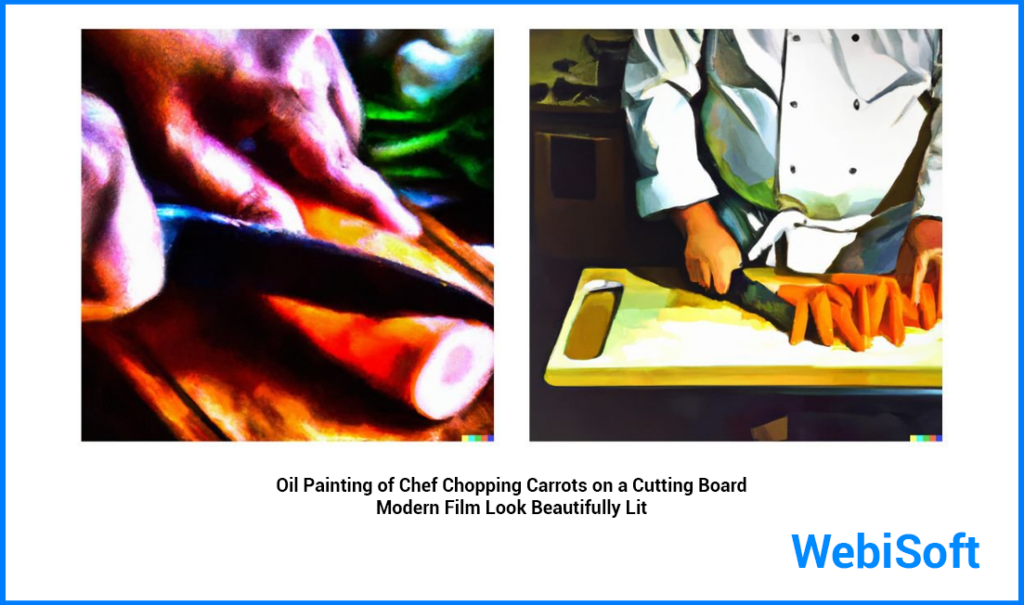

Keep your prompts to a cozy one or two subjects for the best results. Think along the lines of “Chef dicing carrots on a chopping board.”

Sure, there might be a hiccup here with a knife slicing another knife, but you’ve still got diced carrots in the mix, which is more or less in line with what you originally prompted.

On the flip side, if you toss in more than two subjects, things can start to veer into the unpredictable or even downright comical.

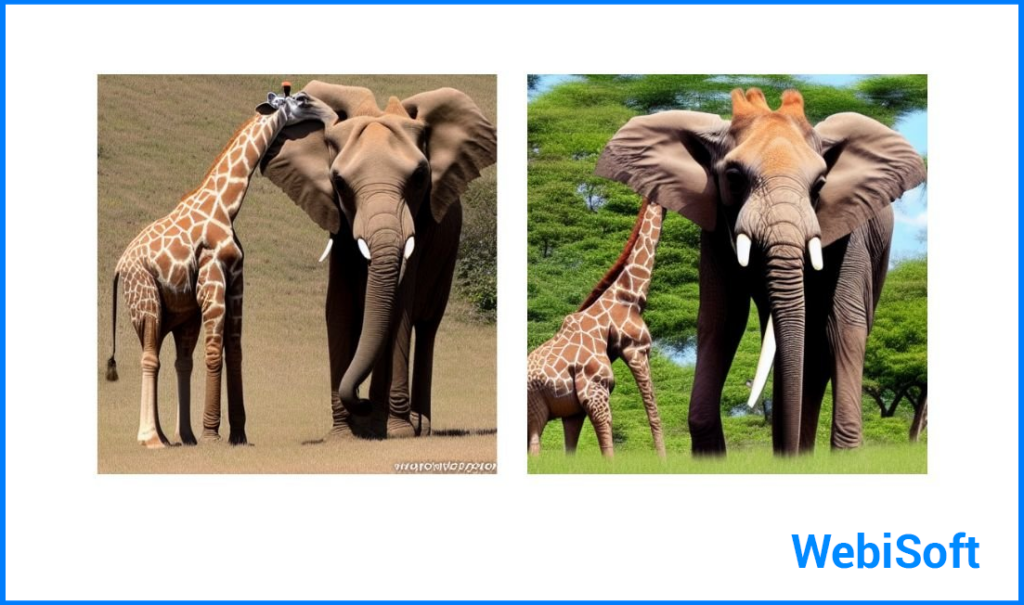

Less commonly seen subjects can end up blended into one by the Diffusion models. Take “a giraffe and an elephant” for instance, and you might wind up with a giraffe-elephant mashup instead of individual giraffe and elephant.

Funnily enough, there are often two creatures in the scene, but they’re usually a hybrid of some sort.

People have tried to steer clear of this by including prepositions like “beside”, with varying degrees of success but generally closer to what was initially prompted.

Interestingly, this seems to be dependent on the subjects. A more frequently-seen pair, say “a dog and a cat,” can be generated as distinct animals without any hitches.

3. Style

A style brings multiple aspects to an image. The most pivotal ones? Think lighting, theme, artistic influence, and time period.

Little tweaks like “Bathed in light”, “Contemporary Film”, or “Surrealistic” can dramatically alter the final look of your image.

Let’s circle back to our example prompt “chefs chopping carrots.” We can give this basic image a makeover by adding new styles. Picture a “contemporary cinema vibe” layered onto “Oil Painting” and “Picture” frames.

The mood of the images can be transformed by a style, like introducing some “eerie illumination.”

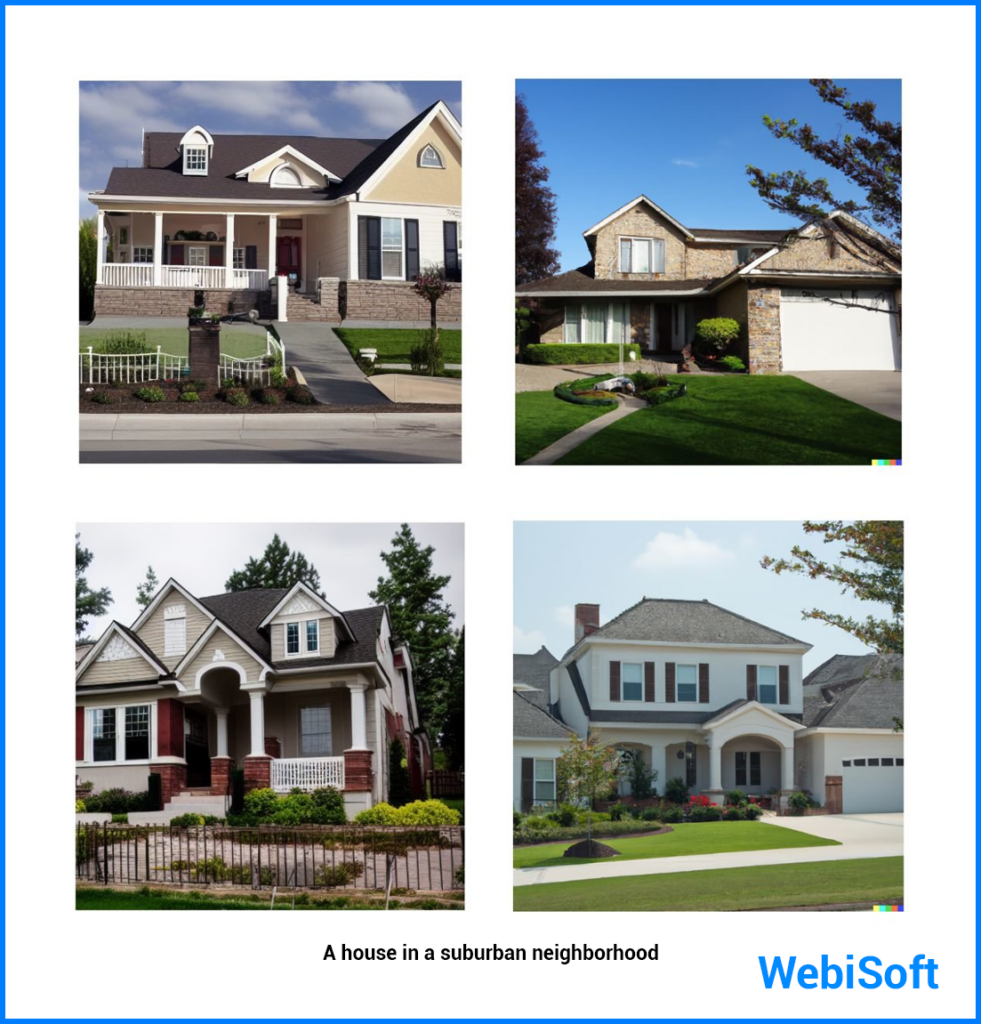

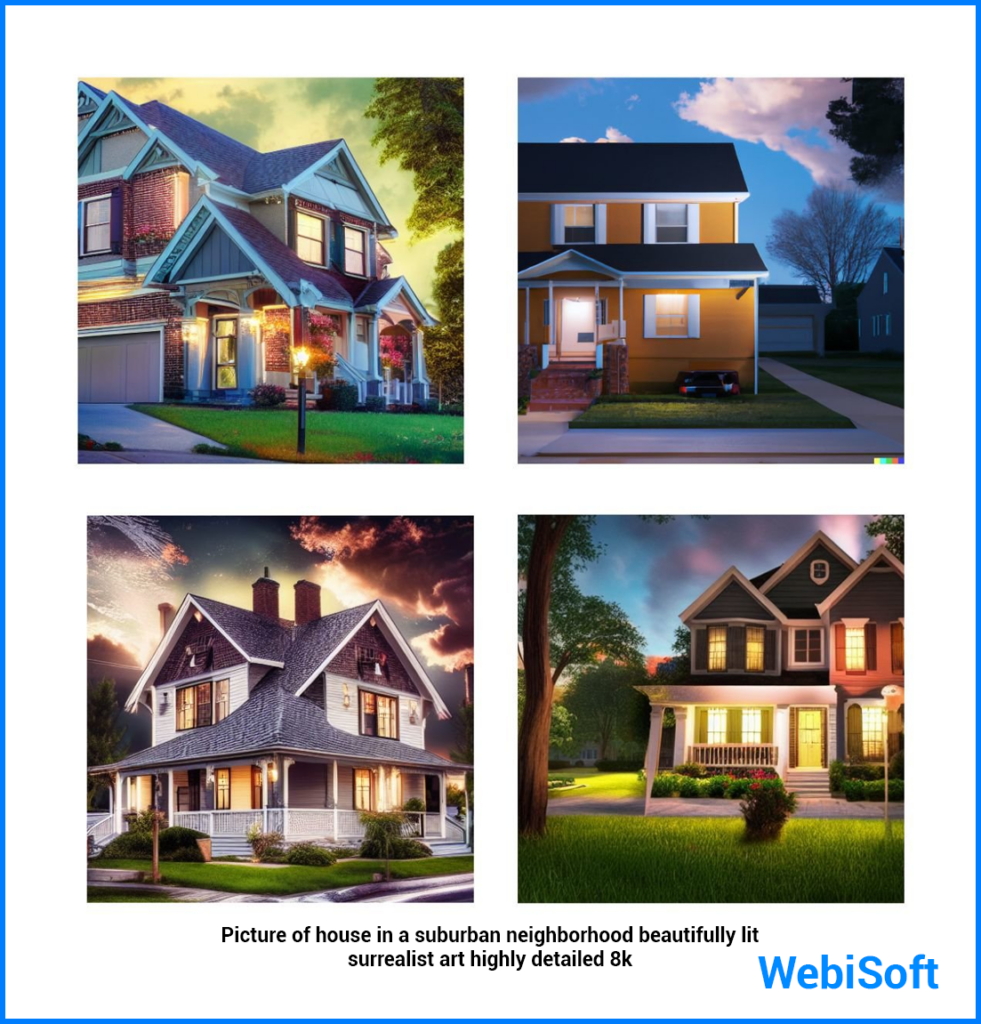

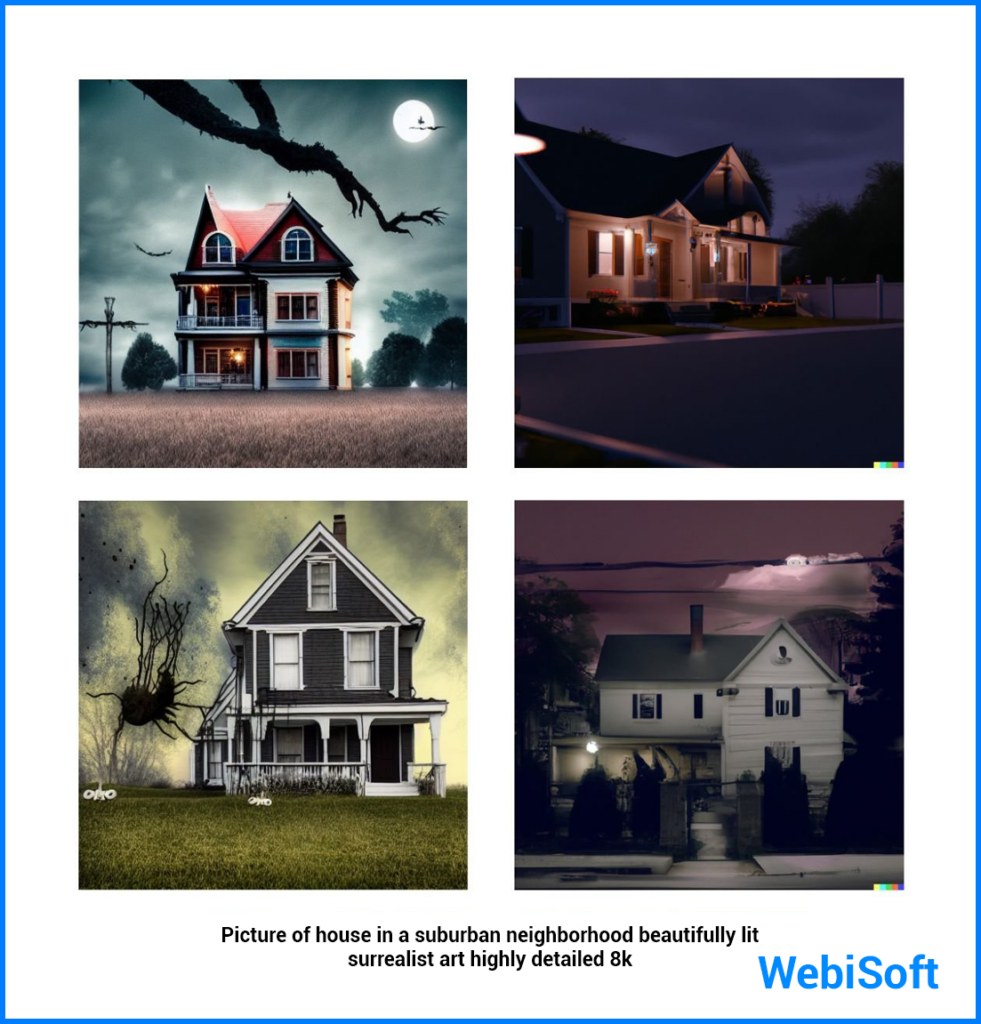

You can put the finishing touches on your generated images by adjusting the style just a bit. Take a vanilla prompt like “a house in a suburban neighborhood.”

Stir in “radiantly lit surrealistic art” and you’ve got yourself images that are a whole lot more intense and dynamic.

Switch “radiantly lit” with the phrase “hauntingly eerie,” and you’ve suddenly got a creepy theme going on in the images.

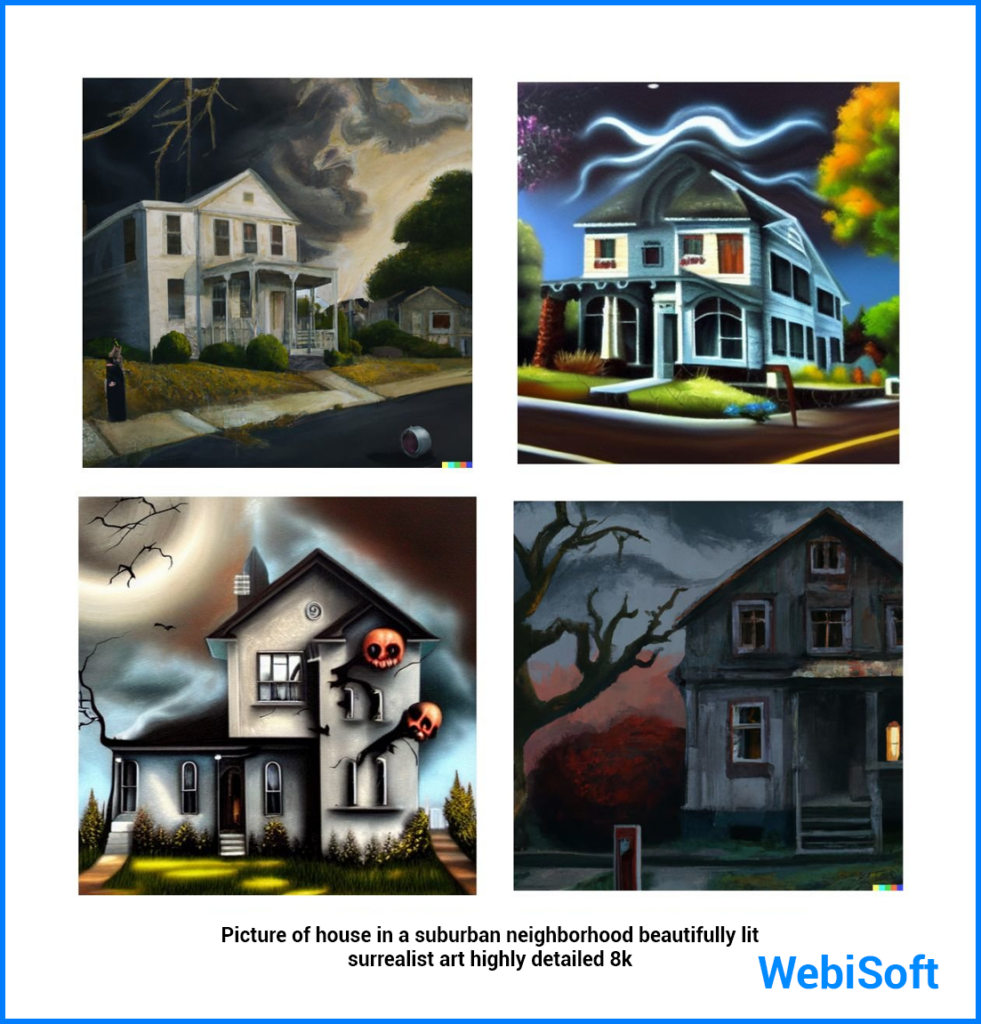

Slap this onto a different frame for the effect you want, like an oil painting frame for instance.

Alter the mood to “cheery brightness” and observe the stark change in the final image.

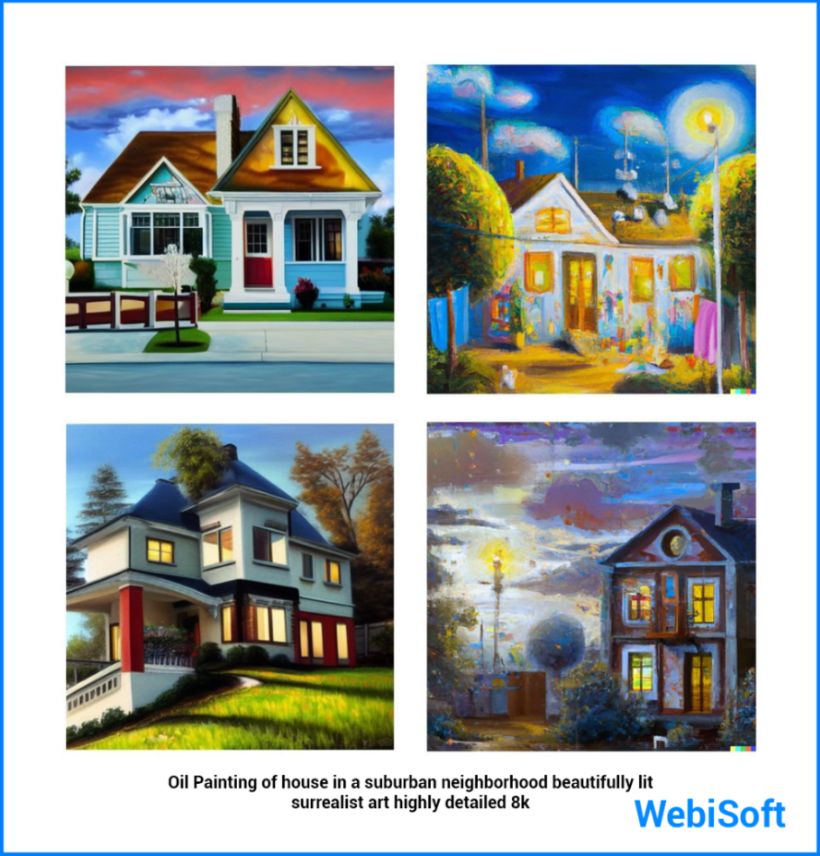

By tweaking the artistic style, you can further fine-tune your images, like swapping “surrealistic art” for “art nouveau.”

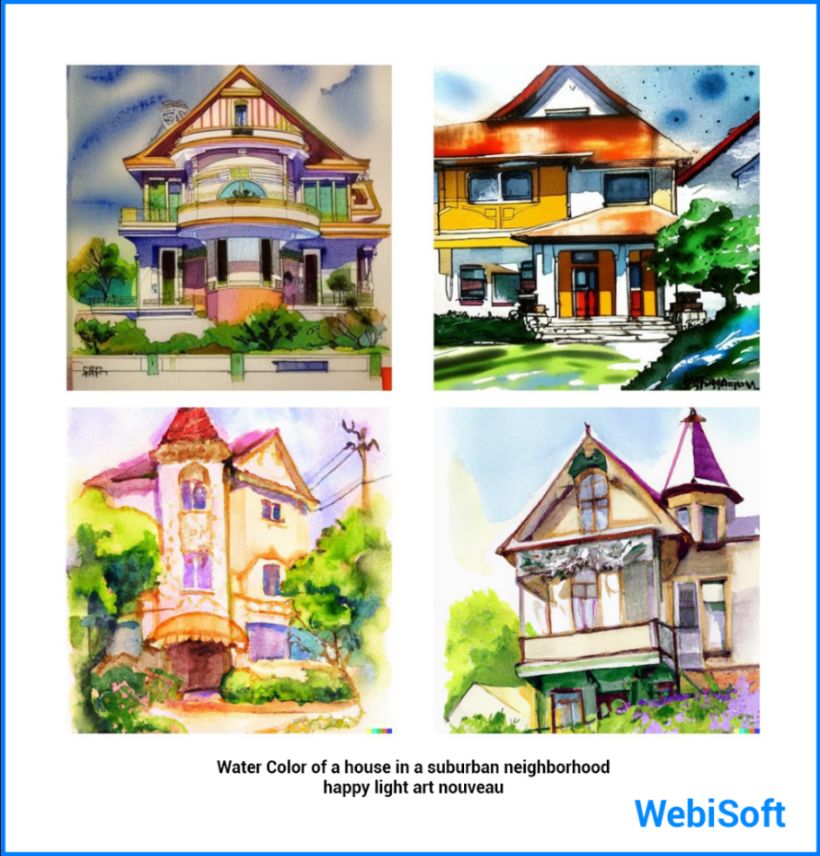

Let’s once more highlight how the frame impacts the final output. Here, we transition to a “watercolor” frame while maintaining the same style.

Playing with different seasons in your images can tweak the overall setting and mood of your final piece.

Just remember, the number of combinations you can cook up with frames and styles is nearly limitless – we’re just dipping our toes in the possibilities here. Historical artists can also step in to sharpen your prompts. Imagine the same prompt, say “person shopping at a grocery store,” reimagined in the unique artistic styles of legendary painters.

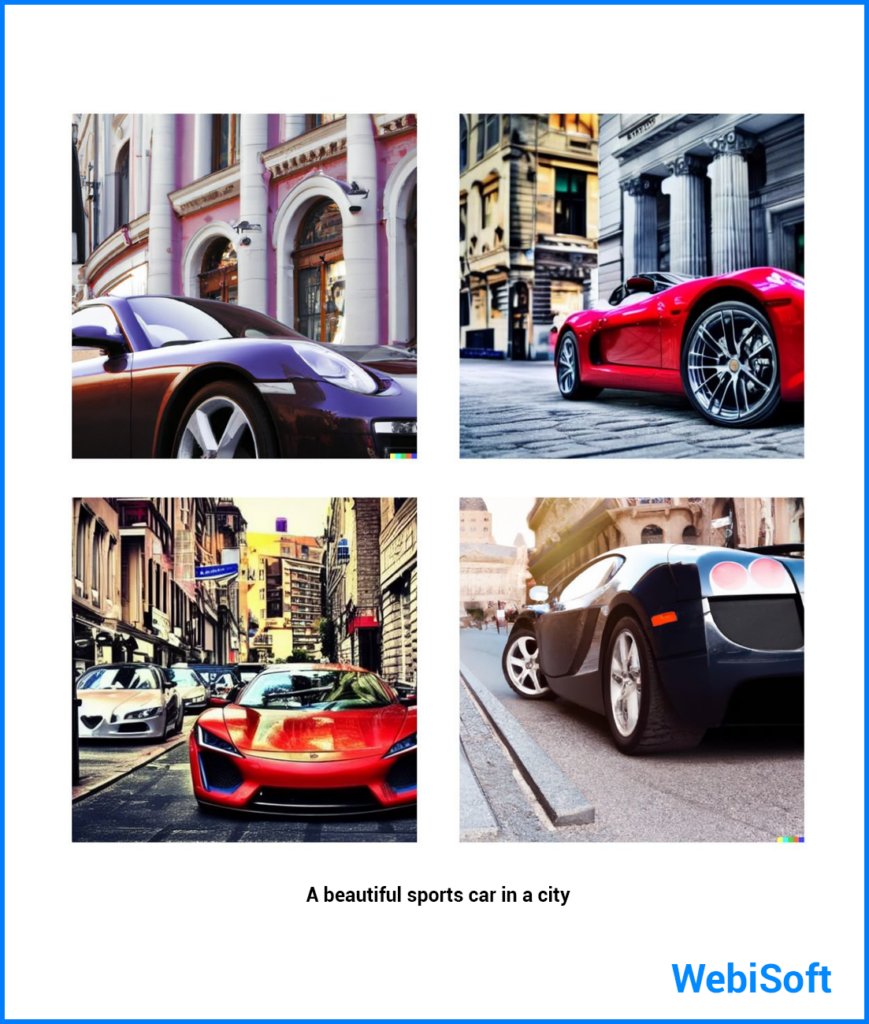

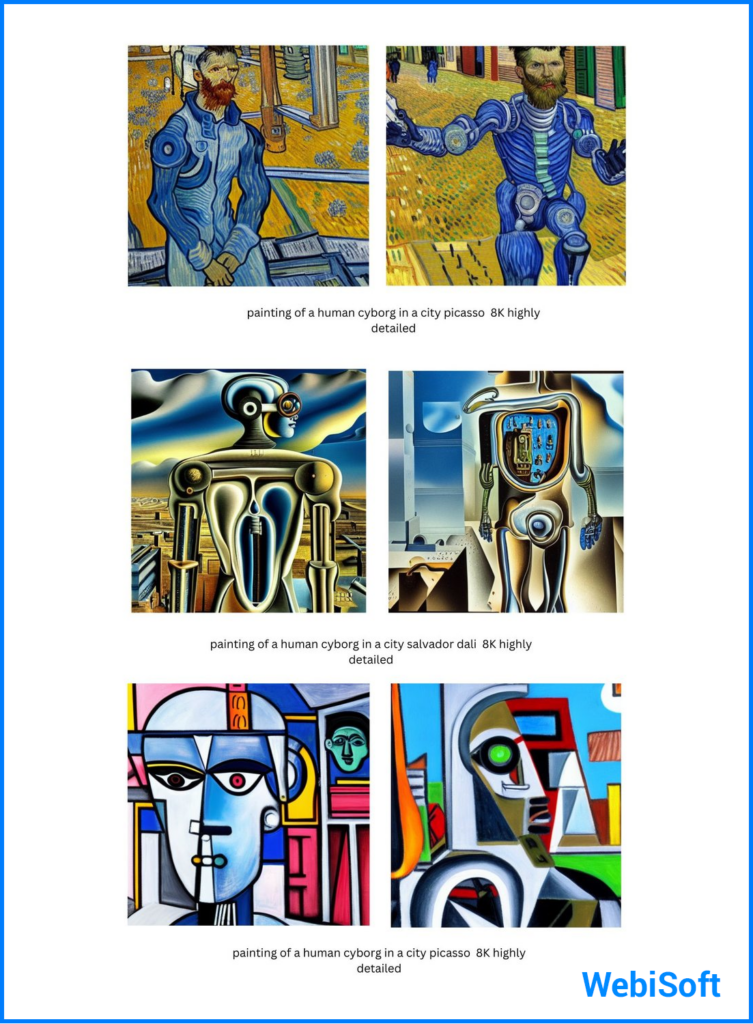

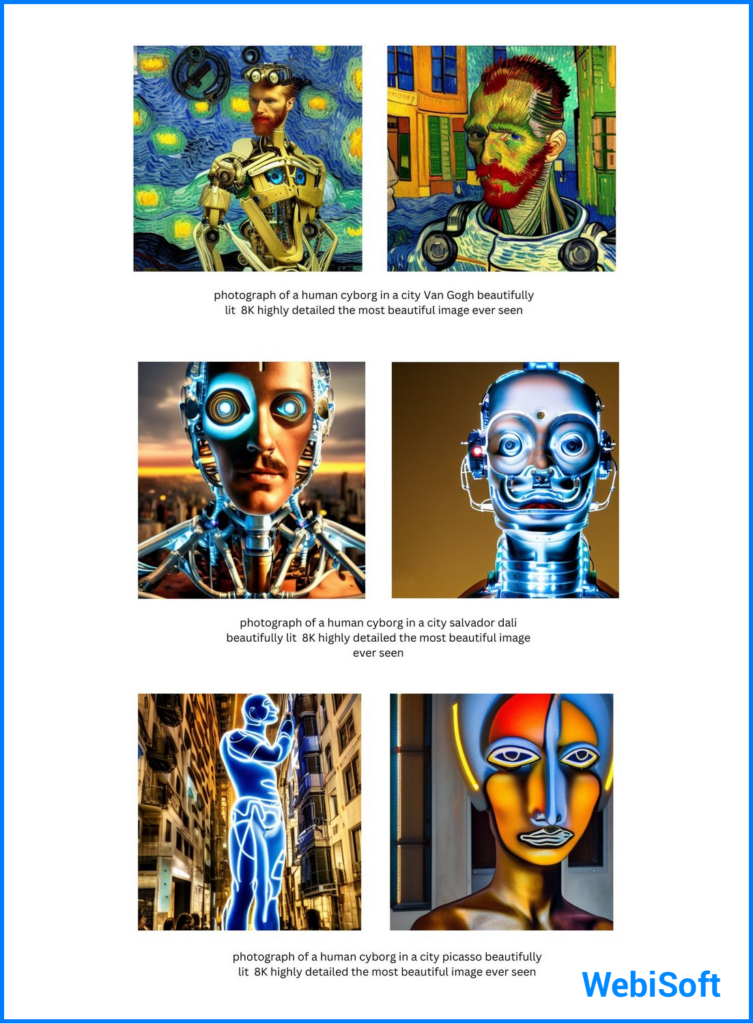

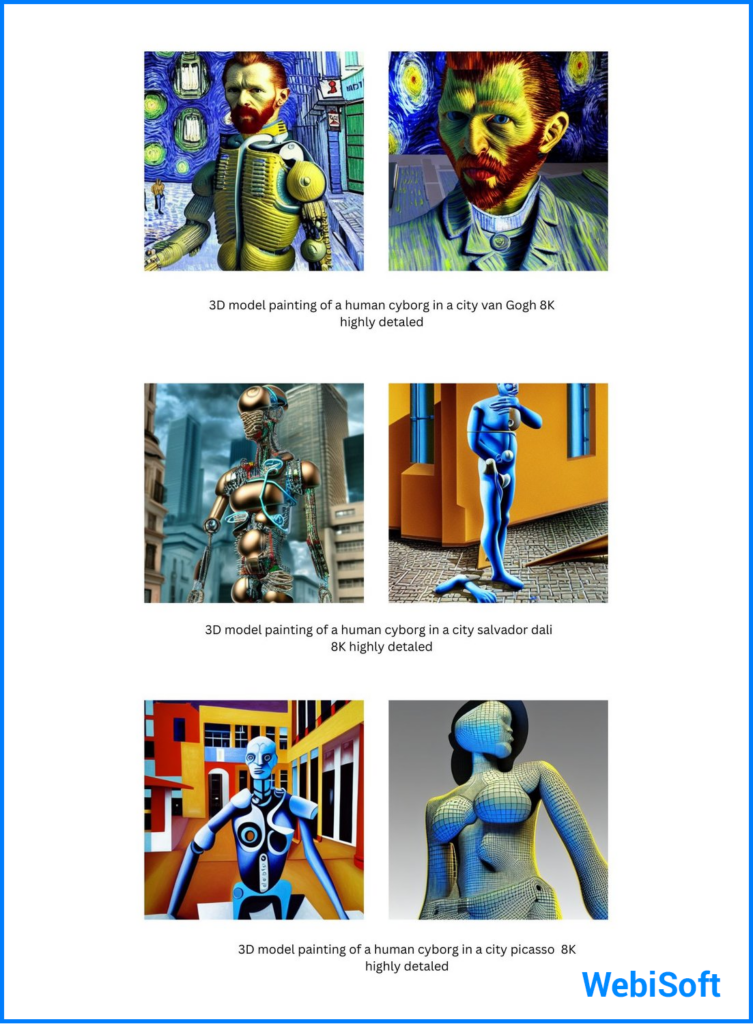

When you blend diverse styles, frames, and sprinkle in an artist, you’re whipping up a unique piece of art. Consider the base prompt “painting of a human cyborg in a city {artist} 8K super detailed.”

Granted, the subject is a tad unconventional, but each painting mirrors the anticipated style of the specific artist.

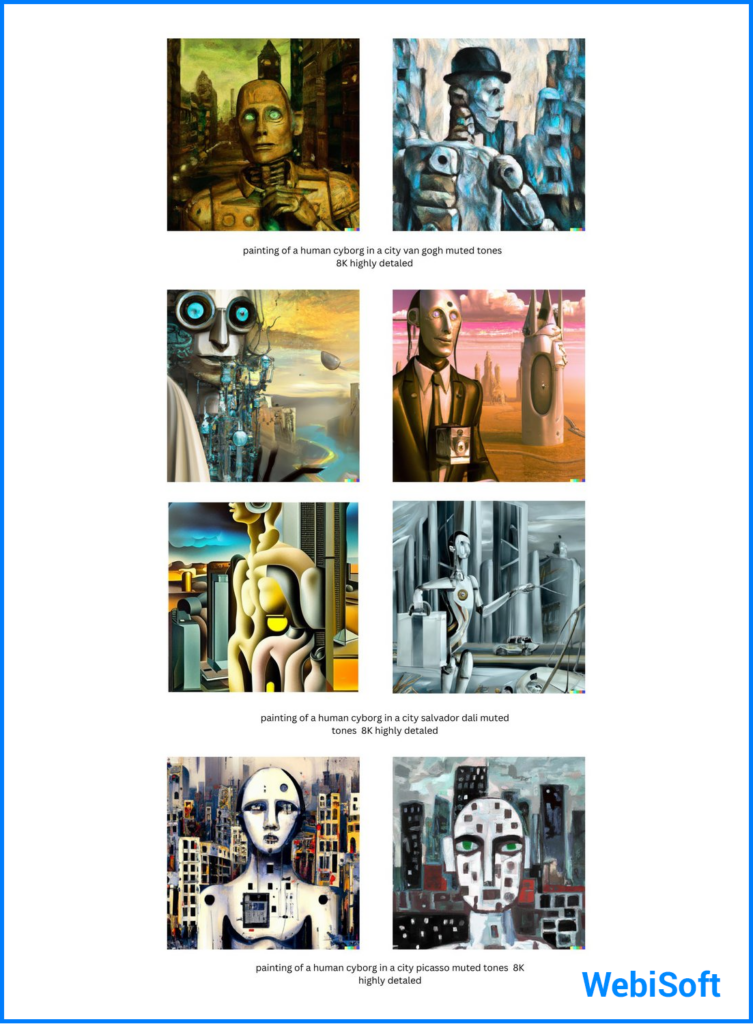

We can switch up the style by tweaking the mood, say to “muted hues.”

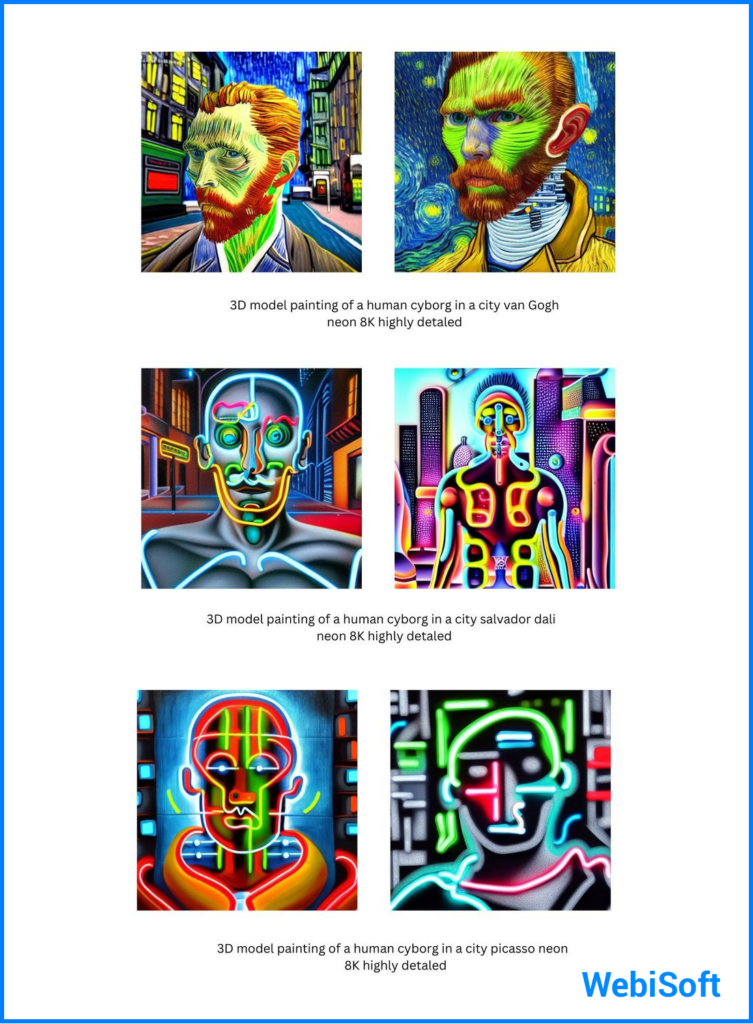

You can push the envelope by tweaking both the frame and the mood to land on something one-of-a-kind, like a “3D model painting” frame with neon hues.

Throw in the descriptor, “the most breathtaking image you’ve ever laid eyes on” and you’re in for a visual treat.

Unique art forms like “3D model paintings” can birth some truly original masterpieces.

By playing with the frame and style of your image, you can spawn some awe-inspiring and fresh results. Why not mix and match style modifiers like “intense illumination” or “faded hues” to put your own spin on your concepts.

This guide is just the tip of the iceberg, and we’re excited to see what brilliant pieces you all come up with!

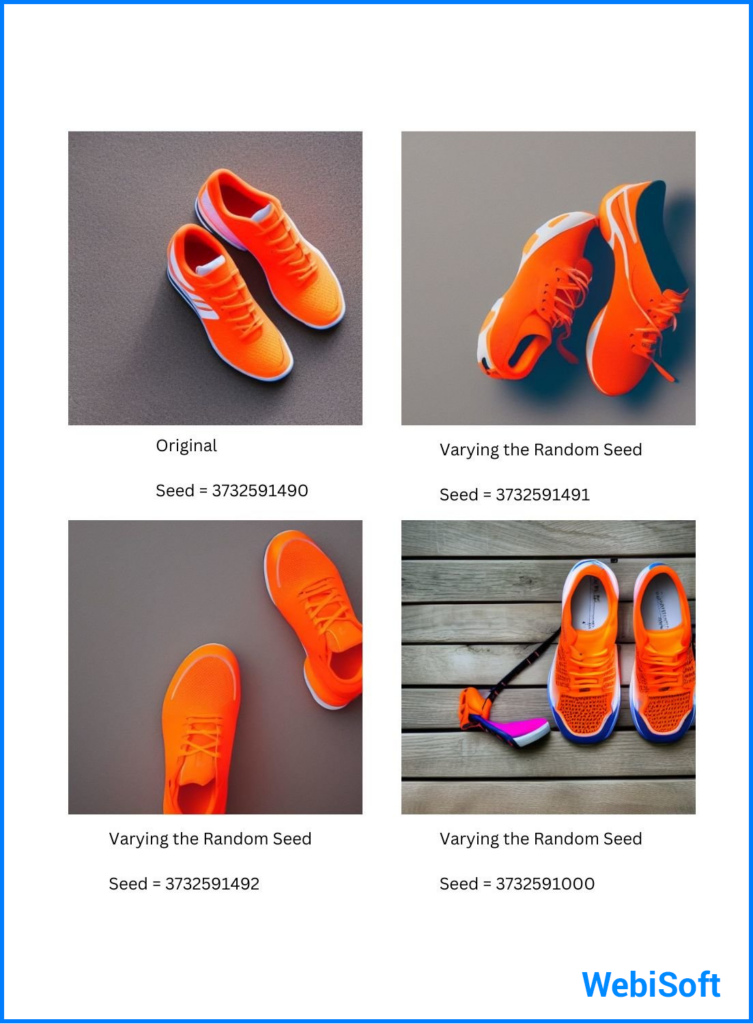

4. Seed

Sticking with the same seed, the same prompt, and the same rendition of Stable Diffusion will always whip up the same image.

Seeing different images for the same prompt? It’s probably because you’re rolling with a random seed, not a fixed one.

For instance, “Bright tangerine sneakers, photo-realistic lighting e-commerce style” could be shuffled up by tweaking the random seed’s value.

Tweaking any of these factors will bring a new image to life. You can pin down the prompt or seed and journey through the latent space by altering the other variable. This approach offers a reliable way to hunt down similar images and slightly change up the visuals.

Adjust the prompt to “Bright cobalt suede formal shoes, photo-realistic lighting e-commerce style” and freeze the seed at 3732591490. The outcome? Images with similar structures but tweaked to align with the updated prompt.

Now, keep that prompt static and journey through the latent space by switching up the seed, and you’ll cook up different variations:

To wrap it up, a solid strategy to structure your prompts could look like “[frame] [main subject] [style type] [modifiers]” or “A [frame type] of a [main subject], [style example]” And an optional seed.

The sequence of these exact phrases could shake up your final result, so if you’re chasing a specific outcome, it’s best to play around with all these values until you’re happy with what you see.

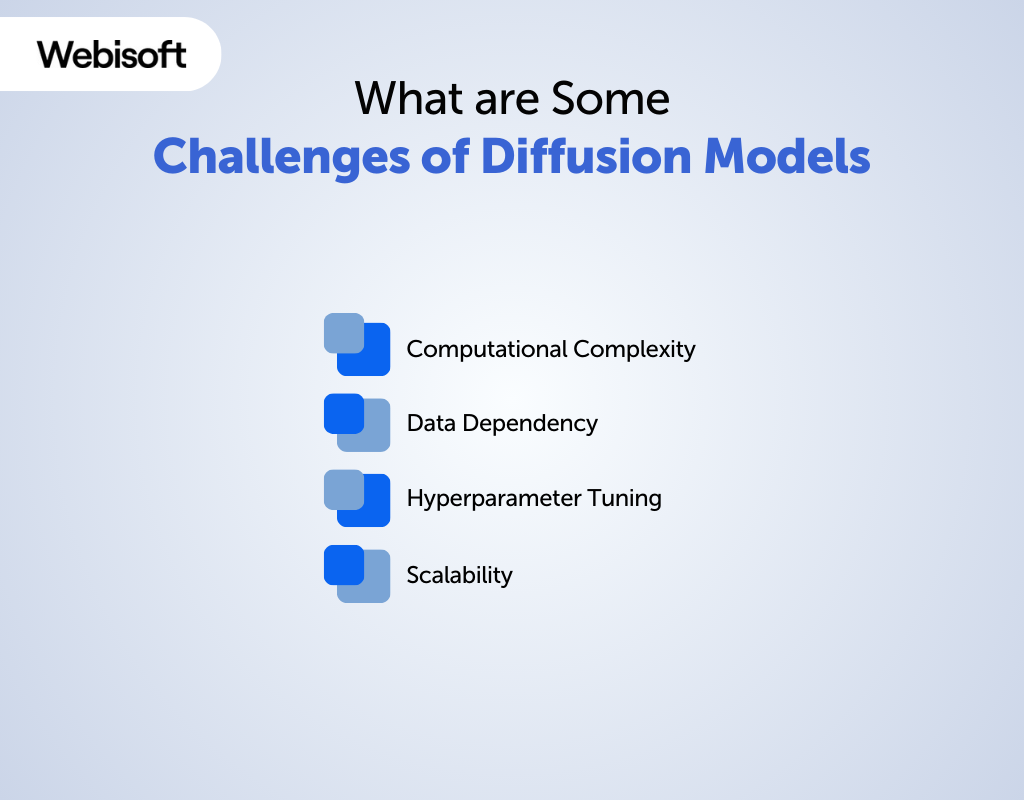

What are Some Challenges of Diffusion Models

While diffusion models possess impressive capabilities, they also come with their own set of challenges:

Computational Complexity

Training diffusion models can be computationally expensive and time-consuming, especially for large datasets and complex data distributions. This complexity can limit their practicality in some applications.

Data Dependency

Diffusion models require a substantial amount of high-quality training data to perform effectively. In cases where data is scarce or of poor quality, these models may struggle to generate accurate samples.

Hyperparameter Tuning

Properly setting hyperparameters is crucial for diffusion models. Finding the right combination of hyperparameters can be a challenging and iterative process, requiring significant experimentation.

Scalability

Scaling diffusion models to handle high-resolution images or large text corpora can be difficult due to memory and computational constraints. This scalability issue limits their application in certain domains.

Future of Training Diffusion Models in Machine Learning

The future of training diffusion model machine learning holds several exciting possibilities and advancements:

Improved Training Efficiency

Researchers are actively working on techniques to make training diffusion models more efficient. This includes developing novel optimization algorithms, parallelization methods, and hardware acceleration to reduce training time and resource requirements.

Scalability

Future diffusion models will likely handle larger and more complex datasets. Scaling up models for tasks like high-resolution image generation and video synthesis will continue to be a focus, enabling more realistic and detailed outputs.

Enhanced Generative Capabilities

Expect diffusion models to generate even more realistic and diverse samples. Researchers are exploring techniques to reduce mode collapse and improve the fidelity of generated data, making them indistinguishable from real data.

Interpretability

There is a growing interest in making diffusion models more interpretable. Techniques for understanding the reasoning behind model decisions and extracting meaningful insights from the latent space are likely to be developed.

Robustness and Security

As diffusion models are increasingly deployed in security-critical applications, research into adversarial robustness and secure deployment will gain importance. Ensuring models are resistant to attacks and safe to use will be a priority.

Why Choose Webisoft to Implement Diffusion to Your Business

Choosing Webisoft for implementing diffusion models in your business offers several advantages:

Machine Learning Expertise

We have a team of highly experienced machine learning engineers who are well-versed in diffusion models. Our expertise ensures that you get top-notch machine-learning solutions.

Customized Solutions

We understand that every business is unique. Instead of offering generic solutions, we take the time to understand your specific needs. Our personalized approach ensures that the diffusion models we create align perfectly with your requirements.

Proven Success

We have a track record of successfully implementing machine learning solutions for a diverse range of clients. Our past achievements demonstrate our competence and reliability in delivering effective solutions.

Cutting-Edge Technology

Staying updated with the latest technological advancements is a priority for us. We continuously keep an eye on the latest developments in machine learning and diffusion models, guaranteeing that your business benefits from the most advanced and innovative solutions.

Scalability

Whether your business is small, medium-sized, or large, we can customize our solutions to fit your needs. We have the flexibility to create diffusion models that can grow along with your business.

Data Security

We place great importance on data privacy and security. We implement strict measures to safeguard your data and ensure compliance with relevant regulations, providing you with peace of mind regarding data handling.

Ongoing Support

Implementing diffusion models is just the beginning. We offer continuous support and maintenance services to ensure that your models consistently perform at their best and remain adaptable to changing business demands.

Competitive Pricing

We offer competitive pricing for our services, ensuring that you receive excellent value for your investment. We work within your budget constraints while delivering high-quality solutions.

Client-Centric Approach

Your input is highly valued, and we actively involve you in the process. Our client-centric approach emphasizes communication and collaboration, guaranteeing that the diffusion models align with your business goals and expectations.

Final Verdict

In summary, we’ve thoroughly explored diffusion models in various domains, uncovering their applications, benefits, challenges, and top models. We’ve also discussed how these models excel in tasks like image generation, text coherence, and data security.

Looking to the future, diffusion models hold great promise. Ongoing research and advancements suggest we can expect even more realistic and diverse data generation, improved interpretability, and increased security measures.

Now, as you start your journey into machine learning and diffusion models, consider the expertise of Webisoft. Our team is ready to transform these insights into customized solutions for your unique business needs.

FAQs

What is diffusion ai?

Diffusion AI refers to the application of diffusion models in the field of artificial intelligence. These models are used for data generation and are known for their ability to transform noisy data into high-quality samples.

Does GPT use diffusion?

No, GPT (Generative Pre-trained Transformer) primarily utilizes a different approach called a transformer architecture for text generation. While diffusion models are used in AI, GPT does not specifically rely on diffusion techniques.

What is the diffusion model in marketing?

In marketing, a diffusion model refers to a mathematical model that predicts how new products or innovations spread and are adopted by consumers over time. It helps businesses understand the adoption curve and plan marketing strategies accordingly.

What is an AI diffusion model?

An AI diffusion model is a generative machine learning approach. It refines a random noise signal iteratively until it closely resembles a target data distribution. This makes it suitable for generating high-dimensional data like images, text, or audio.