Master Python Machine Learning With Fast, Real Results

- January 1, 2026

Python machine learning has quietly become the engine behind products that predict, rank, recommend, and automate. It gives teams the power to turn raw behavior, transactions, and logs into decisions that scale without slowing down.

Yet most explanations stay stuck in toy datasets and beginner tutorials, far from the reality of shipping a working ML system. Real projects need structure, clarity, and choices that hold up under pressure.

This guide gives you exactly that: a practical view of the tools, workflows, and production steps you need to understand to make machine learning with Python deliver real results.

Contents

- 1 What Does “Python Machine Learning” Mean in Real Projects?

- 2 Why Python is Widely Used for Machine Learning

- 3 Python Machine Learning Stack You Will Actually Use

- 4 Python Machine Learning Workflow: From Data to a Working Model

- 5 Data Preprocessing and Feature Engineering That Change Results

- 6 The Most Common Algorithms in Python Machine Learning and How to Choose

- 7 Put machine learning to work with Webisoft!

- 8 Model Evaluation and Tuning That Won’t Mislead You

- 9 Taking Machine Learning with Python From Prototype to Production

- 10 How Webisoft Helps Teams Deliver Python Machine Learning Systems

- 10.1 Clear Problem Framing and Success Criteria

- 10.2 Focused Data Review and Feasibility Check

- 10.3 Pipeline Architecture That Aligns Training and Production

- 10.4 Iterative Model Development With Honest Evaluation

- 10.5 Deployment Built for Your Product Environment

- 10.6 Monitoring and Ongoing Reliability

- 10.7 Engagement Options That Support Your Team

- 11 Put machine learning to work with Webisoft!

- 12 Conclusion

- 13 Frequently Asked Questions

What Does “Python Machine Learning” Mean in Real Projects?

In real teams, Python machine learning usually means “use Python to train a model on historical data so a product can make better decisions on new data.” The model might score leads, flag fraud, route support tickets, forecast demand, or detect defects.

It is less about the algorithm and more about building a repeatable system that produces useful predictions. A practical introduction to Python machine learning starts with what a project needs before any model is trained:

- A decision to improve: Something you already do manually or with rules.

- A target to predict: A label (approved or rejected) or a number (time to deliver).

- Training examples: Old data consisting of features (inputs) and outcomes (labels).

- A measurement plan: How you will judge the model in a way the business accepts.
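To make these ingredients concrete, here is a minimal sketch of a training table for a hypothetical loan-approval decision. The column names and values are invented for illustration; what matters is the separation between features known at decision time and the outcome recorded afterwards.

```python
import pandas as pd

# Hypothetical historical records for a loan-approval decision.
# Features are known at decision time; "approved" is the outcome (label).
history = pd.DataFrame({
    "income": [52000, 31000, 78000, 45000],
    "existing_debt": [12000, 20000, 5000, 15000],
    "years_employed": [4, 1, 9, 3],
    "approved": [1, 0, 1, 0],
})

# Separate the inputs (features) from the target the model should predict.
X = history.drop(columns=["approved"])
y = history["approved"]

print(X.shape)     # (4, 3)
print(y.tolist())  # [1, 0, 1, 0]
```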

When Python machine learning projects fail, it is usually for predictable reasons: vague goals, weak labels, leakage, or “offline wins” that do not survive real traffic. So the “real project” framing looks like this:

- Define the decision and the cost of wrong predictions.

- Check that the data can actually support that decision.

- Train a simple baseline model quickly.

- Improve the system around the model (data quality, evaluation, monitoring).

If you keep that sequence, you can move quickly without guessing.

Why Python is Widely Used for Machine Learning

Python is popular for machine learning because it fits the way ML work happens inside teams: iterate, test ideas, validate with data, then integrate into an app.

The language itself is readable, which helps when engineers, analysts, and product owners all need to review the logic and results. If your goal is to learn Python machine learning, Python also gives you a direct path from learning to shipping:

- You can explore datasets quickly.

- You can train classical ML models with stable tooling.

- You can move the same code into a backend service or a scheduled job.

- You can keep one language across data work and product work in many stacks.

The practical point is not “Python is popular.” The point is that Python reduces friction from experiment to integration.

Python Machine Learning Stack You Will Actually Use

A production-minded Python machine learning setup is not a long list of tools. It is a small set that covers data handling, modeling, evaluation, and packaging. Here is the short stack most teams end up using:

- Jupyter notebooks for exploration and quick checks

- NumPy for arrays and numeric operations

- pandas for tables, joins, and feature preparation

- matplotlib for data visualization and exploratory analysis

- scikit-learn for classical ML on structured data and for building robust ML pipelines

- A packaging and runtime plan (virtualenv, Poetry, or conda; Docker; CI)

When people search for Python machine learning libraries, they often want to know what to learn first. A sensible order is:

- pandas + NumPy for data

- scikit-learn for models and evaluation

- Add deep learning only when the problem needs it (images, audio, large text, complex patterns)

For many business cases on structured data, scikit-learn is the Python machine learning library you start with and often keep, because it covers preprocessing, models, metrics, and pipelines in one place. This is especially true when the work is supported by focused AI model development services.

Python Machine Learning Workflow: From Data to a Working Model

This is a fast, low-waste way to approach machine learning with Python. If you follow these steps in order, you will avoid many common beginner and even mid-level project issues.

Step 1: Define the decision and the target

Write the decision in one sentence. Then define exactly what the model predicts at decision time. For churn, decide whether the model predicts churn within a specific window (for example, the next 30 days). For fraud, decide whether it produces a risk score before a transaction completes.
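As a sketch of turning a churn question into a concrete target, the code below assumes a hypothetical activity log (customer_id, event_date), a prediction made at a fixed cutoff date, and an assumed 30-day window: a customer active before the cutoff is labeled as churned if they show no activity in the 30 days after it.

```python
import pandas as pd

# Hypothetical activity log: one row per customer event.
events = pd.DataFrame({
    "customer_id": [1, 1, 2, 2, 3],
    "event_date": pd.to_datetime(
        ["2025-01-05", "2025-02-10", "2025-01-20", "2025-01-28", "2025-01-15"]
    ),
})

cutoff = pd.Timestamp("2025-02-01")  # the moment the prediction is made
window = pd.Timedelta(days=30)       # assumed churn window

# Customers active up to the cutoff form the population to score.
active_before = events.loc[events["event_date"] <= cutoff, "customer_id"].unique()

# Any activity inside (cutoff, cutoff + window] means the customer did NOT churn.
in_window = (events["event_date"] > cutoff) & (events["event_date"] <= cutoff + window)
retained = set(events.loc[in_window, "customer_id"])

labels = pd.DataFrame({"customer_id": active_before})
labels["churned"] = (~labels["customer_id"].isin(retained)).astype(int)
print(labels)
```

Only events up to the cutoff should feed the features for this label; the events after it exist solely to define the outcome.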

Step 2: Choose a metric that matches the decision

Accuracy is rarely enough, especially when one class is much rarer than the other. If false positives are expensive, track precision. If missing positives is costly, track recall. For regression tasks, choose MAE to measure the average size of errors, or RMSE if large errors should be penalized more heavily.
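A small worked example shows how differently MAE and RMSE react to one large miss. The delivery-time numbers are invented:

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error

# Invented delivery times (hours): four close predictions and one large miss.
y_true = np.array([10.0, 12.0, 11.0, 13.0, 10.0])
y_pred = np.array([11.0, 11.0, 12.0, 12.0, 20.0])

mae = mean_absolute_error(y_true, y_pred)
# RMSE is the square root of MSE; computed explicitly so it works across scikit-learn versions.
rmse = np.sqrt(mean_squared_error(y_true, y_pred))

print(f"MAE:  {mae:.2f}")   # 2.80
print(f"RMSE: {rmse:.2f}")  # 4.56
```

Four errors of 1 hour and one of 10 hours give MAE = 14 / 5 = 2.8, while RMSE = √(104 / 5) ≈ 4.56, because the single large error contributes 100 of the 104 squared-error units.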

Step 3: Look at your data before training

Scan value ranges, missingness, and class balance. Plot a few distributions and review example rows. Many model issues are data issues, and a quick visual check often reveals them earlier than any metric. To practice on clean, structured data, explore Kaggle datasets or the UCI Machine Learning Repository.
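A minimal first-look sketch with pandas, run on a small invented table that stands in for your data, covers ranges, missingness, and class balance in a few lines:

```python
import numpy as np
import pandas as pd

# Small invented dataset standing in for a real table.
df = pd.DataFrame({
    "age": [34, 51, np.nan, 29, 42, 38],
    "plan": ["basic", "pro", "basic", None, "basic", "pro"],
    "monthly_spend": [20.0, 85.5, 19.0, 22.5, 1000.0, 90.0],
    "target": [0, 1, 0, 0, 1, 0],
})

# Value ranges and basic statistics for numeric columns.
print(df.describe())

# Share of missing values per column, highest first.
missing_share = df.isna().mean().sort_values(ascending=False)
print(missing_share)

# Class balance of the target, as proportions.
class_balance = df["target"].value_counts(normalize=True)
print(class_balance)

# A few example rows to eyeball.
print(df.sample(3, random_state=0))
```

In this toy table, describe() shows a maximum spend of 1000.0 next to values around 20 to 90, exactly the kind of suspicious value to investigate before training.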

Step 4: Build a dataset with time awareness

Keep only fields available at prediction time. If events happen over time, split by time so training uses earlier data and testing uses later data. This prevents data leakage and avoids overly optimistic results.
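Here is a sketch of a time-based split, assuming a hypothetical event_time column that records when each row's features were known; the cutoff date is arbitrary:

```python
import pandas as pd

# Hypothetical event table; "event_time" is when each row's features were known.
df = pd.DataFrame({
    "event_time": pd.to_datetime([
        "2025-01-03", "2025-01-15", "2025-02-02", "2025-02-20",
        "2025-03-05", "2025-03-18", "2025-04-01", "2025-04-22",
    ]),
    "feature": [0.2, 0.5, 0.1, 0.9, 0.4, 0.7, 0.3, 0.8],
    "target": [0, 1, 0, 1, 0, 1, 0, 1],
})

# Sort chronologically, then train on rows before the cutoff
# and test on rows at or after it (no shuffling).
df = df.sort_values("event_time")
cutoff = pd.Timestamp("2025-03-15")
train = df[df["event_time"] < cutoff]
test = df[df["event_time"] >= cutoff]

# Sanity check: the newest training row is older than the oldest test row.
assert train["event_time"].max() < test["event_time"].min()
print(len(train), len(test))  # 5 3
```

For repeated evaluation over several time windows, scikit-learn's TimeSeriesSplit offers a similar forward-only scheme on data already sorted by time.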

Step 5: Train a baseline model

The baseline is your reference. It can be logistic regression, a simple tree, or k-nearest neighbors. The point is to create a score you can evaluate and compare.

Below is a short, tutorial-style example of the “baseline first” approach. It assumes a CSV file named data.csv with a categorical target column called target:

import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Load the data; "data.csv" and the "target" column are placeholders for your own
df = pd.read_csv("data.csv")
y = df["target"]
X = df.drop(columns=["target"])

# Route numeric and categorical columns to different preprocessing
num_cols = X.select_dtypes(include=["number"]).columns
cat_cols = X.select_dtypes(include=["object", "category", "bool", "string"]).columns

pre = ColumnTransformer(
    [
        # Numeric: fill gaps with the median, then standardize
        ("num", Pipeline([("imputer", SimpleImputer(strategy="median")),
                          ("scaler", StandardScaler())]), num_cols),
        # Categorical: fill gaps with the most frequent value, then one-hot encode;
        # categories unseen during training become all-zero rows
        ("cat", Pipeline([("imputer", SimpleImputer(strategy="most_frequent")),
                          ("onehot", OneHotEncoder(handle_unknown="ignore"))]), cat_cols),
    ]
)

# Preprocessing and classifier are fit together as one unit
model = Pipeline([("preprocess", pre),
                  ("clf", LogisticRegression(max_iter=1000))])

# Hold out 20% for testing; stratify keeps class proportions similar in both sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

model.fit(X_train, y_train)
pred = model.predict(X_test)
print(classification_report(y_test, pred))
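A baseline is easier to judge when you also know what a trivial model scores. The sketch below compares logistic regression with scikit-learn's DummyClassifier, which always predicts the most frequent class, on synthetic imbalanced data from make_classification standing in for data.csv:

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced binary problem (about 90% negatives) standing in for real data.
X, y = make_classification(n_samples=2000, n_features=10, weights=[0.9, 0.1], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Trivial reference point: always predict the majority class.
dummy = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
logreg = LogisticRegression(max_iter=1000).fit(X_train, y_train)

dummy_acc = dummy.score(X_test, y_test)
dummy_f1 = f1_score(y_test, dummy.predict(X_test), zero_division=0)
logreg_f1 = f1_score(y_test, logreg.predict(X_test))

print(f"Dummy accuracy: {dummy_acc:.2f}, F1: {dummy_f1:.2f}")
print(f"Logistic regression F1: {logreg_f1:.2f}")
```

On data like this the dummy reaches high accuracy simply because most rows are negative, yet its F1 on the positive class is 0, which is why Step 2 warns against relying on accuracy alone.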

Step 6: Inspect errors and iterate

Review false positives and false negatives. Look for label noise, missing signals, and feature definitions that are too broad. Improve one thing at a time so you can attribute gains to a specific change. If you have data and a clear target but the workflow feels uncertain, contact Webisoft. Webisoft can review your dataset structure, labels, and success metric, then deliver a baseline model and a path to production that your team can maintain.
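A sketch of the error review on a small invented results table; with the pipeline above, a similar table could be built with X_test.assign(y_true=y_test, y_pred=pred). The column names here are hypothetical:

```python
import pandas as pd

# Hypothetical test-set results: features plus the true label and the prediction.
results = pd.DataFrame({
    "ticket_length": [120, 45, 300, 80, 210, 60],
    "channel": ["email", "chat", "email", "phone", "chat", "email"],
    "y_true": [1, 0, 1, 0, 1, 0],
    "y_pred": [1, 1, 0, 0, 1, 0],
})

# False positives: predicted 1, actually 0. False negatives: predicted 0, actually 1.
false_positives = results[(results["y_pred"] == 1) & (results["y_true"] == 0)]
false_negatives = results[(results["y_pred"] == 0) & (results["y_true"] == 1)]

print("False positives:\n", false_positives)
print("False negatives:\n", false_negatives)

# Look for patterns, e.g. whether errors cluster in one channel.
print(false_positives["channel"].value_counts())
```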

Data Preprocessing and Feature Engineering That Change Results

Preprocessing is where many Python machine learning projects break, especially when results look good in a notebook but fail in testing or real usage. Here are the moves that most often change outcomes:

Clean missing and inconsistent values

- Decide what “missing” means per field.

- For numeric fields, median imputation is often a reasonable baseline.

- For categories, most-frequent imputation is a safe start.
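A sketch of median imputation with scikit-learn's SimpleImputer on a toy column. The add_indicator=True option, an addition beyond the bullets above, appends a 0/1 column marking which values were missing, which helps when “missing” itself carries meaning:

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Numeric column with a gap; np.nan marks the missing value.
X = np.array([[1.0], [np.nan], [3.0], [10.0]])

# Median of the observed values [1, 3, 10] is 3.
# add_indicator=True appends a "was missing" flag column.
imputer = SimpleImputer(strategy="median", add_indicator=True)
X_filled = imputer.fit_transform(X)

# Rows become [1, 0], [3, 1], [3, 0], [10, 0].
print(X_filled)
```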

Encode categorical variables properly

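- Tree-based models often work with simple ordinal (integer) encoding, and some, such as scikit-learn's histogram-based gradient boosting models, can handle categorical features natively.

- Linear models typically need one-hot encoding for categories.

- Plan for categories that appear only after training, for example with OneHotEncoder(handle_unknown="ignore").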
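A short sketch of unknown-category handling with OneHotEncoder(handle_unknown="ignore"); the plan names are invented:

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

train_plans = np.array([["basic"], ["pro"], ["basic"]])
new_plans = np.array([["pro"], ["enterprise"]])  # "enterprise" never appeared in training

encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(train_plans)

# Known categories get a 1 in their column; unknown ones become an all-zero row.
encoded = encoder.transform(new_plans).toarray()
print(encoder.categories_)
print(encoded)
```

The unseen value “enterprise” becomes a row of zeros instead of raising an error at prediction time.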

Scale when the model cares about magnitude

Scaling often matters for:

- Logistic regression

- SVM

- kNN

- Neural nets

Tree-based models care much less about scaling, because their splits depend only on the order of values within each feature.
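A toy example, with invented numbers, of why magnitude matters for distance-based models such as kNN. Income is measured in dollars and tenure in years, so before scaling, income dominates the distance:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Invented customers: [annual income in dollars, years as customer]
X = np.array([
    [50_000.0, 1.0],
    [50_500.0, 9.0],   # close in income, very different tenure
    [53_000.0, 1.5],   # further in income, similar tenure
    [80_000.0, 5.0],
])

def nearest_neighbor(points, i):
    # Index of the point closest to points[i] by Euclidean distance, excluding i itself.
    d = np.linalg.norm(points - points[i], axis=1)
    d[i] = np.inf
    return int(np.argmin(d))

raw_nn = nearest_neighbor(X, 0)
X_scaled = StandardScaler().fit_transform(X)
scaled_nn = nearest_neighbor(X_scaled, 0)
print(raw_nn, scaled_nn)
```

Without scaling, the nearest neighbor of the first customer is the one with almost the same income but a very different tenure (index 1); after StandardScaler, it is the one that is close on both features (index 2).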

Prevent leakage

Leakage happens when a feature includes information that would not exist at prediction time. Common leakage examples:

- Using “status after approval” as an input when predicting approval

- Including “refund issued” when predicting churn

- Training on random splits for a time-based system and accidentally learning future patterns

A useful rule: If a human would not know it at decision time, the model should not see it.
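One cheap screen for leakage, offered as a heuristic rather than a guarantee, is to check how well each feature predicts the target on its own: a single column with near-perfect ROC AUC deserves scrutiny. The data below is synthetic, with refund_issued deliberately copied from the label to mimic the churn example above; the 0.95 threshold is an arbitrary assumption.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import roc_auc_score

rng = np.random.default_rng(0)
n = 500
churned = rng.integers(0, 2, size=n)

df = pd.DataFrame({
    "tenure_months": rng.integers(1, 60, size=n) - 5 * churned,  # weakly related
    "support_tickets": rng.poisson(2, size=n) + churned,          # weakly related
    "refund_issued": churned,  # leaky: only recorded after the customer left
})

def flag_suspicious_features(X, y, threshold=0.95):
    """Return columns whose single-feature ROC AUC is suspiciously high."""
    flagged = {}
    for col in X.columns:
        auc = roc_auc_score(y, X[col])
        auc = max(auc, 1 - auc)  # a strong negative relationship is just as suspicious
        if auc >= threshold:
            flagged[col] = round(float(auc), 3)
    return flagged

suspicious = flag_suspicious_features(df, churned)
print(suspicious)  # expected to flag only refund_issued
```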

Feature engineering that stays simple

You rarely need clever tricks early. Good “first pass” features often look like:

- Ratios (spend per visit)

- Time deltas (days since last action)

- Rolling counts (events in last 7 days)

- High-cardinality handling (grouping rare categories to “other”)
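Here is a sketch of these four feature types with pandas. The tables, the prediction time as_of, and the min_count threshold for rare categories are all invented for illustration:

```python
import pandas as pd

as_of = pd.Timestamp("2025-03-02")  # the prediction time

# Hypothetical per-customer summary and raw event log.
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "total_spend": [300.0, 50.0, 0.0],
    "visits": [10, 5, 0],
    "last_action": pd.to_datetime(["2025-03-01", "2025-02-15", "2025-01-10"]),
})
events = pd.DataFrame({
    "customer_id": [1, 1, 2, 1, 3],
    "event_time": pd.to_datetime(
        ["2025-02-24", "2025-02-27", "2025-02-20", "2025-03-01", "2025-01-10"]
    ),
})

# Ratio: spend per visit; zero visits becomes NaN instead of a division error.
visits = customers["visits"].where(customers["visits"] > 0)
customers["spend_per_visit"] = customers["total_spend"] / visits

# Time delta: whole days since the last action.
customers["days_since_last_action"] = (as_of - customers["last_action"]).dt.days

# Rolling count: events in the 7 days up to the prediction time.
in_window = (events["event_time"] > as_of - pd.Timedelta(days=7)) & (events["event_time"] <= as_of)
counts = events[in_window].groupby("customer_id").size()
customers["events_last_7d"] = customers["customer_id"].map(counts).fillna(0).astype(int)

# High-cardinality handling: categories seen fewer than min_count times become "other".
cities = pd.Series(["Montreal", "Toronto", "Montreal", "Gander", "Toronto", "Inuvik"])
min_count = 2  # assumed threshold
freq = cities.value_counts()
cities_grouped = cities.where(cities.map(freq) >= min_count, "other")

print(customers)
print(cities_grouped.tolist())
```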

Feature work becomes much easier when the business can explain each feature in one sentence.

The Most Common Algorithms in Python Machine Learning and How to Choose

Algorithm choice is a tool choice. In machine learning with Python, the right “algorithm” is usually the one that gives a clean baseline and can be explained in a review. Below are the algorithms you will see most often, with selection cues.

Linear models (logistic regression, linear regression)

Use when:

- You need something fast to train.

- You want explanations (coefficients).

- You have many features and expect simple relationships.

Avoid when:

- The relationship is strongly nonlinear and feature work is limited.

Tree-based algorithms (decision trees, random forest, gradient boosting)

Use when:

- Your data is tabular and messy.

- Nonlinear relationships matter.

- You want strong baseline performance without complex feature creation.

Watch for:

- Overfitting on small datasets.

- Feature leakage still applies.

k-Nearest Neighbors (kNN)

Use when:

- You want a simple baseline.

- The dataset is not huge.

- Features are properly scaled and meaningful.

Avoid when:

- You need fast inference on large datasets (kNN compares each query against the stored training data, so prediction gets slower as the dataset grows).

Naive Bayes

Use when:

- Text classification is part of the problem.

- You need a quick baseline.

- You want something simple to deploy.

Support Vector Machines (SVM)

Use when:

- The dataset is medium-sized.

- You can tune carefully.

- You can scale features properly.

Avoid when:

- You need fast training on very large datasets without extra work.

Neural nets and deep learning

Use when:

- You have images, audio, or complex text.

- You have enough training data and the ability to run training reliably.

For many product teams, deep learning is not step one. It is step three or step four, after data quality and evaluation are in shape.
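One way to put these selection cues into practice is to compare a few candidates under identical cross-validation. The sketch below uses synthetic data from make_classification, and the model settings (such as n_neighbors=15) are arbitrary starting points, not recommendations:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic tabular data standing in for a real problem.
X, y = make_classification(n_samples=1000, n_features=12, n_informative=5, random_state=0)

candidates = {
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "knn": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=15)),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Same folds for every model, so the comparison is like-for-like.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = {}
for name, model in candidates.items():
    fold_scores = cross_val_score(model, X, y, cv=cv, scoring="f1")
    scores[name] = fold_scores.mean()
    print(f"{name:>20}: F1 {fold_scores.mean():.3f} ± {fold_scores.std():.3f}")
```

Scale-sensitive models (logistic regression, kNN) get a StandardScaler inside their pipeline, while the random forest does not need one; using the same folds for every candidate keeps the comparison fair.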

Put machine learning to work with Webisoft!

Book a free consultation to review your data, goals, and delivery plan!

Model Evaluation and Tuning That Won’t Mislead You

Evaluation is where machine learning with Python becomes honest. Without the right evaluation design, you can end up shipping a model that looks great in tests but does not help the product.

Start with the right split strategy

Use splits that match how the model will be used:

- Random split can work for stable datasets without time effects.

- Time-based split is often needed for forecasting, churn, fraud, and anything where behavior shifts.

Pick metrics that match the decision

Classification examples:

- Precision: how often “positive” predictions are correct

- Recall: how many real positives you find

- F1: the harmonic mean of precision and recall

Regression examples:

- MAE: average absolute error

- RMSE: penalizes large errors more

If the business cost of false positives is high, do not judge the model mainly by accuracy.
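A worked example with invented labels makes these definitions concrete. Ten cases contain four real positives; the model flags five, of which three are correct:

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Invented labels: 4 real positives among 10 cases.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# The model flags 5 cases: 3 real positives and 2 false alarms.
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

precision = precision_score(y_true, y_pred)  # 3 / 5
recall = recall_score(y_true, y_pred)        # 3 / 4
f1 = f1_score(y_true, y_pred)                # harmonic mean of the two
accuracy = accuracy_score(y_true, y_pred)    # 7 / 10

print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f} accuracy={accuracy:.3f}")
```

Precision is 3 / 5 = 0.6, recall is 3 / 4 = 0.75, and F1 is 2 × 0.6 × 0.75 / (0.6 + 0.75) ≈ 0.667, while accuracy is 7 / 10 = 0.7.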

Use a confusion matrix mindset

Even without drawing it, think in terms of:

- True positives, false positives

- True negatives, false negatives

This keeps you focused on the kind of mistakes the product can tolerate.
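These four counts can be pulled from scikit-learn's confusion_matrix. Attaching a cost to each error type, here invented values where a missed positive costs ten times a false alarm, turns the counts into something a product owner can weigh:

```python
from sklearn.metrics import confusion_matrix

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

# For labels [0, 1], rows are actual classes and columns are predictions,
# so ravel() yields the cells in the order tn, fp, fn, tp.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
print(f"TP={tp} FP={fp} TN={tn} FN={fn}")  # TP=3 FP=2 TN=4 FN=1

# Hypothetical per-error costs.
cost_fp, cost_fn = 5.0, 50.0
total_cost = fp * cost_fp + fn * cost_fn
print(total_cost)  # 60.0
```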

Tune hyperparameters with discipline

A sensible tuning approach:

- Establish a baseline.

- Choose a small parameter grid.

- Use cross-validation where it fits.

- Compare against baseline with the same split strategy.

Avoid tuning on the test set. Keep the test set for final checks.
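A sketch of disciplined tuning with GridSearchCV on synthetic data: the test set is split off first, a deliberately small grid over the regularization strength C is searched with 5-fold cross-validation on the training portion only, and the test set is used once at the end. The grid values are arbitrary examples:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)

# Set the test set aside first; tuning only ever sees the training portion.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

pipe = Pipeline([("scaler", StandardScaler()),
                 ("clf", LogisticRegression(max_iter=1000))])

# A small grid over regularization strength; "clf__C" targets the "clf" step.
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(pipe, param_grid, cv=5, scoring="f1")
search.fit(X_train, y_train)

print("Best params:", search.best_params_)
print(f"Best CV F1: {search.best_score_:.3f}")

# One final check on the untouched test set, using the refit best model.
test_f1 = search.score(X_test, y_test)
print(f"Test F1: {test_f1:.3f}")
```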

Use pipelines so training matches testing

Pipelines connect preprocessing and model training as one unit. This prevents common mistakes such as scaling the full dataset before splitting. If you only change one habit in your work, make it this: train and test through a single pipeline.
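A sketch of this habit on synthetic data. Calling fit_transform on all of X before cross-validation lets each test fold influence the scaler; putting StandardScaler inside the pipeline makes cross_val_score refit it on each training fold only:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=500, n_features=8, random_state=1)

# Leaky pattern (avoid): the scaler sees every row, including future test folds.
X_scaled_all = StandardScaler().fit_transform(X)
leaky_scores = cross_val_score(LogisticRegression(max_iter=1000), X_scaled_all, y, cv=5)

# Safe pattern: the scaler is refit inside each training fold.
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
safe_scores = cross_val_score(pipe, X, y, cv=5)

print(f"leaky: {leaky_scores.mean():.3f}  pipeline: {safe_scores.mean():.3f}")
```

On this synthetic data the two numbers may be nearly identical, because scaling leaks very little; the gap tends to grow with steps that learn more from the data, such as feature selection. The pipeline version is correct either way.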

Taking Machine Learning with Python From Prototype to Production

A prototype is a model that runs once. A production system is a model that runs every day, handles messy inputs, and can be observed. This is where many Python machine learning efforts stall, so it helps to plan for it early.

Package the full prediction path

Production inference needs:

- The same preprocessing used in training

- The same feature names and ordering

- Stable handling for missing data and new categories

Treat preprocessing as part of the model, not a separate script.
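A sketch of packaging the whole prediction path: preprocessing and model are fit as one scikit-learn Pipeline and saved as a single file with joblib, a common choice for persisting scikit-learn objects. The tiny fraud table and its column names are invented. Only load such files from trusted sources, and load them with the same library versions used for training.

```python
import tempfile
from pathlib import Path

import joblib
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline, make_pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

train = pd.DataFrame({
    "amount": [10.0, 250.0, 40.0, 900.0, 15.0, 600.0],
    "channel": ["web", "store", "web", "app", "store", "app"],
    "is_fraud": [0, 1, 0, 1, 0, 1],
})
X, y = train[["amount", "channel"]], train["is_fraud"]

pre = ColumnTransformer([
    ("num", make_pipeline(SimpleImputer(strategy="median"), StandardScaler()), ["amount"]),
    ("cat", make_pipeline(SimpleImputer(strategy="most_frequent"),
                          OneHotEncoder(handle_unknown="ignore")), ["channel"]),
])
model = Pipeline([("preprocess", pre), ("clf", LogisticRegression())]).fit(X, y)

# Persist preprocessing and model together as ONE artifact.
path = Path(tempfile.mkdtemp()) / "model.joblib"
joblib.dump(model, path)
loaded = joblib.load(path)

# Raw production-style input: a missing amount and a never-seen channel.
incoming = pd.DataFrame({"amount": [np.nan, 700.0], "channel": ["kiosk", "app"]})
scores = loaded.predict_proba(incoming)[:, 1]
print(scores)
```

Because imputation and unknown-category handling live inside the saved pipeline, the scoring service never needs its own copy of the preprocessing logic.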

Choose an inference pattern

When models move into real applications, this step is often handled as part of broader AI product development services. Common patterns:

- Batch scoring: run nightly or hourly, write results to a database

- Real-time API: score on request, return prediction to the app

- Hybrid: batch for most users, real-time for edge cases

Batch is often simpler and cheaper for early releases.
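A minimal sketch of the batch pattern: score a table of customers and append the results, with a model version and timestamp, to a database table. The predictions table schema, the column names, and the stand-in model are hypothetical, and an in-memory SQLite database replaces a real one:

```python
import sqlite3
from datetime import datetime, timezone

import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)

# Stand-in model trained on random data; in practice, load your saved pipeline.
X_train = rng.normal(size=(200, 3))
y_train = (X_train[:, 0] + X_train[:, 1] > 0).astype(int)
model = LogisticRegression().fit(X_train, y_train)

def run_batch_scoring(model, batch, conn, model_version):
    """Score every row and append results to a hypothetical `predictions` table."""
    features = batch[["f1", "f2", "f3"]].to_numpy()
    results = pd.DataFrame({
        "customer_id": batch["customer_id"].to_numpy(),
        "score": model.predict_proba(features)[:, 1],
        "model_version": model_version,
        "scored_at": datetime.now(timezone.utc).isoformat(),
    })
    results.to_sql("predictions", conn, if_exists="append", index=False)
    return len(results)

# A simulated nightly batch of five customers.
batch = pd.DataFrame(rng.normal(size=(5, 3)), columns=["f1", "f2", "f3"])
batch["customer_id"] = [101, 102, 103, 104, 105]

conn = sqlite3.connect(":memory:")  # in-memory DB keeps the sketch self-contained
written = run_batch_scoring(model, batch, conn, model_version="v1")
stored = pd.read_sql("SELECT customer_id, score, model_version FROM predictions", conn)
print(written)
print(stored)
```

Storing the model version and timestamp with each score makes later debugging and delayed evaluation much easier.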

Add monitoring you can act on

At minimum, track:

- Input data drift (feature distributions changing)

- Prediction drift (scores shift over time)

- Performance drift (when labels become available later)

If labels arrive late (for example, churn), plan for delayed evaluation.
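One widely used drift statistic for a single feature is the population stability index (PSI), which compares binned distributions of a reference sample and a recent sample. The sketch below is a simple implementation using quantile bins from the reference data; the data is simulated, and the alert thresholds teams use (0.1 and 0.25 are common rules of thumb) are conventions, not standards:

```python
import numpy as np

def population_stability_index(expected, actual, bins=10):
    """PSI of one numeric feature: reference sample `expected` vs recent sample `actual`."""
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # Quantile bin edges from the reference sample (assumes few tied values).
    edges = np.quantile(expected, np.linspace(0, 1, bins + 1))
    # Clip recent values so anything outside the reference range lands in an end bin.
    actual = np.clip(actual, edges[0], edges[-1])
    exp_share = np.histogram(expected, bins=edges)[0] / len(expected)
    act_share = np.histogram(actual, bins=edges)[0] / len(actual)
    # A small floor avoids log(0) and division by zero for empty bins.
    exp_share = np.clip(exp_share, 1e-6, None)
    act_share = np.clip(act_share, 1e-6, None)
    return float(np.sum((act_share - exp_share) * np.log(act_share / exp_share)))

rng = np.random.default_rng(42)
reference = rng.normal(loc=50, scale=10, size=10_000)       # e.g. the training data
recent_same = rng.normal(loc=50, scale=10, size=5_000)      # no drift
recent_shifted = rng.normal(loc=58, scale=10, size=5_000)   # mean has drifted

psi_same = population_stability_index(reference, recent_same)
psi_shifted = population_stability_index(reference, recent_shifted)
print(f"no drift: {psi_same:.3f}  drifted: {psi_shifted:.3f}")
```

The unshifted sample gives a PSI close to 0, while the sample whose mean moved from 50 to 58 gives a clearly larger value.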

Add testing

Production ML needs tests similar to other software:

- Schema checks (columns and types)

- Range checks (values within expected bounds)

- Unit tests for feature functions

- Smoke tests for the scoring path
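A sketch of schema and range checks as plain Python, with pytest-style test functions. The expected columns, dtype predicates, and bounds are hypothetical placeholders for your own input contract:

```python
import pandas as pd
from pandas.api.types import is_float_dtype, is_integer_dtype, is_string_dtype

# Hypothetical input contract for the scoring path.
EXPECTED_SCHEMA = {
    "customer_id": is_integer_dtype,
    "amount": is_float_dtype,
    "channel": is_string_dtype,
}
EXPECTED_RANGES = {"amount": (0.0, 100_000.0)}

def validate_input(df: pd.DataFrame) -> list[str]:
    """Return human-readable problems; an empty list means the batch passes."""
    problems = []
    for col, check in EXPECTED_SCHEMA.items():
        if col not in df.columns:
            problems.append(f"missing column: {col}")
        elif not check(df[col]):
            problems.append(f"{col}: unexpected dtype {df[col].dtype}")
    for col, (low, high) in EXPECTED_RANGES.items():
        if col in df.columns:
            values = df[col].dropna()
            out_of_range = values[(values < low) | (values > high)]
            if not out_of_range.empty:
                problems.append(f"{col}: {len(out_of_range)} value(s) outside [{low}, {high}]")
    return problems

def test_valid_batch_passes():
    df = pd.DataFrame({"customer_id": [1, 2], "amount": [10.0, 20.5], "channel": ["web", "app"]})
    assert validate_input(df) == []

def test_out_of_range_amount_is_flagged():
    df = pd.DataFrame({"customer_id": [1], "amount": [-5.0], "channel": ["web"]})
    assert validate_input(df) == ["amount: 1 value(s) outside [0.0, 100000.0]"]

# pytest discovers the test_ functions automatically; calling them directly also works.
test_valid_batch_passes()
test_out_of_range_amount_is_flagged()
```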

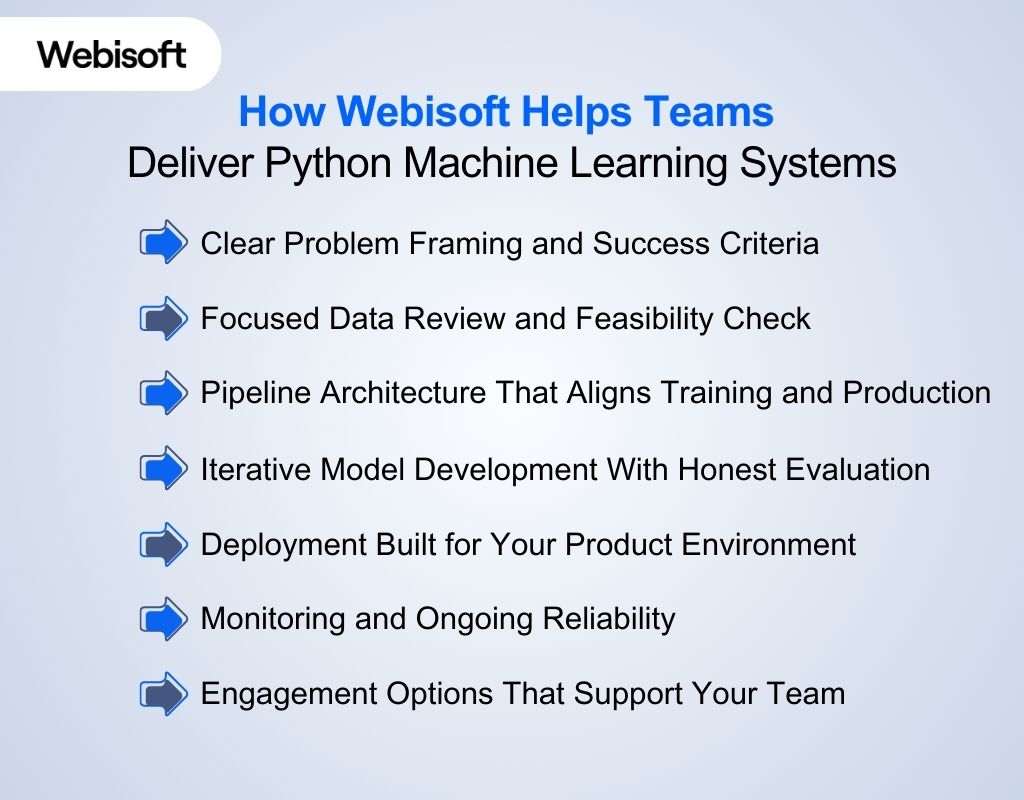

How Webisoft Helps Teams Deliver Python Machine Learning Systems

Teams often reach a stage where experiments work in notebooks, but turning them into a dependable product workflow feels unclear. Webisoft helps close that gap. Our company supports organizations that want Python machine learning systems shaped around real decisions, stable pipelines, and predictable performance.

Clear Problem Framing and Success Criteria

Webisoft begins by understanding the decision your model will influence. Our team defines the target, selects metrics that reflect business impact, and sets expectations that guide the entire project.

Focused Data Review and Feasibility Check

A structured data audit follows. Webisoft examines label quality, leakage risks, time ordering, and feature stability to confirm the problem is solvable and to highlight needed adjustments early.

Pipeline Architecture That Aligns Training and Production

Webisoft designs a practical ML pipeline covering preprocessing, training, validation, and inference. This ensures training and production behavior remain aligned, which reduces surprises after deployment.

Iterative Model Development With Honest Evaluation

Models are improved in controlled steps. Our team trains baselines, evaluates them with appropriate metrics, and refines features or parameters with clear documentation behind each improvement.

Deployment Built for Your Product Environment

When the model is ready, Webisoft packages it into a batch workflow or real-time API. Input validation, fallbacks, and predictable inference behavior are included so the system holds up during real usage.

Monitoring and Ongoing Reliability

Webisoft sets up monitoring for input drift, prediction changes, and long-term performance. Retraining triggers and update routines keep the system reliable as data and behavior evolve.

Engagement Options That Support Your Team

We offer flexible collaboration: fixed-scope MVPs, dedicated ML support, or targeted improvements to existing models. Each approach strengthens your internal capability rather than adding complexity.

Put machine learning to work with Webisoft!

Book a free consultation to review your data, goals, and delivery plan!

Conclusion

You’ve now seen how a complete Python machine learning workflow takes shape, from the early decisions to the final evaluation steps that determine whether a model can be trusted. With the right structure, Python gives you everything you need to move from experimentation to something that consistently supports real decisions. When you’re ready to put that structure into practice, Webisoft can help you build the components that matter most: clean pipelines, stable deployments, and a system your product can rely on over time.

Frequently Asked Questions

Is Python good for machine learning in production?

Yes. Python works well in production when preprocessing, inference paths, and dependencies are packaged consistently. Most failures come from weak processes, missing validation, or poor monitoring rather than limitations of the language itself.

Should I start with scikit-learn or deep learning?

Begin with scikit-learn for structured datasets because it provides strong baselines with minimal complexity. Move to deep learning only when the task demands it, such as image, audio, or large-scale text problems where classical models are insufficient.

How do I evaluate a model if labels arrive later?

Log predictions with IDs, features, and timestamps, then evaluate once labels become available. This setup supports delayed scoring, helps compare model versions correctly, and ensures performance monitoring stays reliable despite late-arriving outcomes.
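A sketch of delayed evaluation with pandas: predictions are logged with IDs and a model version at scoring time, and labels that arrive later are joined on the ID. All names and values are invented:

```python
import pandas as pd
from sklearn.metrics import recall_score

# Hypothetical prediction log written at scoring time.
prediction_log = pd.DataFrame({
    "prediction_id": [1, 2, 3, 4, 5, 6],
    "customer_id": [10, 11, 12, 13, 14, 15],
    "scored_at": pd.to_datetime(["2025-01-01"] * 6),
    "model_version": ["v1", "v1", "v1", "v2", "v2", "v2"],
    "predicted_churn": [1, 0, 1, 1, 0, 0],
})

# Labels that arrive weeks later; customer 14's outcome is not known yet.
labels = pd.DataFrame({
    "customer_id": [10, 11, 12, 13, 15],
    "churned": [1, 0, 0, 1, 1],
})

# An inner join keeps only predictions whose outcome is already known.
evaluated = prediction_log.merge(labels, on="customer_id", how="inner")

# Compare model versions on the labeled subset.
recall_by_version = {}
for version, group in evaluated.groupby("model_version"):
    recall_by_version[version] = recall_score(
        group["churned"], group["predicted_churn"], zero_division=0
    )
print(recall_by_version)
```

Rows without a label yet (customer 14 here) simply drop out of the inner join until their outcome arrives.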

How long does it take to learn and implement Python machine learning?

Timelines vary widely. Learning the fundamentals of Python machine learning often takes a few months of steady practice (two to four months is a common estimate), while building and deploying a single production-ready project can take roughly three to six months, depending on the complexity of the data and the required model accuracy.