Multimodal AI in Healthcare: Use Cases and 2025 Trends

- Artificial Intelligence

- October 6, 2025

Over the past few years, I’ve noticed a big shift in how healthcare teams talk about AI. Not long ago, the conversation centered on single-purpose systems that analyzed text, images, or structured data in isolation.

That era is behind us now.

Today, the focus is on multimodal AI. This approach brings together information that used to sit in silos: electronic health records, imaging scans, genomic sequencing, and even data from wearables. When you look at it as a whole, the picture of patient health becomes much clearer.

Why does this matter? Because combining these data streams leads to more accurate diagnoses, earlier detection, and treatment plans that are tailored to each individual. That is the promise that healthcare professionals, researchers, and hospital administrators are paying attention to right now.

In this article, I’ll take you through the growth of multimodal AI in healthcare, the use cases that are already showing results, the challenges that still stand in the way, and what the future may look like. I’ll also share where we at Webisoft fit into this landscape, since a lot of you have asked how to approach these projects in real terms.

Contents

- 1 Market Growth and Adoption of Multimodal AI in Healthcare

- 2 What Is Multimodal AI in Healthcare

- 3 Use Cases of Multimodal AI in Healthcare

- 4 Clinical and Business Impact of Multimodal AI

- 5 Expert Perspectives on Multimodal AI Transformation

- 6 Challenges and Barriers to Adoption

- 7 Adoption Outlook: What’s Next for Multimodal AI in Healthcare

- 8 How Webisoft Can Help You Build Multimodal AI Solutions

- 9 FAQs About Multimodal AI in Healthcare

- 9.1 1) What is multimodal AI in healthcare?

- 9.2 2) How is it different from traditional AI?

- 9.3 3) Where does it fit into clinical workflows?

- 9.4 4) What use cases are ready now?

- 9.5 5) How accurate are these models?

- 9.6 6) What is required on the data side?

- 9.7 7) How do we handle privacy and compliance?

- 9.8 8) What about explainability?

- 9.9 9) How long does a first deployment take?

- 9.10 10) What does a good ROI story look like?

- 10 Conclusion – From Hype to Reliable Clinical Impact

Market Growth and Adoption of Multimodal AI in Healthcare

Multimodal AI is moving from pilots to production. I am seeing it show up in real clinical workflows, not just research talks.

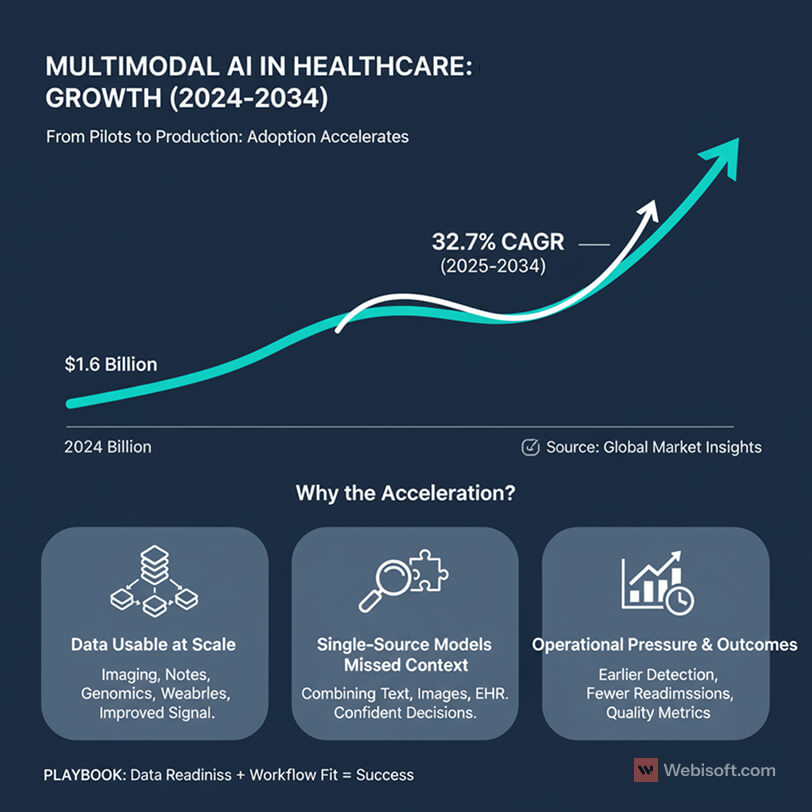

The market is growing fast. According to Global Market Insights, the multimodal AI market was valued at 1.6 billion dollars in 2024 and is projected to grow at a 32.7 percent CAGR from 2025 to 2034.
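To make the growth rate concrete, here is a quick sketch of what a 32.7 percent CAGR implies if it is applied to the 2024 base for ten years (2025 through 2034). The projected figure is my own compounding arithmetic on the two quoted numbers. It is not a value taken from the Global Market Insights report.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base * (1 + cagr) ** years


base_2024 = 1.6          # USD billions, 2024 valuation quoted above
cagr = 0.327             # 32.7 percent per year
years = 2034 - 2024      # ten compounding steps: 2025 through 2034

projected_2034 = project_value(base_2024, cagr, years)
print(f"Implied 2034 market size: ~${projected_2034:.1f}B")
```

Compounding 1.6 billion dollars at that rate gives roughly 27 billion dollars by 2034, about a seventeenfold increase. Keep in mind that this quoted market covers multimodal AI broadly, not only healthcare.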

Why the acceleration?

- Data is finally usable at scale. Hospitals now collect massive volumes of imaging data, clinical notes, lab results, genomics, and continuous streams from wearables. When we bring these sources together, the signal improves and false positives drop.

- Single-source models miss context. A note-only system or an image-only model can be helpful, but it often lacks the bigger picture. Multimodal models combine text, images, structured EHR fields, and sometimes sensor data, which lets clinicians make decisions with more confidence.

- Operational pressure is rising. Health systems want earlier detection, fewer readmissions, and clear documentation for quality metrics. Executives I speak with are looking at multimodal AI as a path to measurable outcomes, not just innovation theater.

Adoption patterns are becoming more predictable. Academic medical centers tend to lead with imaging and decision support. Integrated delivery networks pick targeted use cases where data pipelines are mature and governance is clear. Vendors are shipping tooling that connects to EHRs, PACS, LIMS, and cloud stores without months of custom work.

We are still early. Most providers I talk with are in one of three phases: scoping a first use case, running a limited deployment on a small patient cohort, or expanding a proven model across a service line. The difference between progress and stall is almost always data readiness and workflow fit.

The bottom line is simple. The market signal is there, budgets are opening, and the technical stack is catching up. If you have clean pipelines and a clear outcome to measure, multimodal AI belongs on your roadmap now.

What Is Multimodal AI in Healthcare

When I say multimodal AI, I mean models that learn from more than one type of clinical data at the same time. Text, images, signals, and structured fields come together in a single system that understands how these pieces relate.

Here is the typical mix I see in real projects:

- Clinical text: notes, discharge summaries, pathology reports.

- Medical images: radiology, cardiology, dermatology, digital pathology.

- Structured EHR data: labs, vitals, medications, diagnoses, procedures.

- Genomics and omics: variants, expression profiles, panels.

- Wearables and sensors: heart rate, activity, sleep, glucose.

Why combine them? Because a chest CT without the patient’s oxygen saturation and notes is an incomplete story. A note without imaging misses objective evidence. Multimodal models reduce blind spots by learning correlations across sources.

A quick framing you can use with your team:

- Early fusion: merge features from multiple sources before the model makes a prediction.

- Late fusion: build separate models per source, then combine outputs.

- Cross attention: let text guide what the model looks for in an image, or the other way around.
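The three fusion styles above can be sketched in a few lines of plain Python. This is a toy illustration that assumes each modality has already been turned into a small numeric vector by its own encoder. The vectors, weights, and function names are hypothetical placeholders, not a production model.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def early_fusion(text_feats: Vector, image_feats: Vector,
                 weights: Vector, bias: float) -> float:
    """Early fusion: concatenate per-modality features, then score with one model."""
    fused = text_feats + image_feats  # list concatenation, not element-wise addition
    assert len(weights) == len(fused), "one weight per fused feature"
    return sigmoid(dot(weights, fused) + bias)


def late_fusion(probs: List[float], modality_weights: List[float]) -> float:
    """Late fusion: each modality has its own model; take a weighted average of their probabilities."""
    return sum(p * w for p, w in zip(probs, modality_weights)) / sum(modality_weights)


def cross_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention: one text-derived query attends over image patches."""
    d = len(query)
    logits = [dot(query, k) / math.sqrt(d) for k in keys]
    top = max(logits)  # subtract the max before exp for numerical stability
    exps = [math.exp(x - top) for x in logits]
    z = sum(exps)
    attn = [e / z for e in exps]  # one weight per patch, summing to 1
    # Output: attention-weighted sum of the patch value vectors.
    return [sum(a * v[i] for a, v in zip(attn, values)) for i in range(len(values[0]))]


text = [0.2, 0.7]          # e.g. output of a clinical-note encoder
image = [0.9, 0.1, 0.4]    # e.g. output of an image encoder
p_early = early_fusion(text, image, weights=[0.5, 1.0, 1.2, -0.3, 0.8], bias=-1.0)
p_late = late_fusion([0.62, 0.81], modality_weights=[1.0, 2.0])
attended = cross_attention(query=[1.0, 0.0],
                           keys=[[1.0, 0.0], [0.0, 1.0]],
                           values=[[10.0, 0.0], [0.0, 10.0]])
```

In the cross-attention call, the query matches the first patch's key, so the output leans toward that patch's value vector. That is the mechanism that lets a phrase in the note steer where the model looks in the image.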

Tooling matters too. Most teams pair domain encoders for each data type with a shared representation layer. Data moves through FHIR or vendor APIs into a feature store, then into training pipelines. In production, I like to see clear interfaces for EHR, PACS, and LIMS so updates do not break inference.
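As an example of the "FHIR into a feature store" step, here is a minimal sketch that flattens one FHIR R4 Observation into a feature row. The field paths (`subject.reference`, `effectiveDateTime`, `code.coding`, `valueQuantity`) follow the FHIR Observation resource. The row layout, the `loinc_` feature naming, and the function name are my own assumptions, and the LOINC code is used only for illustration.

```python
from datetime import datetime
from typing import Any, Dict, Optional

LOINC = "http://loinc.org"


def observation_to_feature_row(obs: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Flatten a FHIR R4 Observation into one feature-store row (hypothetical layout).

    Returns None for resources this sketch does not handle: non-Observations,
    Observations without a LOINC coding, or values that are not a numeric valueQuantity."""
    if obs.get("resourceType") != "Observation":
        return None
    loinc_code = next(
        (c.get("code") for c in obs.get("code", {}).get("coding", []) if c.get("system") == LOINC),
        None,
    )
    quantity = obs.get("valueQuantity")
    if loinc_code is None or quantity is None or "value" not in quantity:
        return None
    return {
        "patient_id": obs["subject"]["reference"].split("/")[-1],  # "Patient/123" -> "123"
        "feature": f"loinc_{loinc_code}",
        "value": float(quantity["value"]),
        "unit": quantity.get("unit"),
        # datetime.fromisoformat before Python 3.11 rejects a trailing "Z", so normalize it.
        "event_time": datetime.fromisoformat(obs["effectiveDateTime"].replace("Z", "+00:00")),
    }


example = {
    "resourceType": "Observation",
    "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "59408-5"}]},
    "subject": {"reference": "Patient/123"},
    "effectiveDateTime": "2025-03-14T08:30:00Z",
    "valueQuantity": {"value": 94, "unit": "%"},
}
row = observation_to_feature_row(example)
```

Real feeds need more handling than this: components, `valueCodeableConcept`, and `effectivePeriod`, for example. The point is that every row keeps a patient identifier and a timezone-aware timestamp from the start.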

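Performance is improving quickly. As one well-known example, Google’s Med-PaLM 2 scored 86.5 percent on MedQA, a benchmark of USMLE-style questions. That is well above the roughly 60 percent passing mark that its predecessor, Med-PaLM, was the first to clear. Med-PaLM 2 itself is text-only; its multimodal sibling, Med-PaLM M, extends the approach to text, medical images, and genomics in a single model.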

Two rules I hold teams to:

- Keep a single source of truth for identifiers and timestamps across modalities.

- Align features to clinical workflows, not just model accuracy. If the output does not fit the way clinicians make decisions, it will not be used.
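One practical consequence of the first rule is the "as-of" join. When you attach a lab value to an imaging study, use only the most recent result recorded at or before the study time, within a lookback window. Anything later would leak future information into the model. The sketch below assumes all timestamps share one timezone and one patient ID space. The in-memory index and the function name are hypothetical.

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple

# Hypothetical in-memory index: patient_id -> time-sorted list of (timestamp, value).
LabIndex = Dict[str, List[Tuple[datetime, float]]]


def latest_lab_before(labs: LabIndex, patient_id: str, study_time: datetime,
                      lookback: timedelta) -> Optional[float]:
    """Most recent lab value at or before study_time, or None if absent or too old."""
    series = labs.get(patient_id, [])
    times = [t for t, _ in series]
    i = bisect_right(times, study_time)  # index of the first result strictly after the study
    if i == 0:
        return None  # nothing recorded at or before the study
    t, value = series[i - 1]
    return value if study_time - t <= lookback else None


labs: LabIndex = {
    "123": [(datetime(2025, 3, 14, 6, 0), 91.0), (datetime(2025, 3, 14, 9, 0), 95.0)],
}
value_at_scan = latest_lab_before(labs, "123", datetime(2025, 3, 14, 8, 30), timedelta(hours=6))
```

For a scan at 08:30, this returns the 06:00 result and ignores the 09:00 one, which did not exist yet when the scan was read.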

Use Cases of Multimodal AI in Healthcare

I group the most valuable use cases into five buckets. Each one benefits from combining text, images, structured EHR fields, and sometimes genomics or sensor data.

Diagnostics and imaging support

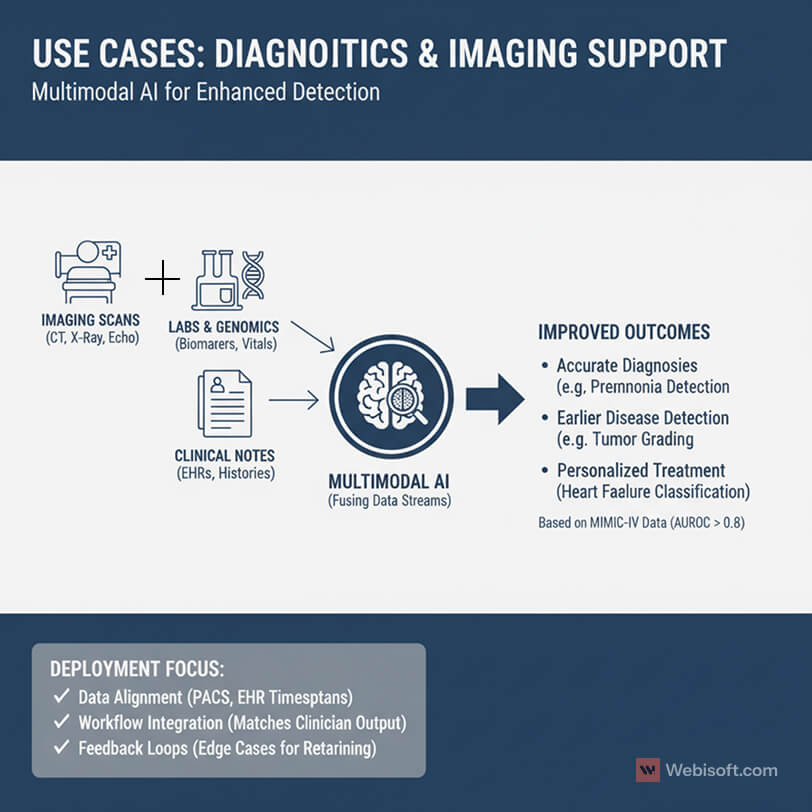

Radiology, pathology, cardiology, and dermatology all gain from multimodal inputs.

A chest CT paired with oxygen saturation, labs, and notes improves pneumonia detection. Digital pathology slides plus tumor markers and prior treatments support better grading. Echo videos combined with vitals and medication history help with heart failure classification.

Evidence is getting stronger. Research using the MIMIC-IV family of datasets reports AUROC greater than 0.8 across more than 600 diagnosis types and 14 of 15 patient deterioration outcomes, beating single-input baselines.
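If AUROC is new to part of your team, it has a simple reading. It is the probability that the model scores a randomly chosen positive case above a randomly chosen negative one. A value of 0.5 is chance, and 0.8 means the model ranks a true case higher about 80 percent of the time. The pairwise sketch below shows that definition directly; the labels and scores are toy values, not study data.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count half).

    Pairwise O(n_pos * n_neg) version for illustration; large cohorts need a
    rank-based implementation instead."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


toy_labels = [1, 1, 0, 0]
toy_scores = [0.9, 0.4, 0.5, 0.1]
toy_auroc = auroc(toy_labels, toy_scores)  # 3 of 4 positive/negative pairs ranked correctly
```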

What I look for in deployments:

- Tight data alignment between PACS images, EHR timestamps, and note authors.

- Clear output formats that match radiologist and pathologist workflows.

- A feedback loop so clinicians can flag edge cases for retraining.

Personalized treatment and care planning

This is where genomics and longitudinal history matter.

For oncology, models can combine mutation profiles with imaging response and prior regimens to suggest the next best line of therapy. In cardiology, risk scores that blend EHR labs, wearable signals, and imaging help titrate medications with fewer readmissions.

Keys to making this work:

- Variant calls and panel data mapped to a consistent schema.

- Medication and adverse event coding cleaned up in the EHR.

- Clear guardrails so recommendations are advisory with clinician control.

Predictive analytics and early warning

Hospitals want earlier signals for deterioration, sepsis, and readmission risk.

Multimodal models watch trends in vitals, labs, and nursing notes, then cross-check against imaging or medication changes. The goal is fewer false alarms and earlier, targeted interventions.

What I advise teams to implement:

- Unit-specific thresholds and calibration to avoid alert fatigue.

- A visible audit trail that explains which inputs drove a high-risk score.

- Integration with the existing alerting system, not a new pop-up.
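For the first item, one way to set unit-specific thresholds is to start from an alert budget: the share of patients each unit can realistically act on. You then pick the lowest threshold that would not have exceeded that budget on recent scores. This is a sketch under that assumption. The budgets, units, and scores are hypothetical, and probability calibration itself (for example Platt scaling) is a separate step that is not shown here.

```python
import math
from typing import Dict, List


def threshold_for_alert_budget(scores: List[float], max_alert_rate: float) -> float:
    """Lowest threshold such that alerting on score >= threshold would have fired
    for at most max_alert_rate of the historical scores (ties included)."""
    allowed = math.floor(max_alert_rate * len(scores))
    best = math.inf  # math.inf means "never alert" if even the top score breaks the budget
    for candidate in sorted(set(scores), reverse=True):
        if sum(1 for s in scores if s >= candidate) <= allowed:
            best = candidate
        else:
            break  # lower candidates only fire more alerts
    return best


def unit_thresholds(history: Dict[str, List[float]],
                    budgets: Dict[str, float]) -> Dict[str, float]:
    """One threshold per unit, each tuned to that unit's own score distribution."""
    return {unit: threshold_for_alert_budget(s, budgets[unit]) for unit, s in history.items()}


history = {
    "ICU": [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2],
    "MedSurg": [0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.1, 0.05],
}
budgets = {"ICU": 0.25, "MedSurg": 0.125}
thresholds = unit_thresholds(history, budgets)
```

The design choice is to make alert volume an explicit, reviewable number per unit rather than a side effect of one global cutoff.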

Operational analytics and patient flow

Not every win is clinical. Some are operational.

When you combine EHR bed status, imaging backlogs, and transport timestamps with staffing levels, you can predict bottlenecks and shorten lengths of stay. In outpatient settings, multimodal signals can forecast no-shows and optimize scheduling.

Implementation steps that help:

- A small feature store for operational metrics with consistent update times.

- Simple dashboards for nursing, transport, and admin leads.

- A weekly review loop to tune models and remove low-value signals.

Drug discovery and trial support

Biopharma teams are using multimodal learning to speed up target discovery and trial design. Imaging biomarkers, omics data, and clinical endpoints come together to identify cohorts and predict response.

For sponsors and CROs, the practical step is standardizing data capture early, so the model is not fighting format drift halfway through the study.

Unimodal vs multimodal at a glance

| Task | Unimodal approach | Multimodal approach | Practical impact |
| --- | --- | --- | --- |
| Pneumonia triage | CT only | CT plus labs, SpO2, notes | Higher precision in busy ERs |
| Sepsis prediction | Vitals only | Vitals, labs, notes, meds | Earlier alert, fewer false positives |
| Oncology next step | Genomics only | Genomics, imaging response, history | More relevant therapy options |
| Readmission risk | Claims only | EHR, wearables, social risk | Better discharge planning |

Keep in mind that success depends on data readiness. If one modality is noisy or delayed, start with the sources you can trust today, then add more over time.
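In a late-fusion setup, "start with the sources you can trust" can also be enforced at scoring time. Modalities that are missing or too old for the decision at hand are skipped, and the remaining weights are renormalized. The sketch below assumes each modality model already outputs a probability. The weights and freshness limits are hypothetical values you would set with clinicians.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional, Tuple

# modality -> (probability from that modality's model, time its input was produced), or None
ModalityScores = Dict[str, Optional[Tuple[float, datetime]]]


def fuse_available(scores: ModalityScores, weights: Dict[str, float],
                   now: datetime, max_age: Dict[str, timedelta]) -> Optional[float]:
    """Weighted late fusion over fresh modalities only; None if nothing usable remains."""
    used: Dict[str, float] = {}
    for modality, entry in scores.items():
        if entry is None:
            continue  # modality missing for this patient
        prob, produced_at = entry
        if now - produced_at > max_age[modality]:
            continue  # too stale to trust for this decision
        used[modality] = prob
    total_weight = sum(weights[m] for m in used)
    if total_weight == 0:
        return None
    return sum(weights[m] * p for m, p in used.items()) / total_weight


now = datetime(2025, 3, 14, 12, 0)
scores: ModalityScores = {
    "vitals": (0.7, datetime(2025, 3, 14, 11, 55)),
    "labs": (0.5, datetime(2025, 3, 14, 9, 0)),
    "imaging": None,  # no study yet
}
weights = {"vitals": 1.0, "labs": 1.0, "imaging": 2.0}
max_age = {"vitals": timedelta(hours=1), "labs": timedelta(hours=12), "imaging": timedelta(hours=48)}
fused = fuse_available(scores, weights, now, max_age)
```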

Clinical and Business Impact of Multimodal AI

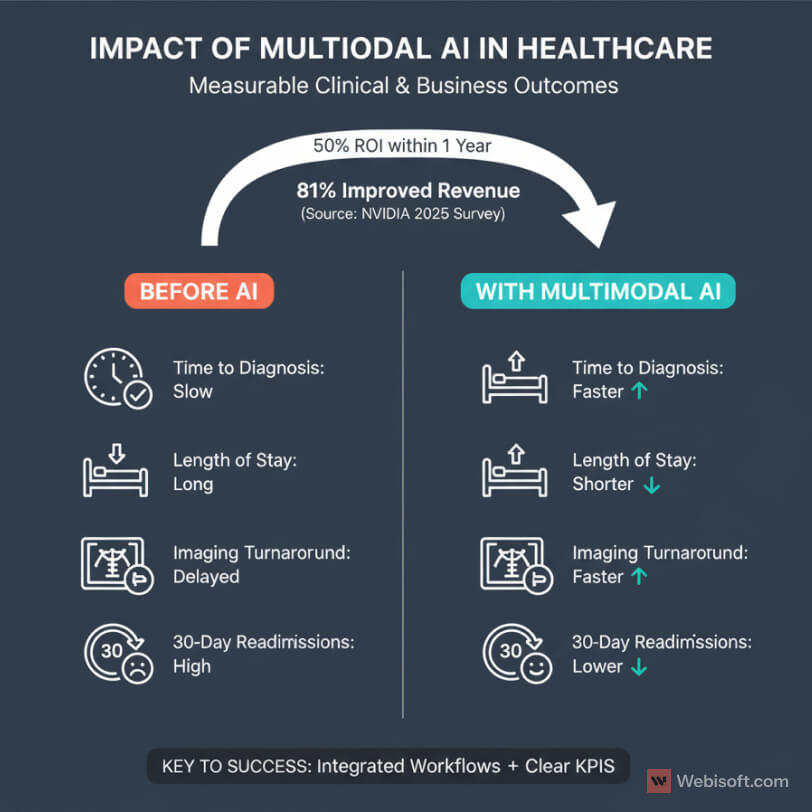

When I evaluate projects, I look for outcomes that are easy to measure. Multimodal AI is starting to meet that bar.

On the clinical side, teams report faster time to diagnosis and fewer unnecessary tests. When models combine notes, images, and labs, clinicians get clearer signals and less guesswork. That shows up as shorter length of stay, lower readmissions, and better guideline adherence.

On the business side, leaders want proof that AI improves revenue and margins. The latest industry survey from NVIDIA is useful here. In 2025, 63 percent of healthcare professionals reported they are actively using AI, 81 percent saw improved revenue, and 50 percent saw ROI within one year.

Where I see impact first:

- Imaging service lines. Decision support reduces turnaround time and supports higher throughput without rushing reads. That keeps radiologists focused on the hard cases and helps catch findings that should not be missed.

- Acute care. Early warning models reduce code events and ICU transfers. The savings are clinical and financial.

- Outpatient care. Better risk stratification improves follow-up scheduling, reduces no-shows, and supports value-based contracts.

Operational gains add up as well. When AI maps bottlenecks across bed management, transport, and imaging, hospitals move patients faster with fewer manual escalations. That means less staff burnout and better use of expensive equipment.

Two patterns make the difference between soft wins and hard numbers:

- Tight integration with existing workflows. If clinicians need to log into a separate app, adoption drops. Embedding results in the EHR or PACS keeps usage high and data consistent.

- Clear KPIs from day one. Pick outcome metrics that executives and clinicians already track. Time to diagnosis. Length of stay. 30-day readmissions. Imaging turnaround time. Revenue per modality. Then set baselines and measure monthly.
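Baselines only help if everyone computes the KPI the same way. As an illustration, here is a simplified 30-day readmission rate: the share of discharges followed by another admission for the same patient within 30 days. This sketch ignores the planned-readmission, transfer, and mortality exclusions that formal quality programs apply, so treat it as a starting definition to agree on, not an official measure.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple

Stay = Tuple[str, date, date]  # (patient_id, admit_date, discharge_date)


def readmission_rate_30d(stays: List[Stay]) -> float:
    """Share of discharges followed by a same-patient admission within 30 days (simplified)."""
    by_patient: Dict[str, List[Tuple[date, date]]] = {}
    for pid, admit, discharge in sorted(stays, key=lambda s: (s[0], s[1])):
        by_patient.setdefault(pid, []).append((admit, discharge))
    index_discharges = 0
    readmitted = 0
    for visits in by_patient.values():
        for i, (_, discharge) in enumerate(visits):
            index_discharges += 1
            # Visits are sorted by admit date, so the next visit is the earliest later admission.
            if i + 1 < len(visits) and visits[i + 1][0] - discharge <= timedelta(days=30):
                readmitted += 1
    return readmitted / index_discharges if index_discharges else 0.0


stays: List[Stay] = [
    ("A", date(2025, 1, 1), date(2025, 1, 5)),
    ("A", date(2025, 1, 20), date(2025, 1, 25)),  # back 15 days after discharge
    ("B", date(2025, 1, 3), date(2025, 1, 6)),
    ("B", date(2025, 3, 1), date(2025, 3, 3)),    # back after 54 days: not counted
    ("C", date(2025, 2, 1), date(2025, 2, 2)),
]
baseline_rate = readmission_rate_30d(stays)  # 1 readmission out of 5 discharges
```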

For payers and life sciences, the case is slightly different. Multimodal models help with cohort selection, adverse event detection, and protocol adherence. Those gains reduce trial costs and speed timelines.

Bottom line. Multimodal AI is not just a research upgrade. It is a way to improve care delivery while supporting financial performance. If you define your KPIs early and integrate cleanly, you will see results you can defend in a budget meeting.

Expert Perspectives on Multimodal AI Transformation

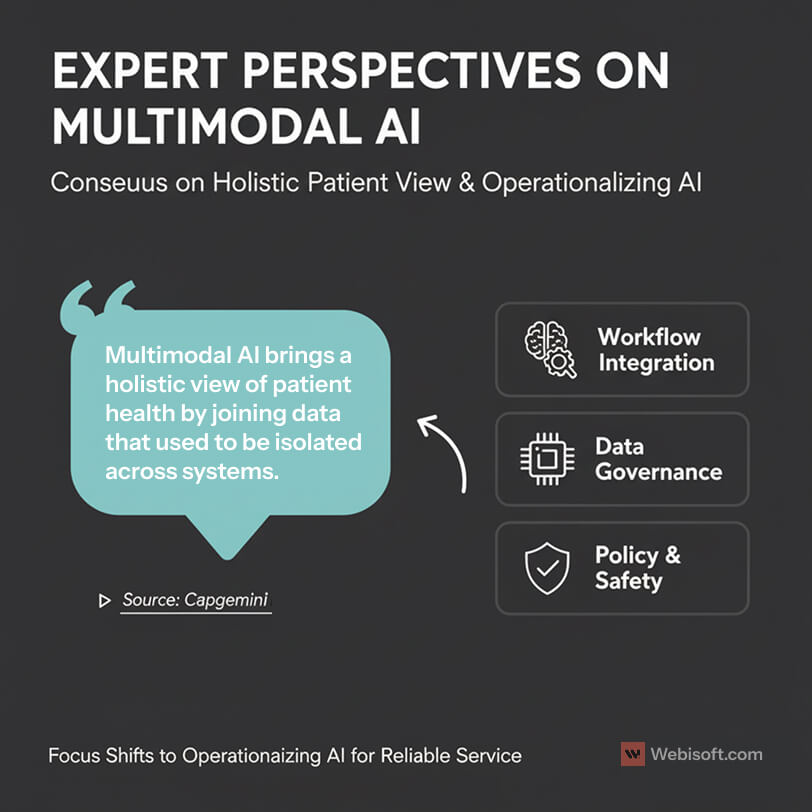

I like to sanity check my views with outside voices. The consensus from industry leaders is getting clearer.

Capgemini’s healthcare group puts it plainly. Multimodal AI brings a holistic view of patient health by joining data that used to be isolated across systems. That shift supports better clinical decision making and stronger outcomes. You can read their perspective here: Capgemini Invent on multimodal AI and personalized healthcare.

This lines up with what I see on the ground. When clinicians can see model outputs that consider notes, images, vitals, and genomics together, trust goes up. The recommendations feel closer to how real decisions get made.

Experts also point to workflow fit as the difference between pilots and production. If the model explains which inputs influenced a score, adoption is easier. If it shows up inside the EHR and PACS at the right step, usage stays high.

There is also agreement on the data work required. Leaders I speak with stress governance, lineage, and consent tracking. If you cannot trace each feature back to its source system and timestamp, you will struggle with validation and audits.

On the technical side, researchers expect the next wave to include larger vision-language models adapted to clinical settings. The goal is simple. Better grounding on medical vocabularies, better handling of imaging series, and safer behaviors in edge cases.

Policy voices are watching explainability and safety. Health systems will need clear documentation that links model behavior to clinical logic. That does not mean opening the entire model. It does mean logging decisions, offering clinician override, and tracking outcomes.

My takeaway is straightforward. The experts are not debating the value of multimodal approaches anymore. They are focused on the operational details that turn a good idea into a reliable service.

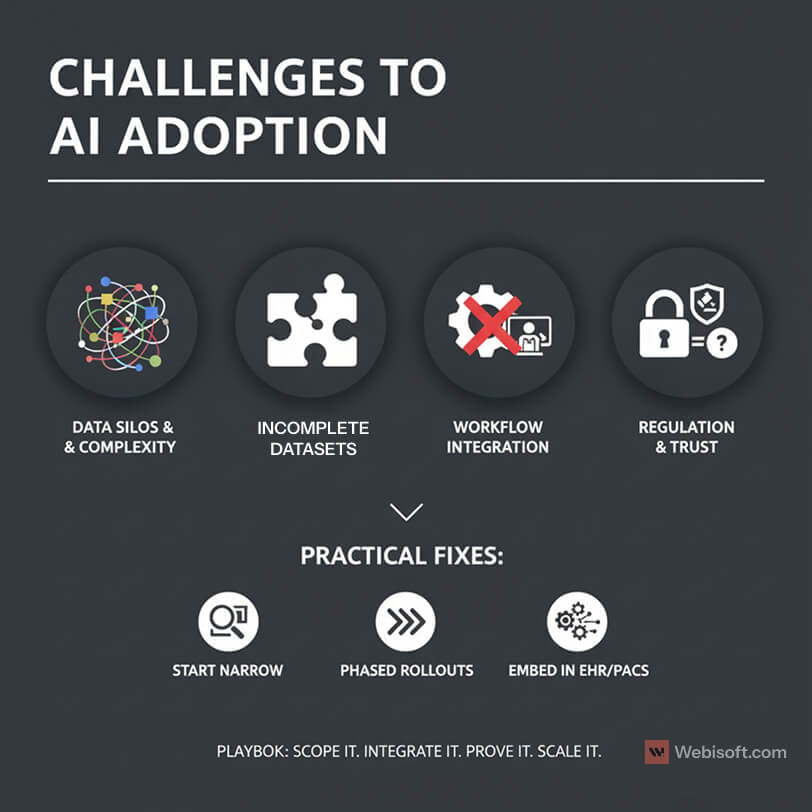

Challenges and Barriers to Adoption

I want to be honest about the hard parts. Multimodal AI is powerful, but the road to production has real obstacles.

Data heterogeneity is the first blocker I see. Notes live in one system, images in another, genomics somewhere else, and wearables in yet another silo. Formats differ. Timestamps drift. Labels are inconsistent. The result is brittle pipelines unless you invest in mapping, normalization, and a shared patient identity strategy.

Incomplete datasets make training and validation noisy. Missing vitals, partial imaging series, and unstructured notes reduce signal quality. Teams that ship to production usually start with a single service line where data is complete and governance is clear, then expand.

Workflow integration is another pain point. If the model output shows up outside the EHR or PACS, clinicians ignore it. If it creates extra clicks, adoption falls. The fix is simple to state and hard to do. Deliver results inside existing tools, at the exact moment a clinician is making a decision.

Regulation and trust are not optional. Privacy, consent, and auditability matter in every clinical environment. Recent reviews indexed by the National Library of Medicine, part of the National Institutes of Health, highlight the same themes. The main hurdles are data heterogeneity, incomplete datasets, and integration with clinical workflows, along with the need for transparency and regulatory approval.

Explainability is still maturing. Clinicians want to know which inputs influenced a score. A simple contribution chart, confidence range, and a short rationale often go a long way. Black box results slow adoption and raise risk.
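For a linear or logistic model, a contribution chart is straightforward. Each input contributes its weight times its distance from a reference patient, measured in log-odds. The sketch below renders that as a plain-text bar chart. The features, weights, and reference values are hypothetical. For non-linear models, attribution methods such as SHAP fill the same role, but they are not shown here.

```python
from typing import Dict, List, Tuple


def contributions(weights: Dict[str, float], x: Dict[str, float],
                  baseline: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-input contribution to a linear model's log-odds relative to a reference
    patient, sorted by absolute size (largest first)."""
    contrib = [(name, w * (x[name] - baseline[name])) for name, w in weights.items()]
    return sorted(contrib, key=lambda kv: abs(kv[1]), reverse=True)


def text_chart(contrib: List[Tuple[str, float]], scale: float = 10.0) -> str:
    """Plain-text bars: '+' pushes risk up, '-' pushes it down."""
    lines = []
    for name, c in contrib:
        bar = ("+" if c > 0 else "-") * round(abs(c) * scale)
        lines.append(f"{name:<12} {c:+.2f} {bar}")
    return "\n".join(lines)


weights = {"lactate": 0.8, "resp_rate": 0.1, "spo2": -0.15}
patient = {"lactate": 4.0, "resp_rate": 28.0, "spo2": 90.0}
reference = {"lactate": 1.0, "resp_rate": 16.0, "spo2": 97.0}
ranked = contributions(weights, patient, reference)
chart = text_chart(ranked)
```

Because this is a linear model, the contributions add up exactly to the difference in log-odds between the patient and the reference. That property is what makes the chart honest rather than decorative.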

Operational cost can creep up. Training is one thing. Serving large models at low latency is another. You will need a budget for inference, monitoring, and retraining. Without cost controls, pilots look good but production margins suffer.

Here is a simple view I share with teams:

| Challenge | What usually breaks | Practical fix |
| --- | --- | --- |
| Data heterogeneity | Mapping errors, ID mismatches | FHIR mapping, master patient index, strict timestamp policy |
| Incomplete datasets | Low recall, biased results | Start with one service line, define inclusion rules, track missingness |
| Workflow fit | Low clinician adoption | Embed in EHR and PACS, reduce clicks, align to the clinical path |
| Regulation and trust | Delays, audit gaps | Data lineage, consent tracking, model cards, change logs |
| Explainability | Pushback from reviewers | Contribution charts, confidence bands, short rationales |
| Serving cost | Unstable unit economics | Batch low urgency jobs, right-size models, cache intermediate features |
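"Cache intermediate features" can be as simple as memoizing encoder outputs per study and encoder version. A study that is rescored when new labs arrive then skips the expensive image pass. This in-process sketch uses Python's `functools.lru_cache`. The encoder is a hypothetical placeholder, and a shared deployment would more likely use a persistent feature store with the same key.

```python
from functools import lru_cache
from typing import Tuple

CALLS = {"encoder": 0}  # counts expensive encoder runs, for illustration only


def run_image_encoder(study_id: str) -> Tuple[float, ...]:
    """Hypothetical stand-in for an expensive image encoder; returns a fake embedding."""
    CALLS["encoder"] += 1
    return tuple(float(ord(ch) % 7) for ch in study_id[:4])


@lru_cache(maxsize=10_000)
def image_embedding(study_id: str, encoder_version: str) -> Tuple[float, ...]:
    """Cached per (study, encoder version). encoder_version is part of the cache key
    only, so bumping it forces a recompute instead of serving stale features."""
    return run_image_encoder(study_id)


first = image_embedding("CT-001", "v1")
again = image_embedding("CT-001", "v1")   # served from the cache; encoder not re-run
bumped = image_embedding("CT-001", "v2")  # new encoder version; recomputed
```

Returning a tuple keeps the cached embedding immutable, so one caller cannot accidentally modify features that another caller will reuse.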

A few patterns reduce risk:

- Start with narrow scope where data is clean and outcomes are measurable.

- Use phased rollouts. Cohort first, then unit, then service line.

- Set up data contracts so upstream changes do not break features.

- Log every inference with inputs, outputs, and versioning for audits.

- Build feedback loops so clinicians can correct edge cases and improve retraining.
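A data contract can start as an explicit, versioned list of the fields a feature pipeline expects, checked on every upstream record before anything reaches the model. The sketch below is a minimal hand-rolled version. The field names and plausibility ranges are hypothetical examples to agree on with the source-system owners, not clinical standards.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class FieldRule:
    type_: type
    required: bool = True
    min_value: Optional[float] = None
    max_value: Optional[float] = None


CONTRACT: Dict[str, FieldRule] = {
    "patient_id": FieldRule(str),
    "event_time": FieldRule(str),  # ISO 8601 text; parsed and checked downstream
    "spo2": FieldRule(float, min_value=50.0, max_value=100.0),
    "heart_rate": FieldRule(float, required=False, min_value=20.0, max_value=250.0),
}


def violations(record: Dict[str, Any], contract: Dict[str, FieldRule]) -> List[str]:
    """List every way a record breaks the contract; an empty list means it passes."""
    problems: List[str] = []
    for name, rule in contract.items():
        if record.get(name) is None:
            if rule.required:
                problems.append(f"{name}: missing")
            continue
        value = record[name]
        if not isinstance(value, rule.type_):
            problems.append(f"{name}: expected {rule.type_.__name__}, got {type(value).__name__}")
            continue
        if rule.min_value is not None and value < rule.min_value:
            problems.append(f"{name}: {value} below {rule.min_value}")
        if rule.max_value is not None and value > rule.max_value:
            problems.append(f"{name}: {value} above {rule.max_value}")
    # Unexpected fields often signal an upstream rename that would silently drop a feature.
    problems.extend(f"{name}: not in contract" for name in record if name not in contract)
    return problems


good = {"patient_id": "123", "event_time": "2025-03-14T08:30:00Z", "spo2": 94.0}
renamed = {"patient_id": "123", "event_time": "2025-03-14T08:30:00Z", "spo2": "94", "o2_sat": 94.0}
```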

My recommendation is to treat adoption like any other clinical change. Align stakeholders early. Set clear KPIs. Prove value on a small scope. Expand once users and leadership trust the output.

Adoption Outlook: What’s Next for Multimodal AI in Healthcare

The next three waves of adoption

Wave 1: Targeted deployments (now to 12 months)

- Focus areas: imaging decision support, deterioration alerts, readmission risk.

- Why these work: clean data, clear owners, fast validation.

- Success signals: reduced turnaround time, fewer false alarms, shorter length of stay.

- What to ship: one service line, one model, one KPI dashboard.

Wave 2: Service line expansion (12 to 24 months)

- Replicate wins: radiology to cardiology, sepsis to broader deterioration, single DRG to multiple.

- What changes: governance, cost controls, and change management matter as much as accuracy.

- Platform moves: shared feature store, reusable evaluation harness, standard data contracts.

- Risks to manage: alert fatigue, model drift, integration gaps.
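For model drift, a common lightweight check is the population stability index (PSI). It compares how an input or score is distributed in a reference window against a live window. A commonly cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as worth watching, and above 0.25 as a significant shift. The bin edges and the small epsilon for empty bins below are assumptions to tune per feature.

```python
import math
from typing import List, Sequence


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin; edges are ascending interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(1 for e in edges if v >= e)] += 1  # bin index = number of edges passed
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], edges: Sequence[float],
        eps: float = 1e-4) -> float:
    """Population stability index: sum over bins of (a - e) * ln(a / e)."""
    total = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        total += (a - e) * math.log(a / e)
    return total


edges = [0.25, 0.5, 0.75]
reference_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live_scores = [0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 0.95, 0.6]
drift = psi(reference_scores, live_scores, edges)
```

In this toy example, the live scores have piled into the top bins, so the PSI lands far above 0.25. That is the kind of shift that should trigger a review before anyone trusts the alerts.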

Wave 3: Platform maturity (24 to 36 months)

- Operating model: program funding, quarterly roadmaps, clear ownership across data, clinical, and IT.

- Tooling: connectors for EHR, PACS, LIMS, cloud storage with less custom glue code.

- SRE for models: monitoring, versioning, rollbacks, audit logs.

- Outcome: AI becomes routine infrastructure, not a special project.

Model direction to watch

- Vision-language models tuned for medicine: read notes and images together with better grounding on clinical language.

- Safety and trust features: contribution charts, confidence ranges, conservative defaults, and clinician override.

- Privacy-preserving collaboration: secure ways to share features and outcomes across partners.

Regulation and governance checklist

- Data lineage linked to each inference.

- Consent handling documented and enforced.

- Model cards that reviewers can understand.

- Simple change logs for updates and retrains.

- Evidence of outcome monitoring, not just AUC scores.
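Several of these checklist items come down to one habit: writing an append-only record for every inference. That record should capture the model version, the inputs, where each input came from, and the output. The sketch below builds one JSON line per inference. The field names and source identifiers are hypothetical. Because raw clinical inputs are PHI, a real log needs the same access controls as the EHR, or it should store only the hash and pointers to the source records.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


def audit_record(model_name: str, model_version: str, inputs: Dict[str, Any],
                 sources: List[str], output: float) -> str:
    """Build one JSON line for an append-only inference log."""
    # Canonical serialization so identical inputs always produce the same hash.
    canonical = json.dumps(inputs, sort_keys=True, separators=(",", ":"))
    record = {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "model": model_name,
        "model_version": model_version,
        "input_hash": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "inputs": inputs,
        "sources": sources,  # lineage: source-system records behind each input
        "output": output,
    }
    return json.dumps(record, sort_keys=True)


line = audit_record(
    "sepsis-risk", "1.3.0",
    inputs={"lactate": 4.0, "spo2": 90.0},
    sources=["Observation/lab-abc", "Observation/vitals-def"],
    output=0.82,
)
```

The input hash lets an auditor confirm that a stored record matches the data that was actually scored, even after the inputs are archived elsewhere.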

Interoperability priorities

- Standard schemas across payers, labs, and imaging groups.

- API contracts that survive vendor upgrades.

- Secure partner exchange for features and outcomes.

- Clear identity and timestamp rules across modalities.

How I recommend you plan the next 90 days

Track 1: Prove value fast

- Pick one use case with clean data and a single KPI.

- Embed results in the EHR or PACS.

- Measure weekly and publish the dashboard.

Track 2: Build the foundation

- Stand up a small feature store and evaluation pipeline.

- Implement model monitoring and versioned audit logs.

- Define data contracts and governance roles.

This two-track plan keeps momentum while you invest in scale.

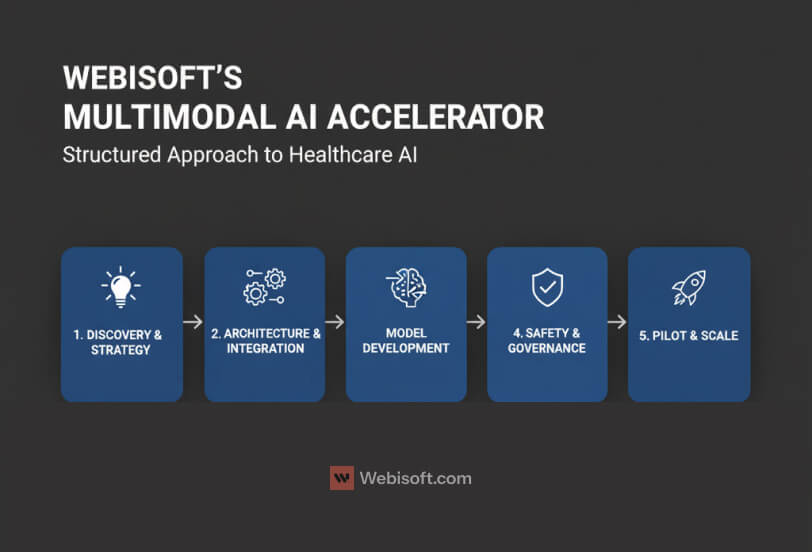

How Webisoft Can Help You Build Multimodal AI Solutions

I take a consultative-first approach. Most teams do not need more models. They need a clear plan that ties data, workflows, and compliance to measurable outcomes.

Here is how my team at Webisoft works with healthcare organizations.

Discovery and strategy

We map business goals to use cases you can measure. We review data readiness across EHR, PACS, LIMS, genomics, and wearables. We define what success looks like, who owns it, and how we will prove it in ninety days.

Architecture and integration

We design pipelines that fit your stack. That includes FHIR and vendor APIs, identity management, feature stores, and secure storage. Outputs land inside the EHR or PACS where clinicians already work.

Model selection and development

We use proven vision, text, and tabular encoders with a shared representation. If a prebuilt model is strong enough, we use it. If not, we fine-tune on your data with strict versioning and audit trails.

Safety, governance, and compliance

We document lineage, consent rules, and access controls. We add contribution charts, confidence ranges, and clinician override. Every inference is logged with inputs, outputs, and model version for review.

Pilot, measure, and scale

We ship one use case with one KPI. We measure weekly and share dashboards with clinical and executive sponsors. When the result is solid, we replicate the pattern across service lines.

What you get is not a one-off demo. You get a working system, clear documentation, and a plan to extend the platform without starting over.

If you want help scoping your first project or stress testing an existing plan, I am happy to walk through options and tradeoffs with your team.

FAQs About Multimodal AI in Healthcare

1) What is multimodal AI in healthcare?

It is an approach that learns from more than one data type at once. Text, images, structured EHR fields, genomics, and wearables are combined in a single model. The goal is a fuller clinical picture that supports diagnosis, risk prediction, and treatment planning.

2) How is it different from traditional AI?

Traditional models usually focus on one source, like images or notes. Multimodal systems fuse several sources, which reduces blind spots. In practice, this improves precision, lowers false positives, and aligns better with how clinicians make decisions using multiple inputs.

3) Where does it fit into clinical workflows?

The best results come when outputs appear inside existing tools. That means EHR problem lists, PACS viewers, and care management dashboards. If the model requires a separate app or extra clicks, adoption drops. Integration at the decision point is critical.

4) What use cases are ready now?

Imaging decision support, deterioration and sepsis risk, readmission prediction, oncology planning, and operational flow are showing results. Teams that start with one service line and one KPI typically see faster validation and cleaner expansion paths across the hospital.

5) How accurate are these models?

Performance varies by data quality and scope. Results improve when notes, images, and labs are aligned by patient and time. The strongest programs pair good modeling with strong data contracts, consistent labeling, and ongoing evaluation against real outcomes, not just offline metrics.

6) What is required on the data side?

A master patient index, consistent timestamps, and mapped vocabularies. You will also need stable pipelines from EHR, PACS, LIMS, and any sensors. Start with sources you can trust today. Add more modalities once governance and data quality are in place.

7) How do we handle privacy and compliance?

Document lineage, consent handling, and access controls. Log every inference with inputs and versioning. Keep model cards and change logs that reviewers can understand. Work with legal and compliance early so you design for HIPAA, GDPR, and local rules from day one.

8) What about explainability?

Clinicians want to see which inputs influenced a score. Provide contribution charts, confidence ranges, and short rationales in plain language. Allow clinician override. The combination of transparency and control drives trust and speeds internal approval.

9) How long does a first deployment take?

Most teams can scope, build, and ship a focused use case in 8 to 12 weeks if data pipelines already exist. Complex integrations take longer. A phased plan helps: cohort first, then unit, then service line, with KPIs tracked at each step.

10) What does a good ROI story look like?

Pick metrics leaders already track. Time to diagnosis, imaging turnaround time, length of stay, 30-day readmissions, and revenue per modality are common. Baseline these before launch, then measure weekly. Tight workflow integration and clear ownership usually separate strong ROI from soft wins.

Conclusion – From Hype to Reliable Clinical Impact

If you have read this far, you already know where the value is. Multimodal AI works when it is tied to a clear outcome, fed by clean data, and delivered inside the tools clinicians already use.

Start narrow. Prove one use case with one KPI. Measure every week. When the signal is real, expand to the next unit or service line. In parallel, invest in the foundation. Data contracts, a small feature store, monitoring, and simple governance will save you months later.

Keep trust front and center. Log every inference with inputs and versioning. Add contribution charts and confidence ranges. Let clinicians override. These small details turn a promising model into a dependable service.

My team at Webisoft can help you plan and ship this work. We map goals to use cases, design the pipelines, and integrate the outputs into your EHR and PACS. We stay for the hard parts like audits, scaling, and change management.

If you want to discuss your first use case or stress test an existing plan, reach out. We will give you a clear path from strategy to production and a system you can defend in a budget meeting.

Contact Webisoft to get started.