Machine Learning in Risk Management: Beyond Static Rules

- February 9, 2026

Machine learning in risk management allows organizations to identify, measure, and respond to risk by analyzing real patterns in data rather than relying on fixed assumptions. It evaluates behavior across systems, detects anomalies as they emerge, and assigns probabilities that help teams act before issues escalate into losses.

If you’re responsible for managing risk, you already feel the pressure. Data arrives from too many sources. Signals are fragmented. Reviews happen after exposure is visible. Rules that once worked now lag behind changing behavior, leaving gaps you are expected to close. This article focuses on those exact challenges.

It explains how ML works in risk management systems, where it strengthens risk detection, what its limitations are, and how it is applied in practice.

Contents

- 1 What Is Machine Learning in Risk Management

- 2 Types of Machine Learning Used in Risk Management

- 3 How Machine Learning Differs From Traditional Risk Models

- 4 How Machine Learning Works in Risk Management Systems

- 5 Types of Risk Machine Learning Can Reduce

- 6 Common Machine Learning Models Used in Risk Management

- 7 Practical Examples of Machine Learning in Risk Management

- 8 Risks and Limitations of Using Machine Learning for Risk Management

- 9 Governance and Controls for ML-Based Risk Systems

- 10 When Machine Learning Is Not the Right Tool for Risk Management

- 11 How Webisoft Helps You with Machine Learning Services

- 12 Conclusion

- 13 FAQs

What Is Machine Learning in Risk Management

Machine learning in risk management refers to using data-driven models to spot, measure, and respond to risk based on observed behavior, not fixed rules. Instead of relying on static thresholds, these systems learn from past incidents, abnormal behavior, and evolving signals to flag risk earlier.

The real value sits in anomaly detection. You are not just predicting known risks. You are identifying deviations that suggest something new is going wrong. That shift moves risk teams from reacting after losses to acting while signals are still weak.

Types of Machine Learning Used in Risk Management

In risk management, the choice of learning approach depends on how the risk data behaves and whether past outcomes are known. Each approach solves a specific problem.

1. Supervised Learning

This approach works when past outcomes are known. Fraud cases, credit defaults, or confirmed policy violations become labeled data. The model learns which patterns typically lead to loss and applies that learning to score similar behavior in real time.
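As a rough illustration of learning from labeled outcomes, the sketch below fits a small logistic regression with plain gradient descent. The two features (`late_payments`, `utilization`), the toy history, and the learning settings are all hypothetical; a production system would normally rely on an established library rather than hand-written training code.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit weights and bias with batch gradient descent on log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # prediction error for this labeled example
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias

def risk_score(x, weights, bias) -> float:
    """Probability-like score between 0 and 1 for a new case."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical labeled history: [late_payments, utilization], label 1 = default
history = [[0, 0.1], [1, 0.3], [0, 0.2], [4, 0.9], [5, 0.8], [3, 0.95]]
outcomes = [0, 0, 0, 1, 1, 1]
weights, bias = train_logistic(history, outcomes)

high = risk_score([5, 0.9], weights, bias)
low = risk_score([0, 0.1], weights, bias)
```

Here `high` should come out well above `low`, because the new case resembles past defaults. The score is only as good as the labels it was trained on.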

2. Unsupervised Learning

This approach is critical for anomaly detection. The model learns normal behavior without labels, then flags deviations that suggest emerging or unknown risks. It works well when new threats do not resemble past incidents.
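One simple way to learn "normal" without labels is a robust z-score built from the median and the median absolute deviation (MAD). The sketch below is a minimal example under that assumption; the `daily_logins` series and the 3.5 cutoff are illustrative choices, not values from a real system.

```python
from statistics import median

def mad_scores(values):
    """Robust z-scores based on the median and median absolute deviation."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return [0.0 for _ in values]
    # 0.6745 rescales MAD so scores are comparable to standard z-scores
    return [0.6745 * (v - center) / mad for v in values]

def flag_anomalies(values, cutoff=3.5):
    """Return the positions whose robust z-score exceeds the cutoff."""
    return [i for i, s in enumerate(mad_scores(values)) if abs(s) > cutoff]

# Hypothetical login counts per day for one account
daily_logins = [12, 14, 13, 15, 11, 13, 14, 12, 96, 13]
flagged = flag_anomalies(daily_logins)  # [8], the day with 96 logins
```

Median-based statistics are used here because a single extreme value barely moves them, so the outlier does not hide itself by inflating the baseline.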

3. Reinforcement Learning

Reinforcement learning appears in environments where risk decisions influence future behavior. The model adjusts actions based on feedback, which makes it useful in areas like algorithmic trading controls or adaptive cybersecurity defenses.

Strengthen risk management with Webisoft’s machine learning service!

Work with Webisoft’s experts to evaluate your risk scenarios and apply ML where it truly fits!

How Machine Learning Differs From Traditional Risk Models

Most risk teams feel the gap before they can explain it. Rules look fine on paper, but they fall apart when behavior shifts. That is where machine learning creates a clear break from older approaches. The main differences are:

| Dimension | Traditional Risk Models | Machine Learning Systems |
| --- | --- | --- |
| Core logic | Predefined rules and thresholds | Learns patterns directly from data |
| Adaptability | Manual updates | Continuous learning from new signals |
| Risk coverage | Known and expected risks | Known risks plus emerging anomalies |
| Decision output | Pass or fail decisions | Probabilistic risk scoring |
| Scalability | Degrades with complexity | Handles high-volume, high-variance data |
| Detection strength | Misses subtle signals | Captures weak, combined indicators |

Traditional models assume risk stays stable. They work until behavior shifts. When patterns change, rules lag behind reality and false decisions increase.

Machine learning systems rely on risk scoring models that adjust as data evolves. Instead of reacting after losses, they surface early deviations that signal growing risk.

Why Traditional Risk Management Approaches Fall Short

Most traditional risk frameworks were designed for stable environments. But risk behaves differently. It moves faster, spreads across systems, and hides inside data patterns that rules were never built to catch. Here’s why traditional approaches fall short:

Static Rules Cannot Keep Up With Changing Risk

Rule-based systems depend on manual updates. Someone must notice a new pattern, analyze it, and rewrite thresholds. By the time that happens, behavior has already shifted.

This delay creates blind spots where emerging threats pass through unchecked, especially in real-time environments.

Linear Models Miss Complex Risk Interactions

Traditional statistical models assume clean, linear relationships. Real risk does not work that way. Multiple weak signals often interact to create exposure.

When models cannot capture non-linear dependencies, they underestimate risk or flag it too late. This is where risk prediction models that learn interactions from data tend to outperform fixed statistical formulas.

Human-Centric Processes Do Not Scale

Human judgment plays an important role, but it does not scale well. Fatigue, bias, and inconsistency creep in as volume grows. When analysts review thousands of signals manually, decision quality drops.

Risk becomes unevenly managed, and early warning signs are missed. These failures are structural, not operational. That is why organizations look beyond traditional approaches.

Why Risk Problems Are Well-Suited for Machine Learning

Risk rarely shows up as a single clear signal. It builds quietly through small changes in behavior, volume, timing, and context.

That is exactly why predictive risk management depends on machine learning rather than fixed logic. These systems are built to work inside uncertainty, not around it. Here is why risk problems align so well with machine learning:

- Risk is pattern-driven, not rule-driven: Loss events usually come from combinations of weak signals. Machine learning connects those signals instead of treating them in isolation.

- Risk decisions are probabilistic by nature: There is no absolute safe or unsafe state. Models assign likelihoods, which supports better prioritization and control.

- Behavior changes faster than rules can: As user actions shift, learning systems adjust without waiting for manual updates.

- Anomalies matter more than averages: Most serious risks begin as deviations from normal behavior, not obvious violations.

- Feedback improves accuracy over time: Each confirmed incident sharpens future predictions, reducing blind spots.

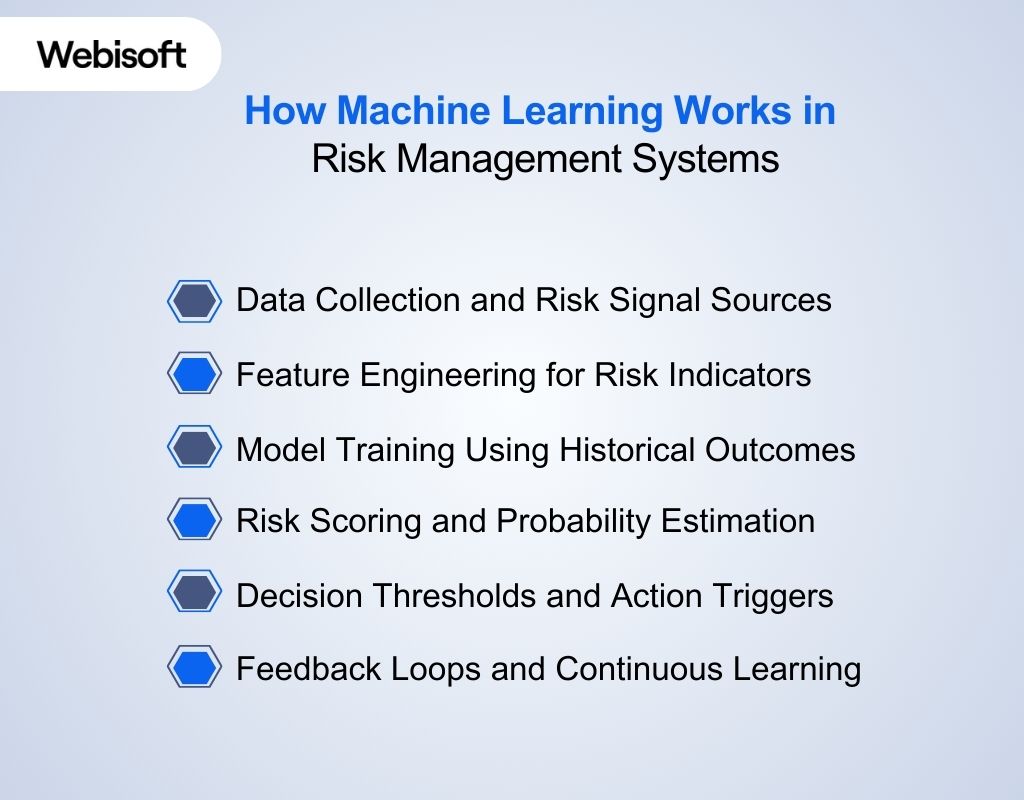

How Machine Learning Works in Risk Management Systems

At a system level, machine learning in risk management follows a clear flow. Each stage exists to reduce noise, surface anomalies, and support faster decisions without guessing.

Data Collection and Risk Signal Sources

Risk data comes from many places. Transactions, system logs, user behavior, access records, and third-party feeds all carry signals. The goal is coverage. Missing one source often means missing early warnings, especially when risks span multiple systems.

Feature Engineering for Risk Indicators

Raw data is not useful on its own. It needs structure. Feature engineering converts activity into indicators like frequency changes, unusual timing, or deviation from normal behavior. This step is where anomaly detection starts to become possible.
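A minimal sketch of this step, assuming each raw event is a dictionary with a timestamp `ts` and an `amount`. The field names, the sample history, and the "before 05:00 is unusual" window are hypothetical and would differ by business.

```python
from datetime import datetime
from statistics import mean, pstdev

def build_features(history, event):
    """Turn one event plus prior history into simple risk indicators."""
    amounts = [e["amount"] for e in history]
    avg, spread = mean(amounts), pstdev(amounts)
    same_day = [e for e in history if e["ts"].date() == event["ts"].date()]
    return {
        # distance from this account's usual amount, in standard deviations
        "amount_deviation": (event["amount"] - avg) / spread if spread else 0.0,
        # number of earlier events on the same calendar day (frequency signal)
        "same_day_count": len(same_day),
        # activity between midnight and 05:00 is treated as unusual (assumed window)
        "odd_hour": event["ts"].hour < 5,
    }

history = [
    {"ts": datetime(2026, 2, 1, 10, 0), "amount": 40.0},
    {"ts": datetime(2026, 2, 2, 12, 30), "amount": 60.0},
    {"ts": datetime(2026, 2, 3, 9, 15), "amount": 50.0},
    {"ts": datetime(2026, 2, 4, 1, 5), "amount": 50.0},
]
event = {"ts": datetime(2026, 2, 4, 2, 40), "amount": 400.0}
features = build_features(history, event)
```

Each indicator is cheap to compute, and together they express "how different is this event from what we normally see", which is the input an anomaly model needs.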

Model Training Using Historical Outcomes

Models learn by linking past behavior to confirmed outcomes. Loss events, incidents, and near misses teach the system what risk looks like in real conditions. Training focuses on patterns, not isolated events.

Risk Scoring and Probability Estimation

Instead of binary decisions, models assign probabilities. This allows teams to rank exposure and focus attention where it matters most. Scoring supports scale without overwhelming reviewers.
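One common way to turn probabilities into a review queue is to weight each score by the amount at risk. The sketch below assumes every case carries a model `probability` and a monetary `exposure`; both field names and the figures are illustrative.

```python
def rank_by_expected_loss(cases):
    """Sort cases so the largest probability-weighted exposure comes first."""
    return sorted(
        cases,
        key=lambda c: c["probability"] * c["exposure"],
        reverse=True,
    )

# Hypothetical scored cases
cases = [
    {"id": "A", "probability": 0.90, "exposure": 100.0},
    {"id": "B", "probability": 0.10, "exposure": 5000.0},
    {"id": "C", "probability": 0.40, "exposure": 300.0},
]
queue = [c["id"] for c in rank_by_expected_loss(cases)]  # ["B", "C", "A"]
```

Case B has the lowest probability but the highest expected loss, so it is reviewed first. A binary pass or fail output could not express that ordering.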

Decision Thresholds and Action Triggers

Scores only matter when tied to action. Thresholds define when alerts fire, reviews start, or controls activate. These thresholds balance false alarms against missed risks.
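The trade-off between false alarms and missed risks can be made explicit by pricing both kinds of error. The following sketch picks the cheapest threshold from a short candidate list; the cost weights (`miss_cost`, `alarm_cost`) and the sample scores are assumptions that a real team would set from its own loss data.

```python
def confusion_at(threshold, scores, labels):
    """Count false alarms and missed risks for one alert threshold."""
    false_alarms = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    missed = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return false_alarms, missed

def pick_threshold(scores, labels, candidates, miss_cost=5.0, alarm_cost=1.0):
    """Choose the candidate threshold with the lowest total error cost."""
    def cost(t):
        false_alarms, missed = confusion_at(t, scores, labels)
        return alarm_cost * false_alarms + miss_cost * missed
    return min(candidates, key=cost)

# Hypothetical validation scores with confirmed outcomes (1 = real incident)
scores = [0.05, 0.20, 0.35, 0.40, 0.55, 0.70, 0.85, 0.95]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
best = pick_threshold(scores, labels, [0.3, 0.5, 0.8])  # 0.3 under these costs
```

With a missed incident priced at five times a false alarm, the lowest threshold wins. Raising `alarm_cost` would push the choice toward a stricter threshold.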

Feedback Loops and Continuous Learning

Every confirmed outcome feeds back into the system. Over time, models adjust to new behavior, which strengthens anomaly detection in risk management and keeps controls aligned with reality.
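A feedback loop does not have to mean full retraining. As a minimal sketch, the class below keeps a running baseline that is updated one confirmed observation at a time (Welford's algorithm), so the definition of "normal" follows reviewed behavior. The class name and the sample amounts are hypothetical.

```python
class RunningBaseline:
    """Incrementally tracked mean and variance (Welford's algorithm)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (value - self.mean)

    @property
    def std(self) -> float:
        return (self._m2 / self.n) ** 0.5 if self.n else 0.0

    def z_score(self, value: float) -> float:
        return (value - self.mean) / self.std if self.std else 0.0

baseline = RunningBaseline()
for amount in [100, 110, 90, 105, 95]:
    baseline.update(amount)
before = baseline.z_score(150)  # far outside the initial baseline

# Analysts confirm that amounts near 150 are legitimate; feed them back
for amount in [150, 145, 155]:
    baseline.update(amount)
after = baseline.z_score(150)  # now much closer to normal
```

Only confirmed outcomes should be fed back. Updating on unreviewed data lets an attacker slowly teach the baseline that abnormal behavior is normal.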

Types of Risk Machine Learning Can Reduce

Risk rarely lives in isolation. It moves across financial activity, internal processes, regulatory boundaries, and technical systems. What makes ML effective is its ability to observe these domains continuously and surface deviations early. Here are the types of risk ML can help reduce:

Credit and Financial Risk

Financial risk builds through behavior, not headlines. Small changes in repayment timing, exposure concentration, or account usage often precede defaults.

Machine learning identifies these gradual shifts across large datasets, helping teams act while losses are still preventable.

Fraud and Financial Crime Risk

Fraud adapts faster than rules. Attackers test boundaries, alter behavior, and exploit timing gaps. Fraud detection using machine learning focuses on transaction flow and behavioral anomalies, catching schemes that look normal in isolation but suspicious in context.

Operational Risk

Process failures tend to repeat before they escalate. Missed handoffs, approval delays, and system inconsistencies leave patterns behind. In operational risk management, learning systems analyze workflow behavior to identify emerging breakdowns instead of waiting for incidents to occur.

Compliance and Regulatory Risk

Compliance violations rarely start as clear breaches. They begin as subtle deviations in access, communication, or transaction behavior. Compliance risk monitoring uses pattern analysis to surface these early warning signs, allowing intervention before regulatory exposure increases.

Cybersecurity and Technology Risk

Most cyber incidents do not start with alarms. They start with unusual access paths, odd usage times, or unexpected data movement. Cybersecurity risk analytics detects these weak signals across systems, helping teams respond before threats escalate.

Supply Chain and Third-Party Risk

External dependencies add layers of uncertainty. Shifts in vendor behavior, delivery timing, or volume trends often signal disruption ahead. Machine learning tracks these deviations to highlight growing third-party risk early.

If you’re dealing with a different risk profile or are unsure whether machine learning is the right approach, consult Webisoft’s machine learning experts for a thorough discussion.

Common Machine Learning Models Used in Risk Management

Risk does not behave the same way across systems, timelines, or data types. That is why model selection matters more than algorithm popularity. In machine learning in risk management, models are chosen based on how decisions are made, how signals appear, and how quickly risk evolves.

Classification Models for Risk Prediction

Classification models are used when decisions require clear categorization. They support binary outcomes, such as approve or block, and multi-class decisions, such as low, medium, or high risk.

These models rely on historical outcomes and are effective when consistency and scale matter more than interpretability at the individual level.

Anomaly Detection Models for Unknown Threats

Not all risks are known in advance. Anomaly detection models focus on learning normal behavior first, then identifying deviations.

This makes them well suited for uncovering rare or emerging threats that do not match past patterns. Their strength lies in early warning, not confirmation.

Time-Series Models for Trend and Volatility Risk

Many risks develop over time rather than in single events. Time-series models analyze sequences to detect trends, seasonality, and sudden shifts. They are used when changes in frequency, volume, or volatility signal rising exposure.
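A very small example of sequence-based detection is a rolling z-score, which compares each new point with a trailing window of recent values. This is a sketch of one simple technique, not a full time-series model; the `weekly_chargebacks` data, window size, and cutoff are assumptions.

```python
from collections import deque
from statistics import mean, pstdev

def rolling_shift_alerts(series, window=5, cutoff=3.0):
    """Flag points that sit far outside the trailing window's distribution."""
    recent = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(series):
        # only score once a full window of history is available
        if len(recent) == window:
            spread = pstdev(recent)
            if spread and abs(value - mean(recent)) / spread > cutoff:
                alerts.append(i)
        recent.append(value)
    return alerts

# Hypothetical weekly chargeback counts
weekly_chargebacks = [20, 22, 19, 21, 20, 23, 21, 48, 22, 20]
alerts = rolling_shift_alerts(weekly_chargebacks)  # [7], the week with 48
```

Note that the spike enters the window after it is flagged and temporarily widens the baseline. Models that handle trend and seasonality explicitly are less sensitive to that effect.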

Natural Language Processing for Unstructured Risk Data

Risk signals often exist in text. Logs, alerts, reports, and communications contain patterns that structured data misses. Natural language processing models extract meaning from this unstructured data, helping surface recurring issues and early warning signals hidden in written records.

Practical Examples of Machine Learning in Risk Management

Theory matters, but risk teams trust systems only after seeing them work. These examples show how machine learning in risk management operates in real environments, from raw signals to decisions that reduce loss.

Fraud Detection in Financial Transactions

Fraud systems ingest transaction amount, velocity, device history, location shifts, and merchant behavior. Models evaluate how each transaction deviates from an account’s normal pattern and its peer group.

Output is a probability score. Decisions follow thresholds that trigger step-up authentication, temporary holds, or immediate blocks when anomaly strength crosses tolerance.

Credit Risk Scoring for Lending Decisions

Credit models combine financial data with behavioral signals such as payment consistency, balance movement, and income volatility over time. Risk updates continuously as behavior changes.

Lenders adjust limits, pricing, or approval paths early, rather than reacting after defaults appear. This is where AI and machine learning in risk management improve timing, not just prediction accuracy.

Operational Risk Monitoring in Enterprises

Operational systems analyze incident logs, workflow delays, access issues, and control failures across teams. Models cluster related events and flag abnormal frequency increases. Early warnings allow intervention before process weaknesses escalate into financial or regulatory impact.

Risks and Limitations of Using Machine Learning for Risk Management

ML earns trust only when its limits are understood. Many risk failures don’t come from bad intent or weak tools, but from overconfidence in models that were never designed to operate without oversight. Here are some limitations of ML in risk management:

Bias and Fairness Risks

Bias usually enters models through data rather than code. When historical decisions reflect unequal treatment or incomplete representation, models scale those patterns silently. Key risk drivers include:

- Skewed training data that overrepresents certain behaviors

- Proxy variables that indirectly encode sensitive attributes

- Feedback loops where past model decisions shape future data

Left unchecked, these issues lead to discriminatory outcomes that are hard to detect after deployment.
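A basic check that can run before and after deployment is to compare how often each group is flagged. The sketch below computes per-group flag rates and their ratio; the group labels and counts are made up, and what counts as an acceptable gap is a policy and legal question rather than something the code decides.

```python
from collections import defaultdict

def flag_rates(decisions):
    """Share of cases flagged as high risk within each group."""
    totals, flagged = defaultdict(int), defaultdict(int)
    for group, was_flagged in decisions:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {g: flagged[g] / totals[g] for g in totals}

def rate_ratio(decisions):
    """Lowest group flag rate divided by the highest (1.0 means parity)."""
    rates = flag_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical model decisions as (group, flagged) pairs
decisions = (
    [("group_a", True)] * 10 + [("group_a", False)] * 90
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)
rates = flag_rates(decisions)   # group_a: 0.10, group_b: 0.25
ratio = rate_ratio(decisions)   # 0.4
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a signal to inspect the training data and any proxy variables before trusting the scores.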

Overfitting and Data Leakage

Some models perform well only because they learned shortcuts. Common failure causes:

- Training on data that includes future signals

- Excessive feature tuning without proper validation

- Testing on datasets that resemble training data too closely

The result is false confidence. Performance looks strong until real-world conditions expose the gap.
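One guard against future signals leaking into training is to split data by time instead of at random, so the model is always tested on records that come after everything it learned from. A minimal sketch, assuming each record carries a `date` field:

```python
from datetime import date

def time_split(records, cutoff):
    """Train on records before the cutoff date, test on records from it onward."""
    train = [r for r in records if r["date"] < cutoff]
    test = [r for r in records if r["date"] >= cutoff]
    return train, test

# Hypothetical labeled records
records = [
    {"date": date(2025, 1, 10), "label": 0},
    {"date": date(2025, 3, 5), "label": 1},
    {"date": date(2025, 6, 20), "label": 0},
    {"date": date(2025, 9, 1), "label": 1},
]
train, test = time_split(records, cutoff=date(2025, 6, 1))
```

The same rule applies to features: anything computed for a training record should use only information that was available at that record's date.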

Concept Drift and Changing Risk Behavior

Risk behavior changes constantly. Attackers adapt, users shift habits, and markets evolve. In machine learning in risk management, models decay when they are not retrained against current data. Warning signs include:

- Rising false positives

- Missed anomalies that were previously detected

- Gradual performance drop without obvious errors
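Drift can also be measured directly on model inputs or scores, before accuracy visibly drops. A widely used measure is the Population Stability Index (PSI), which compares the binned distribution seen in training with the one seen in production. The sketch below assumes all values fall within the given bin edges; the edges and sample scores are illustrative.

```python
import math

def psi(expected, actual, edges):
    """Population Stability Index between two samples over shared bin edges."""
    def shares(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            for i in range(len(edges) - 1):
                last = i == len(edges) - 2
                # bins are [low, high), except the last one, which includes its upper edge
                if edges[i] <= v < edges[i + 1] or (last and v == edges[-1]):
                    counts[i] += 1
                    break
        # a small floor avoids log(0) for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
live_scores = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.95, 0.85]

no_drift = psi(training_scores, training_scores, edges)  # 0.0
drift = psi(training_scores, live_scores, edges)         # clearly above zero
```

PSI is zero when the two distributions match and grows as they diverge. The alert level that triggers retraining is a judgment call for each team.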

Explainability and Trust Challenges

Risk decisions do not end at prediction. Someone has to justify them. When a model flags an account, blocks a transaction, or escalates an incident, teams must explain why that decision happened. If they cannot, trust breaks down quickly. This becomes a problem when:

- Regulators ask for reasoning behind automated decisions

- Risk analysts need to challenge or override a model’s output

- Audit teams require clear decision trails

- Business leaders demand accountability for outcomes

In risk management, accuracy alone is not enough. A system that cannot explain its decisions creates operational and regulatory exposure, even if its predictions are statistically strong.
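For linear scoring models, a simple form of explanation is to report which features pushed a score up the most relative to a reference case. The sketch below does that with hypothetical weights and feature names; more complex models need dedicated attribution methods, which this example does not cover.

```python
def reason_codes(weights, baseline, features, top_n=2):
    """Rank features by how much they raise a linear score above the baseline case."""
    contributions = {
        name: weights[name] * (features[name] - baseline[name]) for name in weights
    }
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    # keep only features that actually increased the score
    return [(name, round(value, 3)) for name, value in ranked[:top_n] if value > 0]

# Hypothetical model weights, reference case, and flagged transaction
weights = {"amount_deviation": 0.8, "new_device": 1.5, "odd_hour": 0.6}
baseline = {"amount_deviation": 0.0, "new_device": 0.0, "odd_hour": 0.0}
features = {"amount_deviation": 3.2, "new_device": 1.0, "odd_hour": 0.0}

codes = reason_codes(weights, baseline, features)
```

In this example `codes` lists `amount_deviation` first and `new_device` second, which gives an analyst or auditor a concrete starting point for challenging the decision.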

Governance and Controls for ML-Based Risk Systems

Machine learning speeds up risk decisions, but speed without control creates exposure. To keep systems reliable and defensible, especially in financial risk prediction, governance must focus on three core control layers:

Model Validation and Performance Monitoring

Models must be validated before deployment and monitored continuously after. This includes testing accuracy on unseen data, tracking error rates over time, and watching for performance decay as behavior changes. Without active monitoring, models drift while teams assume stability.

Human-in-the-Loop Risk Decisions

Automation should support decisions, not replace accountability. Human review is required when risk scores cross material thresholds, when context contradicts outputs, or when edge cases fall outside training data. This prevents blind reliance on models.

Auditability and Regulatory Readiness

Clear documentation, version tracking, and decision logs allow teams to justify outcomes to auditors and regulators. Transparency keeps ML defensible.
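A decision log can be as simple as one structured record per scored case. The sketch below shows one possible shape; the field names, the version string, and the JSON-lines format are assumptions, not a regulatory standard.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class DecisionRecord:
    case_id: str
    model_version: str
    score: float
    threshold: float
    action: str
    reviewer: Optional[str]  # set when a human confirms or overrides the model
    decided_at: str

def log_decision(case_id, model_version, score, threshold, action, reviewer=None):
    """Serialize one decision as a single JSON line for an append-only log."""
    record = DecisionRecord(
        case_id, model_version, score, threshold, action, reviewer,
        datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(record), sort_keys=True)

# Hypothetical decision on a held transaction
line = log_decision("case-001", "fraud-v1.4.2", 0.91, 0.80, "hold", reviewer="analyst_17")
entry = json.loads(line)
```

Recording the model version and threshold alongside the score means a decision can later be reproduced and explained, even after the model has been retrained.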

When Machine Learning Is Not the Right Tool for Risk Management

Machine learning adds value when patterns repeat and data behaves consistently. But risk doesn’t always follow those conditions.

In some situations, applying machine learning in risk management increases complexity without improving decision quality. Knowing when not to use ML is part of building a credible risk strategy.

Situations Requiring Regulatory Transparency

Some risk decisions must be explainable by design. In regulated industries, models need to show clear reasoning behind outcomes.

Complex ML models often act as black boxes, making it difficult to provide audit trails, reason codes, or fairness justifications during regulatory reviews.

High-Stakes Situations With Limited Data

ML depends on historical data. Rare events such as major cyber incidents or unprecedented market crashes provide too few examples to learn from.

The same applies to new products or markets where no training data exists. In these cases, expert judgment is usually more reliable than statistical learning.

Environments With High Concept Drift

ML assumes future behavior resembles past data. When markets shift suddenly due to geopolitical events or rapid behavior changes, models trained on historical patterns lose relevance. Constant retraining becomes costly and unreliable.

Poor Data Quality

Messy, incomplete, or biased data leads to misleading outputs. Siloed systems and biased historical records cause models to amplify existing problems rather than solve them.

When Human Judgment Is Essential

Risk decisions often involve context, intent, and strategic trade-offs. Legal, reputational, and strategic risks require interpretation beyond correlations. In these areas, machine learning in risk management should support humans, not replace them.

When Overfitting Creates False Confidence

Overfitted models perform well in testing but fail in real conditions. This false confidence can lead to high-impact losses when unseen scenarios occur.

How Webisoft Helps You with Machine Learning Services

Applying ML to risk requires more than technical skill. It requires knowing where risk hides, how anomalies surface, and how models should influence decisions. Webisoft applies machine learning through a risk-first lens, focusing on anomaly detection, predictive signals, and operational control rather than abstract model building.

This makes machine learning in risk management actionable instead of experimental. Here’s how Webisoft supports risk-focused ML initiatives:

- Risk trend forecasting: Use historical operational and behavioral data to anticipate emerging risk patterns, helping teams act before exposure materializes.

- Anomaly-driven ML software design: Build custom systems that convert raw, unstructured activity into risk indicators and anomaly signals tied to real decisions.

- Predictive performance monitoring: Apply predictive models to detect early degradation in processes, controls, or systems that signal rising operational risk.

- Complex pattern detection using neural systems: Identify non-linear risk interactions across large, multi-variable datasets that rule-based controls cannot capture.

- Outlier discovery through data mining: Surface irregular behavior, rare events, and hidden dependencies that indicate new or evolving risk conditions.

- ML-based cybersecurity risk detection: Apply learning systems to cybersecurity and operational data to identify abnormal access patterns and emerging threats early.

Ready to start a machine learning journey in risk reduction? Contact Webisoft to book a machine learning development service today!

Conclusion

Machine learning in risk management has shifted how organizations identify, assess, and respond to uncertainty in complex environments. By detecting anomalies, adapting to changing behavior, and supporting earlier decisions, it strengthens risk control where traditional models fall short.

Still, its value depends on proper governance, data quality, and human oversight. Used thoughtfully, machine learning becomes a practical tool for reducing exposure, not just predicting outcomes.

FAQs

Here are some commonly asked questions regarding machine learning in risk management:

How long does it take to deploy machine learning in risk management?

Deployment time depends on data readiness and scope. Most production-grade machine learning in risk management initiatives take three to six months from data assessment to monitored deployment.

Can machine learning work with existing risk management systems?

Yes. Machine learning models are commonly integrated into existing risk platforms through APIs, data pipelines, or scoring layers, allowing teams to enhance current workflows without replacing core systems.

How is data privacy handled in machine learning risk systems?

Data privacy is managed through access controls, anonymization, encryption, and governance policies, ensuring sensitive information is protected while models still learn from behavioral patterns.