Machine Learning in Operations: Key Concepts Explained

- January 22, 2026

Machine learning rarely breaks during training. It breaks later, when live data shifts, models slow down, and no one knows which version is running. That quiet failure is where most ML projects lose business trust. Machine learning in operations focuses on what happens after deployment, when models must function inside real systems and workflows.

This is the layer where MLOps turns experimental models into systems teams can actually operate. By reading this, you will understand why MLOps is required, how operational pipelines are structured, and what to monitor across a model’s lifecycle. You will gain practical clarity on running machine learning reliably in production.

Contents

- 1 What is Machine Learning Operations (MLOps)?

- 2 Why MLOps is Required

- 3 Core Principles of MLOps

- 4 Key Components of a Machine Learning Operations Pipeline

- 5 Stages of a Machine Learning Operations Lifecycle

- 6 Benefits of Machine Learning in Operations

- 7 Challenges and Limitations of MLOps

- 8 Machine Learning Operations (MLOps) vs DevOps

- 9 How Webisoft Supports Machine Learning Operations

- 10 Conclusion

- 11 Frequently Asked Questions

What is Machine Learning Operations (MLOps)?

Machine Learning Operations, or MLOps, is the practice of deploying, managing, and maintaining machine learning models in real production environments. It focuses on what happens after training, when models must handle live data, support real users, and stay reliable over time.

MLOps introduces structured workflows for deployment, monitoring, version control, and retraining. These practices help teams detect data shifts, track model changes, and prevent silent failures that can disrupt business systems.

MLOps brings consistency, traceability, and operational control so that machine learning operates as a stable component within larger systems, not as a one-off analytical experiment. In practice, this is what MLOps means in production environments.

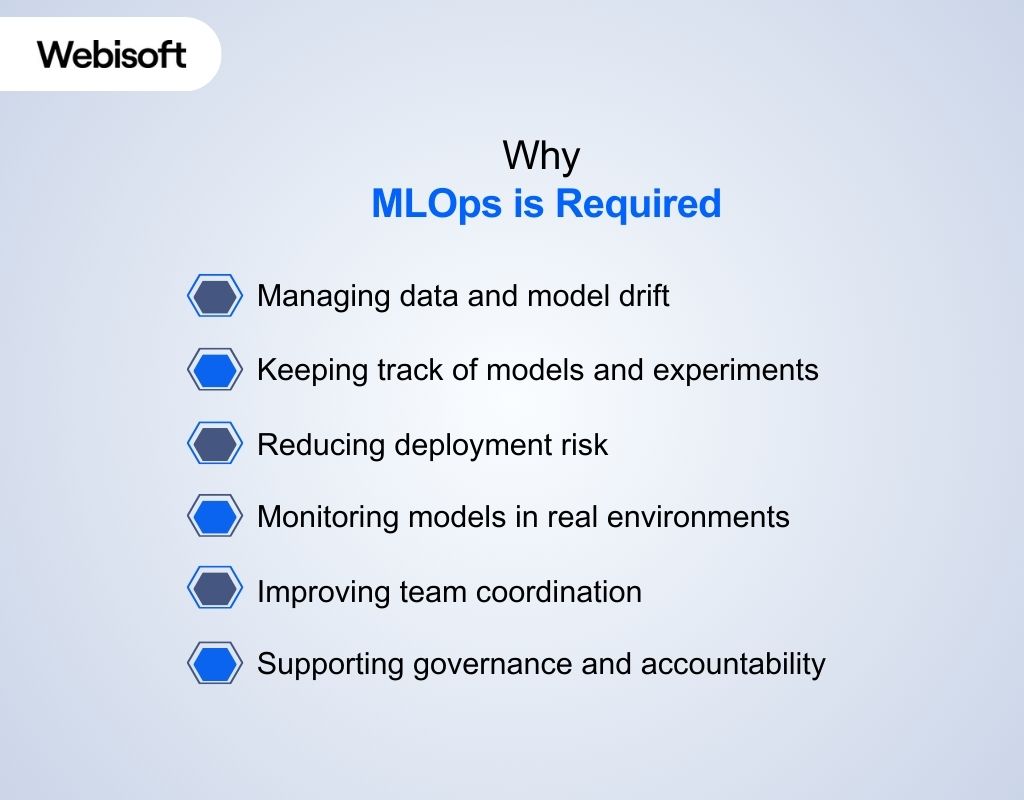

Why MLOps is Required

Understanding what MLOps is explains its role, but it does not explain why it becomes necessary in real systems. The need appears once machine learning moves beyond experimentation and starts operating under production constraints. Here are the key reasons why MLOps is needed:

Managing data and model drift

Data rarely stays the same for long. Customer behavior, market conditions, and upstream systems change continuously. Over time, these shifts reduce model accuracy. MLOps helps teams detect drift early and respond before it affects business outcomes.
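One common way to quantify this kind of shift is the Population Stability Index (PSI), which compares how a feature is distributed in training data versus live data. The sketch below is a minimal, standard-library illustration and not a production detector; the function name `psi`, the bin count, and the 0.2 alert threshold mentioned afterwards are assumptions chosen for the example.

```python
import math
from collections import Counter


def psi(expected, actual, bins=10):
    """Population Stability Index between a reference and a live sample.

    Bins are equal-width over the range of the reference (training) sample.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values):
        # Clamp out-of-range live values into the first or last bucket.
        counts = Counter(
            min(max(int((v - lo) / width), 0), bins - 1) for v in values
        )
        # A small floor avoids log(0) for empty buckets.
        return [max(counts.get(b, 0) / len(values), 1e-6) for b in range(bins)]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = list(range(100))          # hypothetical reference feature values
live = [v + 50 for v in training]    # hypothetical shifted production values
drift_score = psi(training, live)
```

A frequently quoted rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, but the right threshold depends on the feature and the business context.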

Keeping track of models and experiments

Machine learning development involves frequent experimentation. Without structure, teams lose visibility into which model is running and how it was created. MLOps maintains traceability across models, data, and code so production systems remain explainable.

Reducing deployment risk

Deploying a machine learning model introduces uncertainty that traditional releases do not face. Data dependencies and unpredictable behavior can cause failures. MLOps adds validation, controlled releases, and rollback paths to reduce operational risk, often supported by dedicated MLOps tools that standardize these processes.

A systematic study of MLOps best practices highlights how standardized workflows and maturity models improve reliability and scalability in production environments.

Monitoring models in real environments

Models that perform well during training can behave differently in production. Latency issues, unexpected inputs, or scaling problems often appear later. MLOps enables continuous monitoring so issues are identified before they escalate.
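As an illustration of continuous monitoring, the sketch below keeps a rolling window of request latencies and flags when the 95th percentile exceeds a budget. The class name `LatencyMonitor`, the window size, and the 200 ms budget are hypothetical values for the example; a real system would usually export these numbers to a metrics platform rather than hold them in memory.

```python
from collections import deque


class LatencyMonitor:
    """Rolling-window p95 latency check (illustrative only)."""

    def __init__(self, window=1000, p95_budget_ms=200.0):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.p95_budget_ms = p95_budget_ms

    def record(self, latency_ms):
        self.samples.append(latency_ms)

    def p95(self):
        if not self.samples:
            return 0.0
        ordered = sorted(self.samples)
        # Nearest-rank percentile, using integer arithmetic for ceil(0.95 * n).
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def breached(self):
        return self.p95() > self.p95_budget_ms
```

Calling `record()` on every prediction request and checking `breached()` on a schedule is one simple way to surface latency regressions before users report them.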

Improving team coordination

Machine learning systems involve multiple teams with different responsibilities. Without shared processes, ownership becomes unclear. MLOps defines workflows that allow data scientists, engineers, and operations teams to collaborate effectively.

Supporting governance and accountability

Organizations need to understand how models change over time. MLOps keeps a clear record of data sources, model updates, approvals, and deployments, supporting audits, reviews, and long-term accountability.

Build reliable machine learning systems with Webisoft.

Book a consultation to operationalize machine learning across your production workflows.

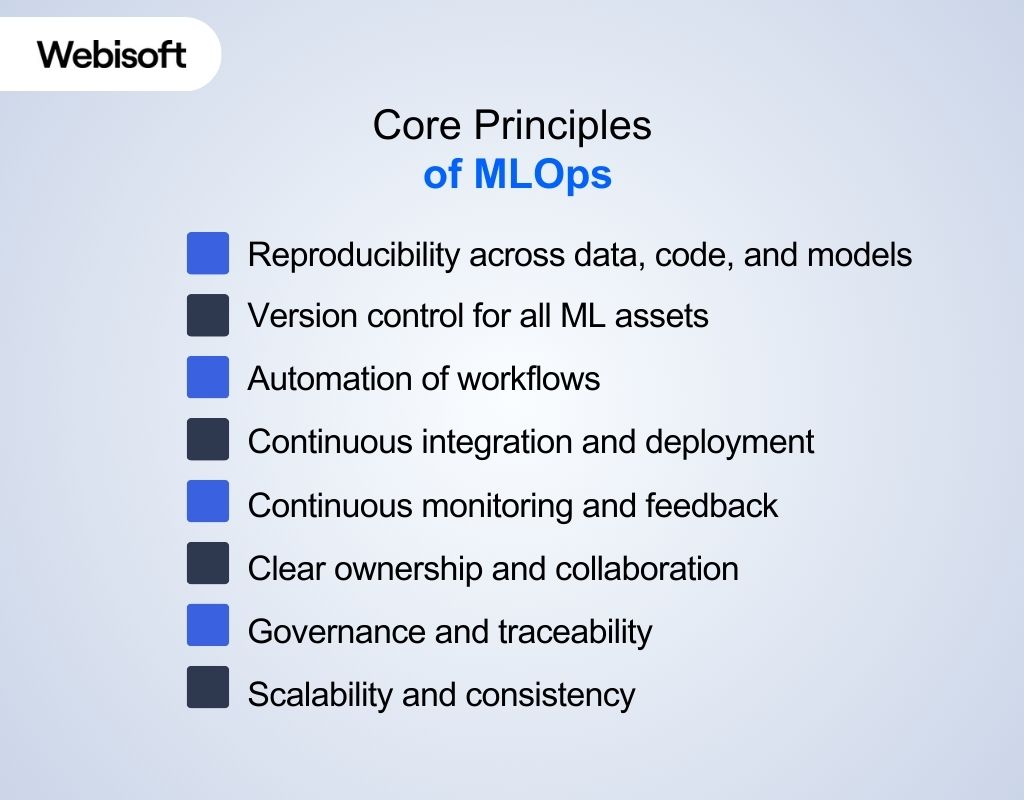

Core Principles of MLOps

MLOps is built on a set of principles that keep machine learning systems reliable once they operate in production. These principles focus on control, visibility, and repeatability across the entire lifecycle.

Reproducibility across data, code, and models

Every production model must be reproducible. This requires tracking datasets, feature logic, training code, configurations, and model artifacts together. Reproducibility allows teams to understand how a model was created and to rebuild it when issues arise.
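One way to make "tracked together" concrete is to derive a single fingerprint from the dataset, the training configuration, and the code version. The sketch below is an assumption-laden illustration: `run_fingerprint` is a hypothetical helper, the dataset is passed as raw bytes for simplicity, and `code_version` stands in for something like a commit identifier.

```python
import hashlib
import json


def run_fingerprint(data_bytes, config, code_version):
    """Return one hash that changes if data, config, or code changes."""
    h = hashlib.sha256()
    h.update(hashlib.sha256(data_bytes).digest())
    # sort_keys makes the hash independent of dictionary key order.
    h.update(json.dumps(config, sort_keys=True).encode("utf-8"))
    h.update(code_version.encode("utf-8"))
    return h.hexdigest()


fingerprint = run_fingerprint(
    b"customer_id,amount\n1,10.5\n",        # hypothetical dataset contents
    {"learning_rate": 0.1, "max_depth": 6},  # hypothetical training config
    "abc1234",                               # hypothetical code version
)
```

Storing this fingerprint next to the model artifact lets a team check later whether a rebuilt model used exactly the same inputs.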

Version control for all ML assets

Machine learning systems change frequently. Data updates, feature adjustments, and model improvements happen continuously. Version control across data, code, and models allows teams to trace changes and compare outcomes without confusion.

Automation of workflows

Manual steps slow teams down and introduce errors. MLOps relies on automated pipelines for training, testing, validation, and deployment. Automation supports consistent execution and reduces dependency on individual contributors.
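The idea of an automated pipeline can be sketched as an ordered list of steps that run without manual intervention and stop at the first failure. This is a toy illustration, not how any particular orchestration tool works; `run_pipeline` and the step names are hypothetical.

```python
def run_pipeline(steps, context):
    """Run (name, function) steps in order; stop at the first failure."""
    completed = []
    for name, step in steps:
        try:
            context = step(context)  # each step receives the previous output
        except Exception as exc:
            return {
                "ok": False,
                "failed": name,
                "error": str(exc),
                "completed": completed,
            }
        completed.append(name)
    return {"ok": True, "completed": completed, "context": context}


# Hypothetical steps standing in for training, testing, and deployment.
result = run_pipeline(
    [
        ("train", lambda ctx: {**ctx, "model": "trained"}),
        ("test", lambda ctx: {**ctx, "tests_passed": True}),
        ("deploy", lambda ctx: {**ctx, "deployed": True}),
    ],
    {"dataset": "v1"},
)
```

Because every run follows the same sequence and records where it stopped, the outcome no longer depends on who happened to execute the steps.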

Continuous integration and deployment

Machine learning systems benefit from controlled, repeatable releases. Continuous integration validates changes early, while structured deployment processes allow models to be promoted safely into production environments.

Continuous monitoring and feedback

Once deployed, models must be observed continuously. Monitoring covers prediction quality, latency, failures, and data behavior. Feedback from production signals informs retraining and system adjustments.

Clear ownership and collaboration

MLOps defines responsibilities across data science, engineering, and operations. Clear ownership prevents gaps where issues go unresolved and supports smoother collaboration throughout the lifecycle.

Governance and traceability

Production machine learning requires accountability. MLOps maintains records of approvals, changes, and deployments. This traceability supports audits, reviews, and compliance requirements in regulated environments.

Scalability and consistency

As organizations deploy more models, operational complexity grows. MLOps principles promote consistency across pipelines and environments, allowing teams to scale without increasing risk or overhead.

Key Components of a Machine Learning Operations Pipeline

The principles of machine learning in operations describe how machine learning systems should operate in production. To apply them consistently, teams rely on concrete pipeline components that provide execution, visibility, and control. Each component plays a specific operational role as systems grow in complexity.

Data ingestion and validation layer

This component handles incoming data from source systems. It validates schemas, checks data quality, and ensures consistency before data enters training or inference workflows. Early validation prevents downstream failures caused by incomplete or corrupted inputs.
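A minimal sketch of schema validation at the ingestion boundary follows. The field names and types in `EXPECTED_SCHEMA` are hypothetical, and real pipelines typically rely on a dedicated validation library; the point is only that bad records are rejected before they reach training or inference.

```python
# Hypothetical schema: field name -> required Python type.
EXPECTED_SCHEMA = {"customer_id": int, "amount": float, "country": str}


def validate_record(record, schema=EXPECTED_SCHEMA):
    """Return a list of problems; an empty list means the record is valid."""
    errors = []
    for field, expected_type in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif record[field] is None:
            errors.append(f"null value: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(f"wrong type for {field}: {type(record[field]).__name__}")
    return errors


def split_valid(records, schema=EXPECTED_SCHEMA):
    """Separate records that pass validation from those that do not."""
    valid, rejected = [], []
    for record in records:
        (rejected if validate_record(record, schema) else valid).append(record)
    return valid, rejected
```

Rejected records can be logged or routed to a quarantine location so that data quality problems are visible instead of silently distorting the model.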

Feature management layer

Features used by models must remain consistent between training and production. This layer manages feature definitions, transformations, and storage so the same logic is applied across environments. It reduces training-serving mismatches (often called training-serving skew) that lead to inaccurate predictions.
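The simplest form of this consistency is a single feature function that both the training job and the serving path call. The sketch below assumes hypothetical raw fields `amount` and `day_of_week` (with 0 meaning Monday); dedicated feature stores add storage and versioning on top of the same idea.

```python
import math


def build_features(raw):
    """Single source of truth for feature logic, shared by training and serving."""
    return {
        "log_amount": math.log1p(max(raw["amount"], 0.0)),
        "is_weekend": 1 if raw["day_of_week"] >= 5 else 0,  # assumes 0 = Monday
    }


def training_rows(history):
    # Offline path: transform historical records in bulk.
    return [build_features(record) for record in history]


def serving_row(request):
    # Online path: transform one incoming request with the same function.
    return build_features(request)
```

Because both paths import the same function, a change to the transformation cannot reach training without also reaching serving.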

Model training infrastructure

This component provides the compute and execution environment for training models. It supports repeatable training runs, controlled configurations, and resource management, allowing teams to train models reliably as data and parameters change.

Model registry

The model registry acts as a central system of record for trained models. It stores model artifacts along with metadata such as versions, performance metrics, and approval status. This component helps teams track which models are ready for deployment and which should remain experimental.
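To make the registry idea concrete, here is a small in-memory sketch. `ModelRegistry`, the stage names, and the example URI are all hypothetical; real registries persist this information and add access control, but the core record of version, metrics, and stage looks similar.

```python
from dataclasses import dataclass


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str
    metrics: dict
    stage: str = "experimental"


class ModelRegistry:
    """In-memory system of record for model versions (illustrative only)."""

    def __init__(self):
        self._versions = {}

    def register(self, name, artifact_uri, metrics):
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, artifact_uri, dict(metrics))
        versions.append(entry)
        return entry

    def promote(self, name, version):
        versions = self._versions[name]
        if not 1 <= version <= len(versions):
            raise ValueError(f"unknown version {version} for {name}")
        # Only one version per model is in production at a time.
        for entry in versions:
            if entry.stage == "production":
                entry.stage = "archived"
        versions[version - 1].stage = "production"
        return versions[version - 1]

    def production(self, name):
        candidates = self._versions.get(name, [])
        return next((e for e in candidates if e.stage == "production"), None)
```

With this structure, "which model is running?" becomes a lookup instead of an investigation.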

Deployment and serving infrastructure

Once approved, models must be made available to applications and services. This component manages how models are deployed, scaled, and served, ensuring predictable behavior under real workloads without exposing internal complexity to consuming systems.

Monitoring and observability component

After deployment, models and pipelines must be observed continuously. This component collects signals related to prediction behavior, system performance, and failures. It provides visibility into how models behave in production without assuming why changes occur.

Metadata and logging stores

Operational transparency depends on detailed records. Metadata and logs capture information about data versions, training runs, deployments, and runtime behavior. These records support debugging, audits, and long-term system analysis.
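One lightweight way to capture such records is a structured log line per prediction that always includes the model name and version. The helper `prediction_log` and its field names are assumptions for illustration; the important property is that every runtime event can be traced back to a specific model version.

```python
import json
from datetime import datetime, timezone


def prediction_log(model_name, model_version, features, prediction,
                   latency_ms, now=None):
    """Build one JSON log line describing a single prediction."""
    record = {
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
        "model_name": model_name,
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
        "latency_ms": latency_ms,
    }
    # sort_keys keeps the field order stable, which simplifies diffing and parsing.
    return json.dumps(record, sort_keys=True)
```

Lines in this shape can be shipped to whatever log store the team already uses and later joined with training-run metadata during debugging or audits.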

Stages of a Machine Learning Operations Lifecycle

Once pipeline components are in place, machine learning systems move through a recurring lifecycle. Each stage represents a distinct phase of operational responsibility and long-term model management. Read in order, the stages serve as a conceptual MLOps tutorial.

Data preparation and readiness

This stage focuses on preparing data so it can safely support training and inference.

- Collecting data from approved source systems

- Validating structure, formats, and basic quality

- Applying consistent preprocessing rules

- Confirming data suitability for the intended use case

Model development and training

At this stage, models are created using prepared data and defined configurations.

- Training models using controlled environments

- Tracking parameters, datasets, and configurations

- Evaluating models against agreed performance criteria

- Selecting candidates for production consideration

Model validation and approval

Before deployment, models must be reviewed and approved.

- Comparing performance against baselines

- Checking operational constraints such as latency or resource usage

- Reviewing traceability and documentation

- Approving or rejecting models for production release
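The checks in this stage can be expressed as an automated gate that returns a decision along with its reasons. This is a sketch under stated assumptions: the metric names (`accuracy`, `p95_latency_ms`), the `training_run_id` field, and the default thresholds are hypothetical and would be replaced by whatever criteria a team has agreed on.

```python
def approval_gate(candidate, baseline, max_latency_ms=100.0, min_gain=0.0):
    """Decide whether a candidate model may be released, and explain why not."""
    reasons = []
    # Performance must at least match the current baseline.
    if candidate["accuracy"] < baseline["accuracy"] + min_gain:
        reasons.append("accuracy below baseline")
    # Operational constraint: latency must stay within budget.
    if candidate["p95_latency_ms"] > max_latency_ms:
        reasons.append("latency over budget")
    # Traceability: the model must point back to its training run.
    if not candidate.get("training_run_id"):
        reasons.append("missing training run reference")
    return {"approved": not reasons, "reasons": reasons}
```

Returning the reasons alongside the decision gives reviewers a record they can attach to the approval or rejection.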

Deployment to production

Approved models are introduced into live environments.

- Packaging models for serving systems

- Releasing models through controlled deployment processes

- Managing traffic exposure and access

- Preparing rollback paths in case of failure
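Controlled traffic exposure and rollback can be illustrated with a small canary router that sends a fixed percentage of requests to the new model. The class `CanaryRouter` and the 5 percent default are hypothetical; in practice this logic usually lives in a load balancer, service mesh, or serving platform rather than application code.

```python
import hashlib


class CanaryRouter:
    """Route a stable share of requests to a candidate model (illustrative only)."""

    def __init__(self, stable, candidate, candidate_percent=5):
        self.stable = stable
        self.candidate = candidate
        self.candidate_percent = candidate_percent

    def route(self, request_id):
        # Hashing the request ID keeps routing deterministic per request.
        digest = hashlib.sha256(request_id.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % 100
        return self.candidate if bucket < self.candidate_percent else self.stable

    def rollback(self):
        # Sending 0% of traffic to the candidate restores the stable model.
        self.candidate_percent = 0
```

Raising `candidate_percent` in small steps while watching monitoring signals limits how many users a faulty model can affect, and `rollback()` provides the escape path.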

Monitoring and observation

Once deployed, models are continuously observed in real conditions.

- Tracking prediction behavior over time

- Monitoring system performance and stability

- Detecting abnormal patterns or failures

- Collecting signals for future decisions

Retraining and iteration

As data and conditions change, models require updates.

- Triggering retraining based on observed signals

- Updating datasets and feature logic as needed

- Repeating validation and approval steps

- Releasing updated models into production
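A retraining trigger can be as simple as a rule that inspects a few monitored signals. In the sketch below, the signal names (`drift_score`, `live_accuracy`, `model_age_days`) and every threshold are assumptions for illustration; each team would choose signals and limits that reflect its own risk tolerance.

```python
def should_retrain(signals, max_drift=0.2, min_accuracy=0.85, max_age_days=30):
    """Return the reasons retraining is warranted; an empty list means none."""
    reasons = []
    if signals["drift_score"] > max_drift:
        reasons.append("input drift above threshold")
    if signals["live_accuracy"] < min_accuracy:
        reasons.append("live accuracy below threshold")
    if signals["model_age_days"] > max_age_days:
        reasons.append("model older than allowed age")
    return reasons


# Hypothetical readings collected by monitoring.
reasons = should_retrain(
    {"drift_score": 0.31, "live_accuracy": 0.88, "model_age_days": 12}
)
```

A non-empty result would start the retraining pipeline, after which the updated model goes through the same validation and approval steps as any other candidate.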

Retirement and replacement

Models do not remain useful indefinitely.

- Identifying models that no longer meet requirements

- Phasing out outdated or unsupported versions

- Replacing models with improved alternatives

- Preserving records for audit and reference

Together, these stages describe how machine learning systems evolve over time, from initial preparation through active operation and eventual replacement. At Webisoft we make machine learning easy without putting extra burden on your operations.

Benefits of Machine Learning in Operations

Machine learning in operations focuses on keeping models reliable after deployment. The benefits below reflect outcomes that appear only when machine learning systems are managed as production assets.

- Stable model behavior over time: Models continue to perform within expected bounds even as data and operating conditions change.

- Faster transition from experimentation to production: Teams reduce delays between model development and live deployment through repeatable operational processes.

- Lower risk during releases and updates: Controlled deployment and rollback paths limit disruption when new models or updates are introduced.

- Improved visibility into production models: Teams gain ongoing insight into how models behave in real environments, rather than relying on offline metrics.

- Reduced operational firefighting: Clear processes and ownership prevent recurring incidents caused by undocumented changes or unclear responsibility.

- Consistent handling of multiple models: Organizations can manage many models simultaneously without increasing complexity or operational risk.

- Stronger accountability and traceability: Model changes, approvals, and deployments are recorded, supporting reviews and audits.

If these benefits align with your operational goals, Webisoft’s AI automation services help integrate machine learning into reliable, scalable workflows. Applying automation at the operations level is often key to sustaining ML performance in real production environments.

Challenges and Limitations of MLOps

Implementing machine learning in operations improves control over machine learning systems, but it also introduces practical challenges that teams must manage over time. These challenges are common in production environments and should be understood early.

High initial setup effort

Establishing MLOps workflows requires upfront investment in processes, infrastructure, and coordination. Teams often need time before the benefits become visible, which can create pressure in early stages.

Growing operational complexity

As more models, data sources, and environments are added, systems become harder to manage. Without strong structure, operational overhead increases and slows down teams.

Skill and knowledge gaps

MLOps sits at the intersection of data science, engineering, and operations. Many teams struggle to find or develop skills that cover all three areas effectively.

Continuous maintenance requirements

Once models are deployed, they require ongoing monitoring, updates, and reviews. This long-term effort is often underestimated during planning.

Cost control challenges

Compute, storage, and monitoring resources can grow quickly. Without careful management, operational costs may rise faster than expected.

Integration with existing systems

Many organizations rely on legacy platforms that were not designed for machine learning workflows. Integrating MLOps into these environments can be slow and complex.

Governance and process friction

Approval requirements, audits, and documentation can introduce delays if processes are unclear or overly rigid. Balancing control with agility remains a challenge.

Machine Learning Operations (MLOps) vs DevOps

Machine learning systems adopt many DevOps practices such as automation and continuous delivery, but they also introduce new artifacts and lifecycle needs. Understanding the differences between MLOps and DevOps helps teams choose the right practices for software versus machine learning systems.

| Aspect | MLOps | DevOps |
| --- | --- | --- |
| Primary focus | Managing the full lifecycle of machine learning models alongside data and code | Streamlining software development and deployment workflows |
| Core artifacts | Models, data sets, and associated metadata | Application source code and binaries |
| Lifecycle complexity | Includes data preprocessing, model training, validation, deployment, monitoring, and retraining | Focuses on build, test, deploy, and operate phases of software |
| Team composition | Data scientists, ML engineers, DevOps engineers collaborating | Software developers and operations/IT specialists |
| Versioning requirements | Requires versioning not only of code but also of data and models | Typically version controls only code and configuration |
| Monitoring focus | Tracks model performance and data drift in addition to system health | Emphasizes uptime, error rates, and infrastructure metrics |
| Deployment challenges | Must handle retraining triggers, model validation, and data shifts | Manages application releases with predictable behavior |

How Webisoft Supports Machine Learning Operations

Moving a machine learning model into production is where most initiatives stall. Webisoft supports machine learning in operations by building practical MLOps foundations that turn experiments into dependable systems teams can deploy, monitor, and evolve with confidence.

Assessing your current ML setup

Webisoft starts by understanding where you are today. This includes your data pipelines, deployment process, team structure, and operational risks. The goal is to identify what will actually block your models in production before changes are made.

Designing MLOps pipelines that match your workflows

Instead of generic pipelines, Webisoft designs MLOps workflows around your use cases. Training, validation, deployment, and updates are automated in a way that supports repeatable releases without slowing teams down.

Bringing control to model versions and releases

As models change, it becomes harder to track what is running and why. At Webisoft, we help you put clear versioning, approvals, and promotion steps in place so every production model is intentional and traceable.

Deploying models into real production systems

Webisoft supports integrating models into your existing applications and services. The focus stays on stable behavior under real traffic, not isolated experiments or demos.

Giving you visibility after deployment

Once models are live, we help you monitor how they behave over time. This visibility makes it easier to spot issues early and decide when updates or retraining are needed.

Supporting long-term operation and improvement

Machine learning systems change as your data and business change. Webisoft stays involved to refine pipelines, strengthen reliability, and adapt and scale your MLOps setup as your needs grow.

Get in touch with the Webisoft team to discuss next steps and align machine learning in operations with your production goals.

Conclusion

Machine learning in operations is the moment where predictions stop being impressive and start being accountable. Once models face real users, real data, and real consequences, operations decide whether they quietly earn trust or slowly create confusion.

This is where Webisoft adds value. By applying practical MLOps discipline, Webisoft helps teams keep models visible, stable, and adaptable, so machine learning continues working long after the initial excitement fades.

Frequently Asked Questions

Is MLOps only needed for large enterprises?

No, MLOps is not limited to large enterprises, because operational issues appear as soon as models are deployed into real workflows. Smaller teams benefit as well: early structure gives them clarity and control, and it reduces manual fixes as data and models change.

Does every ML project require the same level of MLOps?

No, not every machine learning project needs the same operational rigor once it moves toward production use. The required MLOps depth depends on business risk, system scale, data volatility, regulatory exposure, and the cost of failure.

Can MLOps support real-time decision systems?

Yes, MLOps can support real-time decision systems by managing deployment, monitoring, and reliability under strict latency requirements. With proper pipelines and controls, teams can operate both batch and real-time models safely in production environments.