Machine learning in biotechnology: Basics Explained

- February 8, 2026

Biotech is full of brilliant science, but it also comes with a brutal reality: most experiments generate more data than answers. Machine learning in biotechnology helps turn that chaos into signals you can actually use. That matters because biotech decisions are expensive.

A single wrong bet can waste months of lab time, budget, and momentum. Machine learning helps teams spot patterns in genomes, proteins, images, and assay results before the next experiment is even planned. This article shows how ML fits into real biotech workflows and where it creates measurable impact.

You will see the strongest real-world applications of ML in biotechnology, explained through practical examples that connect directly to real research work.

Contents

- 1 What Is Machine Learning in Biotechnology?

- 2 Why Biotech Needs Machine Learning

- 2.1 Biology is too complex for rule-based analysis

- 2.2 Biotech data volume is growing faster than human analysis

- 2.3 Discovery pipelines are expensive and time-sensitive

- 2.4 Hidden signals exist in noisy experimental data

- 2.5 Predictive modeling improves success rates in development

- 2.6 Biomanufacturing requires smarter monitoring and optimization

- 3 Build biotech machine learning systems with Webisoft today.

- 4 Real-World Applications of Machine Learning in Biotechnology

- 4.1 Drug discovery and lead optimization

- 4.2 Protein structure and function prediction

- 4.3 Genomics and variant interpretation

- 4.4 Biomarker discovery and precision medicine

- 4.5 Clinical decision support and outcome prediction

- 4.6 Medical imaging and digital pathology

- 4.7 High-throughput screening and lab automation

- 4.8 Bioprocess optimization in biomanufacturing

- 4.9 Quality control and anomaly detection

- 4.10 Biotechnology innovation and new discovery pathways

- 5 How Machine Learning Actually Works in Biotech (3 Real Pipelines)

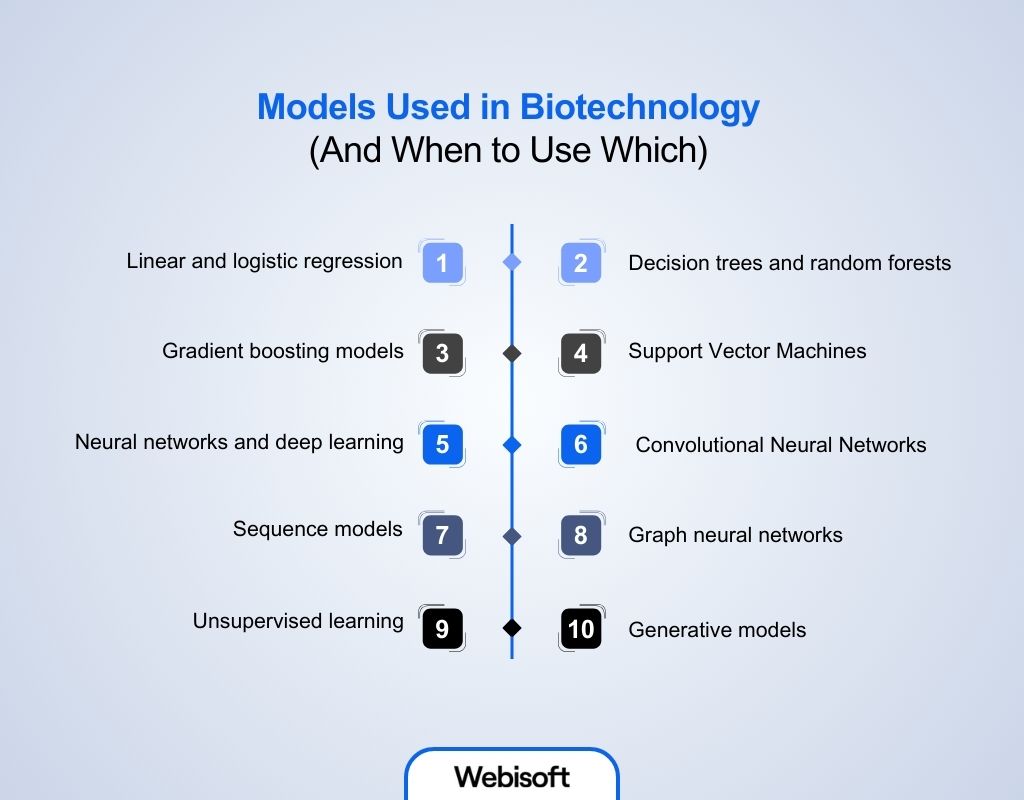

- 6 Models Used in Biotechnology (And When to Use Which)

- 6.1 Linear and logistic regression

- 6.2 Decision trees and random forests

- 6.3 Gradient boosting models (XGBoost, LightGBM, CatBoost)

- 6.4 Support Vector Machines (SVMs)

- 6.5 Neural networks and deep learning

- 6.6 Convolutional Neural Networks (CNNs)

- 6.7 Sequence models (RNNs and Transformers)

- 6.8 Graph neural networks (GNNs)

- 6.9 Unsupervised learning (clustering and dimensionality reduction)

- 6.10 Generative models

- 7 What Makes Biotech Machine Learning Hard

- 8 Machine Learning in Biotechnology in 2026

- 9 Building Biotech Machine Learning Systems With Webisoft

- 10 Build biotech machine learning systems with Webisoft today.

- 11 Conclusion

- 12 Frequently Asked Questions

What Is Machine Learning in Biotechnology?

Machine learning in biotechnology refers to computer algorithms that learn from biological data to recognize patterns, make predictions, and support research decisions. Machine learning itself is a subfield of artificial intelligence in which systems improve at a task as they receive more data, without being explicitly programmed for each scenario.

In biotechnology, these algorithms are applied to large and complex datasets. These datasets include genomes, protein measurements, metabolic profiles, clinical records, and imaging data. The goal is to find relationships and insights that traditional methods may fail to detect.

Machine learning does not replace scientists. Instead, it acts as a computational partner that accelerates analysis, improves accuracy, and uncovers hidden biological signals. This shift supports data-driven discovery and optimization across biotech research and development.

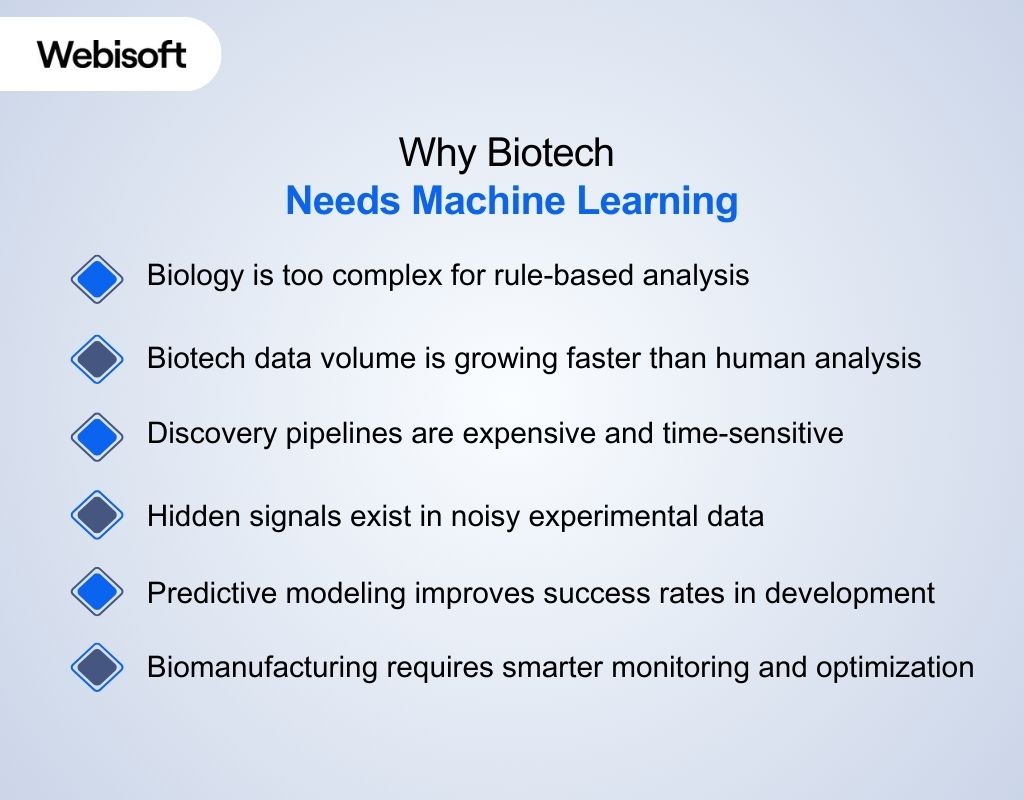

Why Biotech Needs Machine Learning

Biotechnology generates complex datasets that are too large and interconnected for manual analysis alone. This is why the importance of machine learning in biotechnology keeps growing across research and development. It helps biotech teams find patterns, reduce trial-and-error, and move insights into real-world development and production faster.

Biology is too complex for rule-based analysis

Biological systems involve nonlinear relationships across genes, proteins, cells, and environments. Traditional rule-based methods struggle to capture these interactions. Machine learning models learn patterns directly from data, even when relationships are not obvious.

Biotech data volume is growing faster than human analysis

Sequencing, imaging, and high-throughput screening produce massive datasets daily. Manual interpretation becomes slow and inconsistent at scale. Machine learning enables automated analysis that remains reliable as data grows.

Discovery pipelines are expensive and time-sensitive

Drug discovery and biotech R&D require costly experiments and long development cycles. Machine learning helps prioritize promising candidates early. This reduces wasted lab work and speeds up decision-making.

Hidden signals exist in noisy experimental data

Biological datasets often include noise, missing values, and measurement variability. Traditional methods may overlook subtle but meaningful patterns. Machine learning can detect weak signals and correlations that support better hypotheses.

Predictive modeling improves success rates in development

Biotech teams need to forecast outcomes like treatment response, toxicity risk, or protein behavior. Machine learning supports prediction-based development rather than pure experimentation. This increases the chance of success across R&D stages.

Biomanufacturing requires smarter monitoring and optimization

Production environments depend on stable quality, yield, and process control. Machine learning can detect anomalies early and support optimization decisions. This helps reduce batch failures and improve operational consistency.

Build biotech machine learning systems with Webisoft today.

Book a free consultation to plan, build, and deploy faster!

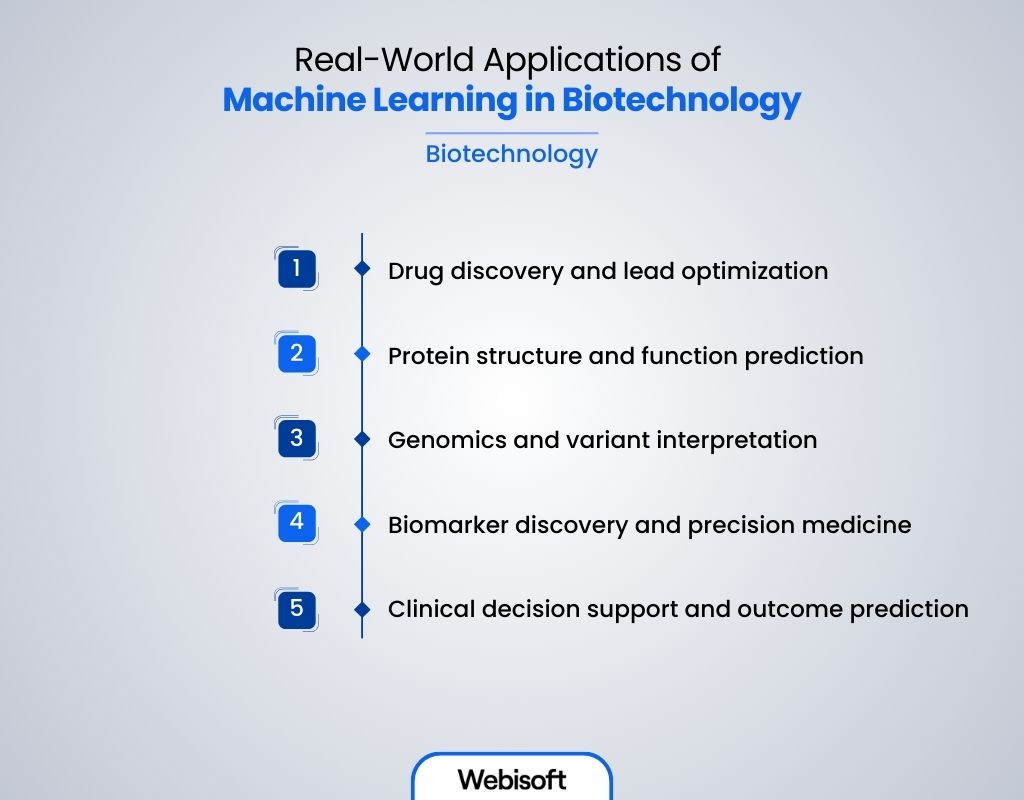

Real-World Applications of Machine Learning in Biotechnology

Machine learning is no longer limited to research papers or experimental prototypes. It is now used across biotechnology to improve discovery speed, reduce cost, and support better decisions. The strongest results come from using biological data to predict outcomes before running expensive experiments.

Drug discovery and lead optimization

AI and machine learning in biotechnology help teams screen large libraries of compounds faster than traditional trial-based testing. Instead of testing every molecule in the lab, models predict which candidates are most likely to succeed. This improves early-stage prioritization and reduces wasted lab cycles. Where it helps most:

- Predicts binding likelihood and activity before lab validation

- Speeds up hit identification using virtual screening

- Helps optimize ADMET properties like toxicity and solubility

- Reduces early-stage cost by cutting unnecessary experiments

Protein structure and function prediction

Proteins control most biological processes, but their structure and behavior are difficult to predict. Machine learning models learn patterns from sequences and structural data to predict folding, stability, and function. This supports faster iteration in therapeutic protein development. Where it helps most:

- Predicts protein folding and structural properties from sequence data

- Identifies functional regions and binding pockets

- Supports antibody and enzyme engineering

- Helps evaluate protein variants linked to disease mechanisms

Genomics and variant interpretation

Genomic sequencing produces massive datasets, but interpretation is the real challenge. Machine learning supports variant classification by predicting which genetic changes are likely to be harmful or clinically meaningful. This improves diagnostic workflows and speeds up genomic research. Where it helps most:

- Classifies variants as benign, uncertain, or pathogenic

- Prioritizes mutations for deeper biological review

- Supports rare disease research and genetic screening

- Reduces manual effort in sequencing interpretation pipelines

Biomarker discovery and precision medicine

Biotech teams use machine learning to identify biomarker patterns linked to diagnosis, progression, or treatment response. These models can detect complex signatures across omics and clinical data. This supports precision medicine and improves trial targeting. Where it helps most:

- Finds biomarker panels across gene, protein, and clinical features

- Supports patient stratification for targeted therapies

- Improves trial design by reducing population noise

- Helps predict responders vs non-responders more reliably

Clinical decision support and outcome prediction

Machine learning can analyze clinical datasets to predict risks, outcomes, and treatment effectiveness. In biotech and pharma, it supports trial planning and safety monitoring. These systems improve consistency and speed in clinical decision-making. Where it helps most:

- Predicts adverse event risk and clinical deterioration

- Improves clinical trial cohort selection and matching

- Supports treatment planning using outcome prediction

- Helps monitor patient risk across longitudinal records

Medical imaging and digital pathology

Imaging is a major source of biotech data, especially in pathology and microscopy. Machine learning models can detect patterns in images that humans may miss or interpret inconsistently. This supports faster diagnostics and better research measurements. Where it helps most:

- Detects tissue abnormalities and tumor regions in pathology slides

- Classifies cell morphology changes from microscopy imaging

- Quantifies biomarker expression and disease indicators

- Improves consistency by reducing human interpretation variability

High-throughput screening and lab automation

High-throughput screening generates results across thousands of experimental conditions. Machine learning helps identify meaningful signals, reduce false positives, and guide what to test next. This improves experiment efficiency and shortens discovery cycles. Where it helps most:

- Prioritizes compounds or conditions based on predicted outcomes

- Detects patterns across assay outputs and screening results

- Reduces false positives through signal-quality modeling

- Supports active learning for smarter next-experiment selection
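
To make the active learning point concrete, here is a minimal sketch of uncertainty sampling, one common active learning strategy. It assumes a model has already produced a hit probability for each untested well; the well names and probabilities are hypothetical.

```python
def select_next_experiments(predictions, batch_size=2):
    """Uncertainty sampling: pick the items whose predicted probability is closest to 0.5."""
    ranked = sorted(predictions.items(), key=lambda item: abs(item[1] - 0.5))
    return [name for name, _ in ranked[:batch_size]]

# Hypothetical model outputs for wells that have not been tested yet.
predicted_hit_probability = {
    "well_A1": 0.97,
    "well_A2": 0.52,
    "well_A3": 0.08,
    "well_A4": 0.41,
    "well_A5": 0.66,
}

next_batch = select_next_experiments(predicted_hit_probability)
print(next_batch)
```

The wells the model is least sure about are tested first, because their results are expected to teach the model the most. Other selection strategies exist; this is only one of them.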

Bioprocess optimization in biomanufacturing

Biomanufacturing depends on stable yields, predictable quality, and process control. Machine learning models use sensor and batch data to predict outcomes and detect drift early. This helps teams act before a batch fails. Where it helps most:

- Predicts yield and quality deviations early in production

- Detects process drift using time-series sensor signals

- Supports optimization of fermentation and cell culture conditions

- Reduces batch failures and improves operational consistency

Quality control and anomaly detection

Biotech production and lab workflows require strict quality standards. Machine learning can detect anomalies by flagging unusual patterns in sensors, assay outputs, or batch parameters. This helps teams catch issues early and improve root-cause analysis. Where it helps most:

- Flags abnormal batch behavior before failure occurs

- Detects unusual assay or sensor patterns in near real time

- Supports traceability and audit-ready monitoring

- Improves consistency across R&D and production workflows
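
As an illustration of anomaly flagging, the sketch below scores batch measurements with a robust z-score based on the median and the median absolute deviation (MAD). This is a simple statistical stand-in rather than a trained model, and the purity values and the 3.5 cutoff are assumptions chosen for the example.

```python
from statistics import median

def robust_z_scores(values):
    """Score each value by its distance from the median, scaled by the MAD."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return [0.0 for _ in values]
    # The 0.6745 factor makes the score comparable to a standard z-score for normal data.
    return [0.6745 * (v - center) / mad for v in values]

def flag_anomalies(values, cutoff=3.5):
    """Return the positions of values whose robust z-score exceeds the cutoff."""
    return [i for i, z in enumerate(robust_z_scores(values)) if abs(z) > cutoff]

# Hypothetical purity measurements (%) from seven consecutive batches.
batch_purity = [98.1, 97.9, 98.3, 98.0, 98.2, 91.5, 98.1]

flagged = flag_anomalies(batch_purity)
print(flagged)
```

Median-based scores are less distorted by the outlier itself than mean and standard deviation, which is why they are a common first check before a more elaborate model is introduced.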

Biotechnology innovation and new discovery pathways

Machine learning is changing how biotech teams approach discovery. Instead of relying only on trial-and-error, researchers use models to guide hypotheses and experiment design. This creates faster learning loops and more scalable innovation. Where it helps most:

- Speeds up hypothesis generation using pattern discovery

- Helps identify novel targets and biological relationships

- Improves experimental design by reducing unnecessary trials

- Enables scalable discovery workflows in synthetic biology and R&D

How Machine Learning Actually Works in Biotech (3 Real Pipelines)

Machine learning in biotechnology is not just about choosing an algorithm. It is a full workflow that starts with biological data and ends with a usable prediction or decision. Below are three real pipelines that show what the process looks like in practical biotech settings.

Pipeline 1: Genomics workflow (variant classification and interpretation)

This pipeline is common in research labs and clinical genomics teams. The goal is to classify genetic variants and estimate whether they are likely to be harmless or clinically relevant. What the workflow looks like:

- Data input: Raw sequencing data or variant call files

- Quality control: Remove low-quality reads, check coverage and contamination

- Feature building: Encode variants by location, gene impact, conservation, and population frequency

- Model training: Train a classifier using labeled variants and known clinical annotations

- Validation: Test on independent datasets and ensure no patient overlap across splits

- Output: Variant risk score or classification label for downstream review

What makes this pipeline work in biotech:

- Reliable ground truth labels from curated databases

- Careful splitting strategy to prevent data leakage

- Strong interpretation layer, so results can be trusted by researchers
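
The "no patient overlap across splits" step can be sketched as a group-aware split. This is a minimal standard-library illustration, not a full validation setup; the record fields, variant names, and the `group_split` helper are hypothetical.

```python
import random
from collections import defaultdict

def group_split(records, group_key, test_fraction=0.25, seed=0):
    """Split records so that no group (for example, a patient) appears in both sets."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[group_key]].append(rec)
    group_ids = sorted(groups)              # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(group_ids)
    n_test = max(1, round(len(group_ids) * test_fraction))
    test_ids = set(group_ids[:n_test])
    train = [r for g in group_ids if g not in test_ids for r in groups[g]]
    test = [r for g in group_ids if g in test_ids for r in groups[g]]
    return train, test

# Hypothetical variant records: several variants can come from one patient.
variants = [
    {"patient": "P1", "variant": "chr1:100A>G", "label": "benign"},
    {"patient": "P1", "variant": "chr2:200C>T", "label": "pathogenic"},
    {"patient": "P2", "variant": "chr1:100A>G", "label": "benign"},
    {"patient": "P3", "variant": "chr7:55G>A", "label": "uncertain"},
    {"patient": "P4", "variant": "chr9:12T>C", "label": "pathogenic"},
    {"patient": "P4", "variant": "chr3:77G>T", "label": "benign"},
]

train_set, test_set = group_split(variants, "patient")
train_patients = {r["patient"] for r in train_set}
test_patients = {r["patient"] for r in test_set}
print("train:", sorted(train_patients), "test:", sorted(test_patients))
```

Splitting by patient rather than by row is what prevents leakage: a random row-level split could put two variants from the same person on both sides and inflate the validation score.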

Pipeline 2: Drug discovery workflow (compound scoring and candidate prioritization)

This pipeline is widely used in early-stage drug discovery. Instead of testing every compound in a wet lab, the model predicts which molecules are most likely to succeed. What the workflow looks like:

- Data input: Compound libraries, assay results, and target information

- Data cleaning: Remove duplicates, normalize assay values, correct experimental noise

- Molecular representation: Convert molecules into fingerprints, descriptors, or graph formats

- Model training: Predict activity, binding probability, or toxicity risk

- Candidate ranking: Select top candidates for wet-lab validation

- Feedback loop: Retrain models using new assay results from validated experiments

What makes this pipeline work in biotech:

- Strong assay design and consistent labeling

- Iterative learning, since discovery data evolves quickly

- Multiple models working together, not just one prediction
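
The representation and ranking steps can be sketched with a similarity-based score. The example below ranks compounds by Tanimoto similarity to known actives, which is a simple baseline and not a trained activity model. Fingerprints are represented as plain sets of on-bit indices, and all compound names and bit values are hypothetical.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between two fingerprints stored as sets of on-bits."""
    if not fp_a and not fp_b:
        return 0.0
    return len(fp_a & fp_b) / len(fp_a | fp_b)

def rank_candidates(library, known_actives, top_k=2):
    """Score each compound by its best similarity to any known active, highest first."""
    scored = [
        (name, max(tanimoto(fp, active) for active in known_actives))
        for name, fp in library.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]

# Hypothetical fingerprints: each set holds the indices of bits that are switched on.
known_actives = [{1, 4, 7, 9}, {2, 4, 7, 8}]
library = {
    "cmpd_A": {1, 4, 7, 9, 12},   # close to the first active
    "cmpd_B": {3, 5, 6},          # shares nothing with the actives
    "cmpd_C": {2, 4, 7},          # subset of the second active
}

shortlist = rank_candidates(library, known_actives)
print(shortlist)
```

In a real project the fingerprints would usually come from a cheminformatics toolkit, and the shortlist would go to wet-lab validation before the results feed back into the next round of training.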

Drug discovery ML succeeds when your data, modeling, and validation strategy are aligned from the start. Webisoft can help you plan and deliver a biotech-ready machine learning strategy that turns predictions into real decisions across your pipeline.

Pipeline 3: Biomanufacturing workflow (yield prediction and process optimization)

This pipeline supports biotech manufacturing, where consistency matters as much as innovation. The goal is to predict yield, detect drift, and prevent batch failures using sensor and production data. What the workflow looks like:

- Data input: Bioreactor sensor readings, batch logs, and lab measurements

- Preprocessing: Handle missing values, align timestamps, remove sensor noise

- Feature engineering: Extract trends, rates of change, and stability indicators

- Model training: Train time-series or regression models to predict yield and quality outcomes

- Monitoring: Run predictions continuously during production

- Action layer: Trigger alerts or recommendations for parameter adjustments

What makes this pipeline work in biotech:

- Continuous monitoring, not one-time analysis

- Clear thresholds for alerts and risk scoring

- Integration with manufacturing workflows and quality systems
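
The feature engineering and alerting steps can be sketched as follows. The example computes a rolling mean and a rate of change over a sensor trace, then flags time points that change faster than a chosen limit. The pH readings, the window size, and the `max_rate` threshold are assumptions for illustration only.

```python
from statistics import mean

def rolling_features(readings, window=3):
    """For each full window, return the rolling mean and the average change per step."""
    features = []
    for end in range(window, len(readings) + 1):
        chunk = readings[end - window:end]
        features.append({
            "index": end - 1,
            "rolling_mean": mean(chunk),
            "rate_of_change": (chunk[-1] - chunk[0]) / (window - 1),
        })
    return features

def drift_alerts(features, max_rate):
    """Flag time points where the signal is changing faster than an agreed limit."""
    return [f["index"] for f in features if abs(f["rate_of_change"]) > max_rate]

# Hypothetical bioreactor pH readings, one per time step, already cleaned and aligned.
ph_readings = [7.00, 7.01, 7.00, 6.99, 6.92, 6.78, 6.65]

features = rolling_features(ph_readings, window=3)
alerts = drift_alerts(features, max_rate=0.05)
print(alerts)
```

The same features could also be passed to a regression or time-series model for yield prediction. A fixed threshold is simply the easiest action layer to reason about and audit.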

Models Used in Biotechnology (And When to Use Which)

Machine learning in biotechnology and life sciences is not about using the most complex model available. It is about matching the model to the biological problem, data structure, and decision risk. Since biotech data is often noisy, sparse, and high-stakes, model choice directly affects reliability.

Linear and logistic regression

These models estimate a direct relationship between input features and an outcome using weighted coefficients. In biotech, they are commonly used as baselines because their behavior is easy to interpret and explain.

They are useful when biological relationships are relatively simple and when transparency matters more than raw accuracy. Use them when:

- You need clear explanations for clinical or research decisions

- Your dataset is small, structured, and well-defined

- You want a benchmark before using more complex models
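
To show why these models are easy to interpret, here is a minimal logistic regression fitted with plain gradient descent. It is a teaching sketch using only the standard library; the two markers and their values are hypothetical, and a real project would normally use an established library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, row):
    """Probability of the positive class for one sample."""
    return sigmoid(sum(w * v for w, v in zip(weights, row)) + bias)

def fit_logistic(X, y, lr=0.5, epochs=2000):
    """Fit logistic regression with batch gradient descent on the log loss."""
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for row, target in zip(X, y):
            error = predict_proba(weights, bias, row) - target
            for j, v in enumerate(row):
                grad_w[j] += error * v
            grad_b += error
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(X)
    return weights, bias

# Hypothetical scaled measurements: [marker_a, marker_b]; label 1 = responder.
X = [[0.1, 0.5], [0.2, 0.4], [0.3, 0.6], [0.4, 0.5],
     [0.6, 0.5], [0.7, 0.4], [0.8, 0.6], [0.9, 0.5]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = fit_logistic(X, y)
print("marker_a weight:", round(weights[0], 2), "marker_b weight:", round(weights[1], 2))
```

Each coefficient maps to one input, so a reviewer can see which marker drives the prediction. In this toy data only marker_a separates the classes, and its weight ends up much larger than the weight on marker_b.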

Decision trees and random forests

Decision trees split data into branches based on feature thresholds, while random forests combine many trees to reduce overfitting.

These models handle nonlinear relationships and feature interactions well. In biotech, they work well with noisy experimental data and mixed biological features where relationships are not strictly linear. Use them when:

- Your data is tabular with interacting biological variables

- You need better accuracy than linear models

- You still want some interpretability
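
The threshold-splitting idea can be sketched as a single split search using Gini impurity. A full decision tree repeats this search recursively, and a random forest trains many such trees on resampled data; neither is implemented here. The feature names and values are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def best_split(rows, labels, feature_names):
    """Search every feature and threshold for the split with the lowest weighted impurity."""
    best = None
    for j, name in enumerate(feature_names):
        values = sorted({row[j] for row in rows})
        # Candidate thresholds sit halfway between neighbouring observed values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [y for row, y in zip(rows, labels) if row[j] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[j] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, name, threshold)
    return best

# Hypothetical assay table: [expression_level, culture_ph]; label 1 = active.
feature_names = ["expression_level", "culture_ph"]
rows = [[2.0, 7.1], [3.0, 6.9], [2.5, 7.3], [8.0, 7.0], [9.0, 7.2], [7.5, 6.8]]
labels = [0, 0, 0, 1, 1, 1]

score, feature, threshold = best_split(rows, labels, feature_names)
print(feature, threshold, score)
```

The chosen rule reads as a plain statement about one feature and one threshold, which is where the partial interpretability of tree-based models comes from.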

Gradient boosting models (XGBoost, LightGBM, CatBoost)

Gradient boosting builds models sequentially, with each new model correcting errors from the previous one. These models are strong performers on structured datasets with many features.

They are widely used in biotech for omics tables and clinical datasets. When sample size is limited but feature count is high, they still need regularization and careful cross-validation to avoid overfitting. Use them when:

- You need high predictive accuracy on tabular data

- Your dataset has many features and fewer samples

- You want strong performance with controlled training cost
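
The "each new model corrects errors from the previous one" idea can be sketched with tiny regression stumps fitted to residuals. This is a bare-bones illustration of boosting for squared error on one feature, not how XGBoost, LightGBM, or CatBoost are implemented. The dose and response values are hypothetical.

```python
def fit_stump(xs, residuals):
    """Find the threshold that best fits the residuals with one constant on each side."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]

def predict(model, x):
    """Start from the base value and add each stump's scaled correction."""
    total = model["base"]
    for threshold, left_mean, right_mean in model["stumps"]:
        total += model["lr"] * (left_mean if x <= threshold else right_mean)
    return total

def fit_boosting(xs, ys, n_rounds=20, lr=0.3):
    """Sequentially fit stumps to whatever error the current ensemble still makes."""
    model = {"base": sum(ys) / len(ys), "lr": lr, "stumps": []}
    for _ in range(n_rounds):
        residuals = [y - predict(model, x) for x, y in zip(xs, ys)]
        model["stumps"].append(fit_stump(xs, residuals))
    return model

def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)

# Hypothetical dose (x) and measured response (y).
doses = [1, 2, 3, 4, 5, 6, 7, 8]
responses = [0.1, 0.2, 0.2, 0.9, 1.0, 1.1, 1.6, 1.7]

baseline = fit_boosting(doses, responses, n_rounds=0)   # predicts the mean only
boosted = fit_boosting(doses, responses, n_rounds=20)
print(round(mse(baseline, doses, responses), 4), round(mse(boosted, doses, responses), 4))
```

The training error falls as rounds are added, which is also why boosted models overfit small datasets if the number of rounds and the learning rate are not validated on held-out data.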

Support Vector Machines (SVMs)

SVMs separate classes by finding the boundary with the widest margin between them, optionally in a higher-dimensional space defined by a kernel. They are effective when the number of features is large compared to the number of samples. In biotechnology, SVMs are often used in genomics and proteomics classification tasks. Use them when:

- You have high-dimensional biological features

- Your dataset is small to medium in size

- The task is classification rather than large-scale regression

Neural networks and deep learning

Neural networks learn layered representations directly from data, allowing them to capture complex patterns without manual feature engineering.

Deep learning is especially useful when raw data carries the signal. In biotech, these models are used for imaging, sequence analysis, and large-scale prediction problems. Use them when:

- Your data is complex and unstructured

- You have enough samples and compute resources

- Manual feature engineering is not reliable

Convolutional Neural Networks (CNNs)

CNNs are a type of deep learning model designed specifically for image data. They detect spatial patterns by learning filters across pixels. They are widely used in biotech for pathology slides, microscopy images, and cell-based screening. Use them when:

- Your input data is biological imaging

- You need detection, classification, or segmentation

- Consistent visual interpretation is required
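
To illustrate what "learning filters across pixels" means, the sketch below applies one hand-written 3x3 filter to a tiny image patch. A trained CNN would learn many such filters from data; here the filter weights and the patch are fixed, hypothetical values.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation, the core operation inside a convolutional layer."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            row.append(sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows) for j in range(k_cols)
            ))
        output.append(row)
    return output

# Hypothetical 5x5 grayscale patch: dark background (0) with a bright region (1) on the right.
patch = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

# Hand-written vertical-edge filter; a trained CNN would learn such weights from data.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(patch, vertical_edge)
print(feature_map)
```

The filter responds strongly where the dark-to-bright boundary falls inside its window and stays at zero over the uniform background, which is the kind of local spatial pattern a CNN layer picks up.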

Sequence models (RNNs and Transformers)

Sequence models treat biological sequences as ordered data, where context and position matter. Transformers are now often preferred because they capture long-range dependencies more effectively than recurrent models. These models are used for DNA, RNA, and protein sequence analysis. Use them when:

- You work with genetic or protein sequences

- Order and context affect biological behavior

- Feature-based methods are insufficient
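
Before a sequence model sees DNA, the sequence has to become an ordered list of tokens. The sketch below shows one common option, overlapping k-mer tokenization with an integer vocabulary. It covers only the input encoding, not the RNN or Transformer itself, and the fragments are hypothetical.

```python
def kmer_tokens(sequence, k=3):
    """Slide a window of length k along the sequence, keeping the original order."""
    sequence = sequence.upper()
    return [sequence[i:i + k] for i in range(len(sequence) - k + 1)]

def build_vocab(token_lists):
    """Assign each distinct k-mer an integer id, in order of first appearance."""
    vocab = {}
    for tokens in token_lists:
        for token in tokens:
            vocab.setdefault(token, len(vocab))
    return vocab

def encode(sequence, vocab, k=3):
    """Turn a sequence into the ordered integer ids a sequence model would take as input."""
    return [vocab[token] for token in kmer_tokens(sequence, k)]

# Hypothetical short DNA fragments that differ at one position.
sequences = ["ATGCGA", "ATGAGA"]

vocab = build_vocab(kmer_tokens(s) for s in sequences)
encoded = [encode(s, vocab) for s in sequences]
print(encoded)
```

Because the tokens keep their order, a single base change alters several neighbouring tokens, and the model can relate that change to its surrounding context.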

Graph neural networks (GNNs)

GNNs model data as graphs, with nodes and edges representing structure and relationships. In biotech, molecules and interaction networks naturally fit this format. They are commonly used in drug discovery and molecular property prediction. Use them when:

- You model small molecules or interaction networks

- Structural relationships are critical

- Fingerprint-based features lose important information
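
The graph view of a molecule can be sketched with one round of message passing, where each atom sums its own features with those of its bonded neighbours. Real GNNs add learned weights and nonlinearities around this step; this version leaves them out on purpose. The feature encoding is an assumption for illustration.

```python
def message_passing_round(node_features, edges):
    """One round of sum aggregation: each node adds its neighbours' features to its own."""
    neighbours = {node: [] for node in node_features}
    for a, b in edges:              # bonds are undirected, so record both directions
        neighbours[a].append(b)
        neighbours[b].append(a)
    updated = {}
    for node, features in node_features.items():
        total = list(features)
        for other in neighbours[node]:
            total = [t + f for t, f in zip(total, node_features[other])]
        updated[node] = total
    return updated

# Heavy atoms of ethanol (C-C-O) as a graph. Features are one-hot: [is_carbon, is_oxygen].
atoms = {"C1": [1, 0], "C2": [1, 0], "O": [0, 1]}
bonds = [("C1", "C2"), ("C2", "O")]

after_one_round = message_passing_round(atoms, bonds)

# Sum over atoms to get one fixed-length vector for the whole molecule.
molecule_embedding = [sum(column) for column in zip(*after_one_round.values())]
print(after_one_round, molecule_embedding)
```

After one round, the two carbon atoms no longer look identical: the carbon bonded to oxygen carries that information in its features. This neighbourhood context is what a flat fingerprint can lose.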

Unsupervised learning (clustering and dimensionality reduction)

Unsupervised models identify patterns without labeled outcomes. They are used to find structure, reduce dimensionality, and support exploratory analysis. In biotech, these methods are common in early research and omics exploration. Use them when:

- You lack reliable labels

- You want subgroup or pattern discovery

- Visualization and exploration are priorities
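
Subgroup discovery without labels can be sketched with k-means clustering. The version below is a minimal standard-library implementation that uses the first k points as starting centroids so the result is reproducible. The two expression values per sample are hypothetical.

```python
def kmeans(points, k, n_iter=10):
    """Plain k-means; the first k points are used as starting centroids."""
    centroids = [list(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(n_iter):
        # Assignment step: attach each point to its nearest centroid.
        for idx, p in enumerate(points):
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            assignments[idx] = distances.index(min(distances))
        # Update step: move each centroid to the mean of its assigned points.
        for c_idx in range(k):
            members = [p for p, a in zip(points, assignments) if a == c_idx]
            if members:
                centroids[c_idx] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignments, centroids

# Hypothetical samples described by two expression values (gene_x, gene_y).
samples = [
    (1.0, 1.2), (5.0, 5.1), (0.8, 1.0),
    (1.1, 0.9), (5.2, 4.9), (4.8, 5.0),
]

cluster_labels, centers = kmeans(samples, k=2)
print(cluster_labels)
```

The cluster ids themselves carry no biological meaning. They are a starting point for follow-up, such as checking whether the groups differ in phenotype or simply reflect a batch effect.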

Generative models

Generative models create new data samples that follow learned biological patterns. In biotech, they are used to design molecules or protein sequences with desired properties. Their value depends heavily on downstream validation. Use them when:

- Your goal is candidate design, not just prediction

- You can validate outputs experimentally

- You want to expand discovery beyond known libraries

What Makes Biotech Machine Learning Hard

Machine learning can create real value in biotech, but biotech projects face constraints that are uncommon in typical ML work. These challenges come from biological variability, lab conditions, and the difficulty of validating results in the real world.

- Limited samples, massive features: You may have thousands of genes or proteins, but only a limited number of patient or experiment samples.

- Expensive, noisy labels: Many labels depend on lab assays, expert review, or long-term outcomes, and these can include noise or uncertainty.

- Batch effects and lab variability: Differences in protocols, instruments, reagents, or sites can create artificial signals that do not represent biology.

- Biological heterogeneity: Two people with the same condition can show different biological signatures, which makes prediction less stable.

- Weak signals, high noise: Measurement error, missing values, and variability can hide meaningful patterns and increase false positives.

- Poor cross-dataset generalization: Performance can drop sharply when the population, workflow, or experimental setup changes.

- High trust and validation requirements: When models affect clinical or production decisions, teams need explainability, traceability, and audit-ready evidence.
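
As one small example of handling these constraints, the sketch below removes a constant per-batch offset by centering each batch on its own mean. This is a deliberately simple correction that assumes the biological groups are balanced across batches; if they are not, centering can remove real signal. The measurements are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def center_by_batch(measurements):
    """Subtract each batch's mean so that batch-level offsets no longer dominate the signal."""
    by_batch = defaultdict(list)
    for m in measurements:
        by_batch[m["batch"]].append(m["value"])
    batch_means = {batch: mean(values) for batch, values in by_batch.items()}
    return [
        {**m, "centered": m["value"] - batch_means[m["batch"]]}
        for m in measurements
    ]

# Hypothetical readings: batch B was run on an instrument that reads about 10 units higher.
measurements = [
    {"sample": "s1", "batch": "A", "value": 10.0},
    {"sample": "s2", "batch": "A", "value": 12.0},
    {"sample": "s3", "batch": "B", "value": 20.0},
    {"sample": "s4", "batch": "B", "value": 22.0},
]

corrected = center_by_batch(measurements)
print([row["centered"] for row in corrected])
```

Before centering, a model could separate the samples by instrument alone. After centering, only the within-batch differences remain.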

Machine Learning in Biotechnology in 2026

In 2026, machine learning is no longer treated as a “future trend” in biotech. It is now part of real research workflows, especially in discovery, diagnostics, and lab operations. The biggest shift is that ML is moving from analysis support into decision support.

ML is becoming a standard layer in biotech workflows

Many biotech teams now treat machine learning as a built-in step, not an optional add-on. In fact, AI-powered literature review tools are used by 76% of biotech and biopharma organizations. This adoption supports earlier experiment planning, reduces trial-and-error, and speeds up iteration.

Multi-modal biotech modeling is becoming more common

Instead of training models on one dataset type, teams are combining multiple sources. This includes genomics, proteomics, imaging, and clinical data. The goal is stronger biological understanding and better predictions from a fuller view of the system.

AI agents and automation are entering the lab environment

Some biotech companies are starting to use AI-driven automation to support lab planning and execution. The focus is on faster experiment cycles and better documentation. This is pushing biotech toward more repeatable and scalable research workflows.

Reproducibility and traceability are becoming non-negotiable

As ML influences high-impact decisions, teams are being forced to prove results. Models need clear version control, training history, and audit-ready outputs. This is also driving adoption of stronger ML governance practices.
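
One way to picture an audit-ready output is a record that ties each prediction to a hash of the exact parameters and training data behind it. The sketch below is only an illustration of the idea; the field names, model name, and values are hypothetical and do not reflect any specific governance standard.

```python
import hashlib
import json

def fingerprint(obj):
    """Stable SHA-256 hash of any JSON-serialisable object (key order does not matter)."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def audit_record(model_name, version, params, training_rows, prediction):
    """Bundle a prediction with hashes that identify how it was produced."""
    return {
        "model": model_name,
        "version": version,
        "params_hash": fingerprint(params),
        "data_hash": fingerprint(training_rows),
        "prediction": prediction,
    }

# Hypothetical model settings and training rows.
params = {"max_depth": 3, "learning_rate": 0.1}
training_rows = [{"batch": "B001", "yield": 0.82}, {"batch": "B002", "yield": 0.79}]

record = audit_record("yield_model", "1.2.0", params, training_rows, prediction=0.81)
print(json.dumps(record, indent=2))
```

If anyone later changes a parameter or a training row, the hashes change too, so a reviewer can tell whether two predictions came from the same model and data.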

ML adoption is shifting toward practical value, not hype

In 2026, biotech teams care less about model complexity and more about measurable outcomes. They want models that reduce lab cost, improve success rates, and support real production decisions. This is why practical deployment matters more than experimental performance.

Building Biotech Machine Learning Systems With Webisoft

You have seen what biotech teams are doing with ML in 2026. Now the question is execution. At Webisoft, we build biotech-ready ML systems that hold up in real workflows, with production deployment, monitoring, and clear documentation built into delivery.

- Production-first architecture, not lab-only prototypes: We design model serving, failover, caching, and rollout controls from day one. This prevents “works locally” models from breaking under real traffic.

- Data strategy that fits biotech reality: We help you turn scattered data sources into training-ready datasets. Our team handles cleaning, transformation, and feature work with validation checkpoints.

- Domain-fit models that match your constraints: We build custom approaches when generic templates fail on edge cases. That includes neural networks, ensembles, and hybrid methods tuned to your domain needs.

- Monitoring, drift detection, and safe retraining: Our systems track feature shifts, set retrain schedules, and support rollback plans. This keeps performance stable as data changes over time.

- Integration into existing clinical and research systems: We connect ML outputs to the tools your team already uses, instead of forcing rebuilds. That keeps adoption practical and reduces disruption.

- Structured delivery with clear phases and checkpoints: We run ML delivery through a defined process that keeps scope controlled and progress visible. It reduces costly pivots and keeps work tied to outcomes.

Execution is where most biotech ML projects succeed or fail, and our role is to make sure yours delivers in production. Reach out to Webisoft to share your goals and get a clear delivery plan built around your data, workflows, and timelines.

Conclusion

Machine learning in biotechnology is no longer about running models for the sake of it. The real win is simpler: fewer wasted experiments, faster decisions, and better direction when the data gets messy. When ML is used correctly, it becomes a practical research advantage, not a side project.

That said, results do not come from algorithms alone. They come from building the full system around them. At Webisoft, we help biotech teams turn ML into something usable in the real world, from clean data pipelines to deployment-ready delivery.

Frequently Asked Questions

Can AI replace biotechnology?

No. AI cannot replace biotechnology because biotech depends on real biological experiments, lab validation, and human scientific judgment. AI supports biotech by improving analysis, prediction, and decision-making. It accelerates discovery, but wet-lab testing remains essential for proof and safety.

Does machine learning require large datasets in biotech?

Machine learning performs best with large datasets, but biotech often has limited samples. Techniques like transfer learning, data augmentation, and weak supervision help models learn from smaller biological datasets. Strong preprocessing and validation can also improve performance with fewer samples.

Can ML replace experimental validation in biotech?

No, machine learning cannot replace experimental validation in biotech. ML can predict outcomes and prioritize the best candidates, but wet-lab experiments are still required to confirm accuracy, safety, and biological effectiveness. Validation is essential for real-world biotech decisions.