How to Label Data for Machine Learning the Right Way

- January 10, 2026

Machine learning models do not learn from intuition. They learn from examples, and those examples only work when someone labels them correctly. Poor labels quietly shape flawed behavior long before any algorithm shows results.

When you learn how to label data for machine learning, you are defining what your model considers correct, incorrect, important, or irrelevant. These decisions matter most in real-world conditions, not controlled demos. This guide breaks the process into clear steps, practical rules, and quality checks. It helps your training data guide models correctly before they reach users at scale.

Contents

- 1 What Data Labeling Means in Machine Learning

- 2 When Data Labeling is Required and When It is Not

- 3 Build reliable machine learning datasets with Webisoft today!

- 4 Labeling Methods Used in Machine Learning Projects

- 5 How to Label Data for Machine Learning in Easy Steps

- 5.1 Step 1: Lock the learning objective and the label target

- 5.2 Step 2: Specify the annotation format the model will train on

- 5.3 Step 3: Build a label taxonomy with strict boundaries

- 5.4 Step 4: Write annotation guidelines that teach decisions

- 5.5 Step 5: Collect data and check relevance before labeling

- 5.6 Step 6: Clean, normalize, and assign stable IDs

- 5.7 Step 7: Choose the right workforce and review model

- 5.8 Step 8: Run a pilot and rewrite the rules, not the data

- 5.9 Step 9: Apply quality assurance and consensus checks

- 5.10 Step 10: Label in batches with controlled operations

- 5.11 Step 11: Add assisted labeling after humans stabilize

- 5.12 Step 12: Freeze splits and version the dataset for training

- 6 Quality Signals That Tell You Your Labeling Is Working

- 7 Common Mistakes to Avoid When Labeling Data

- 8 Webisoft’s Approach to Data Labeling for Machine Learning Projects

- 8.1 Data labeling built for real production use

- 8.2 Annotation methods that match your model goals

- 8.3 Quality control that reduces rework and delays

- 8.4 Secure handling of sensitive datasets

- 8.5 Domain-aware labeling that reflects real-world conditions

- 8.6 Flexible engagement that adapts as your models evolve

- 8.7 Support beyond labeling when you need it

- 9 Build reliable machine learning datasets with Webisoft today!

- 10 Conclusion

- 11 Frequently Asked Questions

What Data Labeling Means in Machine Learning

Data labeling in machine learning is the process of assigning descriptive tags to raw data so a model can learn the relationship between input and output. These labels define what the data represents in the context of a prediction task. In supervised learning, labeled data is the reference used during training. The model compares its predictions against these labels and adjusts itself to reduce errors.

These labels are commonly referred to as ground truth. They represent the expected outcome the model should learn to reproduce when it encounters similar data. The form a label takes depends on the data type and the problem being solved. This distinction matters when deciding how to label data for AI, since different machine learning tasks require different annotation structures.

Common types of data labeling

Data can be labeled in different ways depending on its format and the prediction goal. Each type determines what information the model is trained to recognize.

- Classification: Assigning a single category to an entire item such as an image, text, audio clip, or video.

- Object detection: Identifying and labeling object locations using bounding boxes along with class labels.

- Segmentation: Labeling data at pixel or element level, including semantic and instance segmentation.

- Keypoints and pose labeling: Marking landmarks like joints, facial points, or body positions.

- Text and NLP labeling: Tagging entities, intent, sentiment, topics, or token-level structure in text.

- OCR and document labeling: Labeling text regions, fields, tables, and layout structure in documents.

- Audio transcription and sound labeling: Transcribing speech, identifying speakers, or tagging sound events.

- Video and temporal event labeling: Annotating actions or events with start and end times across frames.

When Data Labeling is Required and When It is Not

Data labeling is required when a machine learning model must learn a direct relationship between input data and a known, correct output. This is the foundation of supervised learning, where the model improves by comparing its predictions against labeled examples.

Labeling is also necessary when you need to measure model performance reliably. Even systems trained with minimal labels still require labeled data for validation and testing.

When data labeling is required

You should plan to label data in the following situations:

- Training supervised learning models: Any supervised model needs labeled examples to learn patterns, relationships, and decision boundaries from historical data.

- Natural language processing tasks: Text classification, named entity recognition, intent detection, sentiment analysis, and document parsing require labeled text to teach models linguistic structure and meaning.

- Speech and audio recognition systems: Speech-to-text, speaker identification, call analysis, and sound event detection depend on labeled audio segments and transcripts.

- Computer vision and object recognition: Image classification, object detection, segmentation, and pose estimation require labeled visual data to identify objects, locations, and spatial relationships.

- Fraud detection and anomaly detection with labels: When past fraud cases or confirmed anomalies exist, labeled examples are needed to train models to distinguish normal behavior from risky patterns.

- Evaluating model accuracy and reliability: Labeled validation and test datasets are required to measure performance, track regressions, and compare model versions.

- High-risk or regulated use cases: Domains such as finance, healthcare, and security require human-reviewed labels to support audits, traceability, and accountability.

When full data labeling is not required

In some cases, you can reduce or avoid labeling most of the dataset:

- Unsupervised learning: Models discover patterns in unlabeled data, supporting clustering, grouping, and anomaly detection without predefined outputs.

- Semi-supervised learning: A smaller labeled dataset is combined with a larger unlabeled set.

- Self-supervised learning: Training signals are generated from the data itself rather than human-assigned labels.

- Reinforcement learning: Models learn through interaction and feedback from rewards or penalties rather than static labeled examples, making explicit data labeling unnecessary.

- Active learning setups: Only selected, high-value samples are labeled instead of the entire dataset.
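To make the active learning item concrete, here is a minimal sketch of one common selection rule: uncertainty sampling by entropy. The function names and the example probabilities are illustrative assumptions; in practice the class probabilities would come from your current model.

```python
import math


def entropy(probs):
    """Shannon entropy of a class-probability list; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, k):
    """Return the IDs of the k items the model is least certain about.

    `predictions` maps an item ID to the model's class probabilities.
    """
    ranked = sorted(predictions, key=lambda item_id: entropy(predictions[item_id]), reverse=True)
    return ranked[:k]


# Hypothetical model outputs for three unlabeled items.
predictions = {
    "a": [0.98, 0.02],  # confident, low labeling value
    "b": [0.55, 0.45],  # nearly a coin flip, high labeling value
    "c": [0.70, 0.30],
}
print(select_for_labeling(predictions, k=2))  # ['b', 'c']
```

Only the selected items go to human labelers; the rest stay unlabeled until a later round.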

Key takeaway

If your project relies on supervised learning or requires trustworthy performance evaluation, data labeling is essential. If the goal is pattern discovery or label efficiency, limited or selective labeling may be enough.

Build reliable machine learning datasets with Webisoft today!

Book a free consultation to plan accurate, scalable data labeling.

Labeling Methods Used in Machine Learning Projects

Data labeling is not performed the same way in every project. The method used depends on data volume, task complexity, risk tolerance, and model maturity. Choosing the wrong labeling method often creates quality issues that appear only after training. Below are the most common data labeling techniques used in real-world machine learning systems.

Manual labeling

Manual labeling relies entirely on human annotators to apply labels based on defined guidelines. This method typically offers the most control and, with well-trained annotators, the highest accuracy, especially for complex tasks such as medical data, legal text, or nuanced language understanding. The trade-off is higher cost and slower throughput.

Automated labeling

Automated labeling uses rules, heuristics, or existing models to assign labels without human input. It is useful for large datasets with simple patterns or well-defined rules. Automation alone can introduce systematic errors if not carefully validated.

Human-in-the-loop labeling

Human-in-the-loop labeling combines automation with human review. Models generate initial labels, and humans validate, correct, or reject them. This method balances speed and accuracy and is widely used once labeling rules and quality standards are stable.

Synthetic labeling

Synthetic labeling generates labeled data through simulations, programmatic rules, or synthetic data creation. It is useful when real data is scarce or expensive to label. Synthetic labels must be validated carefully to ensure they reflect real-world conditions.

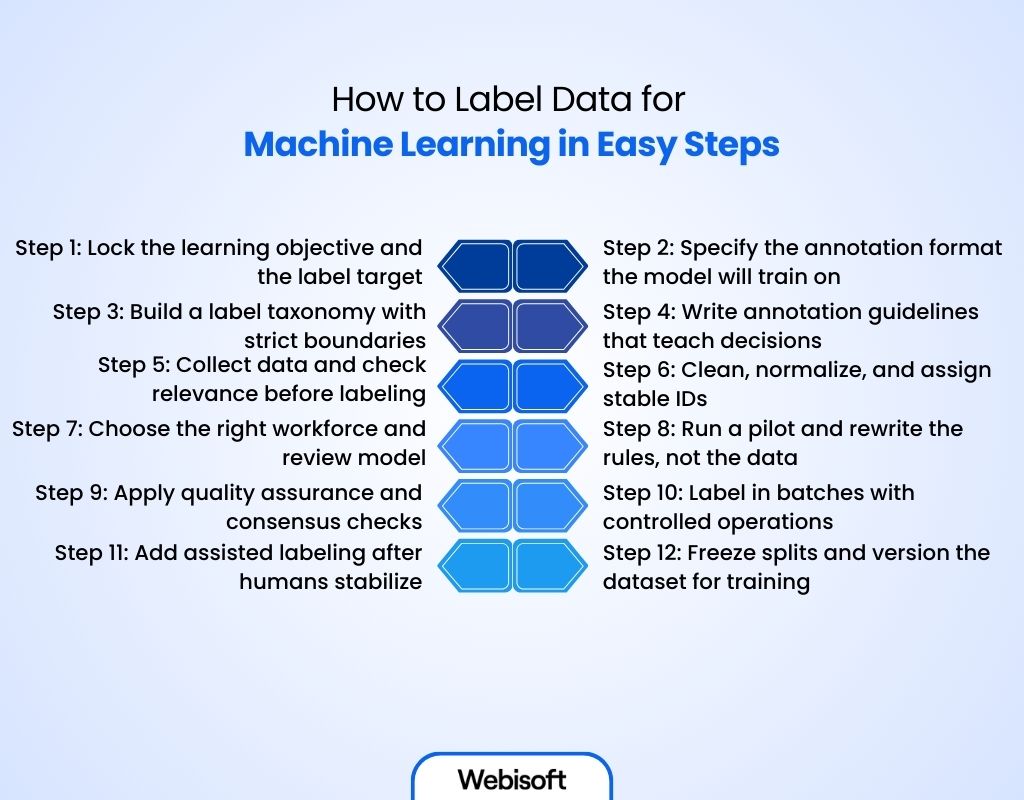

How to Label Data for Machine Learning in Easy Steps

Labeling data for machine learning becomes manageable when the process is broken into clear, repeatable steps. This section explains how to label data for machine learning using a practical, production-ready workflow.

Step 1: Lock the learning objective and the label target

This step exists to eliminate ambiguity before labeling begins. If the learning objective is unclear, labels will drift as different people interpret the task differently. Start by expressing the model’s goal as a single, concrete output.

- Define the expected output type, such as a class, text span, bounding box, segmentation mask, or timestamp

- Define the unit of labeling, such as one image, one message, one call, or a range of frames

- Clearly state what qualifies as “in scope” for labeling

- Define what is “out of scope” and should be rejected or flagged

- Write five to ten difficult edge cases that are likely to confuse labelers

These edge cases often reveal hidden assumptions early. Output: A one-page task brief that explains the prediction goal to labelers and reviewers.

Step 2: Specify the annotation format the model will train on

Labels are only useful if they match the format the training pipeline expects. Many teams lose time here by labeling data in formats that later need conversion or rework. This step ensures alignment between labeling and training.

- Choose the label schema, including label names, IDs, and allowed values

- Define coordinate systems and units, such as pixel space, token offsets, or time units

- Decide on export formats such as JSON, CSV, COCO, YOLO, or plain text

- Test the export by running a small sample through the training pipeline

This validation prevents late-stage incompatibilities. Output: A label schema document and a verified sample export file.
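As an illustration of why coordinate systems and export formats need to be pinned down, the sketch below converts a bounding box from the COCO convention (top-left corner plus width and height, in pixels) to the YOLO convention (box center plus width and height, normalized to the image size). The function name is a hypothetical helper, and the validation rules are assumptions you may want to loosen or tighten.

```python
def coco_to_yolo(bbox, img_w, img_h):
    """Convert [x_min, y_min, width, height] in pixels to
    (x_center, y_center, width, height) normalized to the 0..1 range."""
    x_min, y_min, w, h = bbox
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image dimensions must be positive")
    if w <= 0 or h <= 0:
        raise ValueError("box width and height must be positive")
    if x_min < 0 or y_min < 0 or x_min + w > img_w or y_min + h > img_h:
        raise ValueError("box falls outside the image")
    return (
        (x_min + w / 2) / img_w,
        (y_min + h / 2) / img_h,
        w / img_w,
        h / img_h,
    )


print(coco_to_yolo([100, 50, 200, 100], img_w=400, img_h=200))
# (0.5, 0.5, 0.5, 0.5)
```

Running a check like this on a small sample export is one way to catch a unit or origin mismatch before thousands of boxes have been drawn.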

Step 3: Build a label taxonomy with strict boundaries

The taxonomy defines the complete set of labels and the rules that separate them. Its purpose is to remove overlap and subjective interpretation. Each label should be precise and enforceable.

- Write one clear definition for each label

- Add inclusion rules describing what must be present

- Add exclusion rules describing what must not be present

- Define tie-break rules for cases where two labels seem valid

- Allow fallback labels like “other” only under documented conditions

Without strict boundaries, disagreement increases as labeling scales. Output: A label dictionary that reviewers can consistently enforce.
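One way to make a label dictionary enforceable is to store it as data and validate every submitted label against it. The sketch below assumes a hypothetical support-ticket taxonomy; the label names, rules, and the `check_label` helper are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabelDef:
    definition: str
    include: tuple = ()        # what must be present
    exclude: tuple = ()        # what must not be present
    is_fallback: bool = False  # fallback labels need a documented reason


# Hypothetical taxonomy for a support-ticket classifier.
TAXONOMY = {
    "billing": LabelDef(
        definition="The customer asks about a charge, invoice, or refund.",
        include=("mentions a payment, invoice, or refund",),
        exclude=("payment page fails to load (use 'technical')",),
    ),
    "technical": LabelDef(
        definition="The product does not work as documented.",
        include=("describes an error, crash, or broken feature",),
    ),
    "other": LabelDef(definition="Fits no other label.", is_fallback=True),
}


def check_label(label, reason=""):
    """Reject unknown labels and undocumented use of fallback labels."""
    if label not in TAXONOMY:
        raise ValueError(f"unknown label: {label!r}")
    if TAXONOMY[label].is_fallback and not reason.strip():
        raise ValueError(f"fallback label {label!r} needs a documented reason")
    return label


print(check_label("billing"))
print(check_label("other", reason="message is an unrelated job application"))
```

Tie-break rules are harder to encode and usually stay in the written guidelines, but the exclusion notes above show where a pointer to the competing label can live.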

Step 4: Write annotation guidelines that teach decisions

Guidelines turn abstract rules into repeatable actions. They are especially important when data is incomplete, noisy, or ambiguous. Guidelines must be understandable by new labelers on day one. For each label, include:

- A plain-language definition, supported by concrete examples that show correct decisions in common and edge-case scenarios

- At least three correct examples that reflect real data

- At least three incorrect examples that show common mistakes

- Clear rules for borderline cases

- A confidence rule explaining when labelers must escalate instead of guessing

Guidelines should be versioned and updated intentionally. Output: Versioned annotation guidelines with a visible change log.

Step 5: Collect data and check relevance before labeling

Labeling irrelevant data wastes effort and weakens the model. Coverage that reflects real usage is more valuable than raw volume. Before labeling begins:

- Select data sources such as production logs, sensors, user submissions, or partners

- Check that data represents real environments and use cases

- Remove obvious non-signal items like blank files, duplicates, or corrupted media

- Record source metadata such as device type, locale, time, and consent status

This context often explains later model behavior. Output: A curated raw dataset with documented source context.
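The pre-labeling checks above can be partly automated. The following is a rough sketch for text items, assuming each item is a dictionary with a `content` field and a few metadata fields; the field names are hypothetical, and the duplicate check only catches exact matches.

```python
import hashlib

REQUIRED_METADATA = ("source", "locale", "consent")  # hypothetical field names


def curate(items):
    """Split raw items into (kept, rejected) before any labeling happens."""
    seen_hashes = set()
    kept, rejected = [], []
    for item in items:
        text = item.get("content", "")
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if not text.strip():
            rejected.append((item, "blank"))
        elif digest in seen_hashes:
            rejected.append((item, "duplicate"))
        elif any(item.get(key) in (None, "") for key in REQUIRED_METADATA):
            rejected.append((item, "missing metadata"))
        else:
            seen_hashes.add(digest)
            kept.append(item)
    return kept, rejected


raw = [
    {"content": "refund please", "source": "chat", "locale": "en", "consent": True},
    {"content": "refund please", "source": "email", "locale": "en", "consent": True},
    {"content": "   ", "source": "chat", "locale": "en", "consent": True},
]
kept, rejected = curate(raw)
print(len(kept), [reason for _, reason in rejected])
```

Keeping the rejection reason alongside each dropped item makes it easier to explain later why a source is under-represented.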

Step 6: Clean, normalize, and assign stable IDs

Cleaning is part of the labeling pipeline, not a separate concern. It ensures consistency and traceability. At this stage:

- Normalize formats such as image dimensions, audio sampling rates, or text encoding

- Standardize metadata fields so every record follows the same structure

- Assign stable identifiers that never change across dataset versions

- Remove or redact sensitive fields labelers do not need

- Split data by constraints such as language, domain, or privacy tier

This preparation protects both quality and compliance. Output: A labeling-ready dataset with stable identifiers.
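A stable identifier is easiest to guarantee when it is derived from the record itself rather than from its position in a file. The sketch below is one possible approach for text data, assuming the ID should depend only on the source name and the normalized content; the helper names and the 16-character length are arbitrary choices.

```python
import hashlib
import unicodedata


def normalize_text(text):
    """Apply Unicode NFC normalization and collapse runs of whitespace."""
    return " ".join(unicodedata.normalize("NFC", text).split())


def stable_id(source, text):
    """Derive an ID that stays the same across dataset versions and re-exports."""
    payload = f"{source}\n{normalize_text(text)}".encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:16]


# The same content yields the same ID even if spacing differs between exports.
print(stable_id("support-chat", "My  invoice is wrong"))
print(stable_id("support-chat", "My invoice is wrong"))
```

Row numbers or file offsets would change whenever the dataset is filtered or reordered, which is why they make poor identifiers.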

Step 7: Choose the right workforce and review model

Human performance varies with task complexity and repetition. Labeling roles must be matched to risk and difficulty. Define roles clearly:

- Use domain experts for high-stakes areas like medical, legal, or safety data

- Use trained annotators for repeatable tasks such as bounding boxes or tagging

- Add a reviewer layer to resolve disagreements

- Define clear escalation paths for unclear cases

- Train all labelers using the same starter set to align expectations

Calibration early prevents divergence later. Output: A role assignment plan and a short labeler training guide.

Step 8: Run a pilot and rewrite the rules, not the data

The pilot phase is designed to expose rule gaps, not to produce final labels. During the pilot:

- Label a small, diverse batch from all data sources

- Track disagreements by label and scenario

- Identify unclear definitions or missing labels

- Update taxonomy and guidelines instead of correcting labels manually

- Repeat the pilot until disagreement levels stabilize

This step prevents large-scale relabeling. Output: Validated v1 guidelines and a stable pilot dataset.

Step 9: Apply quality assurance and consensus checks

Quality assurance verifies that labels match definitions, not individual judgment. Consensus checks ensure multiple labelers interpret rules the same way before errors reach training data. At this stage:

- Review a fixed percentage of labeled samples from every batch

- Measure inter-annotator agreement to detect ambiguity or drift

- Resolve disagreements using documented tie-break rules

- Escalate unclear cases to reviewers instead of forcing decisions

- Update guidelines only when disagreement patterns repeat

Consensus should be measured before scaling further. High disagreement signals rule problems, not labeler failure. Output: A QA report with agreement metrics and resolved conflicts.
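Inter-annotator agreement can be measured in several ways. A common choice for two labelers is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain-Python version for illustration; libraries such as scikit-learn provide an equivalent `cohen_kappa_score`. The handling of the degenerate case is a convention chosen here, not a standard.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout; kappa is
        # undefined, so this sketch reports full agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["spam", "spam", "ham", "ham"]
annotator_2 = ["spam", "ham", "ham", "ham"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the labelers agree on 3 of 4 items (0.75), but half of that agreement is expected by chance, so kappa is 0.5. Tracking the value per label and per batch helps separate unclear rules from one-off mistakes.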

Step 10: Label in batches with controlled operations

As labeling scales, consistency becomes harder to maintain. Controlled operations protect dataset integrity.

- Use fixed batch sizes to standardize throughput

- Freeze guideline versions per batch to avoid silent changes

- Maintain a shared log of unresolved edge cases

- Re-label data only when documented rule changes require it

This approach keeps labels coherent across time. Output: Labeled batches tied to specific guideline versions.
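Tying each batch to a guideline version can be as simple as stamping the version onto the batch when it is created. A minimal sketch, assuming item IDs are already assigned and that a version string such as "v1.2" identifies the guidelines; the field names are hypothetical.

```python
def make_batches(item_ids, batch_size, guideline_version):
    """Yield fixed-size batches, each recording the guideline version it was labeled under."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(item_ids), batch_size):
        yield {
            "batch_index": start // batch_size,
            "guideline_version": guideline_version,
            "item_ids": item_ids[start:start + batch_size],
        }


for batch in make_batches(["a", "b", "c", "d", "e"], batch_size=2, guideline_version="v1.2"):
    print(batch)
```

When a rule change does require relabeling, the version stamp shows exactly which batches are affected.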

Step 11: Add assisted labeling after humans stabilize

Automation improves speed only after human decisions are consistent; applied earlier, it amplifies early mistakes instead. Common assisted workflows include:

- Pre-labeling, where models suggest labels and humans review

- Consolidation, where multiple opinions are merged into a final label

- Active selection, where only high-value or uncertain samples are labeled

Humans must remain responsible for final decisions. Output: Faster labeling supported by human-validated results.
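Consolidation is often implemented as a majority vote with an escape hatch for ties. The sketch below assumes each item has been labeled by several people and returns `None` when no label wins a strict majority, so that the item can be escalated to a reviewer. The function name and the strict-majority rule are illustrative choices.

```python
from collections import Counter


def consolidate(votes):
    """Return the label chosen by a strict majority of votes, or None to escalate."""
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    if count * 2 <= len(votes):
        return None  # no strict majority: send to a human reviewer
    return label


print(consolidate(["cat", "cat", "dog"]))  # cat
print(consolidate(["cat", "dog"]))         # None
```

Returning `None` rather than picking arbitrarily keeps the final decision with a person, in line with the rule above.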

Step 12: Freeze splits and version the dataset for training

Finalizing the dataset makes training repeatable and auditable. At this stage:

- Freeze training, validation, and test splits

- Store the label schema alongside the dataset

- Store the guideline version used to generate labels

- Maintain an audit trail showing who changed labels and why

This documentation supports debugging, retraining, and audits. Output: A versioned training dataset ready for model development. At this point, teams have a complete view of how to label data for machine learning from definition through delivery.
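One way to freeze splits so they survive re-exports is to derive the split from each item's stable ID instead of from a random shuffle. The sketch below assumes string IDs and a 80/10/10 split; the percentages and function name are placeholders. Note that the assignment only stays fixed while the percentages stay fixed.

```python
import hashlib


def assign_split(item_id, val_pct=10, test_pct=10):
    """Map an item ID to 'train', 'validation', or 'test' deterministically."""
    if val_pct < 0 or test_pct < 0 or val_pct + test_pct > 100:
        raise ValueError("split percentages must be non-negative and sum to at most 100")
    # Hash the ID into one of 100 buckets; the same ID always lands in the same bucket.
    bucket = int(hashlib.sha256(item_id.encode("utf-8")).hexdigest(), 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


for item_id in ["item-001", "item-002", "item-003"]:
    print(item_id, assign_split(item_id))
```

Because new items never change the bucket of existing ones, adding data later cannot leak a former test example into training.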

And if you are planning to operationalize this labeling workflow, Webisoft helps teams design, execute, and scale data labeling aligned with real machine learning objectives. Explore Webisoft’s machine learning development services to turn labeled data into production-ready models.

Quality Signals That Tell You Your Labeling Is Working

After completing the labeling steps, quality signals help confirm whether the workflow was applied consistently across the dataset. These indicators allow teams to catch issues early, before inconsistencies spread.

- Disagreement rates steadily decline: As guidelines improve, labelers should disagree less on the same label types. Persistent disagreement usually signals unclear definitions or missing boundary rules.

- Strong agreement on a fixed reference set: When labelers consistently match labels on a small, pre-reviewed dataset, it shows that instructions and expectations are well understood.

- Label distributions remain stable across batches: Each label’s proportion should stay reasonably consistent unless the underlying data changes. Large swings often indicate rule drift or inconsistent interpretation.

- Reduced reliance on fallback labels: Usage of labels like “other” or “unknown” should decrease over time. Frequent fallback use suggests gaps in the taxonomy or unclear edge-case handling.

- Review feedback shifts toward minor adjustments: When reviewers mainly make small corrections instead of reversing labels, it indicates growing consistency and shared understanding among labelers.

- Edge-case backlog stops growing: Early growth in edge cases is normal. Over time, that list should shrink as rules are clarified and incorporated into guidelines.

- Model performance improves predictably with new data: As labeled data increases, model results should improve gradually or stabilize. Sudden drops often point to inconsistent labeling rather than modeling issues.
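Some of these signals are easy to compute automatically. As an example, the sketch below compares the label distribution of a new batch against a reference batch and flags labels whose share moved by more than a threshold. The 10-point threshold and the function names are assumptions to adjust for your data.

```python
from collections import Counter


def label_shares(labels):
    """Return each label's share of the batch as a fraction between 0 and 1."""
    counts = Counter(labels)
    return {label: n / len(labels) for label, n in counts.items()}


def drifted_labels(reference, batch, max_shift=0.10):
    """List labels whose share differs from the reference by more than max_shift."""
    ref, cur = label_shares(reference), label_shares(batch)
    return sorted(
        label
        for label in set(ref) | set(cur)
        if abs(cur.get(label, 0.0) - ref.get(label, 0.0)) > max_shift
    )


reference = ["billing"] * 8 + ["other"] * 2
new_batch = ["billing"] * 5 + ["other"] * 5
print(drifted_labels(reference, new_batch))  # ['billing', 'other']
```

A flagged label is a prompt to investigate, not proof of a problem: the underlying data may have changed, or the rules may have drifted.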

Common Mistakes to Avoid When Labeling Data

Once labeling is underway, small mistakes can quietly undermine data quality, even when teams understand how to label data for machine learning in theory. The points below highlight common failures teams encounter during labeling and why avoiding them is important for reliable machine learning outcomes.

- Starting labeling with vague objectives: When the prediction goal is not clearly defined, labelers apply personal judgment. This creates inconsistent labels that no amount of model tuning can fix later.

- Allowing overlapping or poorly bounded labels: Labels without clear boundaries between them cause frequent disagreement. Overlap leads to noisy training data and forces repeated relabeling as rules change.

- Treating guidelines as static documents: Guidelines that are not updated when new edge cases appear quickly become outdated. This results in label drift across batches.

- Skipping pilot phases to save time: Labeling at scale without a pilot almost always leads to large rework. Early disagreements are signals to fix rules, not to push volume.

- Overusing fallback labels like “other”: Heavy reliance on fallback labels hides real structure in the data. It usually indicates missing classes or unclear boundary rules.

- Introducing automation too early: Applying pre-labeling or model assistance before human labels stabilize amplifies early mistakes instead of improving speed.

- Failing to track label and rule changes: When label updates are not documented, datasets lose traceability. This makes debugging model behavior difficult and breaks reproducibility.

Webisoft’s Approach to Data Labeling for Machine Learning Projects

Once a labeling strategy is defined, execution determines whether the data is truly usable for training. Webisoft approaches data labeling as a production discipline, not a one-off task. The focus stays on consistency, domain alignment, and datasets that integrate cleanly into real machine learning pipelines.

Data labeling built for real production use

Webisoft supports labeling across image, text, audio, video, and complex data formats because most machine learning systems evolve beyond a single data type. This means you do not need to change partners or rebuild processes as your datasets grow or your use cases expand. Labels are designed to stay compatible with production pipelines, not just early experiments.

Annotation methods that match your model goals

Instead of applying generic annotation styles, Webisoft aligns labeling methods with what your model needs to learn. If your model requires precise localization, segmentation, structured extraction, or time-based understanding, the labeling format is selected to match those training requirements directly. This reduces post-processing work and improves model learning efficiency.

Quality control that reduces rework and delays

Webisoft builds quality control into the labeling process from the start. Reviews, checks, and guideline enforcement happen during labeling, not after delivery. This helps you avoid large relabeling cycles, reduces noise in training data, and keeps datasets stable across batches and updates.

Secure handling of sensitive datasets

If your data includes sensitive, regulated, or high-risk information, Webisoft applies controlled access and secure handling throughout the annotation process. This allows you to label critical datasets with confidence while maintaining compliance and protecting confidentiality.

Domain-aware labeling that reflects real-world conditions

Labels only make sense when they reflect real operational context. Webisoft brings domain understanding across industries such as healthcare, finance, insurance, and autonomous systems, so annotations reflect how data is actually used, not just how it looks in isolation.

Flexible engagement that adapts as your models evolve

Machine learning projects rarely stay static. Webisoft structures labeling work so it can adapt to changing data volumes, new label classes, and updated objectives. This flexibility allows you to extend or refine datasets without disrupting ongoing development.

Support beyond labeling when you need it

When labeling is part of a larger machine learning initiative, Webisoft can support you beyond data preparation. From development through deployment planning, Webisoft ensures your labeled data keeps delivering value as models are trained, evaluated, refined, and scaled over time. At this stage, the difference comes down to execution and consistency across real datasets. You can contact Webisoft to discuss how these labeling practices fit your data, model objectives, and production timelines.

Conclusion

Knowing how to label data for machine learning is where intention becomes outcome. The care you put into labels decides whether models behave predictably, adapt over time, or quietly fail in real conditions. Strong labeling turns data into understanding, not noise.

Webisoft supports teams at this final mile, where process matters more than theory. By aligning labeling workflows with real model goals and long-term use, Webisoft helps you close the loop between data preparation and dependable machine learning systems.

Frequently Asked Questions

Can I reuse labeled data across different machine learning models?

Yes, labeled data can be reused when the prediction objective, label definitions, and output format stay the same. Reuse usually fails when models require different structures, granularity, or semantics, which forces relabeling to avoid misleading training signals.

How long does a typical labeling project take?

The duration of a labeling project depends on dataset size, data complexity, guideline maturity, review depth, and labeler expertise. Small pilots may take days, while production-scale projects often span weeks, with timelines clarified after an initial pilot phase.

Can poor labeling be fixed after model training?

Poor labeling is difficult to fix after training because models directly learn label noise as a signal. Correcting labels usually requires retraining or fine-tuning with clean data, since models cannot reliably separate wrong labels from valid patterns once learned.