What is Deep Machine Learning? Expert Guide!

- January 2, 2026

Deep machine learning is no longer just a trending term. It is a core technology driving real-world systems you rely on every day. Whether it’s detecting fraud in financial transactions, improving medical imaging accuracy, or powering intelligent search engines, its impact is practical and measurable.

Deep machine learning is a focused area of artificial intelligence that trains multi-layer neural networks on large datasets to identify patterns, make predictions, and adapt over time. Its main strength is that it can handle complex, unstructured data with high accuracy. Read on to explore the details in this expert guide.

Contents

- 1 What Is Deep Machine Learning?

- 2 How Deep Machine Learning Works?

- 3 Deep Learning Vs Machine Learning

- 4 What Is The Purpose Of Deep Learning? Why Is It Popular?

- 5 Deep Learning Applications

- 5.1 1. Virtual Assistants (Alexa, Siri, Google Assistant)

- 5.2 2. Fraud Detection Systems

- 5.3 3. Autonomous Self-Driving Vehicles

- 5.4 4. Medical Diagnosis and Healthcare

- 5.5 5. Automatic Language Translation

- 5.6 6. Image and Facial Recognition

- 5.7 7. Personalized Content Recommendations

- 5.8 8. Chatbots and Customer Service

- 6 Build Your Deep Machine Learning Future with Webisoft!

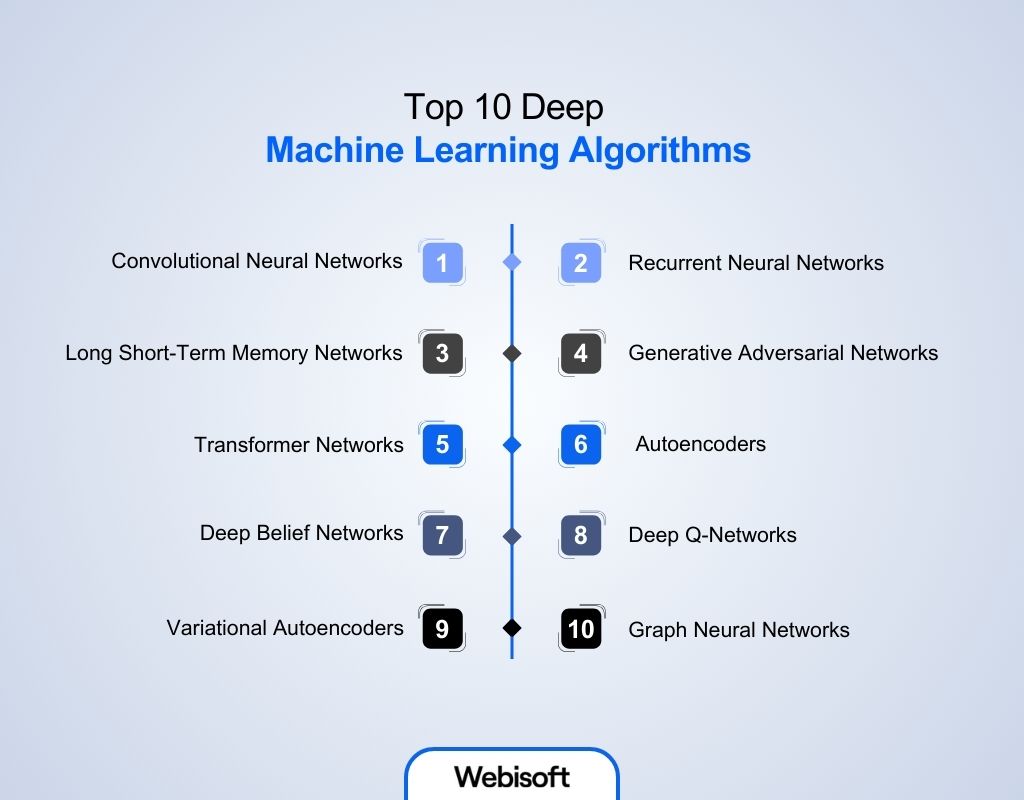

- 7 Top 10 Deep Machine Learning Algorithms

- 7.1 1. Convolutional Neural Networks (CNNs)

- 7.2 2. Recurrent Neural Networks (RNNs)

- 7.3 3. Long Short-Term Memory Networks (LSTMs)

- 7.4 4. Generative Adversarial Networks (GANs)

- 7.5 5. Transformer Networks

- 7.6 6. Autoencoders

- 7.7 7. Deep Belief Networks (DBNs)

- 7.8 8. Deep Q-Networks (DQNs)

- 7.9 9. Variational Autoencoders (VAEs)

- 7.10 10. Graph Neural Networks (GNNs)

- 8 What Are The Benefits Of Using Deep Learning Models?

- 9 Potential Challenges Of Using Deep Learning Models

- 10 Deep Learning Infrastructure Requirements

- 11 The Future of Deep Learning Technology

- 12 Webisoft: Your Senior Engineering Partner for Custom Deep Learning Architecture

- 13 Build Your Deep Machine Learning Future with Webisoft!

- 14 In Closing

- 14.1 Frequently Asked Questions

- 14.2 1. How long does it take to deploy a custom deep learning model?

- 14.3 2. Do we need to own our own GPUs to work with Webisoft?

- 14.4 3. What is the difference between Supervised and Unsupervised Deep Learning?

- 14.5 4. Can deep learning models work with our existing legacy software?

What Is Deep Machine Learning?

Deep machine learning is a subset of machine learning built on multilayered neural networks. Its design is loosely inspired by how the human brain learns: the network is trained on large datasets and gradually picks up the patterns in them.

The word “deep” refers to the multiple layers in the network. Additional layers let the network represent more sophisticated patterns. These systems discover useful feature representations from data automatically, so you don’t need to manually program every detail.

The network learns patterns on its own. Each layer builds something more complex than the previous one. This layered, highly flexible structure explains much of deep learning’s power and versatility.

On complex tasks with enough data, deep learning is often more accurate and needs less manual guidance than traditional machine learning. The technology powers many of the products you interact with daily.

Defining Neural Networks

Neural networks form the foundation of all deep learning methods. Think of a neural network as a collection of connected units called neurons.

These artificial neurons are loosely modeled on the biological neurons in your brain. Each neuron holds nothing more than a numeric value, its activation. Connections between neurons resemble axon-synapse connections in biological brains, and each connection has a weight: a positive or negative number that sets its strength.

Each neuron receives input data and combines it using its learned weights. Inputs with larger weights (in absolute value) have more influence on the neuron’s output. Think of weights as determining how much of each input passes through.

When a neural network learns, these weights change. The backpropagation algorithm computes how much each weight contributed to the prediction error, and an optimizer such as gradient descent adjusts the weights to reduce that error. This weight adjustment process is what we call training.
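As a rough illustration of the training process described above, the sketch below trains a single artificial neuron on one example. The sigmoid activation, the squared-error loss, the learning rate, and all the numbers are assumptions chosen for the demo; they do not come from any particular system.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, squashed into the range (0, 1)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_step(inputs, target, weights, bias, lr=0.5):
    """One gradient-descent update for the loss 0.5 * (out - target) ** 2."""
    out = neuron(inputs, weights, bias)
    # Chain rule: d(loss)/dz = (out - target) * sigmoid'(z)
    delta = (out - target) * out * (1.0 - out)
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * delta
    return new_weights, new_bias

weights, bias = [0.1, -0.2], 0.0   # arbitrary starting weights
x, y = [1.0, 2.0], 1.0             # one training example and its correct answer

before = neuron(x, weights, bias)
for _ in range(100):
    weights, bias = train_step(x, y, weights, bias)
after = neuron(x, weights, bias)

print(f"prediction before training: {before:.3f}, after: {after:.3f}")
```

Each step nudges the weights in the direction that shrinks the error, so the prediction moves toward the target of 1.0. Real networks repeat the same idea across many neurons and many examples.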

Historical Note:

- In 1943, Warren McCulloch and Walter Pitts introduced the first mathematical neural network model. Their goal was understanding how brains produce complex thought from simple neuron responses.

- Frank Rosenblatt introduced the perceptron in 1957 at the Cornell Aeronautical Laboratory. Its hardware implementation, the Mark I Perceptron, was a room-sized machine that used an array of photocells and an artificial neural network for image recognition.

How Does Deep Machine Learning Work?

Deep machine learning works through multiple layers of artificial neurons that process data step by step. Each layer learns simple patterns first, then combines them to understand complex features.

During training, the model compares its predictions with correct answers and adjusts itself using algorithms like backpropagation. With large datasets and powerful GPUs, these models gradually improve accuracy and make intelligent decisions from unstructured data.

For example, in face recognition, one layer learns edges, another learns facial features, and deeper layers identify the person, even across different images or lighting conditions.
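To make the layer-by-layer idea concrete, here is a minimal sketch of a forward pass through a two-layer network. The layer sizes, the ReLU activation, and the hand-picked weights are illustrative assumptions; in a trained model the weights would be learned.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def dense(v, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs."""
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, biases)]

def forward(x, layers):
    """Pass the input through every layer; ReLU follows all but the last."""
    activations = [x]
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
        activations.append(x)
    return activations

layers = [
    # 2 inputs -> 3 hidden units
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),
    # 3 hidden units -> 1 output
    ([[1.0, 1.0, 1.0]], [0.0]),
]

acts = forward([2.0, 1.0], layers)
print(acts)  # input, hidden activations, output
```

The hidden layer turns the raw input into intermediate features, and the output layer combines those features into the final value.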

Deep Learning Vs Machine Learning

The primary difference lies in how each approach learns and processes data. Classic machine learning usually depends on human experts to select and engineer the features a model learns from. Deep learning requires less human intervention because it learns those features directly from raw data during training.

What Is The Purpose Of Deep Learning? Why Is It Popular?

Deep learning learns from data, loosely as humans do, and solves problems that were previously out of reach. The technology underpins many recent innovations in artificial intelligence. As of 2024, 72% of companies worldwide use AI in at least one business function. Here is why it is so popular:

1. Scaling with Data

Traditional algorithms often plateau in performance beyond a certain amount of data. Deep machine learning models tend to keep improving as you give them more data, provided the model is large enough to use it.

2. Unstructured Data

It is the most effective technology available for understanding unstructured data. Photos, videos, and human speech fall into this category.

Deep learning neural networks process unstructured data with far less manual preparation than earlier methods. This capability unlocks previously inaccessible insights.

3. End-to-End Learning

It can handle a task from start to finish with a single model. End-to-end learning simplifies design by removing manual feature engineering and intermediate steps.

For example, a single model can take raw audio files and output translated text transcripts without needing five different sub-programs.

4. Automatic Feature Discovery

Traditional machine learning pipelines require manual feature engineering, on which experts spend significant time. Deep learning algorithms learn features from data automatically. The network discovers patterns without being told what to look for, which removes much of this tedious manual work.

5. Superhuman Performance

Modern architectures have demonstrated human-level or superhuman performance on specific tasks in numerous domains. Healthcare has reported diagnostic accuracy increases of up to 30%.

Self-driving cars navigate complex environments. Virtual assistants ranging from Alexa to Siri represent the most popular application. These achievements seemed impossible just years ago.

Deep Learning Applications

From cybersecurity for fraud prevention to autonomous vehicles, deep learning applications are rapidly transforming businesses and daily lives.

Today, it’s actively being used in various fields, including healthcare, finance, and cybersecurity. Let’s explore how this technology powers the services you interact with every single day.

1. Virtual Assistants (Alexa, Siri, Google Assistant)

The most popular application of deep learning is virtual assistants, ranging from Alexa to Siri to Google Assistant. These assistants utilize deep learning and neural networks to adapt and improve based on constantly updated data.

2. Fraud Detection Systems

Fraud detection systems excel at identifying anomalies in user transactions. They aggregate data from various sources, such as device location and credit card purchasing patterns.

Companies like Signifyd, Mastercard, and Riskified use these models to detect and prevent fraudulent activities.

3. Autonomous Self-Driving Vehicles

Deep learning is the force that is bringing autonomous driving to life. Millions of data samples are fed to a system to build and train a model. Vehicles use convolutional neural networks to recognize road signs in real time and to classify different parts of the road.

4. Medical Diagnosis and Healthcare

Deep learning techniques are being used to make quick and early diagnoses of life-threatening diseases. Its use is helping minimize the burden on clinicians and doctors.

It is changing healthcare by enabling earlier and more accurate disease detection. Models help doctors analyze medical images like X-rays and MRI scans.

5. Automatic Language Translation

Machine translation has evolved dramatically with deep learning advances. Distributed representations and neural sequence models, including convolutional networks and Transformers, have helped natural language processing mature.

Translation systems now handle context and cultural nuances better. They provide usable translations across dozens of languages almost instantly.

6. Image and Facial Recognition

Face recognition systems are used in authentication systems, attendance tracking, and photo organization apps. These systems build unique facial feature maps automatically. Deep learning models learn to pick up on distinguishing traits such as eye spacing, nose shape, and jawline.

7. Personalized Content Recommendations

Deep learning models help companies create tailored experiences by processing vast amounts of data and recognizing patterns. Streaming platforms analyze your viewing history, preferences, and access times. They predict what content you’ll enjoy next accurately.

8. Chatbots and Customer Service

Chatbots are essential in customer service, e-learning, and healthcare applications. AI assistants built on deep learning algorithms keep learning from their own data and from human-to-human dialogue.

They analyze existing interactions between customers and support staff and generate messages and responses.

Ready to lead the AI revolution? Partner with Webisoft, a premier deep machine learning company, to deploy these high-performance algorithms and scale your custom infrastructure today.

Build Your Deep Machine Learning Future with Webisoft!

Advanced artificial intelligence and custom infrastructure built for you.

Top 10 Deep Machine Learning Algorithms

1. Convolutional Neural Networks (CNNs)

CNNs are specialized for processing data with a grid-like topology, like images. They succeed in image classification, object detection, and face recognition tasks.

Filters recognize specific patterns like edges, textures, and shapes from images. Pooling layers reduce dimensionality while retaining essential information.
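The filter and pooling steps can be sketched in a few lines of plain Python. The 5x5 image and the vertical-edge filter below are made up for illustration; in a trained CNN the filter values are learned rather than written by hand.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the strongest response in each window."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# 5x5 image: dark columns (0) on the left, bright columns (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-written vertical-edge filter (an assumption for this demo)
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)  # 4x4 map, non-zero only where brightness jumps
pooled = max_pool(features)       # 2x2 summary of the 4x4 map

print(features)
print(pooled)
```

The feature map responds only at the column where dark meets bright, and pooling shrinks the map while keeping that response.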

2. Recurrent Neural Networks (RNNs)

RNNs are designed to recognize patterns in data sequences like time series or natural language. They maintain a hidden state that captures information about previous inputs. This memory allows the network to carry context forward over time. At every step, the hidden state is updated from the current input and the previous hidden state.
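A minimal sketch of the hidden-state update, using a single scalar state for readability. The weights `w_x` and `w_h` and the tanh activation are illustrative assumptions; real RNNs use learned weight matrices.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, bias):
    """Scalar recurrent update: mix the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + bias)

def run_rnn(sequence, w_x=0.5, w_h=0.8, bias=0.0):
    h = 0.0  # the hidden state starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, bias)
        states.append(h)
    return states

# Both sequences end with the same input (0.0) but have different histories
with_history = run_rnn([1.0, 0.0])
without_history = run_rnn([0.0, 0.0])

print(with_history[-1], without_history[-1])
```

The final inputs are identical, yet the final states differ, because the first sequence still carries a trace of its earlier input.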

3. Long Short-Term Memory Networks (LSTMs)

LSTMs are a special kind of RNN capable of learning long-term dependencies. Their gate mechanisms mitigate the vanishing-gradient problem that makes long-term dependencies hard for plain RNNs to learn.

Cell states run through entire sequences, carrying information across steps. Three gates control information flow: input, forget, and output.
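The three gates and the cell state can be sketched as a single scalar LSTM step. The parameter dictionary `p` and its values are hypothetical, chosen so the forget gate stays open and the input gate stays nearly closed; a real LSTM learns these parameters and works on vectors.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. `p` maps each gate name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, bias = p[name]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # the new information itself
    c = f * c_prev + i * g            # cell state carries memory across steps
    h = o * math.tanh(c)              # hidden state passed to the next step
    return h, c

# Hypothetical parameters: forget gate almost fully open, input gate almost closed
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 1.0, 0.0),
}

h, c = lstm_step(x=0.5, h_prev=0.0, c_prev=1.0, p=params)
print(h, c)
```

With these settings the cell state stays close to its previous value of 1.0, which is the mechanism that lets an LSTM hold information over many steps.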

4. Generative Adversarial Networks (GANs)

GANs comprise two neural networks competing against each other. A generator creates synthetic data while a discriminator evaluates authenticity.

This adversarial process creates realistic images, videos, and audio. They’re used for creating deepfakes and synthetic training data.

5. Transformer Networks

Transformers are the backbone of many modern NLP models. Self-attention mechanisms compute how much each part of the input should influence every other part.

They process all positions of the input in parallel for improved efficiency. Positional encoding adds information about word positions in sequences. They power large language models like GPT and BERT.
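Self-attention can be sketched as scaled dot-product attention in plain Python. To keep the example short, the queries, keys, and values are all the raw token vectors, which is a simplifying assumption: real Transformers first pass the tokens through learned projection matrices. The token values are made up.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # one weight per input position
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how strongly one token attends to every token in the sequence. The rows are independent of each other, which is why they can be computed in parallel.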

6. Autoencoders

Autoencoders are unsupervised learning models for data compression and denoising. They learn to encode data into lower-dimensional representations efficiently. The decoder reconstructs the original data from encoded information accurately. They’re used for feature learning and dimensionality reduction tasks.

7. Deep Belief Networks (DBNs)

DBNs are composed of multiple stacked layers of Restricted Boltzmann Machines. They’re used for feature learning, image recognition, and pretraining.

Each layer learns increasingly complex features from input data. Layer-by-layer training uses greedy algorithms for efficient learning.

8. Deep Q-Networks (DQNs)

DQNs combine deep learning with Q-learning for reinforcement learning tasks. They handle environments with high-dimensional state spaces effectively. A neural network replaces the Q-table and approximates the Q-values. They’ve been successfully applied to playing Atari video games.
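The Q-learning update that a DQN builds on can be shown with a small table. This sketch is the tabular version, not a DQN: a DQN trains a neural network toward the same target instead of storing values in a table. The states, actions, reward, and hyperparameters are made up for illustration.

```python
def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Tabular Q-learning update; a DQN fits a network to the same target."""
    best_next = max(q[next_state].values())
    target = reward + gamma * best_next  # bootstrapped estimate of the return
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]

# Hypothetical two-state problem
q = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 1.0, "right": 2.0},
}

new_value = q_update(q, "s0", "right", reward=1.0, next_state="s1")
print(new_value)
```

The target here is 1.0 + 0.9 * 2.0 = 2.8, and the stored value moves one tenth of the way toward it.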

9. Variational Autoencoders (VAEs)

VAEs are generative models using variational inference for new data generation. They generate new data points similar to training data. VAEs are used for generative tasks and anomaly detection. They learn probability distributions of input data efficiently. Healthcare uses them for drug discovery and molecule generation.

10. Graph Neural Networks (GNNs)

GNNs generalize neural networks to graph-structured data effectively. Nodes represent entities and edges represent relationships between them.

Message passing allows nodes to aggregate information from neighbors. They’re used for social network analysis and recommendation systems. Molecular structure analysis benefits greatly from GNN applications.
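One round of message passing can be sketched with mean aggregation. The three-node graph and its features are invented for the example, and the learned transformation that a real GNN layer applies after aggregation is left out to keep the sketch short.

```python
def message_passing(features, edges):
    """One round of mean aggregation over each node's neighbors plus itself."""
    neighbors = {node: [node] for node in features}  # include a self-loop
    for u, v in edges:  # treat the graph as undirected
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated = {}
    for node, group in neighbors.items():
        dim = len(features[node])
        updated[node] = [
            sum(features[n][d] for n in group) / len(group) for d in range(dim)
        ]
    return updated

# Toy graph: a - b - c
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}
edges = [("a", "b"), ("b", "c")]

updated = message_passing(features, edges)
print(updated)
```

After one round, node `c` has picked up part of the features of `b`. Stacking more rounds lets information travel further across the graph.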

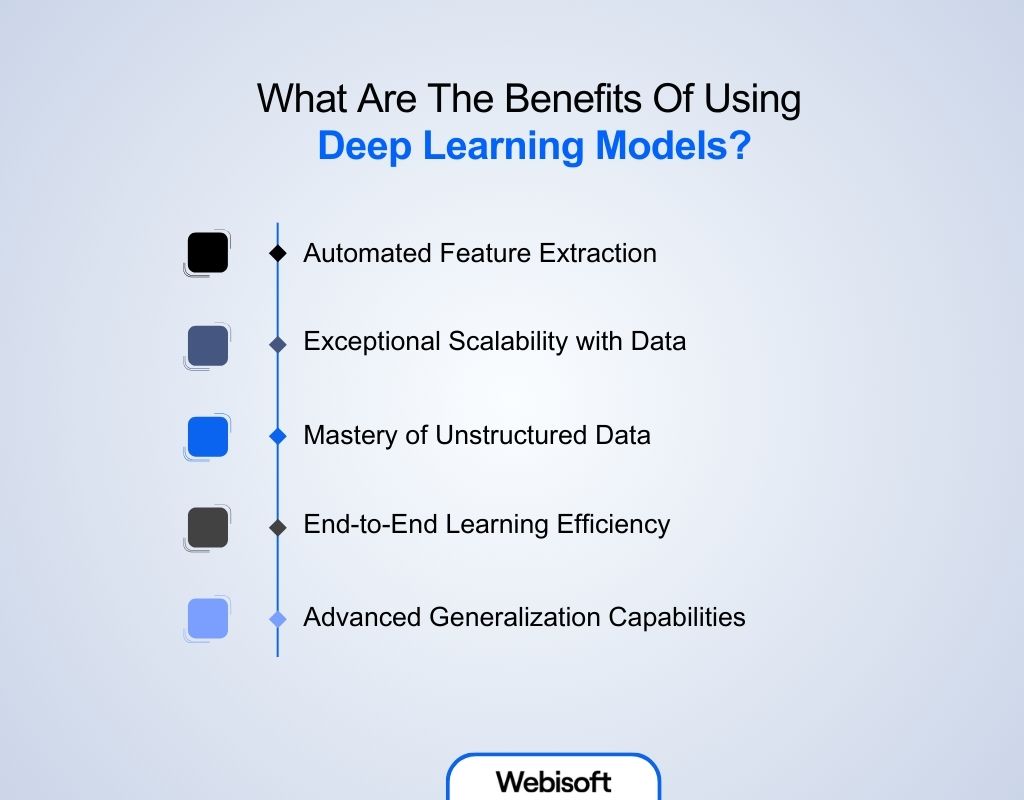

What Are The Benefits Of Using Deep Learning Models?

Deep learning models give computers a human-like ability to understand the world. They handle massive amounts of data and find hidden patterns that traditional software simply cannot see or process.

1. Automated Feature Extraction

You no longer have to tell the computer exactly what to look for. Deep learning models “teach” themselves to identify critical details, such as the specific curve of a lung nodule in an X-ray, saving experts thousands of hours of manual feature engineering.

2. Exceptional Scalability with Data

Most software hits a “brain ceiling” where more data doesn’t help. Deep learning is different: the more information you feed it, the smarter it gets. This makes it the perfect partner for businesses growing their digital footprints.

3. Mastery of Unstructured Data

Most of our world is “messy”: think of voice notes, CCTV footage, or chaotic emails. These models excel at making sense of this chaos, allowing you to search through videos or translate languages almost as easily as searching a spreadsheet.

4. End-to-End Learning Efficiency

Instead of building five different programs to handle one task, deep learning does it all in one go. This “all-in-one” approach makes your systems faster, more reliable, and much easier for your technical teams to maintain and update.

5. Advanced Generalization Capabilities

These models don’t just memorize; they generalize. Because they learn the underlying structure of a task, they can often handle situations they have never seen before, like a self-driving car navigating a construction zone that was not in its training data.

Ready to Scale?

Stop struggling with rigid software and embrace the future. Partner with Webisoft, a leading artificial intelligence firm, to build custom deep learning models that automate your growth and secure your market lead.

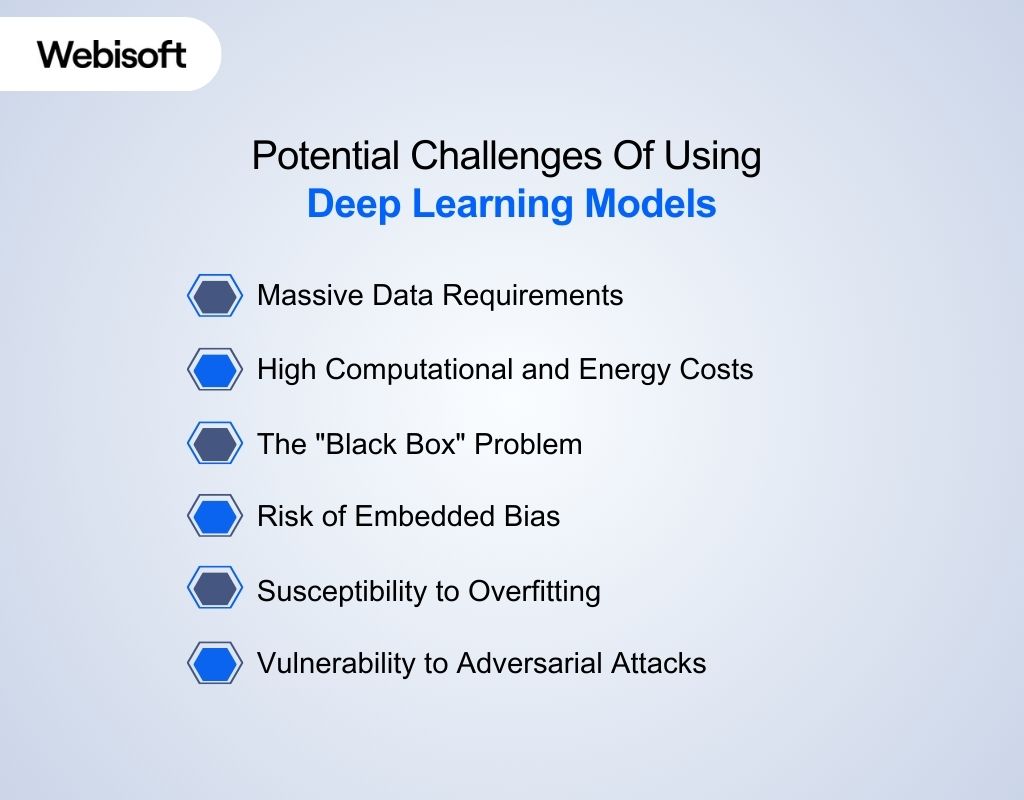

Potential Challenges Of Using Deep Learning Models

Deep learning can be a double-edged sword for your projects. While it is incredibly powerful, its complexity means you must manage high costs and “black box” decisions to ensure your AI remains a reliable and safe tool.

1. Massive Data Requirements

Deep learning models typically require millions of data points to achieve high accuracy, which can be expensive or impossible to collect. To solve this, experts use Transfer Learning, taking a pre-trained model and fine-tuning it with a much smaller, specialized dataset for your specific needs.

2. High Computational and Energy Costs

Training state-of-the-art models can require thousands of specialized chips and very large amounts of electricity. The solution lies in “Green AI” techniques, such as model pruning (removing unnecessary connections) and quantization (storing weights at lower numeric precision), which allow models to run efficiently on smaller, lower-power hardware.
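Pruning and quantization can both be sketched on a short list of weights. The threshold, the symmetric 8-bit scheme, and the weight values are assumptions picked for the demo; production toolchains offer several variants of each technique.

```python
def prune(weights, threshold):
    """Magnitude pruning: zero out weights whose absolute value is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]

def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats onto integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    return [q * scale for q in q_weights]

weights = [0.82, -0.03, 0.40, 0.007, -0.65]

pruned = prune(weights, threshold=0.05)   # the two smallest weights become 0.0
q_weights, scale = quantize_int8(pruned)  # small integers plus one float scale
restored = dequantize(q_weights, scale)   # approximate reconstruction

print(pruned)
print(q_weights)
print([round(r, 3) for r in restored])
```

The quantized weights fit in one byte each instead of four or eight, and each restored value differs from the original by at most half a quantization step.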

3. The “Black Box” Problem

It is often difficult to see exactly why a deep learning model made a specific decision, which creates trust issues in healthcare or law. Developers are now integrating Explainable AI (XAI) tools like SHAP or LIME, which estimate how much each input feature contributed to a result and, for images, can display this as a “heatmap.”

4. Risk of Embedded Bias

If training data contains human prejudices, the model will automate that unfairness. The professional fix is Algorithmic Auditing, using diverse datasets and fairness metrics to detect and reduce bias. Adopting this rigorous standard is essential for a true data-driven culture that values accuracy.

5. Susceptibility to Overfitting

A model can become so focused on the training data that it fails to work in the real world. Engineers combat this with regularization techniques such as weight decay and Dropout, which force the model to learn broader, more flexible patterns rather than just memorizing the specific examples it was shown.
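Dropout is simple enough to sketch directly. This is the common “inverted” variant, written in plain Python with a fixed random seed so the demo is repeatable; the drop rate and the activations are illustrative.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout: randomly zero activations and rescale the survivors."""
    if not training or rate == 0.0:
        return list(activations)  # at inference time every unit is used
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed, for a repeatable demo
hidden = [1.0] * 10

train_out = dropout(hidden, rate=0.5, rng=rng)        # some zeros, the rest scaled to 2.0
eval_out = dropout(hidden, rate=0.5, training=False)  # unchanged

print(train_out)
print(eval_out)
```

Because a different random subset of units is silenced on every training step, the network cannot rely on any single unit, which discourages memorization.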

6. Vulnerability to Adversarial Attacks

Small, nearly invisible changes to input data, like a few altered pixels on a stop sign, can trick a deep learning model into making dangerous errors. To counter this, teams use Adversarial Training, intentionally attacking their own models during the build phase to harden them against future attacks.
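One well-known way to craft such inputs is the fast gradient sign method (FGSM). The article does not name a specific attack, so treat FGSM as an illustrative choice. The sketch applies it to a tiny logistic classifier with made-up weights, where the gradient can be written by hand.

```python
import math

def predict(x, w, b):
    """Logistic classifier: probability that x belongs to the positive class."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(x, y, w, b, eps):
    """FGSM: move each feature by eps in the direction that raises the loss."""
    p = predict(x, w, b)
    grad = [(p - y) * wi for wi in w]  # d(cross-entropy)/dx for a logistic model
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input
w, b = [2.0, -3.0, 1.0], 0.0
x, y = [0.2, -0.1, 0.1], 1  # correctly classified as positive

x_adv = fgsm(x, y, w, b, eps=0.2)

print(f"original:    {predict(x, w, b):.3f}")
print(f"adversarial: {predict(x_adv, w, b):.3f}")
```

No feature moves by more than 0.2, yet the predicted probability drops below 0.5 and the label flips. Adversarial training adds examples like `x_adv`, with their correct labels, back into the training set.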

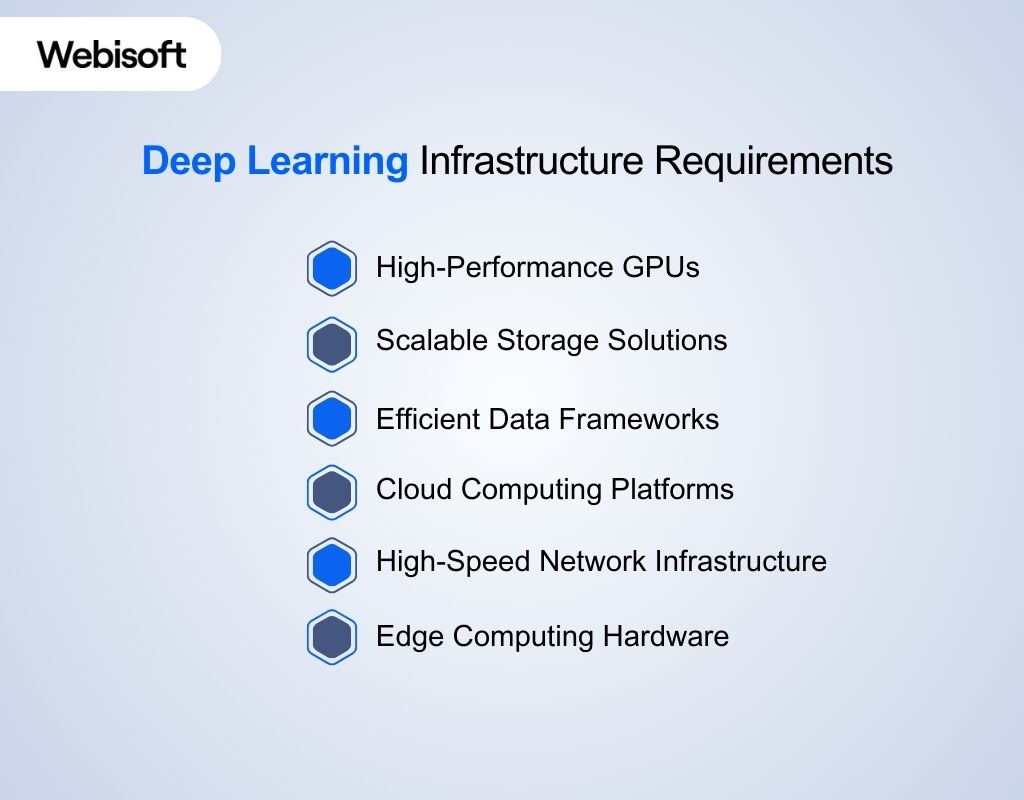

Deep Learning Infrastructure Requirements

Building a deep learning system requires more than a standard computer; you need a high-performance ecosystem designed for speed. By choosing the right mix of specialized chips, fast storage, and smart networking, you ensure your AI projects run efficiently without expensive delays.

1. High-Performance GPUs

Graphics Processing Units (GPUs) are the “muscles” of your AI, capable of performing thousands of calculations at once. To maximize their value, you should use GPU Clusters, which link multiple cards together so they can tackle massive model-training tasks in hours rather than months.

2. Scalable Storage Solutions

Deep learning thrives on millions of files, which can quickly overwhelm standard hard drives and create “data traffic jams.” The solution is All-Flash Storage, often paired with a Data Lake for organizing raw data, which lets your GPUs pull massive amounts of data quickly enough to keep the processors busy and productive.

3. Efficient Data Frameworks

Tools like PyTorch or TensorFlow are the “blueprints” that help you build models without starting from zero. To stay organized, you can use Containerization (like Docker), which packages your code and tools together so they work perfectly on any machine without setup headaches.

4. Cloud Computing Platforms

If you don’t want to buy expensive hardware, cloud providers like AWS or Google Cloud let you “rent” AI power. You can use Auto-scaling, which automatically adds more computing power when your task is heavy and turns it off when finished to save you money.

5. High-Speed Network Infrastructure

Training a single AI across multiple machines requires them to “talk” to each other at lightning speed. You should implement InfiniBand or 800G Ethernet, which provides a “super-highway” for data, ensuring that no single machine is left waiting for information from another.

6. Edge Computing Hardware

Sometimes, your AI needs to live on a phone or a drone rather than a giant server. To do this, you use AI Accelerators (NPUs), which are tiny, power-efficient chips designed to run your deep learning models locally without needing a constant internet connection.

The Future of Deep Learning Technology

The future of deep learning is shifting from machines that simply “chat” to systems that “act” and “understand physics.”

| Trend | The “Viral” Feature | Why It Matters to You |
| --- | --- | --- |
| Agentic AI | Self-executing tasks | Your AI acts like a digital employee, not just a tool. |
| World Models | Physics-aware reasoning | Robots can perform real-world tasks without constant human correction. |
| Liquid AI | Continuous learning | The system improves as you use it, day by day. |
| Neuromorphic AI | ~1% energy consumption | Devices run AI longer without draining batteries. |
| Neuro-Symbolic AI | Reduced hallucinations | AI outputs become more reliable for legal or medical use. |

1. The Rise of Agentic AI

We are moving past chatbots that wait for your command toward Autonomous Agents. These systems don’t just give you a flight link; they use deep learning to plan your entire trip, negotiate prices, and handle the booking across multiple apps without you lifting a finger.

2. “Liquid” Neural Networks

Current AI is “frozen” after training, but Liquid Neural Networks (LNNs) can change their own underlying equations on the fly. This “neuroplasticity” allows them to adapt to new, noisy data, like a drone suddenly flying into a storm, without needing to be retrained from scratch.

3. World Models and Physical AI

New models are being trained to understand the “rules of reality,” such as gravity and friction. World Models aim to let robots simulate millions of scenarios in their “mind” before ever moving a physical limb, which should make them safer and more precise in human environments.

4. Neuromorphic “Brain-Like” Chips

To stop AI from consuming massive amounts of energy, the trend is shifting toward Neuromorphic Computing. These chips mimic the human brain’s “spiking” nature, only using power when information is actually moving, which could make your phone’s AI more energy-efficient by orders of magnitude.

5. Neuro-Symbolic Reasoning

Deep learning is great at patterns but weaker at strict logic. The future lies in Neuro-Symbolic AI, which combines the creative “guessing” power of neural networks with the hard, explicit rules of logic. This could substantially reduce “hallucinations” in critical fields like medicine and law.

6. Small Language Models (SLMs) at the Edge

The hype is moving away from “bigger is better” toward SLMs that live entirely on your device. These compact models use “distillation” techniques, in which a small model is trained to imitate a larger one, to bring capable language models to a smartwatch or a pair of AR glasses without needing an internet connection.

Webisoft: Your Senior Engineering Partner for Custom Deep Learning Architecture

In 2026, the cost of ignoring deep learning is a permanent loss of market share to competitors who automate faster than you.

Without direct access to senior engineering expertise, your company faces high technical debt and inefficient AI models that drain your budget.

Webisoft is an elite team of senior engineers that delivers high-performance, custom deep learning systems to solve your most critical business bottlenecks.

Why Webisoft Stands Out in Deep Learning

- 90% Senior-Level Architects: You work directly with veteran engineers who eliminate the errors of junior developers, ensuring your neural networks are stable for enterprise-scale use.

- GPU & TPU Cost Optimization: We architect your systems to reduce hardware overhead, maximizing computational output so you get faster results without overspending on cloud resources.

- Agentic AI Integration: Our team builds autonomous systems that do not just generate text; they execute multi-step business workflows and interact with external APIs to complete tasks.

- Model Transparency & Safety: We implement Explainable AI (XAI) frameworks, providing your team with technical data and logic logs to verify the accuracy and fairness of every automated decision.

- Infrastructure Scalability: We engineer your deep learning models to handle sudden spikes in data volume, maintaining 99.9% uptime while integrated directly into your existing software stack.

In Closing

Deep machine learning is no longer a futuristic concept; it is the fundamental architecture driving modern business automation and intelligence. By mastering specialized infrastructure and navigating current technical challenges, your organization can turn massive data into autonomous action.

As trends shift toward agentic and efficient AI, having a senior-led engineering strategy is the only way to maintain a true competitive edge. Ready to Build Your AI Future? Partner with the senior architects at Webisoft to deploy custom, high-performance deep learning solutions that scale with your business.

Frequently Asked Questions

1. How long does it take to deploy a custom deep learning model?

For an enterprise-grade solution, development typically takes 3 to 6 months. This includes data engineering, model architecture design, and rigorous stress testing before going live.

2. Do we need to own our own GPUs to work with Webisoft?

No, you don’t. We can set up and manage cloud-based GPU clusters (like AWS or Google Cloud) for you, allowing you to pay only for the computing power you actually use.

3. What is the difference between Supervised and Unsupervised Deep Learning?

Supervised learning uses labeled data (like photos tagged “cat”) to learn specific tasks. Unsupervised learning finds hidden patterns in raw data without labels, which is perfect for discovering new customer segments.

4. Can deep learning models work with our existing legacy software?

Yes. We specialize in API-driven integration, where we wrap the deep learning model in a secure layer that communicates seamlessly with your current databases and applications.