Data Tokenization Tools: 7 Best Side-by-Side Picks


- September 20, 2025

Over 80% of data breaches involve sensitive customer data like credit card numbers, health records, or personal IDs. One mistake, just one leak, can cost you millions in fines and destroy customer trust. If you’re handling that kind of data, security can’t be an afterthought. That’s where data tokenization tools make a real difference. Instead of storing real information, they replace it with random tokens that are useless if stolen. This guide does more than list tools. You’ll learn what tokenization is, how it works, which tools match your needs, and how to pick and implement the right one without slowing things down.

Contents

- 1 Data Tokenization Explained: How It Works and When to Use It

- 2 7 Best Data Tokenization Tools and What They’re Best At

- 2.1 1. CipherTrust – Best for complex enterprise deployments

- 2.2 2. Very Good Security (VGS) – Best developer-first solution

- 2.3 3. Skyflow – Best for SaaS & PII tokenization

- 2.4 4. Enigma Vault – Best lightweight, fast implementation

- 2.5 5. IBM Guardium – Best for legacy systems

- 2.6 6. Protegrity – Best for format-preserving encryption (FPE)

- 2.7 7. ShieldConex (Bluefin) – Best for card tokenization in payments

- 3 What to Look for in a Tokenization Tool

- 4 Start Securing Your Data with Webisoft!

- 5 Vaulted vs Vaultless Tokenization: Which Should You Choose?

- 6 Data Tokenization vs Data Masking: What’s the Difference?

- 7 Why Webisoft Is Your Implementation Partner for Data Tokenization

- 8 Conclusion: What’s Your Next Step?

- 9 Frequently Asked Questions

Data Tokenization Explained: How It Works and When to Use It

Data tokenization is a method that replaces sensitive information, like credit card numbers, health records, or personal identifiers, with random strings called tokens. These tokens have no real value on their own and cannot be traced back to the original data without access to the secure system that manages the mapping. Unlike an encrypted value, a vaulted token is not mathematically derived from the original, so there is no key that can reverse it. The original data is removed from your environment and either stored separately in a secure vault or protected with vaultless methods that generate tokens algorithmically. This makes tokenization an effective approach to protecting sensitive data. Here’s why data tokenization tools are widely used:

- Tokens can keep the same format as the real data, so your applications continue to run smoothly.

- Even if an attacker gains access to your system, tokenized values reveal nothing useful.

- It supports real-time operations without slowing performance.

- It reduces compliance scope for standards like PCI DSS and HIPAA.

Use tokenization when:

- You handle credit card data, medical records, or regulated identifiers, especially in sectors where blockchain in healthcare is shaping how sensitive data is processed.

- You need to comply with regulations like PCI DSS, HIPAA, or GDPR.

- You work with APIs or cloud-based systems that require fast, secure data processing.

Unlike data masking, tokenization allows for safe, controlled detokenization. And compared with encryption, it offers stronger separation between the data and the security logic. If you’re exploring data tokenization software, understanding how data tokenization works is the first step. It helps you build a reliable tokenization solution that fits your compliance and performance needs.
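To make the vaulted flow described above concrete, here is a minimal sketch in Python. It assumes digit-only inputs and keeps the mapping in an in-memory dictionary; the `TokenVault` class and its method names are hypothetical and stand in for the secured, access-controlled vault a real product would provide.

```python
import secrets


class TokenVault:
    """Toy in-memory vault mapping tokens to original values (illustration only)."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        if not (value.isascii() and value.isdigit()):
            raise ValueError("this sketch only handles non-empty digit strings")
        # Reuse the existing token so one input always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        while True:
            # Random digits of the same length: the token keeps the input's
            # format but has no mathematical relationship to it.
            token = "".join(secrets.choice("0123456789") for _ in value)
            if token != value and token not in self._token_to_value:
                break
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_value[token]


vault = TokenVault()
card = "4111111111111111"
token = vault.tokenize(card)
print(token)                    # a random 16-digit string, different on each run
print(vault.detokenize(token))  # the original card number
```

Because the token is random, stealing it without the vault reveals nothing about the card number, which is the property the section relies on.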

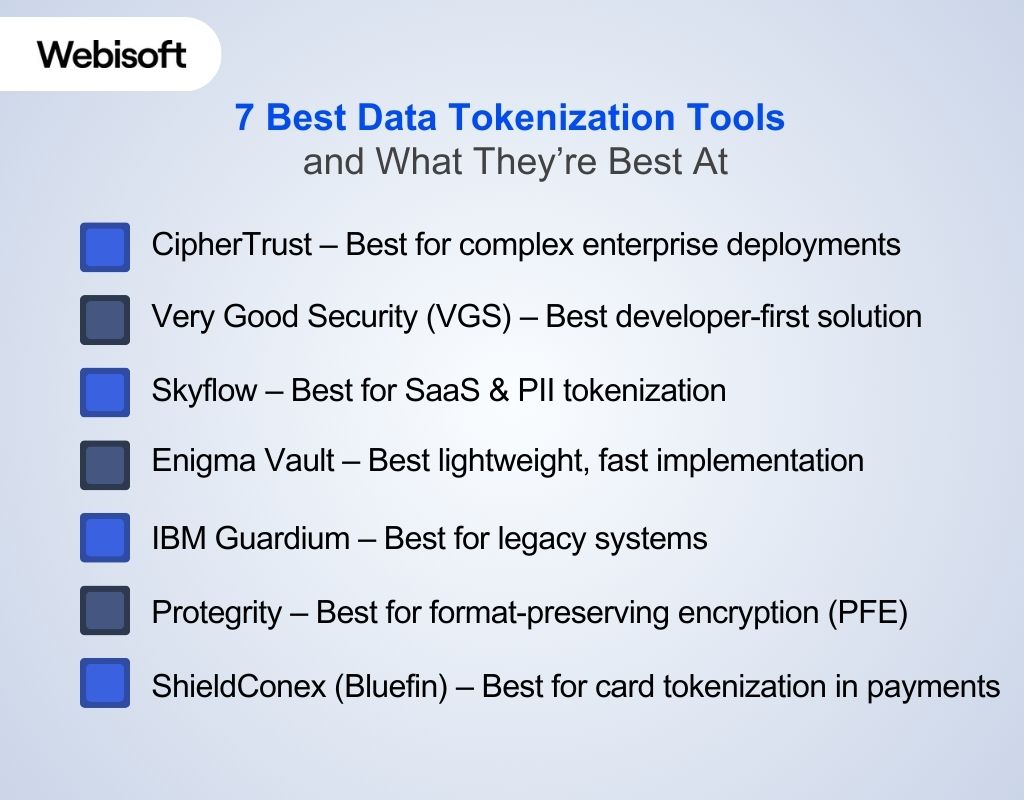

7 Best Data Tokenization Tools and What They’re Best At

Not all data tokenization tools are built the same. Some are perfect for SaaS and APIs; others shine in enterprise or payment systems. To save you hours of research, here are the 7 best tools and exactly what each one does best.

1. CipherTrust – Best for complex enterprise deployments

CipherTrust Tokenization offers both vaulted and vaultless tokenization methods alongside format-preserving masking. It replaces sensitive fields without altering database schemas and deploys easily in cloud, big-data, and data center environments, which helps reduce PCI and GDPR scope.

- Vault type: Offers both vaulted and vaultless architectures

- Key integrations: REST APIs, LDAP/AD, dynamic masking templates, support for databases and ERP systems like SAP

- Compliance certifications: Built to meet PCI DSS, GDPR, HIPAA; FIPS 140-2 certified platform

- Pros: Flexible architecture, strong governance, format-preserving support

- Cons: High enterprise complexity and licensing costs

- Our Take: Ideal if you need masking and tokenization tightly integrated across enterprise systems with audit-ready compliance.

2. Very Good Security (VGS) – Best developer-first solution

VGS provides a vault-based tokenization service with developer-friendly REST APIs and SDKs. It abstracts all PCI data handling so your applications never store real card or PII data. This dramatically shrinks PCI audit scope and speeds up deployment in modern SaaS environments.

- Vault type: Vaulted universal token vault

- Key integrations: APIs for card, ID, PHI, e-commerce platforms; cloud services like AWS

- Compliance certifications: PCI DSS ready; significantly reduces audit burden

- Pros: Minimal setup, developer-centric, fast integration

- Cons: Fewer enterprise features like dynamic masking or vaultless options

- Our Take: Perfect for agile teams building SaaS and payment flows needing fast, secure compliance without heavy infrastructure.

3. Skyflow – Best for SaaS & PII tokenization

Skyflow’s privacy vault uses a distributed vault architecture with hybrid tokenization and encryption, preserving usability for analytics while strong access controls protect data. It supports PII workflows across third-party systems and cloud data pipelines.

- Vault type: Distributed (hybrid token + encryption vault)

- Key integrations: Connectors for Snowflake, Databricks, MongoDB; API-first architecture

- Compliance certifications: GDPR, HIPAA, CCPA with data residency support

- Pros: Analytics-compatible, governance-ready, PII focus

- Cons: Learning curve for hybrid token/encryption model

- Our Take: Use when you need secure, analytics-ready PII tokenization in scalable SaaS environments.

4. Enigma Vault – Best lightweight, fast implementation

Enigma Vault is a cloud-based, vaulted tokenization service certified to PCI Level 1 and ISO 27001. With easy plug-and-play APIs and payment provider integrations, it enables rapid deployment for payment card and file tokenization.

- Vault type: Cloud-based vaulted token service

- Key integrations: Card vault iframe, file vault APIs, integrators like Clover or WorldPay

- Compliance certifications: PCI Level 1, ISO 27001

- Pros: Minimal dev work, fast PCI compliance

- Cons: Limited customization beyond card-based workflows

- Our Take: Choose for quick tokenization projects with minimal engineering effort and strong compliance focus.

5. IBM Guardium – Best for legacy systems

IBM Guardium offers field-level tokenization and dynamic masking tailored to legacy or on-prem systems. It integrates with SIEMs, databases, and big data platforms, helping enterprises maintain compliance in regulated industries.

- Vault type: Vault-based tokenization with masking

- Key integrations: Databases, big data, SIEM tools, enterprise data platforms

- Compliance certifications: Built for HIPAA, PCI DSS, government regulations

- Pros: Enterprise controls, legacy-friendly, strong governance

- Cons: Complex interface, less cloud or SaaS-friendly

- Our Take: Ideal for clients with legacy architecture needing strong field-level control and auditing.

6. Protegrity – Best for format-preserving encryption (FPE)

Protegrity’s solution offers vaultless tokenization that preserves data format and structure, ideal for analytics and reporting workloads. It scales across cloud data repositories without lookup overhead, improving performance while meeting compliance standards.

- Vault type: Vaultless (static codebook-based) tokenization

- Key integrations: AWS Glue, Athena, Redshift, JDBC/SDK, big data frameworks

- Compliance certifications: Supports PCI DSS, GDPR, HIPAA; centralized governance

- Pros: High performance, analytics-friendly, cloud-native

- Cons: Requires policy-driven governance setup

- Our Take: Choose when you need format-preserving tokens for data lakes and analytics pipelines at scale.

7. ShieldConex (Bluefin) – Best for card tokenization in payments

ShieldConex provides hardware-assisted, vaultless tokenization-as-a-service tailored to real-time payment and PII flows. It integrates with network token systems and streamlines PCI compliance across multiple payment channels.

- Vault type: Vaultless (hardware-backed)

- Key integrations: Payment orchestration, card network tokens, iframe/API entry tools

- Compliance certifications: Optimized for PCI DSS, CCPA, GDPR

- Pros: Fast payment flows, reduced PCI scope, major card network support

- Cons: Focused mainly on card data, less suited for general PII or analytics use

- Our Take: Go with ShieldConex if you need tight, compliant integration for card processing and minimal PCI burden.

What to Look for in a Tokenization Tool

Choosing a data tokenization tool isn’t just about security. It’s about finding the right fit for your systems, compliance needs, and day-to-day operations. To get the full benefits of data tokenization, here are the key things you should focus on:


Real-Time Processing Without Performance Lag

You need a tool that doesn’t slow your app down. If you’re dealing with high-traffic systems or live customer interactions, look for tokenization that supports real-time processing with minimal delay. This is especially important if you’re using vaultless methods for performance and scale.

Flexible Integration with Your Tech Stack

Make sure the tool plays well with your current environment: databases, APIs, cloud platforms, and even legacy systems. Good tokenization tools offer RESTful APIs, SDKs, and connectors so you can integrate without rebuilding your architecture from scratch.

Role-Based Access and Granular Detokenization Control

Not everyone on your team should see real data. Choose a solution with detailed access control, one that lets you manage who can detokenize what and logs every access event for security and audits.
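As a rough illustration of role-based detokenization with logging, the sketch below checks a role against a policy before returning the real value and records every attempt. The `GuardedVault` class, the `DETOKENIZE_POLICY` mapping, and the role and field names are all hypothetical; a real tool would back this with its own identity provider and tamper-resistant log storage.

```python
from datetime import datetime, timezone

# Hypothetical policy: which roles may detokenize which field types.
DETOKENIZE_POLICY = {
    "billing": {"card_number"},
    "support": set(),
    "compliance": {"card_number", "ssn"},
}


class GuardedVault:
    """Toy vault that enforces a role policy and logs every detokenize call."""

    def __init__(self) -> None:
        self._store: dict[str, tuple[str, str]] = {}  # token -> (field_type, value)
        self.audit_log: list[dict] = []

    def put(self, token: str, field_type: str, value: str) -> None:
        self._store[token] = (field_type, value)

    def detokenize(self, token: str, role: str, user: str) -> str:
        field_type, value = self._store[token]
        allowed = field_type in DETOKENIZE_POLICY.get(role, set())
        # Log every attempt, allowed or denied, so audits can reconstruct access.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "token": token,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not detokenize {field_type}")
        return value


vault = GuardedVault()
vault.put("tok_123", "card_number", "4111111111111111")

print(vault.detokenize("tok_123", role="billing", user="alice"))
try:
    vault.detokenize("tok_123", role="support", user="bob")
except PermissionError as err:
    print("denied:", err)
print(len(vault.audit_log), "audit entries recorded")
```

Logging before the permission check fails means denied attempts are captured too, which is usually what an auditor wants to see.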

Support for Multi-Cloud and Hybrid Deployments

Your tokenization setup should work wherever your data lives: AWS, Azure, Google Cloud, or on-premises. The best tools offer flexible deployment options so you can stay protected across environments without making major changes.

Compliance Mapping and Built-in Audit Trails

Don’t just protect the data, prove it. The right data protection platform will support PCI DSS, HIPAA, or GDPR with built-in logs, policy controls, and audit reports that reduce compliance risks.

Start Securing Your Data with Webisoft!

Get custom tokenization solutions built for performance, compliance, and scale!

Vaulted vs Vaultless Tokenization: Which Should You Choose?

When it comes to data tokenization, you’ll hear about two main approaches: vaulted and vaultless. Each protects sensitive data differently, and choosing the right one depends on your performance needs, architecture, and compliance goals. Below is a quick comparison to guide you.

| Feature | Vaulted Tokenization | Vaultless Tokenization |
| --- | --- | --- |
| Where data is stored | In a secure central vault | No central vault; uses algorithms |
| Speed | Slightly slower due to lookup | Faster; no database queries |
| Complexity | Easier to manage and audit | More complex to implement securely |
| Compliance readiness | Strong fit for PCI DSS, HIPAA | Also compliant, depends on implementation |
| Scalability | May face bottlenecks under high load | Scales better in high-performance systems |
| Best for | Legacy systems, centralized architectures | Cloud-native, real-time, distributed systems |
| Detokenization control | Centralized and tightly controlled | Decentralized, requires strong access rules |
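To show what "no central vault; uses algorithms" can look like, here is a toy vaultless sketch: a keyed Feistel network over decimal digits, so the token is computed from the input and a secret key instead of being looked up. This is an assumption-laden illustration, not any vendor's algorithm and not a vetted cipher; production systems typically rely on a standardized format-preserving encryption mode such as NIST FF1. The function names, round count, and key are hypothetical.

```python
import hashlib
import hmac

ROUNDS = 8  # arbitrary choice for this sketch


def _round_value(key: bytes, round_no: int, half: int, width: int) -> int:
    # Keyed pseudo-random function: HMAC-SHA256 reduced to `width` decimal digits.
    msg = f"{round_no}:{half:0{width}d}".encode()
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % (10 ** width)


def _split(digits: str) -> tuple[int, int, int]:
    if not (digits.isascii() and digits.isdigit()) or len(digits) % 2:
        raise ValueError("this sketch expects an even-length string of digits")
    width = len(digits) // 2
    return int(digits[:width]), int(digits[width:]), width


def tokenize(key: bytes, digits: str) -> str:
    left, right, width = _split(digits)
    modulus = 10 ** width
    for i in range(ROUNDS):
        # Feistel round: swap halves and mix the old left half with a keyed value.
        left, right = right, (left + _round_value(key, i, right, width)) % modulus
    return f"{left:0{width}d}{right:0{width}d}"


def detokenize(key: bytes, token: str) -> str:
    left, right, width = _split(token)
    modulus = 10 ** width
    for i in reversed(range(ROUNDS)):
        # Undo the rounds in reverse order using the same keyed values.
        left, right = (right - _round_value(key, i, left, width)) % modulus, left
    return f"{left:0{width}d}{right:0{width}d}"


key = b"demo-key-do-not-use-in-production"
card = "4111111111111111"
token = tokenize(key, card)
print(token)                   # 16 digits, same format as the input
print(detokenize(key, token))  # the original card number
```

No lookup table is needed, which is where the speed and scalability advantages in the table come from. The tradeoff is that anyone holding the key can detokenize, so key management and access rules carry the security burden.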

Data Tokenization vs Data Masking: What’s the Difference?

Data tokenization and data masking both aim to protect sensitive data, but they solve different problems in different ways. Data tokenization replaces real data with random-looking tokens that hold no value on their own. The original data is stored separately and can only be retrieved by authorized systems through a secure process called detokenization. This makes it perfect for live systems where you still need to use the data without exposing it.

- Reversible when needed

- Ideal for real-time apps, APIs, and payment systems

- Helps meet compliance standards like PCI DSS and HIPAA

- Keeps production workflows running without compromising privacy

Data masking, on the other hand, alters data permanently. The masked version looks real but the original value is gone and cannot be restored. It’s best used in environments where the real data isn’t required, like training, testing, or demos.

- Irreversible once masked

- Great for development, QA, and user training

- Prevents accidental data exposure in non-secure environments

- Doesn’t require managing token vaults or detokenization logic

In short, use data masking when no one needs to see the real data again. Choose data tokenization when you need security plus the ability to bring back original values in a controlled, compliant way. Understanding the difference helps you protect data the right way in the right context.
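The difference in reversibility can be seen in a few lines of Python. The `mask_card` helper below is a hypothetical example of static masking; it is only meant to show why a masked value cannot be restored, whereas a token can be mapped back through its vault or key.

```python
def mask_card(number: str, visible: int = 4) -> str:
    """Irreversibly replace all but the last `visible` characters with '*'."""
    hidden = max(len(number) - visible, 0)
    return "*" * hidden + number[hidden:]


first = mask_card("4111111111111111")
second = mask_card("5555444433331111")

print(first)            # ************1111
print(first == second)  # True: two different cards produce the same masked value
```

Because distinct inputs collapse to the same output, nothing in the masked string identifies which card it came from. That makes masking safe for test and training data, and unsuitable when a downstream system needs the real value back.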

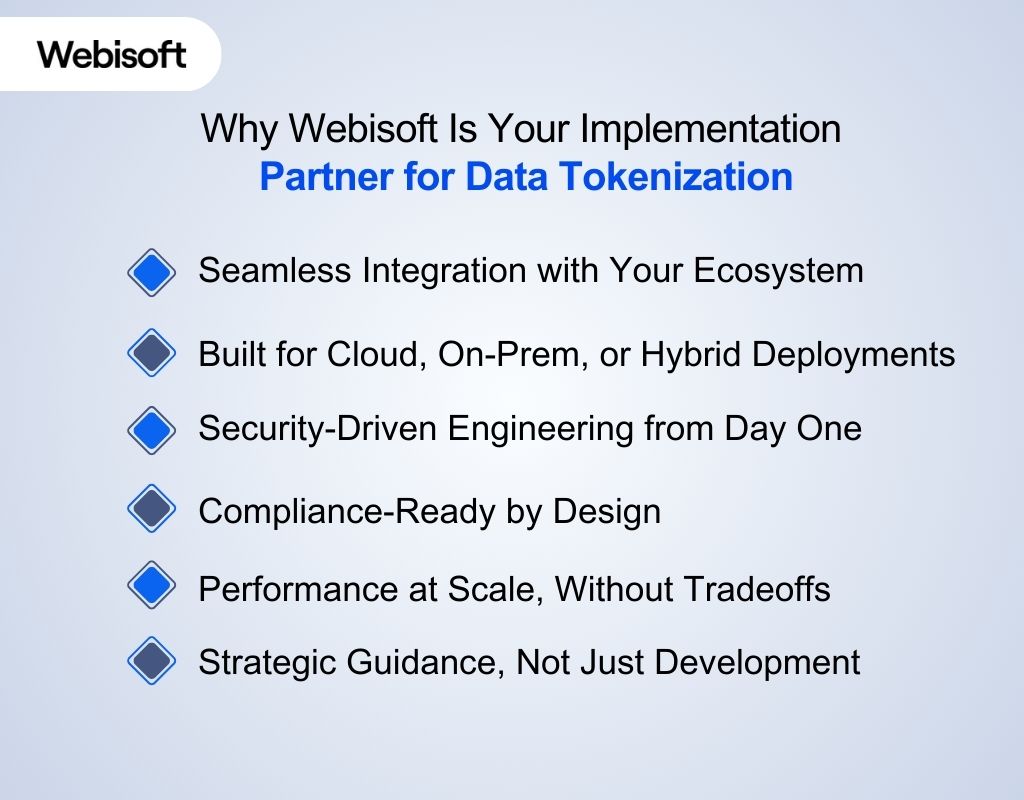

Why Webisoft Is Your Implementation Partner for Data Tokenization

Finding the right tokenization tools is only the first step. Webisoft goes beyond development: we’re a data security partner focused on building solutions that scale securely with your business. Here’s how:

Seamless Integration with Your Ecosystem

Webisoft doesn’t apply one-size-fits-all templates. We study your existing systems, architecture, and workflows, whether you’re cloud-native, legacy-based, or hybrid. Then we implement data tokenization software that fits naturally without forcing you to rebuild your tech stack. You get:

- Tokenization that integrates across APIs, databases, CRMs, and ERP systems

- Custom-built logic for detokenization and access control

- Deployment support for both vaulted and vaultless models

Built for Cloud, On-Prem, or Hybrid Deployments

Data lives in many places, such as cloud platforms, local servers, and edge environments. Webisoft designs tokenization solutions that work with your infrastructure instead of fighting it. Our expertise ensures consistent security across environments. We support:

- AWS, Azure, GCP, or multi-cloud architectures

- Kubernetes and container-based deployments

- Private and regulated infrastructure setups

Security-Driven Engineering from Day One

Webisoft is built on blockchain development services, smart contracts, and secure systems, which means securing data with tokenization is second nature to us. From encryption layers to RBAC policies, we harden every part of your tokenized data flow. What we focus on:

- Zero-trust architecture

- Strong authentication and audit logs

- Minimal attack surface for detokenization endpoints

Compliance-Ready by Design

If you’re dealing with PCI DSS, HIPAA, or GDPR, tokenization is one of the most practical ways to reduce your exposure. Webisoft aligns each implementation with audit requirements so you’re not scrambling during inspections. We help you:

- Reduce audit scope through vault isolation

- Map token flows to regulatory standards

- Generate automated logs for reporting and compliance checks

Performance at Scale, Without Tradeoffs

Some tokenization setups slow everything down. Ours don’t. We implement real-time, low-latency tokenization that supports live transactions, API workflows, analytics, and web and mobile apps without noticeable delay, especially with vaultless configurations. Performance includes:

- Real-time tokenization for web and mobile

- Token compatibility with analytics platforms

- Simplified detokenization under strict access rules

Strategic Guidance, Not Just Development

Webisoft doesn’t stop at code. We advise you on vendor selection, compliance strategies, and token architecture that evolves with your business. Whether you’re early-stage or scaling fast, we help you stay one step ahead. You get:

- Vendor-agnostic guidance on the best data tokenization tools

- Architecture consulting based on your stack and goals

- Ongoing support and iteration as needs change

Conclusion: What’s Your Next Step?

You’ve now seen the 7 best data tokenization tools, how they compare, and when to use each. You also have a clear framework for evaluating them. That puts you ahead of most teams still stuck in research mode. If this guide helped clarify your options, save it for later or pass it to your security or engineering lead. It can make decision-making faster when it counts. When you’re ready, book a free 30-minute consultation with Webisoft to review your current data tokenization setup or plan a new one from scratch.

Frequently Asked Questions

Can I use tokenization in the cloud?

Yes, many tokenization tools are cloud-native or offer cloud deployment. They integrate with cloud databases, APIs, and services, helping businesses secure sensitive data while maintaining performance, scalability, and compliance in cloud environments.

What’s a detokenization risk?

Detokenization risk arises when access controls or audit mechanisms fail. If unauthorized users can reverse tokens back to real data, it defeats the purpose of tokenization. Strong access policies and logging are essential to reduce this risk.

Can tokenization slow down apps?

Tokenization can introduce latency, especially with vaulted models that require database lookups. However, modern vaultless tools and optimized APIs minimize impact, allowing real-time performance with minimal disruption to application speed or user experience.