AI Development Life Cycle: Key Stages and Best Practices

- November 9, 2025

Artificial intelligence is a driving force behind business innovation in 2025. Yet successful AI systems don’t emerge overnight; they follow a structured journey known as the AI development life cycle.

Understanding the AI development life cycle is essential for both developers and decision-makers. In this article, we’ll explore the complete AI development life cycle. We will also discuss best practices, tools, and governance principles that enable organizations to build intelligent systems.

Contents

- 1 What Is the AI Development Life Cycle?

- 2 The Eight Core Phases of the AI Development Life Cycle

- 3 Best Practices for Effective AI Lifecycle Management

- 4 Advance your AI initiatives with Webisoft’s proven development expertise!

- 5 Ethical and Governance Considerations Across the AI Life Cycle

- 6 Tools for the Stages of AI Development Life Cycle

- 7 Applications of the AI Development Life Cycle

- 8 Challenges in the AI Development Life Cycle

- 9 How Webisoft Helps Businesses Navigate the AI Development Life Cycle

- 10 Advance your AI initiatives with Webisoft’s proven development expertise!

- 11 Conclusion

- 12 FAQs

- 12.1 1. How does the AI development life cycle differ from traditional software development?

- 12.2 2. What are the key metrics used to evaluate AI model performance during the lifecycle?

- 12.3 3. How often should AI models be retrained in a production environment?

- 12.4 4. What security considerations are important in the AI development life cycle?

- 12.5 5. How can businesses accelerate their AI development life cycle?

What Is the AI Development Life Cycle?

The AI development life cycle is a structured, end-to-end process that guides the creation, deployment, and long-term management of artificial intelligence systems. It provides a systematic framework covering every stage of building reliable, scalable, and effective AI solutions.

Unlike the traditional software development life cycle, which centers on writing and testing code, the AI lifecycle stages are heavily data-driven and iterative. Each phase depends on continuous feedback and refinement, as the performance of AI models evolves with new data and environmental changes.

This makes the process more experimental and cyclical, focusing not only on building the model but also on ensuring its adaptability and fairness.

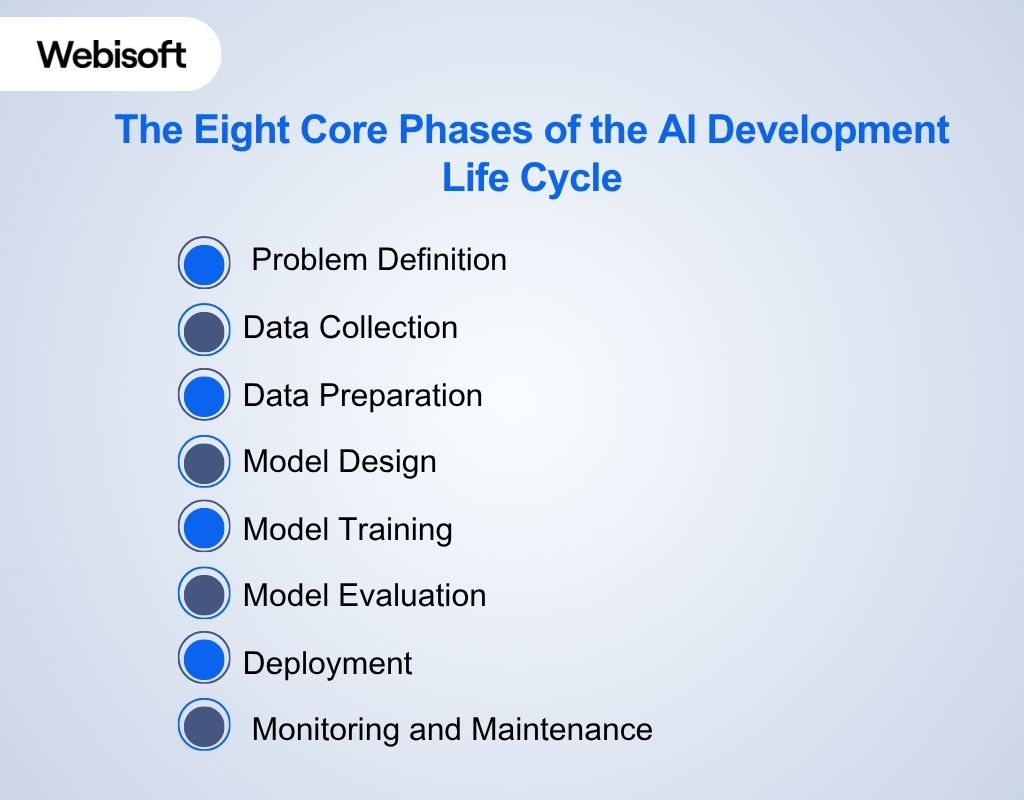

The Eight Core Phases of the AI Development Life Cycle

The AI development life cycle follows a structured sequence of interconnected stages. These AI lifecycle stages are iterative, meaning feedback from each phase influences the next, ensuring accuracy, scalability, and ethical compliance across the system’s lifespan.

1. Problem Definition

The lifecycle begins with a clear understanding of the problem the AI solution must solve. Teams define objectives, measurable success criteria, and feasibility parameters.

Stakeholder analysis helps align the project scope with business goals, ensuring every requirement is captured. Ethical assessment and regulatory compliance reviews are also critical at this stage to mitigate bias, ensure fairness, and protect user rights.

Example: Establish KPIs such as reducing prediction errors by 10% or improving customer response time while maintaining compliance with AI governance standards.
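A KPI like the 10% error reduction above is easiest to track when it is written down as an explicit check. The following is a minimal sketch, assuming the KPI is defined as a relative reduction against a baseline error rate; the function names and the sample numbers are illustrative, not part of any standard.

```python
def relative_error_reduction(baseline_error: float, new_error: float) -> float:
    """Fraction by which the error dropped relative to the baseline."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error


def meets_kpi(baseline_error: float, new_error: float,
              target_reduction: float = 0.10) -> bool:
    """True when the new model reaches the agreed relative reduction."""
    return relative_error_reduction(baseline_error, new_error) >= target_reduction


# Hypothetical numbers: a baseline error rate of 20% and a candidate at 17%.
print(meets_kpi(0.20, 0.17))
```

Whether the target is relative or absolute (percentage points) is worth agreeing on with stakeholders up front, since the two readings can lead to different conclusions.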

2. Data Collection

Data serves as the backbone of any AI initiative. At this stage, teams identify, source, and acquire relevant data from various channels, including internal databases, APIs, IoT devices, and public repositories. Quality and representativeness are crucial; incomplete or biased datasets can derail performance.

Data privacy, ownership, and governance protocols must also be established in accordance with regulations like GDPR.

Example: Use APIs and third-party integrations to collect structured and unstructured data, ensuring each record meets relevance and security benchmarks.

3. Data Preparation

Raw data often contains noise, inconsistencies, and missing values. Data preparation transforms this raw material into a clean, structured dataset ready for model training. The process includes cleaning (removing duplicates or errors), integration (combining sources), and transformation (normalizing or encoding values).

Accurate labeling and version control ensure traceability and reproducibility. Teams may also develop automated data pipelines for efficiency.

Example: Implement ETL (Extract, Transform, Load) workflows to maintain versioned datasets optimized for real-time machine learning operations.
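The cleaning and transformation steps described above can be sketched in a few lines. This is a simplified illustration using only the Python standard library; the `age` and `plan` fields are hypothetical, median imputation and min-max scaling are just one reasonable choice among several, and the integration step (combining sources) is left out for brevity.

```python
from statistics import median


def prepare(records):
    """Deduplicate, impute, normalize, and one-hot encode a list of dict rows."""
    # Cleaning: drop exact duplicates while keeping first-seen order.
    seen, unique = set(), []
    for row in records:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))

    # Cleaning: fill missing ages with the median of the known ages.
    fill = median(r["age"] for r in unique if r["age"] is not None)
    for row in unique:
        if row["age"] is None:
            row["age"] = fill

    # Transformation: min-max normalize age into the range [0, 1].
    lo = min(r["age"] for r in unique)
    hi = max(r["age"] for r in unique)
    span = (hi - lo) or 1.0

    # Transformation: one-hot encode the categorical "plan" field.
    plans = sorted({r["plan"] for r in unique})
    prepared = []
    for row in unique:
        out = {"age_scaled": (row["age"] - lo) / span}
        for plan in plans:
            out[f"plan_{plan}"] = 1 if row["plan"] == plan else 0
        prepared.append(out)
    return prepared


raw = [
    {"age": 20, "plan": "basic"},
    {"age": 20, "plan": "basic"},   # exact duplicate, removed
    {"age": None, "plan": "pro"},   # missing value, imputed
    {"age": 40, "plan": "pro"},
]
for row in prepare(raw):
    print(row)
```

In practice teams usually reach for Pandas or Spark for this work; the point here is only to make each step concrete.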

4. Model Design

In this phase, data scientists select algorithms and design architectures suited to the defined problem and prepared dataset. The type of model (supervised, unsupervised, or reinforcement learning) depends on the nature of the data and the objectives.

Frameworks like TensorFlow, PyTorch, or Scikit-learn are often used to experiment with different configurations. Security and interpretability are built into the architecture to protect against adversarial threats and ensure explainability.

Example: Design a neural network architecture with multiple layers to enhance image recognition accuracy while integrating explainable AI modules for transparency.

5. Model Training

Model training is where AI truly begins to learn. The model processes training data, identifying patterns and relationships to make predictions. Optimization algorithms such as stochastic gradient descent adjust the model’s parameters, while engineers choose hyperparameters such as batch size and learning rate to keep training efficient and stable.

Hyperparameter tuning and checkpointing help the model improve iteratively while reducing the risk of overfitting or underfitting.

Example: Use cross-validation techniques and scheduled learning rates to balance accuracy and performance across large datasets.
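To make the roles of learning rate and batch size concrete, here is a minimal sketch of mini-batch stochastic gradient descent fitting a one-variable linear model with mean squared error. It is a toy written in plain Python, not how a framework like PyTorch or TensorFlow implements training; the default hyperparameter values are illustrative assumptions.

```python
import random


def train_linear_sgd(xs, ys, learning_rate=0.2, batch_size=5,
                     epochs=500, seed=0):
    """Fit y ~ w * x + b with mini-batch SGD on mean squared error."""
    rng = random.Random(seed)          # fixed seed for reproducible shuffles
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)           # new batch composition each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2 * error * xs[i]
                grad_b += 2 * error
            # Step against the average gradient of the batch.
            w -= learning_rate * grad_w / len(batch)
            b -= learning_rate * grad_b / len(batch)
    return w, b


# Noise-free synthetic data generated from y = 3x + 1.
xs = [i / 10 for i in range(10)]
ys = [3 * x + 1 for x in xs]
w, b = train_linear_sgd(xs, ys)
print(round(w, 3), round(b, 3))
```

A learning rate that is too large makes the updates diverge, while one that is too small slows convergence; this trade-off is the reason learning-rate schedules are commonly used on larger problems.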

6. Model Evaluation

After training, the model’s performance is validated using unseen data. Evaluation focuses on metrics such as accuracy, precision, recall, and F1-score to gauge predictive capability.

Cross-validation helps check that the model generalizes beyond the training set, while fairness and bias assessments maintain ethical integrity. Explainable AI (XAI) methods like SHAP or LIME help interpret decisions and verify transparency.

Example: Compare model predictions against a validation dataset to identify areas of bias or misclassification, ensuring the system meets business KPIs.
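The four metrics named in this phase all derive from the same confusion-matrix counts. A minimal sketch for binary classification, assuming labels are encoded as 0 and 1 and that undefined ratios are reported as 0.0 (a convention chosen here for simplicity):

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
print(classification_metrics(y_true, y_pred))
```

In this sample there are three true positives, three true negatives, one false positive, and one false negative, so all four metrics come out to 0.75. On imbalanced data the metrics typically diverge, which is why accuracy alone is rarely sufficient.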

7. Deployment

Successful models are deployed into production environments where they start processing real-world data. Deployment targets vary (cloud, on-premises, or edge) depending on performance and compliance needs.

Containerization technologies such as Docker or orchestration platforms like Kubernetes help standardize environments, manage scalability, and support rollback versions.

Example: Deploy a trained model via REST APIs, enabling it to deliver live predictions within enterprise systems with automatic scaling under heavy traffic.
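As a rough illustration of serving predictions over REST, the sketch below exposes a tiny linear model through Python's built-in `http.server`. The `/predict` path, the `x1` and `x2` feature names, and the weights are all hypothetical; a production service would more likely use a dedicated web framework behind a container and load balancer.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical weights standing in for a trained model artifact.
WEIGHTS = {"bias": 0.5, "x1": 2.0, "x2": -1.0}


def predict(features):
    """Score one record; raises KeyError/ValueError on bad input."""
    return WEIGHTS["bias"] + sum(
        WEIGHTS[name] * float(features[name]) for name in ("x1", "x2")
    )


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            body = json.dumps({"prediction": predict(payload)}).encode()
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "expected JSON with numeric x1 and x2")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host="127.0.0.1", port=8000):
    """Start the server and block until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `serve()` starts the endpoint locally; a client would then POST a JSON body such as `{"x1": 1, "x2": 2}` to `/predict`. Keeping `predict` separate from the HTTP handler makes the model logic testable without a running server.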

8. Monitoring and Maintenance

Once deployed, ongoing monitoring safeguards the model’s relevance and accuracy. Teams track key metrics, detect data drift, and retrain models when performance declines.
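One common way to quantify data drift for a numeric feature is the Population Stability Index (PSI), which compares the binned distribution of live data against a reference sample. The sketch below is a simplified version, assuming equal-width bins derived from the reference data and a small floor to avoid taking the logarithm of zero; it is not the implementation used by any particular monitoring tool.

```python
import math


def population_stability_index(reference, current, bins=10):
    """PSI between two numeric samples, binned on the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range fall into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        floor = 1e-6  # avoids log(0) for empty bins
        return [max(c / len(values), floor) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


reference = list(range(100))
shifted = [v + 50 for v in reference]
print(population_stability_index(reference, reference))
print(population_stability_index(reference, shifted))
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but these cut-offs are conventions rather than guarantees, and a retraining trigger should be tuned to the specific model.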

MLOps practices integrate tools like MLflow (experiment tracking and model versioning) or Evidently AI (drift and performance monitoring) to automate alerts and retraining pipelines.

Example: Establish an automated feedback system that retrains models quarterly based on new data inputs, maintaining stable accuracy across business operations.

At Webisoft, we specialize in building and deploying enterprise-grade AI solutions that seamlessly move from concept to production. Our end-to-end expertise ensures every phase from data to deployment aligns with your business goals and delivers measurable impact.

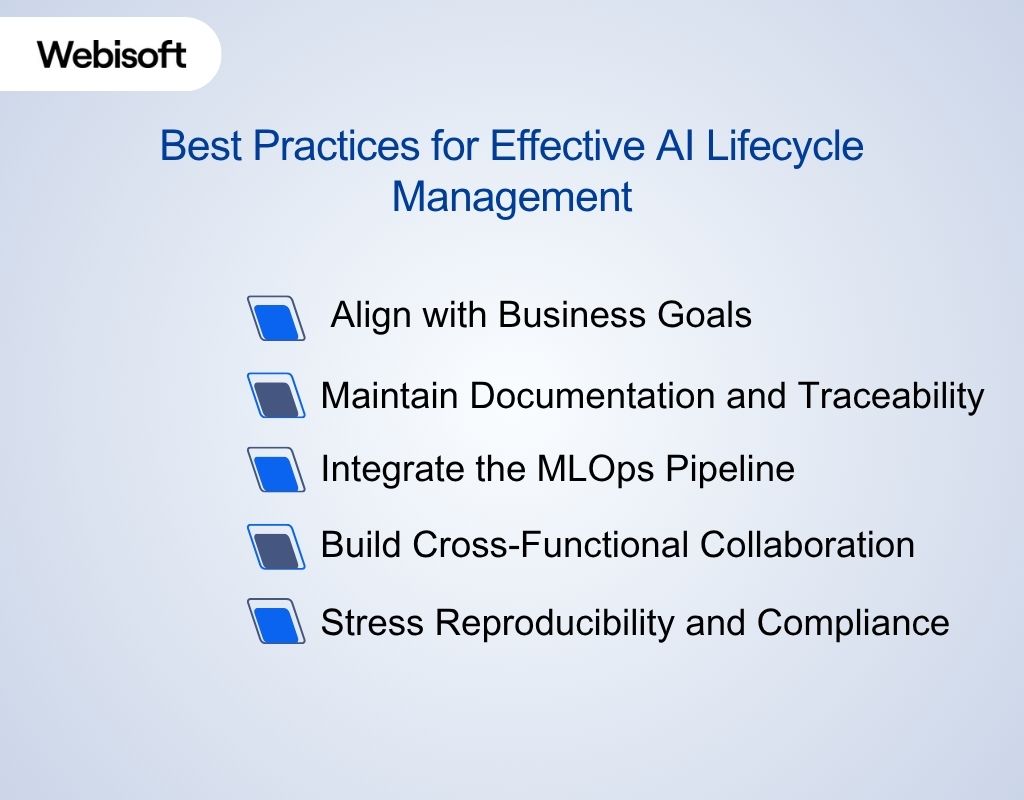

Best Practices for Effective AI Lifecycle Management

Successful AI model lifecycle management requires disciplined execution, collaboration across teams, and continuous alignment with business objectives. Building an AI system depends on adopting practices that balance experimentation with structure and ethics with innovation.

1. Align with Business Goals

AI projects should begin with a clear understanding of business objectives and evolve through continuous validation. Iterative evaluation, where results are regularly reviewed against predefined success metrics, ensures that every model iteration delivers measurable value.

2. Maintain Documentation and Traceability

Documenting every stage of the AI lifecycle builds accountability and makes future modifications easier. Versioning datasets, recording model configurations, and maintaining audit trails for experiments help ensure transparency. Proper documentation also simplifies handoffs between teams, supports compliance requirements, and reinforces reproducibility in large-scale development environments.
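One lightweight way to make experiments traceable is to record a content hash of the dataset and the model configuration alongside the results. The following is a minimal sketch, assuming the data and configuration are JSON-serializable; the record layout and field names are illustrative, and dedicated tools such as MLflow cover this ground more thoroughly.

```python
import hashlib
import json


def fingerprint(obj) -> str:
    """Stable SHA-256 hash of any JSON-serializable object."""
    # Sorted keys and fixed separators make the hash independent of key order.
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_record(dataset_rows, model_config, metrics):
    """Bundle what is needed to tell later which data and settings produced a result."""
    return {
        "dataset_sha256": fingerprint(dataset_rows),
        "config_sha256": fingerprint(model_config),
        "config": model_config,
        "metrics": metrics,
    }


# Hypothetical experiment: two rows of data and a small configuration.
record = audit_record(
    dataset_rows=[{"id": 1, "label": 0}, {"id": 2, "label": 1}],
    model_config={"learning_rate": 0.1, "epochs": 20},
    metrics={"f1": 0.82},
)
print(json.dumps(record, indent=2))
```

If two runs report different metrics but share the same dataset and configuration hashes, the difference has to come from somewhere else, such as code changes or unseeded randomness, which narrows down the investigation.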

3. Integrate the MLOps Pipeline

A well-structured MLOps pipeline enables seamless automation across the AI lifecycle. It connects model training, testing, deployment, and monitoring through continuous integration and delivery (CI/CD). Automated pipelines reduce human intervention, minimize deployment errors, and enable rapid iteration. Integrating MLOps ensures that updates are consistently tested, approved, and deployed, maintaining operational reliability while accelerating innovation.

4. Build Cross-Functional Collaboration

AI development succeeds when engineers, data scientists, and product teams work as a unified force. Data scientists build and validate models, engineers manage infrastructure and performance, and product teams translate technical potential into business outcomes. Regular collaboration sessions bridge communication gaps, encourage shared ownership, and ensure AI systems remain both technically sound and strategically relevant.

5. Stress Reproducibility and Compliance

Reproducibility strengthens the integrity of AI models. Teams should ensure that experiments can be replicated using consistent datasets, code, and configurations. Compliance with data privacy laws and standards such as GDPR or ISO/IEC 42001 further safeguards the system.

Advance your AI initiatives with Webisoft’s proven development expertise!

Book a free consultation. Learn, design, and deploy AI solutions that deliver measurable results.

Ethical and Governance Considerations Across the AI Life Cycle

To ensure reliability and trust, AI solutions must adhere to ethical standards and governance frameworks:

1. Promoting Fairness and Eliminating Bias

AI models are only as fair as the data they learn from. Addressing bias begins at data collection and continues through training and validation. Teams must evaluate datasets for representation gaps and ensure outcomes do not disadvantage any group.
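A simple first check on whether outcomes disadvantage a group is to compare the rate of positive predictions across groups, often called demographic parity. The sketch below assumes binary predictions and a single group attribute per record; it is only one of several fairness definitions, and the group labels are hypothetical.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += 1 if pred == 1 else 0
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
print(selection_rates(groups, predictions))
print(demographic_parity_difference(groups, predictions))
```

Here group `a` is selected 75% of the time and group `b` 25%, a gap of 0.5. A large gap does not prove unfair treatment on its own, but it is a signal to examine the data and features more closely.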

2. Ensuring Transparency and Explainability

Transparency enables stakeholders to understand how an AI model makes decisions. Explainability tools such as SHAP and LIME attribute individual predictions to the input features that drove them, often with visual summaries. These tools help data scientists and compliance teams trace model behavior, detect irregularities, and explain decisions to end users.

3. Strengthening AI Governance Frameworks

Effective governance ensures ethical standards are followed at every lifecycle stage. Organizations should define roles, responsibilities, and documentation protocols to track model lineage and decision processes. Governance frameworks also enable better version control and auditability, supporting long-term operational reliability.

4. Upholding Regulatory and Legal Compliance

Compliance with global and regional laws is essential for responsible AI operations. Frameworks such as the GDPR, EU AI Act, and ISO/IEC 42001 promote data protection, risk mitigation, and transparency. Integrating compliance into early project phases helps avoid violations and ensures readiness for evolving legal standards.

5. Embedding Accountability and Human Oversight

Even with automation, humans remain central to ethical AI. Teams should define accountability for model behavior and maintain oversight for critical decisions. Human-in-the-loop mechanisms allow intervention in high-stakes cases, ensuring that ethical judgment complements algorithmic accuracy.
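A human-in-the-loop mechanism is often implemented as a confidence gate: the model acts on its own only when it is sufficiently sure, and everything else goes to a reviewer. A minimal sketch, assuming the model outputs a probability for the positive class; the 0.9 threshold and the decision labels are illustrative assumptions.

```python
def route_decision(probability: float, threshold: float = 0.9) -> dict:
    """Auto-decide confident cases and escalate uncertain ones to a person."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # Confidence is the distance from the undecided midpoint, on either side.
    confidence = max(probability, 1.0 - probability)
    if confidence >= threshold:
        decision = "approve" if probability >= 0.5 else "reject"
        return {"decision": decision, "handled_by": "model"}
    return {"decision": "pending", "handled_by": "human_review"}


for p in (0.97, 0.03, 0.60):
    print(p, route_decision(p))
```

For high-stakes domains the threshold can be raised, or certain case types can be routed to review regardless of confidence, trading automation volume for oversight.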

Tools for the Stages of AI Development Life Cycle

The success of any AI project depends greatly on selecting the right tools to support each phase of the AI development process. Below are essential AI lifecycle tools that help teams manage complexity and maintain high performance throughout the entire pipeline.

1. Data and Preparation Tools

AI systems rely heavily on data quality and consistency. Tools like Pandas, NumPy, and Apache Spark enable efficient data manipulation, cleaning, and transformation at scale.

- Pandas and NumPy provide powerful data structures for handling arrays and data frames within Python workflows.

- Apache Spark supports distributed computing, allowing teams to process massive datasets quickly and reliably.

These tools ensure the data entering the model is clean, standardized, and ready for analysis, forming the backbone of any successful AI workflow.

2. Modeling Frameworks

Modeling frameworks provide the foundation for training and optimizing intelligent systems.

- TensorFlow and PyTorch are leading open-source frameworks offering GPU acceleration and deep learning capabilities for complex architectures.

- Scikit-learn remains a top choice for traditional machine learning tasks, providing robust algorithms for classification, regression, and clustering.

These tools support flexible experimentation, scalability, and rapid prototyping across both academic and enterprise AI applications.

3. Deployment Platforms

Once a model is trained, efficient deployment ensures seamless integration into production systems.

- Docker and Kubernetes containerize and orchestrate applications, allowing for consistent, reproducible environments and easy scalability.

- Cloud-based platforms like AWS SageMaker and Azure Machine Learning automate model deployment, monitoring, and retraining pipelines.

These technologies make it easier to manage large-scale deployments while reducing downtime and manual intervention.

4. Monitoring and Maintenance Tools

Continuous monitoring ensures that AI models remain accurate and compliant after deployment.

- MLflow helps track experiments, record parameters, and manage model versions throughout the AI development lifecycle, ensuring reproducibility and organized model management.

- Evidently AI and WhyLabs detect data drift, evaluate model accuracy, and trigger retraining workflows when performance drops.

Integrating these tools into post-deployment operations provides transparency and reliability, ensuring that AI systems evolve with changing data conditions.

Applications of the AI Development Life Cycle

The AI lifecycle framework supports a range of practical applications across industries, enabling organizations to optimize workflows, predict outcomes, and make informed decisions. By tailoring each phase of the machine learning lifecycle to specific challenges, businesses can drive innovation, efficiency, and measurable impact across key sectors.

1. Healthcare: Predictive Analytics and Patient Diagnostics

In healthcare, AI models analyze large volumes of patient data to identify trends, detect anomalies, and predict potential risks before they occur. Predictive analytics helps hospitals anticipate admissions and resource needs, while diagnostic AI tools interpret X-rays and MRIs with remarkable precision. Systems from Google DeepMind and IBM Watson Health (since divested and rebranded as Merative) have been applied to early detection of conditions such as cancer or kidney failure, with the aim of improving patient outcomes and treatment planning.

2. Finance: Fraud Detection and Risk Management

Financial institutions use AI to monitor transactions in real time and identify irregular activity that signals fraud. Machine learning algorithms assess credit risk, evaluate customer profiles, and improve investment decisions. For instance, PayPal leverages AI to analyze millions of transactions daily, minimizing false positives and preventing financial losses. Automated AI-driven scoring systems also expand credit access to underbanked populations through alternative data analysis.

3. Retail: Personalization and Demand Forecasting

Retailers deploy AI to create seamless, customized shopping experiences. Recommendation engines suggest products based on user behavior, while demand forecasting models optimize inventory and pricing strategies. Amazon and Netflix exemplify this approach, using behavioral data to personalize content and product offerings. Predictive AI ensures retailers maintain balanced stock levels and anticipate customer needs effectively.

4. Manufacturing: Predictive Maintenance and Automation

AI-powered monitoring systems analyze sensor data to detect early signs of equipment wear, preventing costly downtime. In quality control, computer vision algorithms identify defects with accuracy that surpasses manual inspection. Companies like General Electric and Siemens utilize predictive maintenance models to extend machinery lifespan and reduce operational costs.

5. Government: Policy Optimization and Citizen Services

Public sector organizations use AI to improve efficiency and accessibility. Data-driven models analyze socioeconomic indicators to inform better policy decisions, while AI chatbots enhance citizen engagement. For example, the UK government employs virtual assistants to support visa processing and public service queries. These applications increase transparency, reduce administrative delays, and ensure citizens receive timely, personalized support.

Challenges in the AI Development Life Cycle

Each phase of the AI development life cycle presents unique obstacles related to data, technology, ethics, and stakeholder alignment. Addressing these challenges early ensures that AI models remain accurate, fair, and adaptable in real-world conditions.

1. Data Quality and Availability Issues

The foundation of any AI system lies in its data, yet this is where many projects face their first hurdle. Inconsistent, incomplete, or biased data can degrade model accuracy and reliability. Access to domain-specific datasets, particularly in sensitive industries like healthcare or finance, is often limited by cost, privacy laws, or security concerns. Furthermore, labeling data remains a time-consuming and resource-intensive process.

2. Computational Resource Constraints

Advanced AI models, especially those involving deep learning, require vast computing power and energy. High-performance GPUs and TPUs are expensive to operate, and training large models can be time-consuming and carbon-intensive. Teams now also have to factor political variables into their infrastructure planning.

3. Managing Stakeholder Expectations

AI projects often involve diverse stakeholders with varying levels of technical understanding. Unrealistic expectations, unclear objectives, or resistance to adopting new workflows can hinder progress. Teams may also encounter misalignment between business priorities and technical feasibility, leading to inefficiencies or project delays.

4. Addressing Bias and Ensuring Fairness

Bias in data or algorithm design can lead to skewed outcomes, reducing the credibility and fairness of AI solutions. Historical inequalities in datasets may perpetuate discrimination, while subtle biases can emerge unintentionally through feature selection or model weighting.

5. Continuous Maintenance and Upgrades

Once deployed, AI models must adapt to evolving data patterns and user behavior. Model drift, performance degradation, and outdated datasets can reduce reliability over time. Without proactive monitoring and retraining, even the best AI systems risk becoming obsolete.

How Webisoft Helps Businesses Navigate the AI Development Life Cycle

Webisoft stands as a trusted technology consulting and development firm specializing in AI, blockchain, and other emerging technologies. With a strong focus on delivering scalable and secure solutions, Webisoft empowers organizations to harness the power of intelligent automation and data-driven decision-making within a structured AI development process.

Applying the AI Development Life Cycle Across Projects

Every Webisoft project follows a clear and systematic AI development life cycle. From ideation and data collection to model design, training, and deployment, each phase is meticulously executed to ensure technical precision and business alignment. Webisoft also emphasizes post-deployment maintenance, ensuring models remain adaptive, reliable, and compliant as market conditions evolve.

Technical Expertise in Model Training and MLOps Pipelines

Webisoft’s team of AI engineers and data scientists brings deep expertise in advanced modeling, algorithm optimization, and MLOps pipeline automation. This includes setting up continuous integration and delivery systems for machine learning, version control for datasets, and automated retraining mechanisms that keep models performing at their peak efficiency.

Bridging Business Objectives and Technical Execution

One of Webisoft’s defining strengths lies in its collaborative approach. By engaging both technical and business stakeholders early in the project, Webisoft ensures AI solutions are strategically aligned with core business goals. This approach accelerates adoption, minimizes risks, and maximizes the measurable impact of each AI initiative.

Delivering Scalable, Ethical, and Secure AI Systems

Organizations that partner with Webisoft gain a reliable roadmap for scaling AI responsibly. Using a proven AI lifecycle framework, Webisoft helps businesses design, deploy, and maintain intelligent systems that operate efficiently, ethically, and securely, turning AI innovation into long-term business advantage.

Conclusion

The AI development life cycle remains the foundation of successful AI implementation, giving structure to every stage from problem definition to monitoring. Its iterative nature allows organizations to refine models continuously, adapt to new data, and maintain performance over time. As industries evolve, adopting a structured and well-managed lifecycle becomes essential for sustainable growth. To accelerate your AI journey with expert guidance, consider partnering with Webisoft for tailored solutions that scale securely and deliver measurable business value.

FAQs

1. How does the AI development life cycle differ from traditional software development?

The AI development life cycle differs from traditional software development because it is data-driven, iterative, and model-centric rather than code-centric. Traditional development focuses on writing and testing static code, whereas AI systems require continuous model training, evaluation, and retraining as new data emerges.

2. What are the key metrics used to evaluate AI model performance during the lifecycle?

Evaluating model performance relies on metrics such as accuracy, precision, recall, F1 score, and AUC-ROC for classification tasks. For regression, developers use mean absolute error (MAE) or root mean squared error (RMSE). In business contexts, Webisoft also measures real-world impact metrics, such as process efficiency, customer satisfaction, and ROI, to ensure the AI solution delivers tangible value.
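For the regression metrics mentioned above, MAE averages the absolute errors while RMSE takes the square root of the averaged squared errors, which penalizes large misses more heavily. A minimal sketch in plain Python, with made-up sample values:

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


y_true = [3, 5, 7, 9]
y_pred = [2, 5, 9, 9]
print(mae(y_true, y_pred), rmse(y_true, y_pred))
```

With errors of 1, 0, 2, and 0, MAE is 0.75 and RMSE is about 1.118; the gap between the two widens as individual errors become more uneven.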

3. How often should AI models be retrained in a production environment?

The frequency of retraining depends on the rate of data drift and changes in business conditions. For example, financial or e-commerce models may require weekly or monthly updates, while industrial systems might need retraining quarterly.

4. What security considerations are important in the AI development life cycle?

Security plays a critical role at every lifecycle stage. Developers must protect data integrity, prevent adversarial attacks, and ensure compliance with frameworks such as GDPR or ISO/IEC 27001.

5. How can businesses accelerate their AI development life cycle?

Organizations can speed up their AI development by adopting end-to-end AI platforms that combine data management, model experimentation, deployment, and monitoring under one ecosystem. Leveraging cloud-based MLOps tools, pre-trained models, and agile collaboration frameworks helps teams shorten time-to-value.